Image credit: “The Order: 1886” courtesy of Ready at Dawn

SIGGRAPH 2016 Games Committee Member Juan Miguel de Joya shares his perspective on the hottest trends in games and the future of game development. To catch up with him and fellow developers during SIGGRAPH 2016, register for the conference.

A good friend once told me that art informs technology, and technology informs art. I don’t recall who he attributed the saying to, but it is a through-line that I believe strongly reflects our industry’s passion to continually redefine what computer graphics and interactive technology can accomplish. This, to me, is what makes being part of SIGGRAPH exciting. The mediums through which we express our ideas may be vastly different (AR/VR production differs greatly from film production, let alone from the work being done in the Maker community), but we are all, in our own ways, artists in the service of communicating meaningful immersive experiences.

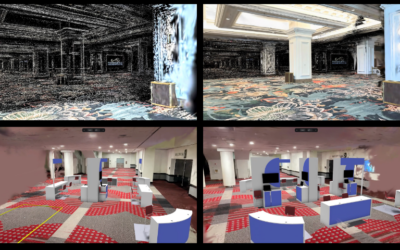

Over the years, games in particular have exploded into the public consciousness, to the point where their impact reaches into our everyday lives. The cumulative effort of the game developer community to create impactful interactive experiences has also led to industry-level considerations as to how we can iterate on both the technology we create and the art that motivates its creation. While gaming technology continues to wrestle with contemporary performance and hardware limitations, we are at a point where real-time preview and playback for compute-intensive tasks is a feasible route of development and production. Two examples of this in games are the incorporation of a system for camera-based shot lighting in Ready at Dawn’s The Order: 1886, which allowed their technical artists to immediately compose shot-specific light groups from hundreds of dynamic lights in the scene, and the implementation of a real-time volumetric shader in Guerrilla Games’ Horizon Zero Dawn that placed an emphasis on directability of rendered cloud shapes and formations without sacrificing quality or draw time. On the other end, Walt Disney Animation Studios presented a seamless and non-obtrusive method at SIGGRAPH 2015 for real-time playback on production rigs inside a host application such as Maya. Beyond these, there is exciting work being done as the games community continues to build and share new perspectives and techniques for real-time rendering, physically-based shading, and animation.

Taking the games explosion to the next level, broader accessibility to cross-platform game engines and related production tools has empowered game developers to create a diversity of content, a paradigm that grows increasingly relevant at a time when production budgets and risks are soaring in parallel with an increased desire to craft high-quality mobile and mixed-reality experiences. Acclaimed independent games such as Campo Santo’s Firewatch and The Molasses Flood’s The Flame in the Flood were developed using Unity 5 and Unreal Engine 4, respectively; on the mobile front, Epic Games recently showcased ProtoStar at Samsung Galaxy Unpacked 2016, promising a high-end, console-quality experience built with Unreal Engine 4 and the Vulkan API with the Samsung Galaxy S7 in mind. Mixed-reality software development and production companies such as Oculus’ Story Studio and Magic Leap are either building a pipeline around similar tools or taking them into consideration for their content, and their work will be heavily featured as part of the consumer-directed content. This is particularly relevant as 2016 will be the year that virtual reality headsets, such as the Oculus Rift, HTC’s Vive, and Sony’s Morpheus, become readily available as consumer products. While the VR medium needs to gain traction in its first year in order to meet the scale of the expectations levied on it, the promise of virtual reality and the experiences that have been released have contributed immensely to the continuing discourse on how we can utilize immersion and interaction as storytelling tools for film and gaming experiences. As it stands, ILMxLab recently announced the “Star Wars: Trials on Tatooine” VR experiment using Vive, CCP Games’ EVE: Valkyrie is poised to launch with Rift and Morpheus, and Story Studio is hard at work on their VR film, “Dear Angelica.”

Just as important as the technical development and implications of gaming technology is the growth of creative output coming from game developers. We are seeing both AAA game studios and independent developers experiment with different storytelling and gameplay mechanics, which in turn diversifies the types of games that are available to consumers. The critical success and acceptance that independent developers such as The Fullbright Company, Lucas Pope, and Galactic Café have earned with Gone Home, Papers, Please, and The Stanley Parable, respectively, have in turn paved the way for games such as 11 bit studios’ This War of Mine, Sam Barlow’s Her Story, and Thekla Inc.’s The Witness, all of which explore how gameplay mechanics can serve the non-traditional narratives that the games seek to tell. On the other end, Crystal Dynamics’ Rise of the Tomb Raider focused on crafting its main protagonist into a nuanced character, CD PROJEKT RED’s The Witcher 3: Wild Hunt strove to add emotional weight to the player choices being made, and Eidos Montreal’s Deus Ex: Mankind Divided seeks to engage players with a multilayered discussion on societal issues and the protracted consequences of human nature through the lens of science fiction.

Putting all this together, it is easy to conclude that these are exciting times to be part of the games community, be it as a developer or enthusiast. At the same time, there is much work that needs to be done to continue building on the momentum that the community has generated through its efforts. For me, the excitement of working tangentially with studios and being involved with SIGGRAPH provides the opportunity to see how games continue to become a crucial thread in the narrative of computer graphics and interactive technology, and what will be possible as a result. Most of the examples that I’ve brought up here are viewed through the lens of production specifically, but gaming technology has been and continues to be applicable as an engineering and simulation tool in a variety of fields.

As a member of the SIGGRAPH 2016 Games Committee, I look forward to what’s to come in games and will continue to watch trends evolve as developers shape the future of how we can use new technologies to develop great experiences. I hope you’ll join us.

Juan Miguel de Joya is a Technical Director Resident at Pixar Animation Studios with a degree in Computer Science from the University of California, Berkeley. Prior to Pixar, he interned at Walt Disney Animation Studios and was an undergraduate research assistant at the Visual Computing Lab under Professor James F. O’Brien. He enjoys learning about both the technical and aesthetic applications of computer graphics and interactive techniques, and is constantly seeking ways to improve his knowledge base. Juan is a longtime ACM SIGGRAPH volunteer and currently serves on the Games Committee for SIGGRAPH 2016.