Images and graphs provided by Jon Peddie

Over 25 years, the versatile processor has touched almost every application.

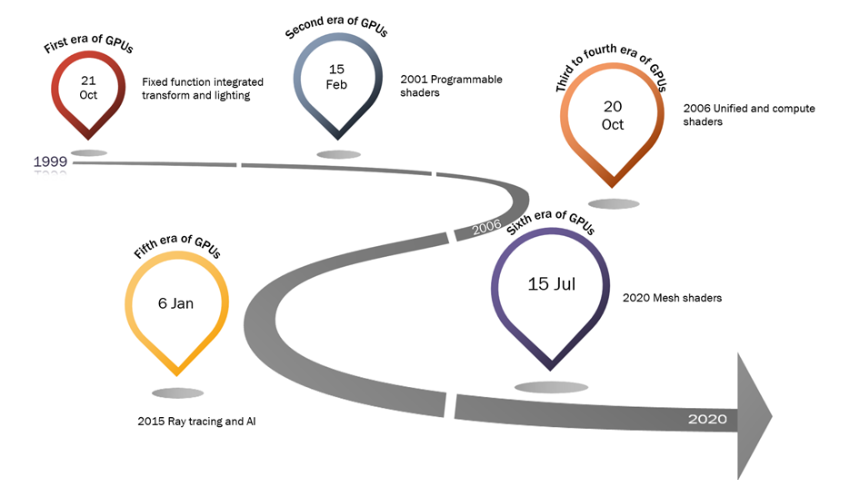

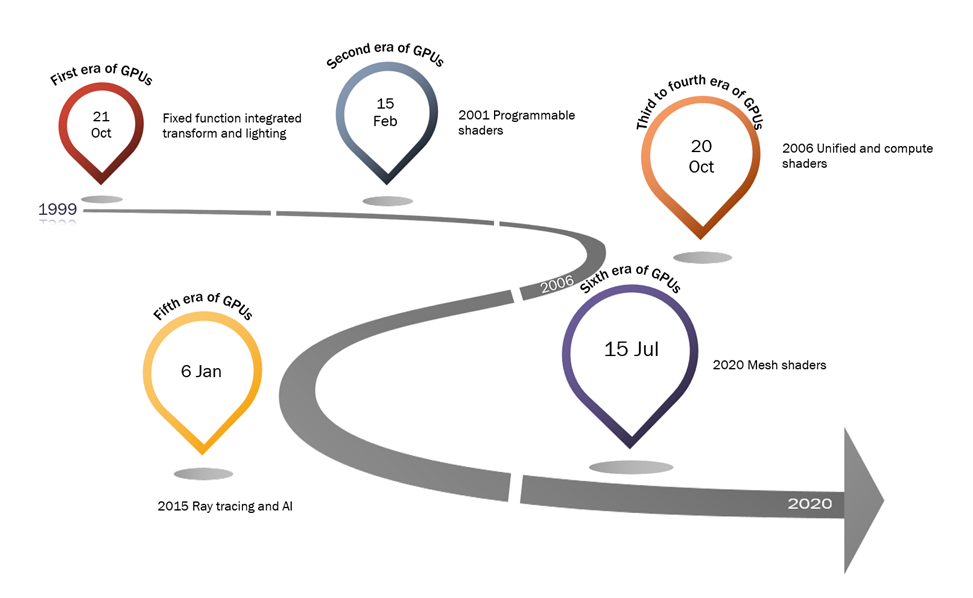

As the GPU emerged, the number of processors within it expanded rapidly, surpassing the pace of Moore’s Law. This growth led to significant increases in compute density, enabling GPUs to handle not only computer graphics but also a broad range of computational tasks. The GPU has progressed through six distinct eras, with the latest advancements pushing it toward a more universal compute engine — an evolution that continues to unfold. Each era built upon the understanding and limitations of the previous one, progressing in a stepwise manner. These transitions were marked by the introduction of increasingly sophisticated APIs, primarily driven by Microsoft and closely followed by Khronos.

The Major GPU Eras

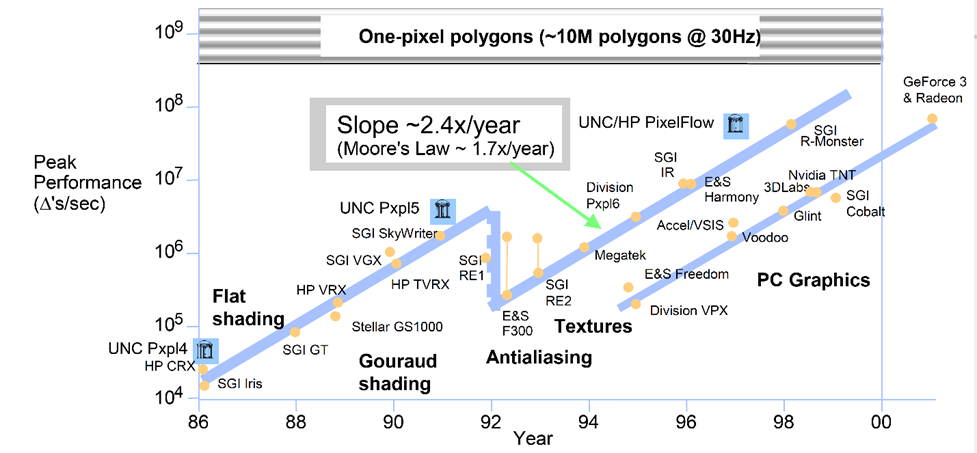

Between the 1970s and 1999, graphics hardware was developed with specific applications in mind, including CAD, simulation, special effects, and gaming. The demand for greater processing power has always driven advancements in graphics technology. During this period, performance improvements outpaced transistor scaling, increasing at nearly 2.5 times per year, well above the approximately 1.8 times annual growth predicted by transistor doubling. Figure 1 illustrates this trajectory, as documented by Professor John Poulton of the University of North Carolina (UNC).[i]

Figure 1. Performance of graphics hardware leading up to GPUs. Graphics hardware had been progressing at a rate faster than Moore’s Law. (Data courtesy of John Poulton, UNC Chapel Hill)

Using triangles per second as a metric, graphics hardware performance increased beyond what Moore’s Law predicted. This growth occurred because of the parallel nature of computer graphics computations, which hardware designers implemented effectively. PC graphics capabilities had advanced enough by 2001 that they made expensive specialized graphics systems unnecessary.
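To see how quickly the gap between those two annual rates compounds, here is a minimal sketch in Python. It uses only the 2.5x and 1.8x figures quoted above; the function names and the choice of time spans are illustrative assumptions, not part of Poulton's data.

```python
# Annual growth factors quoted in the text above.
GRAPHICS_RATE = 2.5    # graphics performance, times per year
TRANSISTOR_RATE = 1.8  # growth predicted by transistor doubling, times per year


def cumulative_growth(rate_per_year: float, years: int) -> float:
    """Total growth factor after compounding an annual rate for `years` years."""
    return rate_per_year ** years


def relative_advantage(years: int) -> float:
    """How far graphics performance pulls ahead of transistor scaling."""
    return cumulative_growth(GRAPHICS_RATE, years) / cumulative_growth(
        TRANSISTOR_RATE, years
    )


if __name__ == "__main__":
    # Hypothetical time spans chosen only for illustration.
    for years in (1, 5, 10):
        print(
            f"{years:2d} years: graphics x{cumulative_growth(GRAPHICS_RATE, years):,.0f}, "
            f"transistors x{cumulative_growth(TRANSISTOR_RATE, years):,.0f}, "
            f"advantage x{relative_advantage(years):.1f}"
        )
```

Under these assumed rates, a decade of compounding leaves graphics performance roughly 27 times ahead of what transistor scaling alone would deliver, which is consistent with the point that parallelism, not just process technology, drove the gains.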

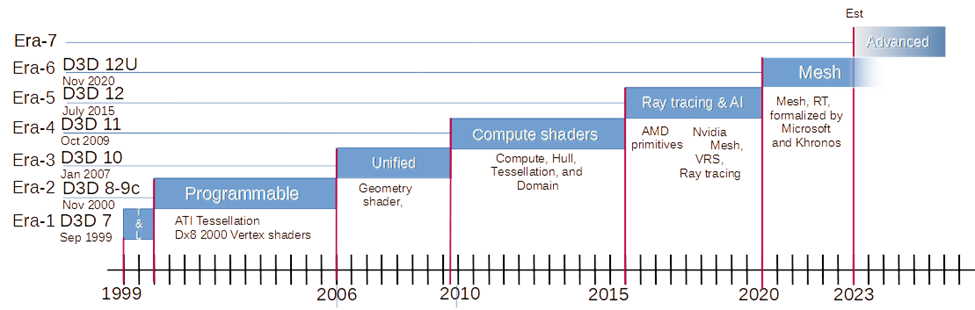

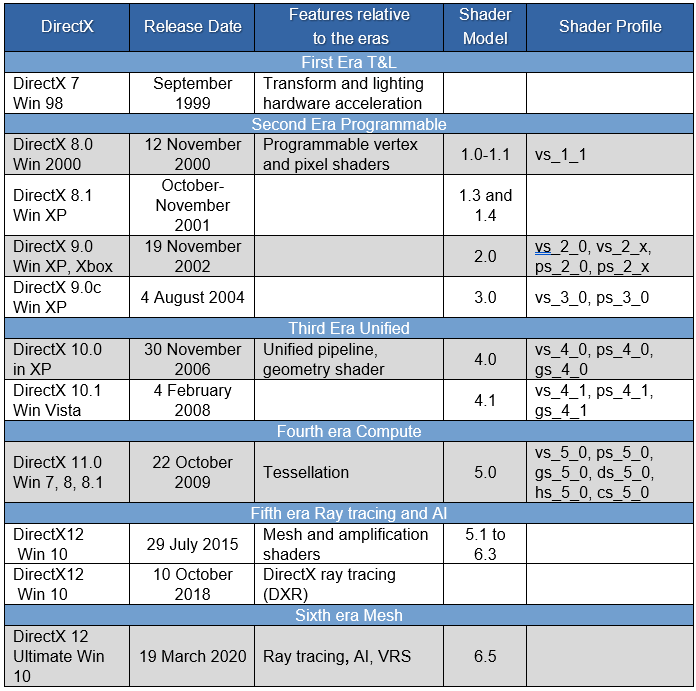

The development of GPUs corresponds with the introduction and evolution of DirectX, Microsoft’s graphics API that gained widespread adoption. DirectX became the framework that defined different GPU time periods because it standardized how hardware features were made available to software developers.

Hardware manufacturers initially created their own interfaces before Microsoft standardized the graphics landscape. These proprietary solutions gave developers access to specific features of graphics devices, yet resulted in fragmentation known as the “API wars.” This period created instability that posed risks to industry growth. Understanding GPU development requires examining how DirectX evolved and established new standards for graphics processing through its various iterations. The first era of the GPU began with the release of Microsoft’s DirectX 7.0, as shown in Figure 2.

Figure 2. The six eras of GPU evolution and development.

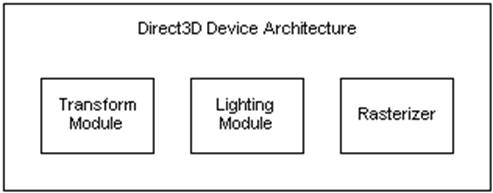

DirectX 7.0. Microsoft released DirectX 7.0 in September 1999, marking a significant advancement in graphics processing capabilities. This version introduced hardware acceleration for transform and lighting operations alongside the ability to allocate vertex buffers directly in hardware memory. The implementation of hardware vertex buffers represented a notable improvement in Direct3D, the 3D graphics component of DirectX, giving it advantages over the competing OpenGL standard. DirectX 7.0 further enhanced rendering capabilities by incorporating multitexture hardware resources into its framework. During this period, DirectX 7.0 exemplified the pinnacle of fixed-function multitexture pipeline capabilities, establishing new performance benchmarks before the industry’s eventual shift toward programmable shaders (Figure 3).

Figure 3. DirectX basic pipeline.

The most exciting features of DirectX 7 included hardware acceleration of transformation and lighting, cube environment mapping, vertex blending, and particle systems.

DirectX 8.0. Microsoft launched DirectX 8.0 in November 2000, introducing programmability through vertex and pixel shaders. This innovation freed developers from manually tracking hardware states, marking a fundamental shift in graphics programming.

DirectX 8.1 arrived on 25 October 2001, targeting Windows 2000, XP, and derivative systems. This was followed by DirectX 8.1a in 2002, which updated the Direct3D component. June 2002 saw the release of DirectX 8.1b, addressing DirectShow issues on Windows 2000 systems. Microsoft also developed DirectX 8.2 specifically for DirectPlay functionality.

December 2002 brought Direct3D 9, introducing the High-Level Shading Language (HLSL) while supporting floating-point texture formats, multiple render targets, multiple-element textures, vertex shader texture lookups, and advanced stencil buffer techniques.

DirectX 9.0c emerged in August 2004, featuring Shader Model 3.0. This update expanded instruction capabilities for both vertex and pixel shaders, enabling developers to create substantially more sophisticated shader effects.

Direct3D 10 arrived in November 2006, revolutionizing the traditional one-vertex-in/one-vertex-out model by allowing geometry generation entirely within GPU hardware. This version incorporated Shader Model 4.0, which extended vertex and pixel shader functionality while introducing the new geometry shader capability.

Microsoft released DirectX 11 in October 2009 with Shader Model 5.0, which further expanded vertex, pixel, and geometry shader capabilities. This version introduced tessellation and compute shader profiles. Tessellation technology enabled runtime detail enhancement of wireframes using GPU rather than CPU resources.

Tessellation effectively transforms low-detail subdivision surfaces into higher-detail primitives directly on the GPU, breaking up high-order surfaces into structures optimized for rendering. This hardware implementation generated substantial visual detail, including displacement mapping support, without increasing model sizes or compromising refresh rates.
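The idea of turning a coarse mesh into finer primitives can be sketched on the CPU in a few lines of Python. This is only an illustration under stated assumptions: it uses uniform midpoint subdivision, which is a simplification and not the hull, tessellator, and domain stages that Direct3D 11 hardware actually runs, and every name in it (`subdivide`, `tessellate`, the sample triangle) is hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    """Point halfway between two vertices."""
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0, (a[2] + b[2]) / 2.0)


def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(mesh: List[Triangle], levels: int) -> List[Triangle]:
    """Apply midpoint subdivision `levels` times to every triangle in the mesh."""
    for _ in range(levels):
        mesh = [small for tri in mesh for small in subdivide(tri)]
    return mesh


if __name__ == "__main__":
    # Hypothetical single-triangle "low-detail" model.
    base = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
    for level in range(4):
        print(f"level {level}: {len(tessellate(base, level))} triangles")
```

Each level multiplies the triangle count by four while the stored model stays at its original size, which is the property the paragraph above describes. In a real pipeline, the new vertices would then be offset, for example by a displacement map, to add actual surface detail.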

July 2015 saw the introduction of DirectX 12, providing lower-level hardware abstraction compared to previous versions. This approach improved multithreaded scaling and significantly reduced CPU utilization for games. Like the Vulkan API, DirectX 12 shared architectural elements with AMD’s Mantle API developed for consoles.

DirectX 12 implemented Shader Model 5.1, adding volume-tiled resources, shader-specified stencils, improved collision and culling through conservative rasterization, rasterizer-ordered views, standard swizzles, default texture mapping, compressed resources, additional blend modes, and efficient order-independent transparency.

In November 2020, Microsoft introduced DirectX 12 Ultimate, bringing numerous new compute techniques and creating alignment between PC and game console development, substantially enhancing development efficiency. DirectX 12 Ultimate featured innovations such as Mesh Shaders and Sampler Feedback, with mesh shading particularly transforming image detail and quality without performance penalties.

A summary of the generations of DirectX is shown in Table 1.

vs = vertex shader, gs = geometry shader, hs = hull shader, ps = pixel shader

Table 1. The evolution of the APIs and OS relative to the eras of GPUs.

DirectX 12 extended the API to incorporate ray tracing, and DirectX 12 Ultimate introduced mesh shaders and established the GPU platform as a computing device for graphics.

DirectX releases were the milestones that marked the eras of the GPU. Mesh shaders may not be the end of the trail. New designs based on RISC-V are being explored, which would create a new class of GPUs using a MIMD architecture rather than the current and historical SIMD. Had Intel’s Larrabee gone to market, it would have been the first MIMD GPU, but that’s a story for another time.

If you’re interested in GPUs and their history, take a look at the three-volume set “The History of the GPU”.

[i] Lin, M. C., and Manocha, D. Interactive Geometric and Scientific Computations Using Graphics Hardware. SIGGRAPH ’03 Tutorial Course #11 (August 2003), http://gamma.cs.unc.edu/SIG03_COURSE/SIGCOURSE-11.pdf