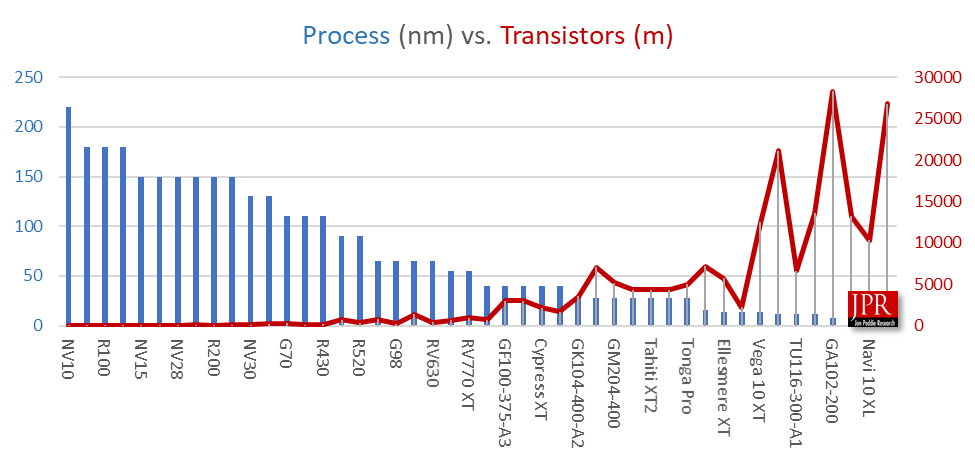

Image via Jon Peddie Research

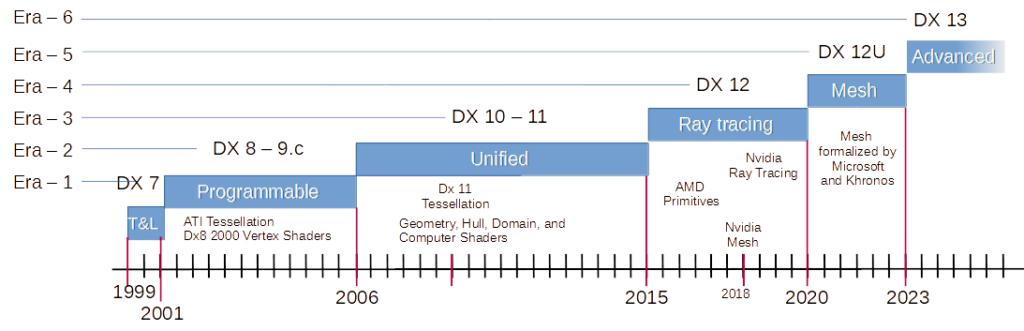

The GPU is in its fifth or sixth era, depending on how you evaluate the developments since DirectX 7 in late 1999.

The first era of the GPU began with the release of Microsoft’s DirectX 7.0, as shown in Figure 1.

During the following two-decade-plus period, GPUs have grown from four pixel shaders (called pipes in those days) to 10,496 shaders in Nvidia’s Ampere GA102, used on the RTX 3090 add-in board (AIB). During that same time, the process size went from 220 nm to 8 nm for Nvidia and 7 nm for AMD. The physical size of the chips went from 139 mm² for the Nvidia NV10 to a gigantic 628.4 mm² for the GA102.
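To put those numbers side by side, here is a rough back-of-the-envelope calculation in Python. It is only a sketch: it assumes the four-pipe and 220 nm figures describe the same NV10 chip whose die size is quoted, and it treats an old pixel pipe and a modern shader as comparable units, which is a simplification. The dictionary and variable names are made up for illustration.

```python
# Figures quoted in the paragraph above.
# Assumption: the four pipes and the 220 nm node both belong to the NV10.
nv10 = {"shaders": 4, "die_mm2": 139.0, "node_nm": 220}
ga102 = {"shaders": 10_496, "die_mm2": 628.4, "node_nm": 8}


def growth(old, new, key):
    """Return how many times larger new[key] is than old[key]."""
    return new[key] / old[key]


shader_growth = growth(nv10, ga102, "shaders")      # shader count ratio
die_growth = growth(nv10, ga102, "die_mm2")         # die area ratio
density_growth = shader_growth / die_growth         # shaders per mm², ratio
node_shrink = nv10["node_nm"] / ga102["node_nm"]    # feature size ratio

print(f"Shader count grew {shader_growth:,.0f}x")
print(f"Die area grew {die_growth:.1f}x")
print(f"Shaders per mm² grew roughly {density_growth:,.0f}x")
print(f"Process node shrank {node_shrink:.1f}x")
```

The point of the comparison is that die area grew only a few times over, so almost all of the growth in shader count came from packing more processors into each square millimeter.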

As the process node shrank, following the prediction of Moore’s Law, and die sizes increased, so did the number of processors (shaders) in a GPU, as illustrated in Figure 2. As the number of processors increased, additional types were added. The basic SIMD processor/shader is a 32-bit FP ALU. As GPUs were applied to compute acceleration, a specialized matrix processor, known as a tensor processor, was added in 2017 to accelerate AI training. Then, in 2018, a third novel processor, an instance processor, was added for ray-tracing acceleration. Also lurking in GPUs were DSPs for audio processing and fixed-function codecs for video processing.
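The difference between a basic SIMD shader and a matrix processor can be sketched conceptually in plain Python. This is an illustration only, not how any vendor’s hardware or API works: the function names are hypothetical, and the multiply-accumulate form and the small tile size are assumptions chosen to keep the example short.

```python
def simd_fma(a, b, c):
    """SIMD-style work: the same multiply-add is applied to every lane,
    and each lane is independent of the others."""
    return [x * y + z for x, y, z in zip(a, b, c)]


def matrix_fma(A, B, C):
    """Matrix-style work: D = A x B + C on a small tile.
    Each output element needs a whole row of A and a whole column of B."""
    rows, inner, cols = len(A), len(B), len(B[0])
    return [
        [sum(A[i][k] * B[k][j] for k in range(inner)) + C[i][j]
         for j in range(cols)]
        for i in range(rows)
    ]


# Four independent lanes, one multiply-add each.
lanes = simd_fma([1.0, 2.0, 3.0, 4.0], [2.0, 2.0, 2.0, 2.0], [0.5, 0.5, 0.5, 0.5])

# One 2x2 tile: every output element combines several inputs.
tile = matrix_fma([[1, 2], [3, 4]], [[5, 6], [7, 8]], [[1, 1], [1, 1]])

print(lanes)
print(tile)
```

AI training is dominated by operations of the second kind, which is why a dedicated unit for them pays off even though the general-purpose shaders could compute the same result one multiply-add at a time.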

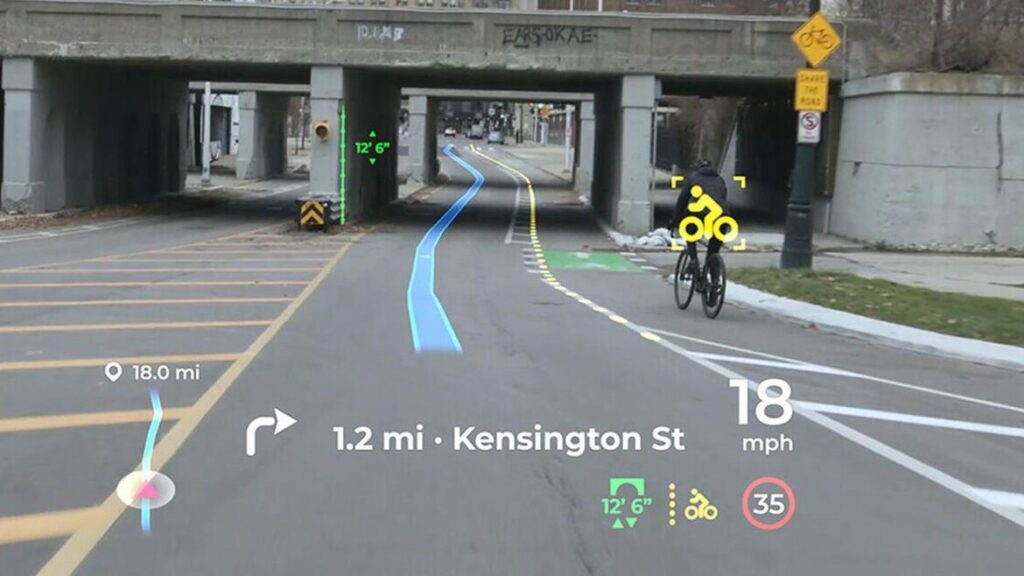

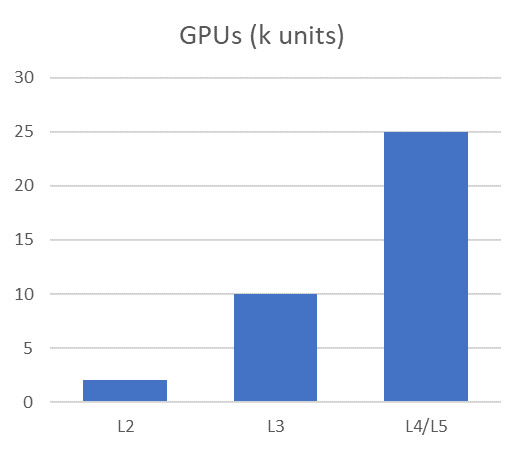

In addition to AI training, which gets a lot of headlines and attention from investors looking for the next “golden egg laying” goose (which they now call unicorns), GPUs are being used to take you for a ride. In the process, we are learning how insidiously complicated autonomous driving is and developing a new respect for what a fantastic job we mere humans do when we drive. At CES, Qualcomm, Intel, and Nvidia showed some fantastic examples of what has been developed so far, and Nvidia offered the chart shown in Figure 3.

The number of decisions that need to be made in split seconds while navigating a car or truck through traffic, in all kinds of weather and at any time of day, is astonishing to contemplate. The industry established six levels of driving automation, numbered 0 through 5:

- Level 0: No Autonomy.

- Level 1: Driver Assistance.

- Level 2: Partial Automation.

- Level 3: Conditional Automation.

- Level 4: High Automation.

- Level 5: Full Automation.

We are seeing vehicles today at Level 2, and very soon, maybe this year, Level 3 vehicles will appear. Almost all of them will be powered not only by GPUs in the vehicle but also by GPUs in the data center, because autonomous vehicles will rely heavily on cloud communications.

So, whereas GPUs were developed for games, and gaming is still the largest consumer of GPUs, the powerful little massively parallel processors have found their way into the glass cockpits of all modern airplanes; into smartphones and smart TVs; into VR, AR, XR, and your-momma’s-R, which includes HUDs (see Figure 4); into flight simulators and giant signs in major metropolitan areas around the world; and even into watches, cash registers, and the dashboard of your car.

This year we will see a new member join the discrete GPU club when Intel starts shipping its Arc GPUs. They are already being installed in some laptops, and later this year they are expected to show up on AIBs from the usual suspects.

In China, there are three and possibly four startups making GPUs for the data center and AI training. And in Greece, Think Silicon makes the tiniest GPUs for smart watches and IoT devices. GPU IP designs come out of Japan and the UK, as well as the U.S. and Canada. GPUs are ubiquitous and come from suppliers worldwide. That keeps the products competitive, the producers innovative, and costs down, with new applications appearing every year. GPUs power everything and are everywhere.

Dr. Jon Peddie is a recognized pioneer in the graphics industry, president of Jon Peddie Research, and has been named one of the world’s most influential analysts. Peddie has been an ACM Distinguished Speaker and is currently an IEEE Distinguished Speaker. He lectures at numerous conferences and universities on graphics technology and emerging trends in digital media technology. A former president of SIGGRAPH Pioneers, he serves on the advisory boards of several conferences, organizations, and companies, and contributes articles to numerous publications. In 2015, he was given the Lifetime Achievement award from the CAAD society. Peddie has published hundreds of papers to date and has authored or contributed to eleven books, including “Augmented Reality, where we all will live.” His latest book is “Ray Tracing, a Tool for all.”

Jon Peddie Research (JPR) is a technically oriented marketing, research, and management consulting firm. Based in Tiburon, California, JPR provides specialized services to companies in high-tech fields including graphics hardware development, multimedia for professional applications and consumer electronics, entertainment technology, high-end computing, and Internet access product development.