“Baba Yaga” © 2020 Baobab Studios, Inc.

In August, the team at Baobab Studios presented some of the ideas that went into its latest interactive VR narrative experiment, “Baba Yaga”, including its in-house Storyteller toolset and the film’s novel use of hand tracking technology. Read on to learn more about the studio’s latest project, plus some hints at when it might be available in a headset near you.

SIGGRAPH: Your latest project “Baba Yaga”, which premiered at the Venice Film Festival and was teased in a SIGGRAPH 2020 Talk in August, once again gives the viewer a role. How is this role different from the roles viewers have played in your past work (“Invasion!”, “Bonfire”, etc.)?

Team: We started Baobab Studios five years ago to transform animated storytelling in VR by placing you, the viewer, directly inside the story. It has been an incredible journey for our team, and SIGGRAPH has played a meaningful role in it! With each project, we have experimented in giving you a role to play and empowering you to participate in the narrative. Because everything in VR is rendered in real time, the characters can acknowledge your presence and respond to you based on your actions. We can empower you to develop meaningful relationships with the characters where you actually matter in the story. “Baba Yaga” marks our studio’s sixth interactive narrative, and it represents the next step forward in terms of interactivity, VR storytelling, and making your role in the story consequential.

The role you play is the hero character Sasha. Along with your sister Magda, you venture into an enchanted forest and stop at nothing to cure your sick mother, The Chief of the village, even if that means facing the witch Baba Yaga. As Sasha, your actions have meaningful consequences, and your choices have a profound impact on the ending of the narrative. In a traditional film, we watch the hero character make difficult choices under pressure that reveal something about themselves. In “Baba Yaga” you yourself must make the difficult decision that will have consequences for your village and the forest.

“Baba Yaga” is the first VR project where we have tackled human characters. Through our past experiences creating CG films, we know that audiences are quite adept at detecting when human CG or AI characters don’t look right, since we carry with us a lifetime of experience interacting with humans. Bringing the characters of “Baba Yaga” to life posed numerous challenges for our engineering and animation teams, who needed to capture incredible nuance and emotional detail while rendering in real time at 72 fps on a VR headset such as the Oculus Quest. Needless to say, “Baba Yaga” has the most complex characters we have created and animated in VR to date.

SIGGRAPH: Let’s talk story. Can you share a bit about the process of finalizing the story? How did it evolve throughout production? How did the all-female voice cast factor in?

Team: We were inspired by the large number of Slavic fairy tales written about the witch Baba Yaga. Our version reinvents these classic Eastern European tales and incorporates themes of self-sacrifice and environmental conservation into an interactive VR experience that runs over 30 minutes.

Our early story development was similar to that of a feature film. Writer and Director Eric Darnell iterated with our team on the story structure, character development, and overall themes by writing numerous drafts of the script. Unlike a typical film project, though, the script accounted for various user actions, and how the characters should react to them. We then began boarding the story, sometimes with VR storyboards that we viewed in-headset and other times with traditional storyboards cut together in reels.

“Baba Yaga” was our first project that adopted a game-development production process. We first created a three-minute vertical slice (the first half of the rainforest sequence), which captured the final look and feel of the project along with the core interactivity mechanics. As we entered full production, we set up several intermediate milestones that we would playtest with external users and creative advisors. While we have always conducted user tests on our previous projects, this became much more challenging during COVID-19, when we could no longer bring users into our office to observe them.

We have been incredibly honored to have such a powerful all-female voice cast for “Baba Yaga”. Kate Winslet plays the title character, Daisy Ridley plays your sister Magda, Jennifer Hudson portrays the voices of the Forest, and Glenn Close is your mother, The Chief of the village. Jennifer Hudson is also an executive producer on the project. Each of these amazing actresses added incredible depth and gravitas in bringing the characters and the story to life.

SIGGRAPH: Piggybacking off of the last question, the “Baba Yaga” story contains heavy environmental themes. What message about the environment do you hope viewers walk away with?

Team: “Baba Yaga” is a story about courage, in this case the courage to undertake the herculean journey of saving your mother and village. Unlike the villagers and Baba Yaga, who are locked in an escalating struggle, you have the chance to choose a different path, one where the forest and humanity can live in balance. We see this story of courage played out in the real world, where younger generations are focused on combating climate change through meaningful action. We hope viewers will walk away feeling empowered, knowing that their choices matter, and will push for environmental change themselves.

SIGGRAPH: Now, let’s get technical. In “Bonfire” and now again with “Baba Yaga”, your team has employed character AI as well as advanced hand tracking technology. Can you talk a bit about how this has evolved to the final version of “Baba Yaga” that you premiered?

Team: In “Baba Yaga”, interactivity plays a critical role in making you matter. “Baba Yaga” and our more recent interactive projects have a non-linear story structure that can vary widely depending upon the actions viewers take. This approach allows for flexible, rich interactions, where the characters and the environmental elements can always be “alive” and reacting to your decisions and participation.

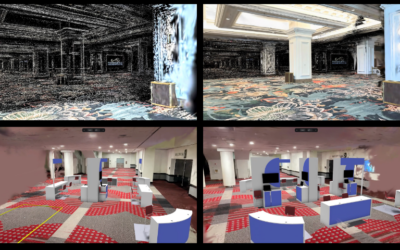

We have built a system called Storyteller to author non-linear VR animated content, which allows artists and engineers to easily encode interactive designs in the game engine. Storyteller lets artists encode the story structure into hierarchical behavior trees, and it acts as the brain at the top level of the experience. It monitors game states, evaluates triggered conditions, and orchestrates story sections and individual moments to happen at exactly the right time. Behavior trees have been used extensively in robotics and game development, and are well suited for this problem space.
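
For readers unfamiliar with behavior trees, the idea can be sketched in a few lines of Python. This is a generic, minimal illustration and not Storyteller’s actual implementation, which is not described in this interview. The node classes, the `viewer_waved` state key, and the moment names `magda_waves_back` and `magda_idles` are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


State = Dict[str, object]  # hypothetical game state: plain key/value pairs


@dataclass
class Condition:
    """Leaf node: checks a predicate against the current game state."""
    predicate: Callable[[State], bool]

    def tick(self, state: State) -> Status:
        return Status.SUCCESS if self.predicate(state) else Status.FAILURE


@dataclass
class Action:
    """Leaf node: 'plays' a story moment by recording its name in a log."""
    name: str

    def tick(self, state: State) -> Status:
        state.setdefault("log", []).append(self.name)
        return Status.SUCCESS


@dataclass
class Sequence:
    """Composite node: runs children in order, stops at the first failure."""
    children: List[object] = field(default_factory=list)

    def tick(self, state: State) -> Status:
        for child in self.children:
            if child.tick(state) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


@dataclass
class Selector:
    """Composite node: tries children in order, stops at the first success."""
    children: List[object] = field(default_factory=list)

    def tick(self, state: State) -> Status:
        for child in self.children:
            if child.tick(state) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


# Hypothetical story fragment: react if the viewer waved, otherwise idle.
story = Selector([
    Sequence([
        Condition(lambda s: bool(s.get("viewer_waved", False))),
        Action("magda_waves_back"),
    ]),
    Action("magda_idles"),
])
```

Ticking `story` once per update with the current game state selects the first branch whose conditions hold, which is how a tree like this can keep a scene responsive to viewer actions without a fixed timeline.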

In “Baba Yaga” and our previous projects “Bonfire” and “Asteroids!”, the characters have AI brains that generate autonomous behavior based on your actions. A key innovation of our character AI system is that it generates procedural motion that blends seamlessly with our hand-crafted, straight-ahead animations and is, ideally, indistinguishable from them. Storyteller combined with our character AI allows you to deepen your relationship with Magda during the course of the experience, as well as with other creatures (e.g., little baby chompy plants) and the rainforest itself.
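
One common way to blend procedural motion with authored animation is to interpolate between two poses with an eased weight. The sketch below assumes that technique for illustration only; the interview does not say how Baobab’s system blends motion. It represents a pose as joint angles in degrees for simplicity (production rigs typically blend rotations as quaternions), and the joint names are hypothetical.

```python
from typing import Dict

Pose = Dict[str, float]  # hypothetical pose: joint name -> angle in degrees


def smoothstep(t: float) -> float:
    """Ease in and out so a blend starts and ends without a visible pop."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend_poses(authored: Pose, procedural: Pose, weight: float) -> Pose:
    """Blend a procedural pose over an authored one.

    weight = 0 keeps the authored animation, weight = 1 uses the procedural
    pose for every joint the procedural layer drives. Joints the procedural
    layer does not touch keep their authored values.
    """
    w = smoothstep(weight)
    blended = dict(authored)
    for joint, angle in procedural.items():
        base = authored.get(joint, angle)
        blended[joint] = (1.0 - w) * base + w * angle
    return blended


# Example: a procedural look-at turns the head toward the viewer while the
# authored animation keeps driving the spine.
authored_pose = {"head_yaw": 0.0, "spine_bend": 5.0}
look_at_pose = {"head_yaw": 40.0}
halfway = blend_poses(authored_pose, look_at_pose, 0.5)
```

Ramping `weight` from 0 to 1 over several frames lets a procedural reaction, such as a glance at the viewer, fade in over the authored performance rather than snapping to it.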

Hand controllers have been the primary input device in VR for the past four years. This year, the Oculus Quest introduced hand tracking, where the headset tracks your hand and finger positions without the use of any controller hardware. Hand tracking gives us the opportunity to make VR experiences even more natural and immersive, because you don’t have to hold controllers and you can see your individual fingers moving rather than only overall hand motion.

We dove full steam into creating “Baba Yaga” so that the interactivity works seamlessly with hand tracking throughout. We first prototyped a core mechanic that worked intuitively with hand tracking. Once we had proved out the hand-tracked core mechanic, we began applying it to our other interactions. “Baba Yaga” works with both controllers and hand tracking, but early feedback from the Venice Film Festival and from viewers is that people actually prefer the experience with hand tracking. We are very excited about the potential for hand tracking to push the level of immersion, especially as the technology becomes more mainstream.

SIGGRAPH: In the video above, Paper Sculptor Megan Brain shares how she created lightbox artwork for the “Baba Yaga” announcement at Tribeca. Can you talk a bit about the inspiration for this promotion?

Team: We were looking for the best way to translate the dioramic look of “Baba Yaga” into a real-world object that would be memorable but also have a handcrafted feel. Taking the “Baba Yaga” key art, we enlisted Megan Brain to create a paper sculpture that reflects the tone and feel of the experience into something people would want to show off on their desks. Megan, an excellent artist who specializes in creating lightboxes, was the perfect fit to design this one-of-a-kind creation.

SIGGRAPH: Finally, can you tell us when headset users will see “Baba Yaga” hit the Oculus store? Also, as regular SIGGRAPH contributors, any hints as to what else might be in the works? Will we hear from Baobab again during SIGGRAPH 2021?

Team: It goes without saying that 2020 has been anything but normal, and a challenging year for everyone. As a studio, we feel incredibly fortunate that we could finish this production from home amidst the pandemic, and it is a true testament to our team’s resolve that we were able to do so. “Baba Yaga” premiered in September at the Venice Film Festival, which, along with SIGGRAPH, was among the first conferences and festivals to showcase VR again (albeit virtually). We look forward to presenting “Baba Yaga” to the public at other festivals and to releasing it commercially in the near future. As a content studio, Baobab is always working on multiple new properties at the same time, and we have many exciting projects in various states of development. SIGGRAPH has been a huge part of our careers and an equally large part of our company’s DNA these past five years. We have presented each of our VR narratives at SIGGRAPH since 2016 (back when the conference hosted a VR Village), and we look forward to next year, when we can hopefully show off our next project at SIGGRAPH 2021 … IN PERSON!

Over 250 hours of SIGGRAPH 2020 content is available on-demand through 27 October, including Baobab’s Talk, “Baba Yaga: Designing An Interactive Folktale”.

Meet the Team

Larry Cutler (Producer) is Chief Technology Officer and co-founder of Baobab Studios. Larry brings 20 years of deep technical leadership in animation creation and development. He currently serves on the Digital Imaging Technology Subcommittee for the Oscar Technical Achievement Awards, and was most recently the Vice President of Solutions at Metanautix, a big data analytics startup company acquired by Microsoft. Previously, Larry was Global Head of Character Technology at DreamWorks Animation, leading character development, animation workflow, rigging, and character simulation. Larry began his career at Pixar Animation Studios as a Technical Director on the films “Monsters, Inc.,” “Toy Story 2,” and “A Bug’s Life.” He holds undergraduate and master’s degrees in computer science from Stanford University.

Eric Darnell (Director) is Chief Creative Officer and co-founder of Baobab Studios. Eric’s career spans 25 years as a computer animation director, screenwriter, story artist, film director, and executive producer. He was the director and screenwriter on all four films in the “Madagascar” franchise, which together have grossed more than $2.5 billion at the box office. He was also executive producer on “The Penguins of Madagascar.” Previously, Eric directed DreamWorks Animation’s very first animated feature film “Antz,” which features the voices of Woody Allen, Gene Hackman, Christopher Walken, and Sharon Stone. Eric earned a degree in journalism from the University of Colorado and a master’s degree in experimental animation from The California Institute of the Arts.

Nathaniel Dirksen (VFX Supervisor) oversees the technical aspects of production on the company’s narrative VR experiences, including “Invasion!,” “Crow: The Legend,” “Bonfire,” and “Baba Yaga.” Previously, he worked in feature animation and visual effects, most recently as the character technical director supervisor for “The Penguins of Madagascar” at PDI/DreamWorks.

Justin Fischer (Rigging Lead) has led the rigging efforts for several projects at Baobab Studios, including “Baba Yaga”, “Bonfire”, and “Crow: The Legend”. Before joining Baobab, Justin gained more than a decade of feature film animation and production experience at LAIKA Studios and DreamWorks Animation.

Amy Rebecca Tucker (Lighting Supervisor/Engineer) has worked as a Lighter and Engineer on Baobab’s projects “Baba Yaga”, “Bonfire”, and “Crow: The Legend”. Amy earned her Master of Visualization Sciences degree from Texas A&M University in 2002. She moved to California in 2009 to work in the lighting department at PDI/DreamWorks, having already worked in television, print and Web design, as an interactive developer for museum exhibits, as an adjunct professor of Web design and development, and as a flight simulation software developer specializing in radar and infrared simulations. Her feature film credits include “Home”, “Penguins of Madagascar”, “Mr. Peabody & Sherman”, and “Rise of the Guardians” as a Production Lighter and Compositor.