“Interactive Augmented Reality Storytelling Guided by Scene Semantics” © 2022 Changyang Li, Haikun Huang, Lap-Fai Yu, George Mason University; and, Wanwan Li, George Mason University, University of South Florida

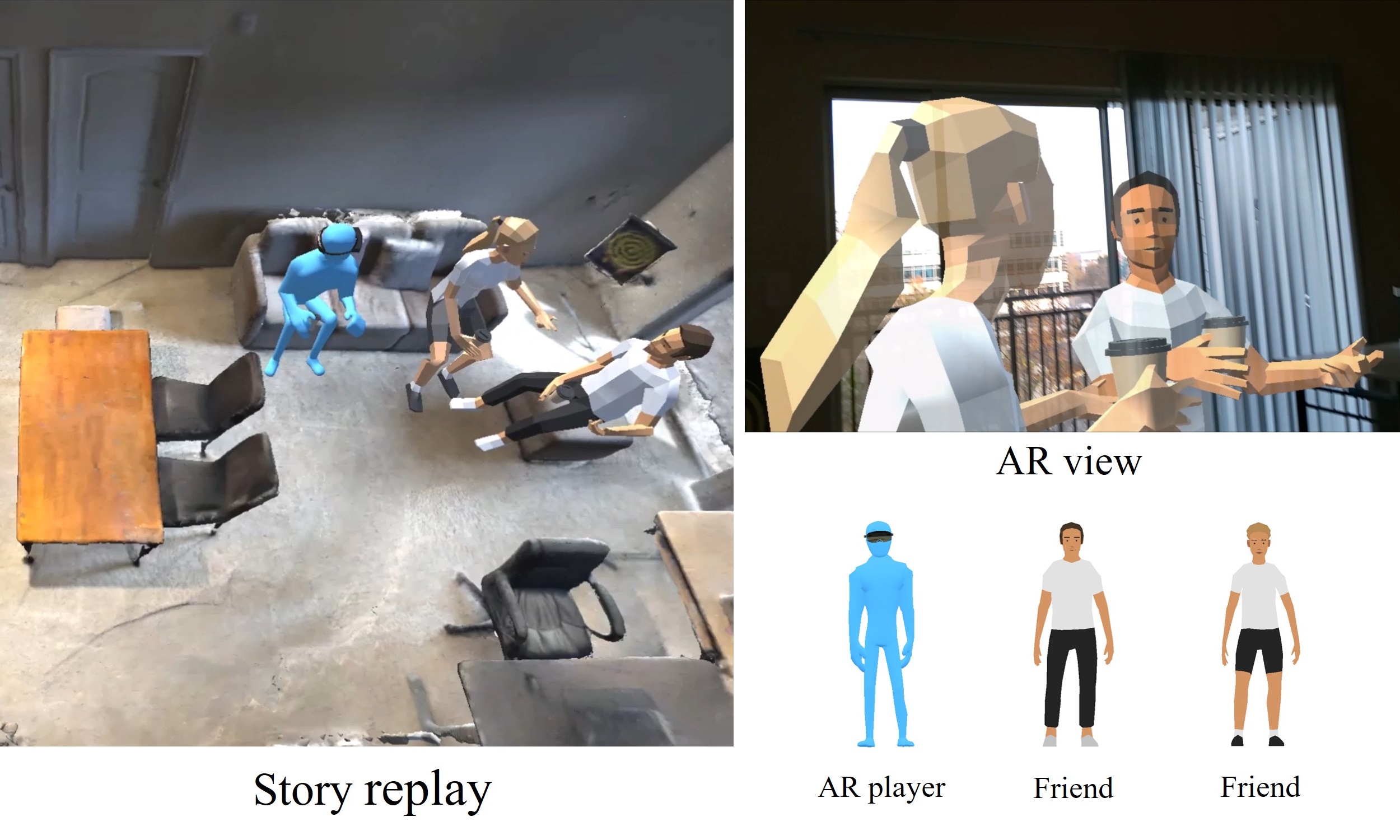

Are we in the real world or an AR-based metaverse? SIGGRAPH 2022 Technical Papers selection “Interactive Augmented Reality Storytelling Guided by Scene Semantics” uses real-world environments and automatically populates an AR story that allows virtual characters and items to adapt to the player’s actions. We sat down with Associate Professor Lap-Fai (Craig) Yu and student Changyang Li to discuss the ins and outs of developing their fascinating research and to hear what they are most excited about for SIGGRAPH 2022.

SIGGRAPH: Share some background on “Interactive Augmented Reality Storytelling Guided by Scene Semantics.” What inspired this research?

Lap-Fai (Craig) Yu (CY): There are many “stories” happening every day. Think about the “story” of a family enjoying an evening: the parents are cooking dinner while the kids are working on their schoolwork. Then, they have dinner together. After that, they tidy up the table and enjoy some family activities, like watching TV or playing board games. If we can model such stories using some high-level representations, and then apply a computational approach to automatically adapt such stories to happen at someone’s apartment, we can provide numerous realistic experiences that someone can visualize, experience, and even interact with in augmented reality! That would greatly enhance the fun and interactive content people can enjoy in the future AR-based metaverse.

Changyang Li (CL): In recent years, an increasing number of AR research topics have focused on adapting virtual content to the semantics of physical environments. Many prior works studied how to adapt virtual content (e.g., characters, objects, user interfaces) statically, considering only spatial relations. They inspired us to investigate an exciting research direction: accommodating a dynamic story, which comprises a sequence of events and thus involves additional temporal relations. This work is also a follow-up to previous computational level design research [done by] our lab.

SIGGRAPH: Describe the process that led to the approach for populating virtual content in real-world environments that is the basis of the paper. How many people were involved? How long did the research take? What problem(s) were you trying to solve?

CL: Our approach mainly consists of a preprocessing stage, followed by an interactive storytelling stage for populating virtual content in real-world environments.

In the preprocessing stage, our approach first extracts semantics from the target scene for storytelling in a semi-automatic manner (automatic instance segmentation and oriented bounding boxes estimation followed by manual refinements). The extracted scene semantics — and a story graph that encodes story plots — are then used to prepare spatial candidates (i.e., candidate solutions about how to populate virtual characters and items at a specific event frame) via a Markov chain Monte Carlo sampling.
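
To make the sampling step more concrete, here is a minimal Python sketch of Metropolis-style MCMC sampling for one character at one event. It is an illustration only, not the authors' implementation: the `SCENE` layout, the `cost` function (stand about 0.8 m from a table), the room size, and all parameter values are assumptions invented for this example.

```python
import math
import random

# Hypothetical scene semantics: object label -> 2D center of its bounding box (meters).
SCENE = {"sofa": (1.0, 4.0), "table": (3.0, 2.0), "tv": (1.0, 0.5)}
ROOM_SIZE = 5.0  # assumed square room, 5 m x 5 m


def cost(pos, anchor="table", preferred_dist=0.8):
    """Toy cost: the character should stand about preferred_dist from the anchor."""
    ax, ay = SCENE[anchor]
    d = math.hypot(pos[0] - ax, pos[1] - ay)
    return (d - preferred_dist) ** 2


def sample_candidates(n_steps=5000, temperature=0.05, step=0.3, seed=0, keep=10):
    """Run a Metropolis random walk and return the `keep` lowest-cost placements."""
    rng = random.Random(seed)
    current = (rng.uniform(0, ROOM_SIZE), rng.uniform(0, ROOM_SIZE))
    current_cost = cost(current)
    visited = {(current_cost, current)}
    for _ in range(n_steps):
        # Symmetric Gaussian proposal around the current placement.
        proposal = (current[0] + rng.gauss(0, step), current[1] + rng.gauss(0, step))
        inside = 0 <= proposal[0] <= ROOM_SIZE and 0 <= proposal[1] <= ROOM_SIZE
        if inside:
            proposal_cost = cost(proposal)
            # Always accept improvements; accept worse moves with Boltzmann probability.
            accept = math.exp(min(0.0, (current_cost - proposal_cost) / temperature))
            if rng.random() < accept:
                current, current_cost = proposal, proposal_cost
        visited.add((current_cost, current))
    return sorted(visited)[:keep]


candidates = sample_candidates()
```

Each entry of `candidates` is a `(cost, (x, y))` pair. A real system would sample over many characters and items at once and score them with scene-aware costs, but the accept/reject loop has the same shape.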

In the interactive storytelling stage, based on the story graph and prepared spatial candidates, our approach selects one spatial candidate for each story event to propose an event instantiation. It then links all event instantiations together to assemble a story considering temporal relations. The module dynamically updates the story by reassembling it based on the players’ actions in real time.
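
As a rough illustration of this runtime stage, the sketch below picks one precomputed candidate per event and links them into a story, then reassembles the story when a player action fixes one of the choices. It is a simplified stand-in rather than the authors' method: it uses dynamic programming instead of their sampling strategy, and the `assemble_story` function, the walking-distance transition cost, and the toy `events` data are all assumptions made for this example.

```python
import math


def assemble_story(events, pinned=None):
    """Pick one candidate per event, minimizing candidate cost plus walking distance.

    events: list (in story order) of lists of (position, cost) candidates.
    pinned: optional {event_index: candidate_index} fixed by the player's actions.
    Returns the chosen candidate index for every event.
    """
    pinned = pinned or {}

    def allowed(i):
        return [pinned[i]] if i in pinned else range(len(events[i]))

    # best[i][j] = (total cost of the best chain ending at candidate j, back-pointer)
    best = [{j: (events[0][j][1], None) for j in allowed(0)}]
    for i in range(1, len(events)):
        layer = {}
        for j in allowed(i):
            pos_j, cost_j = events[i][j]
            prev, total = min(
                ((k, t + math.dist(events[i - 1][k][0], pos_j))
                 for k, (t, _) in best[i - 1].items()),
                key=lambda kt: kt[1],
            )
            layer[j] = (total + cost_j, prev)
        best.append(layer)

    # Backtrack from the cheapest final candidate.
    j = min(best[-1], key=lambda c: best[-1][c][0])
    chain = [j]
    for i in range(len(events) - 1, 0, -1):
        j = best[i][j][1]
        chain.append(j)
    return chain[::-1]


# Toy data: two spatial candidates (position, cost) for each of three events.
events = [
    [((0.0, 0.0), 0.1), ((4.0, 4.0), 0.0)],  # "cook dinner"
    [((0.5, 0.0), 0.2), ((4.0, 3.5), 0.1)],  # "have dinner"
    [((1.0, 0.0), 0.0), ((4.0, 3.0), 0.3)],  # "watch TV"
]
print(assemble_story(events))                 # [0, 0, 0]
print(assemble_story(events, pinned={1: 1}))  # [1, 1, 1]
```

Pinning the second event to its other candidate (for instance, because the player chose a different seat) makes the whole chain shift to nearby placements, which is the kind of real-time reassembly described above.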

While we have a small team (four authors) for this research, we got help from our lab members, friends, and families on user studies and demonstrations. For this work alone, our team focused on the project with full passion for about six months. However, this research also benefits from our lab’s years of accumulated experience in computational level design and VR/AR research.

The problem we were trying to solve was to automatically adapt the same story to different real-world environments, while ensuring that the populated virtual characters and items are contextually compatible with the scene. In addition, we aim to deliver interactive storytelling experiences that enable players to take the role of a character and interact with other virtual content according to the story plots.

SIGGRAPH: What challenges did you face while developing your research?

CY: This project integrates multiple visual computing techniques (e.g., 3D scene reconstruction, segmentation, understanding, registration) and interactive techniques into a single AR storytelling framework, where our approach comes into play for the story adaptation part. The integration of the techniques was quite challenging, especially because some of those techniques were still in the research stage and might not work very robustly. Another challenge was devising an abstract representation of AR stories to enable adaptation to different real-world environments, and our work provides an answer. Beyond that, we also show how to use our representation and sampling approach to adapt stories to the dynamic choices of the user (e.g., where to sit) in real time. This is an essential requirement of interactive AR storytelling experiences, and our approach provides a solution.

CL: The biggest challenge was how to enable interactivity during AR storytelling since we had to consider various cases for a story to evolve corresponding to different users’ actions in dynamic scenarios. This consideration introduced expensive computational needs for real-time AR applications. We thus proposed the hierarchical story sampling strategy to migrate computations regarding the spatial relations into the preprocessing stage, and only retain computations regarding the temporal relations at runtime.

SIGGRAPH: How do you envision this research being used in the future?

CY: We believe what we are doing here is just a start. We provide a basis, which has lots of extension opportunities in both the computer graphics (CG) research community and the industry. Assuming AR keeps developing at a fast pace and everyone has a pair of AR glasses 10 years from now, would people have access to a multitude of AR content like the number of web pages we now have on the internet? Our research essentially provides a useful framework on how to represent, scale, and adapt such AR content to enrich the immersive experiences, possibilities, and values that AR content creators could provide.

CL: We envision that our research has the potential to be applied in application scenarios like AR gaming and education, where content is usually delivered with tangled spatial-temporal relations. Since our method automatically adapts virtual characters and objects to different real environments, users can easily create personalized AR experiences at home.

SIGGRAPH: What excites you most about developing computer graphics and interactive techniques research?

CY: Our group, Design Computing and Extended Reality, at George Mason University focuses on AR/VR content creation. I believe our future world will be fused with a countless amount of immersive and imaginative virtual content. How exciting it is to devise content creation tools for everyone to build such a futuristic world together! We devise toolkits to enable the general public to unleash their creativity and create miracles. We want to enable all people, not just the 3D modeling professionals, to be able to create virtual content, tell their stories, and express themselves through future immersive technologies.

CL: The most exciting thing is that our research is very close to applications, so we can always envision its potential use in future AR applications. For this work, the development stage was a lot of fun because we watched the virtual characters interact with the real environments more and more realistically and naturally. This was an amazing and compelling experience!

SIGGRAPH: What are you most looking forward to experiencing at SIGGRAPH 2022? Anything in Vancouver you plan on visiting before or after the conference?

CY: We are excited to exchange ideas with other researchers and industry practitioners who are also passionate about VR/AR. There are so many things happening every day in the VR/AR space, which means many potential opportunities for collaboration. We very much look forward to collaboration opportunities, especially with the industry, to transform our inventions into real-world impact.

CL: We are excited to see there are more technical papers and demos about VR/AR to be presented at SIGGRAPH 2022. The conference gives us a good opportunity to get some insights from other VR/AR research of top-level quality.

Vancouver is just a flight away! Join us for SIGGRAPH 2022 this 8–11 August in person, virtually, or both. Find the right registration type for you and embark on your SIGGRAPH journey. Register today.

Lap-Fai (Craig) Yu is an associate professor in the Computer Science Department at George Mason University, where he leads the Design Computing and Extended Reality (DCXR) group. He obtained his Ph.D. in computer science from UCLA, with an Outstanding Recognition in Research Award. His research has been featured by New Scientist, the UCLA Headlines, and the IEEE Xplore Innovation Spotlight, and has won Best Paper Honorable Mention awards at 3DV and CHI conferences. He received an NSF CRII award and an NSF CAREER award for his research achievements in computational design and virtual reality. He regularly serves on the technical program committees of ACM SIGGRAPH, ACM CHI, and IEEE Virtual Reality.

Changyang Li is currently a Ph.D. student in computer science at George Mason University, advised by Prof. Lap-Fai (Craig) Yu. He received an M.S. in computer science in 2019 from the University of Virginia, and a B.S. in computer science in 2017 from the Beijing Institute of Technology in Beijing, China. His research interests are in integrating computer vision/graphics and AI techniques into VR/AR applications.