“Towards Occlusion-aware Multifocal Displays” © 2020 Carnegie Mellon University, Technion

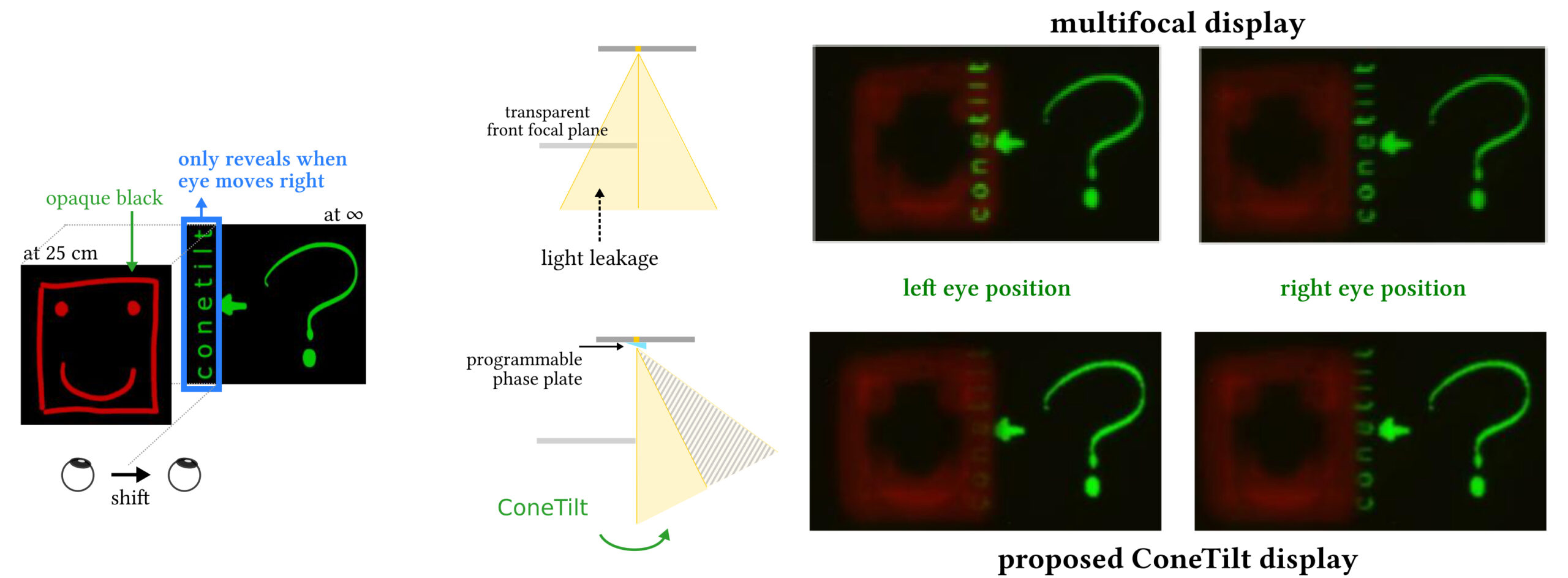

The human visual system uses numerous cues for depth perception, including disparity, accommodation, motion parallax, and occlusion. Through the SIGGRAPH 2020 Technical Paper “Towards Occlusion-aware Multifocal Displays”, researchers from Carnegie Mellon University and Technion demonstrate how the use of a novel ConeTilt operator can enable occlusion-aware multifocal displays for immersive virtual reality environments. We sat down with two researchers behind the project to learn more about this novel method. Check out a preview of the paper below, then continue reading for insight from the research team.

SIGGRAPH: Talk a bit about the process for developing the research for your technical paper “Towards Occlusion-aware Multifocal Displays.” What inspired it?

Rick Chang (RC): ConeTilt display is a happy byproduct. We wanted to solve the occlusion problem of multifocal displays, and our original idea was to replace the display panel with a light-field display and use its angular resolution to control the light emitted by virtual objects. However, we soon found out that the angular resolution we needed was too high, and it would reduce the resolution drastically. We also considered using a phase spatial light modulator (SLM) to control, locally, the spatial-angular tradeoff of the light-field display, but it made the system too complex. So, I was on a bus thinking about the problems, looking at cars passing by, and I suddenly realized that to create occlusion we only need to bend the entire light cone. I was very excited, and I directly went into Aswin’s office and drew the diagram on a whiteboard. This is how we came up with the idea of ConeTilt.

Aswin Sankaranarayanan (AS): It was all Rick. He did come into my office all excited and sketched the idea on my board. I was sold by the time he was done presenting the idea.

SIGGRAPH: Let’s get technical. How did you develop the multifocal display technique in terms of execution?

RC: It was during the winter vacation of 2018 when I built the display. Our lab just bought our first phase SLM. Aswin and I were trying to figure out how to use it — from mounting, calibrating, and choosing a light source to getting it to display a nice-looking image. I spent all that Christmas in the lab, trying to make it work. It was Anat [Levin] who helped the process! She jumped in and provided many useful suggestions and insights. She was in Israel, so we had to go through the prototype piece by piece with a webcam. The remote debugging of an optical setup was really fun.

In my opinion, the key to improving image quality is the field lens attached to the display panel. Adding the field lens makes our derivation simpler and the phase function easier to understand intuitively. The biggest challenge was to align the transparent phase-SLM pixels with the DMD (digital micromirror device). In the end, we changed the polarization state and made the phase SLM act like an amplitude SLM to solve the problem.

AS: This was a painstaking process as we discovered new challenges while building the display. Rick probably rebuilt the prototype at least three times from scratch. There were two pain points in the prototype. The first was having to align the DMD and the SLM, two pixel arrays, pixel to pixel. The second had to do with light sources; the SLM operates best with monochromatic sources, but those cause severe speckle. In the end, Rick went with an LED that was narrow enough to not have severe phase distortions, and avoided speckle in the process.

SIGGRAPH: What do you find most exciting about the final research you presented during SIGGRAPH 2020?

RC: To me, the most exciting thing about ConeTilt is its capability to reduce computation with hardware design. The dense focal planes of the device allow us to create a beautiful 3D scene with only the z-buffer, so we do not need to render defocus blur. ConeTilt further improves the fidelity by allowing our eyes to move while maintaining the occlusion cue without gaze tracking or re-rendering. It is very cool to me that a simple change to the display hardware can drastically reduce the overall computational burden of the VR display system.

AS: For me, it is just fascinating how much of a difference occlusion makes in depth perception. Till we got the first result up and running, I was worried we might be missing something basic.

SIGGRAPH: What’s next for “Towards Occlusion-aware Multifocal Displays”?

RC: At a high level, ConeTilt provides a new way to generate and manipulate light fields in high spatial resolution. I am interested in seeing how these capabilities can be applied to applications other than VR displays.

AS: Since Rick went high level, let me go the opposite route. Multifocal displays would really benefit from advancements in SLM technology. We really need better devices for phase modulation. I feel advances there could really make ConeTilt very practical.

SIGGRAPH: If you’ve attended a SIGGRAPH conference in the past, share your favorite SIGGRAPH memory. If you have not, tell us what you most enjoyed about your first experience.

RC: This was my first SIGGRAPH. To me, SIGGRAPH is the coolest conference. It maintains the number of technical papers, so all papers have full presentations. Beyond this, there is also an Art Gallery, demos of emerging technologies, and so much more! All of these created a unique conference experience. It was unfortunate that this year we had to attend virtually, but this gives more motivation to build a better VR display!

SIGGRAPH: What advice do you have for someone looking to submit to Technical Papers for a future SIGGRAPH conference?

RC: I will let Aswin answer this. [smiles]

AS: Reserve your best ideas for the conference. Reviews are uniformly thorough and very detailed. So it is hard for anything except complete pieces of work to survive the review process. It also never hurts to have cool pictures and illustrations.

Submissions for the SIGGRAPH 2021 Technical Papers program are now open. Share your research at the conference this summer.

Rick Chang is a machine learning researcher at Apple. His research interests include computational photography and displays, computer vision, and machine learning. He received his Ph.D. from Carnegie Mellon University in 2019, where he worked on computational displays. Before that, Rick was a research assistant at Academia Sinica in Taiwan and received his M.S. and B.S. degrees from National Taiwan University, where he worked on image filtering and genetic algorithms, respectively.

Aswin Sankaranarayanan is an associate professor in the ECE department at Carnegie Mellon University, where he is the PI of the Image Science Lab. His research interests are broadly in compressive sensing, computational photography, signal processing, and machine vision. He did his doctoral research at the University of Maryland, where his dissertation won the distinguished dissertation award from the ECE department in 2009. Aswin is the recipient of the CVPR 2019 best paper award, the CIT Dean’s Early Career Fellowship, the Spira Teaching award, the NSF CAREER award, the Eta Kappa Nu (CMU Chapter) Excellence in Teaching award, and the Herschel Rich Invention award from Rice University.