Technical Paper, “Deep Appearance Models for Face Rendering” © 2018 Facebook

The SIGGRAPH 2018 Technical Paper “Deep Appearance Models for Face Rendering,” written by Stephen Lombardi, Jason Saragih, Tomas Simon, and Yaser Sheikh, covers the team’s use of breakthrough technology to achieve detailed facial modeling, with the goal of connecting billions of people who are geographically far apart through authentic social presence. We sat down with Stephen Lombardi to learn more about how the project began and how the team is continuing to work to generate avatars more efficiently.

SIGGRAPH: Your paper showcases how the Facebook Reality Labs team developed a process for using data to create realistic facial animation. What was the discovery process that led to this?

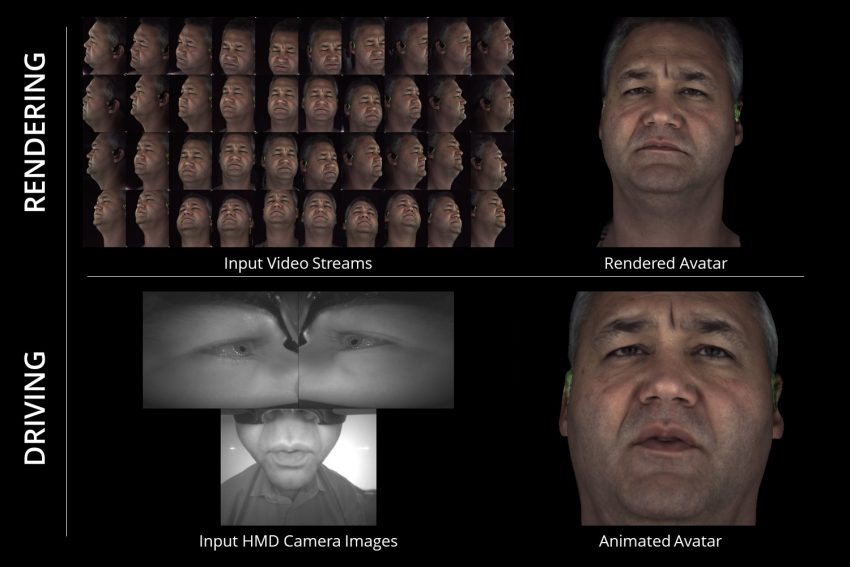

Stephen Lombardi (SL): When we began collecting data with our multi-camera capture system, one of the first things we wanted to do was build realistic face models. A common way to accomplish this is with physics-inspired models, which simulate how the surface of the face physically reflects and scatters light. While these methods can indeed produce very realistic images, they tend to work only if your measurements are both accurate and complete. In other words, inaccurate geometry or partial material property measurements tend to produce artifacts that are very noticeable in the face.

To avoid the drawbacks of physics-inspired models, we turned to Active Appearance Models (AAMs). AAMs can produce realistic renderings despite representing the face with a very coarse triangle mesh. However, AAMs had not been formulated to properly model multi-view imagery, so there wasn’t a good way to handle how the appearance of the face changes as you view it from different angles. A potential solution is to build a separate AAM for each viewpoint and interpolate between them, but this is undesirable because it relies on heuristics about how exactly to combine the results of the individual models. A more elegant solution is to build a single model that handles view-dependent appearance natively, which is what we decided to explore.

SIGGRAPH: How do you go about beginning to build such complex models?

SL: To build a traditional AAM, you would perform Principal Component Analysis (PCA) on geometry and appearance to reduce the representation of the data to a small vector. However, view-dependent appearance effects tend to create non-linearities that PCA is not well-suited to model. Autoencoders, on the other hand, are a type of neural network that reduces the dimensionality of data but, unlike PCA, can perform nonlinear dimensionality reduction. This seemed like a natural way to augment the representational capacity of traditional AAMs. This path led us to conditional autoencoders, which let you provide some known information (in our case, viewpoint) to the autoencoder to explicitly control an aspect of the output.
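Editor’s sketch: the conditioning idea described above can be illustrated with a very small, untrained example. This is not the architecture from the paper. The layer sizes, the `ConditionalAutoencoder` class, the choice to feed the view vector only to the decoder, and the use of NumPy (rather than the PyTorch the team mentions) are all assumptions made to keep the example short. It shows only the mechanism: the same latent code decodes to different outputs when the viewpoint input changes.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_layer(n_in, n_out):
    """Small random weights and zero biases for one dense layer."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


def leaky_relu(x, slope=0.2):
    """Nonlinearity; this is what lets the model go beyond linear PCA."""
    return np.where(x > 0, x, slope * x)


class ConditionalAutoencoder:
    """Hypothetical toy model: encoder -> latent code -> view-conditioned decoder."""

    def __init__(self, data_dim, latent_dim, view_dim, hidden=64):
        self.enc1 = init_layer(data_dim, hidden)
        self.enc2 = init_layer(hidden, latent_dim)
        # The decoder sees the latent code concatenated with the view vector.
        self.dec1 = init_layer(latent_dim + view_dim, hidden)
        self.dec2 = init_layer(hidden, data_dim)

    def encode(self, x):
        w, b = self.enc1
        h = leaky_relu(x @ w + b)
        w, b = self.enc2
        return h @ w + b

    def decode(self, z, view):
        w, b = self.dec1
        h = leaky_relu(np.concatenate([z, view], axis=-1) @ w + b)
        w, b = self.dec2
        return h @ w + b


# Toy data: 4 samples of a 48-dimensional "appearance" vector (made-up sizes).
model = ConditionalAutoencoder(data_dim=48, latent_dim=8, view_dim=3)
x = rng.normal(size=(4, 48))
z = model.encode(x)

# Decode the same latent codes under two assumed viewing directions.
front = np.tile([0.0, 0.0, 1.0], (4, 1))
side = np.tile([1.0, 0.0, 0.0], (4, 1))
out_front = model.decode(z, front)
out_side = model.decode(z, side)

print("latent shape:", z.shape)
print("outputs differ by view:", not np.allclose(out_front, out_side))
```

In a trained model of this kind, the weights would be fit so that each decoded output matches the data observed from the corresponding viewpoint. Here the weights are random, so the outputs are meaningless beyond showing that the view input changes them.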

After we tried some initial experiments with these ideas, we saw some promise with this approach. The quality of the initial results, however, was very far from compelling. It took many months of tweaking (increasing resolution, experimenting with architectures, and tuning hyperparameters) to go from our initial proof-of-concept experiment to the quality of results in our paper.

SIGGRAPH: The implications for real-time rendering using this system are major. How do you hope your research is used now that it’s out there? Do you have any concerns with how it might be applied?

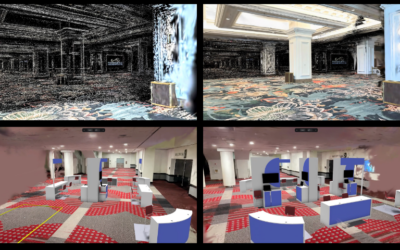

SL: One of the main takeaways of our paper is that we can better leverage multi-view image data by directly modeling view-dependent texture maps. It’s common for face models to either ignore view-dependent effects or model them with physically based models. But we showed that directly modeling the output color enables us to model regions where geometry is incorrect, and the result is greatly improved realism. Part of our goal in putting out this research is to see how this kind of treatment can be used in areas other than facial modeling, such as entire scenes.

We’d also like to see people improve some of the areas where our model falls short. Currently, our model spends a lot of capacity producing textures that account for incorrect geometry. Ideally, the model would be able to correct the geometry directly based on the input images. This is a difficult problem, but solving it would have a big impact on the photorealism of the models.

Ultimately, our goal in doing this research is to achieve authentic social presence so we can enable people who are geographically far apart to feel that they are together in the same space. Of course, as with any breakthrough technology, there is a danger that it could be misapplied. As researchers, it’s important we raise awareness of this possibility and think pragmatically about the safeguards we can put in place to prevent this. Still, we believe that the positive impact of this technology to enable immersive social experiences across vast distances outweighs the potential for abuse.

SIGGRAPH: Technically speaking, what kind of rendering engines are needed to implement the system? Other technology?

SL: Technically, the work can be split into two phases: training and inference. For the training phase, we used PyTorch to implement the autoencoder and train the model. For inference, we found that running the model in Python was too slow to be interactive. To solve this problem, we implemented a custom rendering engine in C++ that directly calls the NVIDIA CUDA Deep Neural Network library (cuDNN). This allowed us to evaluate the decoder network on the GPU in about 5 ms. Once the texture map and vertex positions are decoded, we can render them using a simple OpenGL shader. Because of the large size of the network output (a 1024×1024 texture map and 7,306 vertex positions), we relied on CUDA/OpenGL interop functions to avoid expensive data copies between the GPU and CPU.
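Editor’s sketch: a rough back-of-the-envelope calculation helps show why copies between the GPU and CPU matter at this output size. Only the 1024×1024 texture resolution and the 7,306 vertex count come from the interview. The three color channels, 32-bit float storage, three coordinates per vertex, and the 90 Hz frame rate are assumptions chosen for illustration, and the helper names are hypothetical.

```python
BYTES_PER_FLOAT32 = 4  # assumption: outputs stored as 32-bit floats


def texture_bytes(width, height, channels=3, bytes_per_value=BYTES_PER_FLOAT32):
    """Size of one decoded texture map (channel count is an assumption)."""
    return width * height * channels * bytes_per_value


def vertex_bytes(num_vertices, coords=3, bytes_per_value=BYTES_PER_FLOAT32):
    """Size of one set of decoded vertex positions (x, y, z per vertex)."""
    return num_vertices * coords * bytes_per_value


tex = texture_bytes(1024, 1024)   # resolution from the interview
verts = vertex_bytes(7306)        # vertex count from the interview
per_frame = tex + verts

frame_rate_hz = 90                # assumed display rate, not from the source
# A CPU round trip means one download from the GPU and one upload back.
round_trip_per_second = per_frame * 2 * frame_rate_hz

print(f"texture per frame:  {tex / 2**20:.2f} MiB")
print(f"vertices per frame: {verts / 2**10:.2f} KiB")
print(f"round-trip traffic: {round_trip_per_second / 2**30:.2f} GiB/s")
```

Under these assumptions the texture dominates the per-frame output by a wide margin, which is consistent with keeping the decoded data on the GPU and handing it to OpenGL directly.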

SIGGRAPH: What’s next for Facebook Reality Labs? (Specifically, for your research team?)

SL: Our research team in Pittsburgh is going to continue our quest to connect billions of people who are geographically far apart through authentic social presence. We’re going to tackle hands, bodies, and hair, and continue to learn how to generate avatars more efficiently.

SIGGRAPH: What advice do you have for a first-time contributor submitting to the SIGGRAPH Technical Papers program?

SL: During the process of putting together the paper, I found that working on the video allowed me to better think through how to explain the ideas than working on the writing directly, so it was valuable to start it early.

Meet the Researchers

Stephen Lombardi is a research scientist at Facebook Reality Labs. He received his bachelor’s in computer science from The College of New Jersey in 2009, and his Ph.D. in computer science from Drexel University in 2016. His doctoral work aimed to estimate reflectance and illumination of objects and scenes from small sets of photographs. He originally joined FRL as a postdoctoral researcher in 2016. His current research interests are combining techniques from computer graphics and machine learning to create data-driven photorealistic avatars.

Jason Saragih is a research scientist at Facebook Reality Labs (FRL). He works at the intersection of graphics, computer vision, and machine learning, specializing in human modeling. He received his bachelor’s in mechatronics and Ph.D. in computer science from the Australian National University in 2004 and 2008, respectively. Prior to joining FRL in 2015, Jason developed systems for the mobile AR industry. He has also worked as a postdoc at Carnegie Mellon University and as a research scientist at CSIRO.

Tomas Simon is a research scientist at Facebook Reality Labs. He received his Ph.D. in 2017 from Carnegie Mellon University, advised by Yaser Sheikh and Iain Matthews. He holds a B.S. in telecommunications from Universidad Politecnica de Valencia and an M.S. in robotics from Carnegie Mellon University, which he obtained while working at the Human Sensing Lab, advised by Fernando De la Torre. His research interests lie mainly in using computer vision and machine learning to model faces and bodies.

Yaser Sheikh is an associate professor at the Robotics Institute, Carnegie Mellon University. He also directs Facebook Reality Labs’ Pittsburgh location, which is devoted to achieving photorealistic social interactions in AR and VR. His research broadly focuses on machine perception and rendering of social behavior, spanning sub-disciplines in computer vision, computer graphics, and machine learning. With colleagues and students, he has won the Honda Initiation Award (2010); Popular Science’s “Best of What’s New” Award; the best student paper award at CVPR (2018); best paper awards at WACV (2012), SAP (2012), SCA (2010), and ICCV THEMIS (2009); and the best demo award at ECCV (2016). He also received the Hillman Fellowship for Excellence in Computer Science Research (2004). Yaser has served as a senior committee member at leading conferences in computer vision, computer graphics, and robotics, including SIGGRAPH (2013, 2014), CVPR (2014, 2015, 2018), ICRA (2014, 2016), and ICCP (2011), and has served as an associate editor of CVIU. His research has been featured by various media outlets, including The New York Times, BBC, MSNBC, Popular Science, WIRED, The Verge, and New Scientist.
