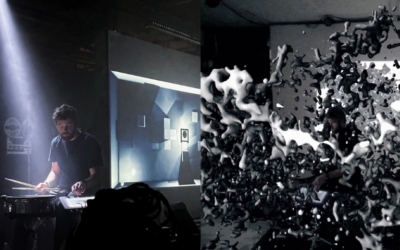

Image credit: Example hair models rendered on the GPU using hair meshes. Character model by Lee Perry-Smith (ir-ltd.net).

Pioneering a new era in real-time hair rendering, the SIGGRAPH 2024 Technical Paper “Real-time Hair Rendering with Hair Meshes” introduces methods that substantially improve both the efficiency and the realism of strand-based hair in computer graphics. Contributors Gaurav Bhokare, Eisen Montalvo, Elie Diaz, and Cem Yuksel offer a deeper look into how these techniques, such as on-the-fly geometry generation and procedural styling, are pushing the boundaries of strand-based hair rendering. Learn more as we unpack the technology and its potential to transform digital artistry.

SIGGRAPH: Share an overview of “Real-time Hair Rendering with Hair Meshes.” What inspired this research?

Cem Yuksel (CY), Eisen Montalvo (EM), Elie Diaz (ED), and Gaurav Bhokare (GB): High-quality hair rendering has been a longstanding challenge for video games. A full-resolution hair model can easily take hundreds of megabytes to store, which also makes it very expensive to render.

We recognized that hair meshes offered an ideal solution for this problem. Hair meshes were originally designed for movie-quality hair modeling and, most importantly for our purposes, they could represent a hair model using only kilobytes of data. We just needed to figure out how to efficiently generate the full-resolution strand-based hair model from the hair mesh representation on the GPU during rendering.

The result is a method that can render hair 10 times faster than the state of the art. In addition, because we generate the hair strands while rendering, using level-of-detail techniques is trivial. As a result, we can render high-quality hair for hundreds of characters and do so 10 times faster than what it takes contemporary game engines to render hair for only a single character.
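Because strands are generated at render time, a level-of-detail scheme can simply generate fewer strands for characters that cover fewer pixels. The heuristic below is a hypothetical sketch, not the paper's method: it assumes the strand count scales linearly with a character's projected height in pixels (`full_detail_height_px` and `min_fraction` are assumed tuning constants) and widens the surviving strands so the overall hair coverage stays roughly constant.

```python
def hair_lod(full_strand_count, projected_height_px,
             full_detail_height_px=1080.0, min_fraction=0.02):
    """Hypothetical LOD heuristic: choose how many strands to generate this frame.

    Returns (strand_count, width_scale). Dropping strands by a factor f and
    widening the remaining ones by 1/f keeps the total strand area roughly
    constant, so distant hair does not look thinner.
    """
    # Fraction of full detail, clamped so far-away characters keep some strands.
    fraction = projected_height_px / full_detail_height_px
    fraction = min(1.0, max(min_fraction, fraction))
    strand_count = max(1, round(full_strand_count * fraction))
    width_scale = full_strand_count / strand_count
    return strand_count, width_scale


# A crowd: one close-up hero character and several smaller background characters.
for height_px in (1080, 270, 54, 5):
    count, widen = hair_lod(100_000, height_px)
    print(f"{height_px:5d} px tall -> {count:6d} strands, width x{widen:.1f}")
```

Since no strand data is stored per character, the only per-character cost of this kind of scheme is the generation work for the strands actually requested.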

SIGGRAPH: Tell us more about the technique used in “Real-time Hair Rendering with Hair Meshes.” How does the hair mesh structure achieve massive reductions in storage and memory bandwidth compared to traditional methods?

CY, EM, ED, GB: The problem with prior methods for high-quality hair rendering is that they must store each individual hair strand. A full-resolution hair model can have more than 100,000 hair strands, each with potentially complex geometry, so storing all strands is extremely expensive and makes rendering inefficient.

Hair meshes allow precise modeling of the overall hair shape without dealing with individual hair strands. Hair strands are generated inside the hair mesh, and the strand-level details are added via a variety of procedural operations. This makes hair meshes very powerful and practical for modeling virtually any hairstyle.
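To make the idea of generating strands inside a hair mesh concrete, here is a minimal sketch. It assumes a simplified hair mesh made of stacked quad layers (layer 0 on the scalp, the last layer at the hair tips), that each strand is identified by a root coordinate (u, v) in the quad, and that points are interpolated linearly between layers; the paper's actual mesh topology, interpolation, and data layout may differ, and the function names are hypothetical.

```python
import random

def lerp(a, b, w):
    """Linear interpolation between two 3D points."""
    return tuple(x + (y - x) * w for x, y in zip(a, b))

def bilerp(quad, u, v):
    """Bilinear interpolation over a quad whose corners are ordered
    (0,0), (1,0), (0,1), (1,1) in the face's (u, v) space."""
    return lerp(lerp(quad[0], quad[1], u), lerp(quad[2], quad[3], u), v)

def generate_strand(layers, u, v, samples_per_segment=4):
    """Generate one strand polyline inside a layered quad hair mesh.

    layers: list of quads (each a list of four (x, y, z) corners), root first.
    The strand keeps the same (u, v) in every layer, so its shape follows the mesh.
    """
    num_segments = len(layers) - 1
    points = []
    for i in range(num_segments * samples_per_segment + 1):
        t = i / samples_per_segment              # continuous layer coordinate
        k = min(int(t), num_segments - 1)        # lower layer of this segment
        w = t - k                                # blend weight toward layer k + 1
        points.append(lerp(bilerp(layers[k], u, v), bilerp(layers[k + 1], u, v), w))
    return points

# Toy hair mesh: a unit square extruded straight up through three layers.
layers = [[(0, 0, z), (1, 0, z), (0, 1, z), (1, 1, z)] for z in range(3)]

# Scatter strand roots over the root face and generate their polylines.
rng = random.Random(0)
strands = [generate_strand(layers, rng.random(), rng.random()) for _ in range(100)]
print(len(strands), "strands with", len(strands[0]), "points each")
```

Only the mesh vertices and the root coordinates are inputs here; the strand points themselves are recomputed whenever they are needed.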

A relatively low-resolution hair mesh is sufficient to represent a full-resolution hair model with intricate details. The only storage needed is the hair mesh geometry and the parameters of the procedural functions used. This means storing on the order of 100,000 times less data than strand-based representations.
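A back-of-the-envelope calculation shows where a ratio of this size can come from. All counts below are illustrative assumptions, not figures from the paper: 150,000 strands with 128 points each, stored as three 32-bit floats per point, versus a hair mesh with 200 vertices plus 64 32-bit procedural parameters. Mesh topology and per-strand attributes are ignored on both sides.

```python
def strand_model_bytes(num_strands, points_per_strand, bytes_per_point=12):
    """Explicit strands: every point of every strand is stored (xyz as float32)."""
    return num_strands * points_per_strand * bytes_per_point

def hair_mesh_bytes(num_vertices, num_procedural_params,
                    bytes_per_vertex=12, bytes_per_param=4):
    """Hair mesh: mesh vertices plus the parameters of the procedural operations."""
    return num_vertices * bytes_per_vertex + num_procedural_params * bytes_per_param

strands = strand_model_bytes(150_000, 128)   # 230,400,000 bytes, about 230 MB
mesh = hair_mesh_bytes(200, 64)              # 2,656 bytes, about 2.7 KB
print(f"{strands:,} B vs {mesh:,} B -> ratio {strands / mesh:,.0f}")
```

With these assumed counts the ratio comes out at roughly 87,000, the same order of magnitude as the figure quoted above.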

Notably, unlike purely procedural hair generation methods, which are highly limited in what they can represent, hair meshes support complex hairstyles without any compromise in quality or hairstyle diversity.

SIGGRAPH: What role do mesh shaders and custom texture layouts play in distributing computations and offloading tasks to texture units?

CY, EM, ED, GB: Mesh shaders are not required at all. Although mesh shaders allow additional optimizations, at SIGGRAPH 2024 we also presented an implementation that uses only vertex (and fragment) shaders in WebGL, running in a web browser on a mobile phone. An earlier implementation of ours used tessellation shaders.

In the paper, we recommend using compute shaders with software rasterization, simply because the software rasterizers in contemporary game engines outperform hardware rasterizers for small triangles, and hair strands are rendered as exactly such small triangles. Further optimizations are possible by rendering hair strands as curves or polylines without triangulating them at all.
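As a rough illustration of rendering strands as polylines without triangulating them, the sketch below rasterizes each polyline segment in software with a simple DDA walk and a per-pixel depth test. It is a hypothetical, heavily simplified example (one-pixel-wide lines, no anti-aliasing or transparency, a single thread), not the rasterizer used in the paper; a GPU version would typically process segments in parallel and resolve depth with atomic operations.

```python
import math

def rasterize_polylines(polylines, width, height):
    """Rasterize screen-space polylines into a depth buffer.

    polylines: list of point lists, each point (x, y, depth) in pixel units.
    Returns a height x width list of rows holding the nearest depth per pixel
    (infinity where nothing was drawn).
    """
    depth = [[math.inf] * width for _ in range(height)]
    for points in polylines:
        for (x0, y0, z0), (x1, y1, z1) in zip(points, points[1:]):
            # One sample per pixel along the segment's major axis (DDA).
            steps = max(1, math.ceil(max(abs(x1 - x0), abs(y1 - y0))))
            for i in range(steps + 1):
                w = i / steps
                px = math.floor(x0 + (x1 - x0) * w)
                py = math.floor(y0 + (y1 - y0) * w)
                z = z0 + (z1 - z0) * w
                # Depth test: keep the nearest sample (an atomic min on a GPU).
                if 0 <= px < width and 0 <= py < height and z < depth[py][px]:
                    depth[py][px] = z
    return depth

strand = [(0.5, 0.5, 1.0), (4.5, 0.5, 2.0), (4.5, 3.5, 2.0)]
buffer = rasterize_polylines([strand], width=8, height=4)
for row in buffer:
    print(" ".join("." if d == math.inf else f"{d:.1f}" for d in row))
```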

Nonetheless, our performance evaluation in the paper was done with mesh shaders using hardware rasterization, showing more than an order of magnitude improvement over the fastest alternative.

All our implementations offload some of our computations to the hardware texture units. The texture units on the GPU are optimized for bilinear and trilinear filtering, which are the core operations for generating hair strands within a hair mesh. By carefully converting the hair mesh data into a hair mesh texture, we could offload most of the computation for hair generation to the texture units. What remains are the procedural styling operations, which are performed in software within the mesh, compute, or vertex shaders.
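The sketch below emulates on the CPU what a single hardware trilinear texture fetch computes, to show why generating strand points maps well onto texture units. It assumes a hypothetical layout in which one hair mesh face is packed into a tiny 3D texture of size 2 x 2 x L (the four quad corners for each of L layers) holding vertex positions; the paper's actual hair mesh texture layout is not reproduced here. Real hardware also computes filter weights with limited fractional precision, which this emulation ignores.

```python
import math

def lerp(a, b, w):
    """Linear interpolation between two 3D points."""
    return tuple(x + (y - x) * w for x, y in zip(a, b))

def texel(tex, x, y, z):
    """Fetch one texel with clamp-to-edge addressing; tex is indexed [z][y][x]."""
    z = min(max(z, 0), len(tex) - 1)
    y = min(max(y, 0), len(tex[0]) - 1)
    x = min(max(x, 0), len(tex[0][0]) - 1)
    return tex[z][y][x]

def sample_trilinear(tex, s, t, r):
    """Emulate a linear-filtered 3D texture fetch at normalized coordinates
    (s, t, r), with texel centers at (i + 0.5) / size as on GPUs."""
    fx = s * len(tex[0][0]) - 0.5
    fy = t * len(tex[0]) - 0.5
    fz = r * len(tex) - 0.5
    x0, y0, z0 = math.floor(fx), math.floor(fy), math.floor(fz)
    ax, ay, az = fx - x0, fy - y0, fz - z0

    # Eight texel reads and seven lerps: the work a texture unit does in one fetch.
    def plane(z):
        bottom = lerp(texel(tex, x0, y0, z), texel(tex, x0 + 1, y0, z), ax)
        top = lerp(texel(tex, x0, y0 + 1, z), texel(tex, x0 + 1, y0 + 1, z), ax)
        return lerp(bottom, top, ay)

    return lerp(plane(z0), plane(z0 + 1), az)

def hair_point(tex, u, v, layer_t):
    """Map strand coordinates (u, v in [0, 1]; layer_t in [0, L - 1]) to
    normalized texture coordinates that land exactly on the stored corners."""
    w, h, d = len(tex[0][0]), len(tex[0]), len(tex)
    s = (0.5 + u * (w - 1)) / w
    t = (0.5 + v * (h - 1)) / h
    r = (0.5 + layer_t) / d
    return sample_trilinear(tex, s, t, r)

# A toy hair mesh face as a 2 x 2 x 3 "texture": tex[layer][row][col] = corner position.
tex = [[[(0.0, 0.0, z), (1.0, 0.0, z)], [(0.0, 1.0, z), (1.0, 1.0, z)]]
       for z in (0.0, 1.0, 2.0)]
print(hair_point(tex, 0.25, 0.5, 1.5))
```

On a GPU, the body of `sample_trilinear` collapses into one filtered texture read, leaving only the coordinate mapping and the procedural styling to shader code.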

In addition to the techniques we describe in our paper for converting a hair mesh to a hair mesh texture, it is possible to achieve further optimizations using “Seiler’s Interpolation for Evaluating Polynomial Curves,” which was separately presented at SIGGRAPH 2024.
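Seiler's interpolation rewrites a polynomial curve so it can be evaluated with linear interpolations, the operation that filtering hardware accelerates. The sketch below shows one such form for a single coordinate of a cubic Bézier curve: an outer interpolation blends the chord between the end points with a second linear term, using the weight t(1 - t). The coefficient formulas were obtained here by matching this form term by term against the Bernstein form, and the script compares the two numerically; the paper's own notation, and how it combines with a hair mesh texture, may differ.

```python
def lerp(a, b, w):
    return a + (b - a) * w

def bezier_bernstein(p0, p1, p2, p3, t):
    """Reference: cubic Bezier in Bernstein form (one coordinate)."""
    s = 1.0 - t
    return s**3 * p0 + 3 * s * s * t * p1 + 3 * s * t * t * p2 + t**3 * p3

def seiler_coefficients(p0, p1, p2, p3):
    """Precompute coefficients once per curve (e.g., when building a texture)."""
    return p0, 3 * p1 - p0 - p3, 3 * p2 - p0 - p3, p3

def eval_seiler(c0, c1, c2, c3, t):
    """The same cubic evaluated with three linear interpolations."""
    return lerp(lerp(c0, c3, t), lerp(c1, c2, t), t * (1.0 - t))

control = (1.0, -2.0, 3.5, 0.25)
coeffs = seiler_coefficients(*control)
for t in (0.0, 0.25, 0.5, 0.9, 1.0):
    print(t, bezier_bernstein(*control, t), eval_seiler(*coeffs, t))
```

Expanding `eval_seiler` shows why the coefficients work: the outer blend contributes (1 - t)^3 p0 + t^3 p3 plus an extra t(1 - t)(p0 + p3), which the choice c1 = 3p1 - p0 - p3 and c2 = 3p2 - p0 - p3 cancels exactly.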

SIGGRAPH: How do the procedural styling operations in “Real-time Hair Rendering with Hair Meshes” enable realistic strand variations and support a wide range of hairstyles? Beyond real-time rendering, what other areas or industries could benefit from these techniques? Additionally, what future advancements or breakthroughs do you foresee for this technology in enhancing realism and performance?

CY, EM, ED, GB: Virtually all high-quality hair modeling techniques used in production today rely on procedural operations to add variations between hair strands. The advantage of hair meshes is that they offer a mesh modeling step to easily control the overall shape of the hairstyle. In addition, the hair mesh provides a consistent volumetric embedding to define the procedural operations, which is critical.

The procedural operations described in our paper are similar to methods used with other hair modeling tools. The advantage here is that they operate in the local volumetric space of the hair mesh. We have shown that a wide variety of hairstyles can be achieved using the basic set of procedural styling operations we described. The local volumetric space of the hair mesh makes it easy to add other operations, if needed.
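To illustrate what "operating in the local volumetric space of the hair mesh" can mean, here is a hypothetical curl operation; it is not one of the operations defined in the paper. It works purely on a strand's local coordinates (u, v, t): the strand circles around its root coordinate while t advances through the layers. The ramp over the first layer and the clamping to the mesh volume are assumptions made for this sketch.

```python
import math

def curl_local(u, v, num_layers, radius, turns_per_layer,
               samples_per_layer=8, phase=0.0):
    """Hypothetical curl operation in hair-mesh local space.

    Returns (u, v, t) samples for one strand: the strand circles around its
    root coordinate (u, v) while t advances through the layers. The radius
    ramps up over the first layer so the root stays where it was placed.
    """
    samples = []
    for i in range((num_layers - 1) * samples_per_layer + 1):
        t = i / samples_per_layer
        r = radius * min(1.0, t)                     # no offset at the root
        angle = 2.0 * math.pi * turns_per_layer * t + phase
        # Clamp so the curled strand stays inside the hair mesh volume.
        cu = min(1.0, max(0.0, u + r * math.cos(angle)))
        cv = min(1.0, max(0.0, v + r * math.sin(angle)))
        samples.append((cu, cv, t))
    return samples

curly = curl_local(0.5, 0.5, num_layers=4, radius=0.1, turns_per_layer=1.5)
print(curly[:3])
```

Each (u, v, t) sample would then be mapped to world space through the hair mesh, for example by bilinear interpolation within layers and linear interpolation between them. Because the radius is expressed in local units, curls automatically grow or shrink with the local cross-section of the hair mesh; a fixed world-space radius would instead require knowing the mesh's local scale.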

In addition, the consistent volumetric embedding of a hair mesh is crucial for animating hair by simulating the hair mesh. This is analogous to the standard practice of simulating a small number of guide hairs, as opposed to all hair strands. The difference is that, unlike guide hairs, a hair mesh offers an efficient representation of the overall hairstyle with topological connections that make it easy to incorporate hair-hair interactions. While simulation methods using guide hairs struggle with preserving the intended shape of a hairstyle, hair meshes can easily do so, even after extreme deformations, as demonstrated in our prior work on this topic.
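The consistent volumetric embedding is what lets a simulated hair mesh drive the strands: if each strand point is stored as local coordinates (u, v, t), moving the mesh vertices moves every strand point with it, with no per-strand simulation. The sketch below shows this on a toy layered quad mesh, using a hypothetical `bend` function as a stand-in for a simulation step; it does not implement Vertex Block Descent or any particular solver.

```python
def lerp(a, b, w):
    """Linear interpolation between two 3D points."""
    return tuple(x + (y - x) * w for x, y in zip(a, b))

def embedded_point(layers, u, v, t):
    """World position of local coordinates (u, v, t) inside a layered quad mesh."""
    k = min(int(t), len(layers) - 2)
    w = t - k

    def bilerp(q):
        return lerp(lerp(q[0], q[1], u), lerp(q[2], q[3], u), v)

    return lerp(bilerp(layers[k]), bilerp(layers[k + 1]), w)

def bend(layers, amount):
    """Stand-in for a simulation step: push layer k sideways by amount * k**2."""
    return [[(x + amount * k * k, y, z) for (x, y, z) in quad]
            for k, quad in enumerate(layers)]

rest = [[(0.0, 0.0, z), (1.0, 0.0, z), (0.0, 1.0, z), (1.0, 1.0, z)]
        for z in (0.0, 1.0, 2.0, 3.0)]
strand_local = [(0.3, 0.7, i * 0.25) for i in range(13)]   # one strand, stored once

deformed = bend(rest, 0.2)
before = [embedded_point(rest, *p) for p in strand_local]
after = [embedded_point(deformed, *p) for p in strand_local]
print("mesh vertices updated:", sum(len(q) for q in deformed))
print("root moved by", after[0][0] - before[0][0], "tip moved by", after[-1][0] - before[-1][0])
```

Only the 16 mesh vertices change; every strand point follows from its stored local coordinates, so the root stays attached and the tip swings with the deformed mesh.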

Combining this with recent advancements in deformable simulations, such as the “Vertex Block Descent” method presented at SIGGRAPH 2024, offers a highly efficient hair simulation approach both for games and offline graphics productions, like visual effects and feature animation.

Our research once again shows the power of on-the-fly geometry generation. The hair models we render have the same geometric complexity (i.e., triangle count) as full-resolution strand-based hair models. Yet, we can render them more than an order of magnitude faster than the alternative methods, because we are not using pre-generated strands/triangles. A similar approach can be taken for rendering other types of objects, minimizing the cost of data movement by generating the triangles on the GPU during rendering.

SIGGRAPH: What advice do you have for someone who wants to submit to Technical Papers for a future SIGGRAPH conference?

CY, EM, ED, GB: A paper published at SIGGRAPH is expected to have technical novelty and to substantially improve the state of the art according to a desirable metric, such as performance, quality, or usability. Therefore, authors must be familiar with state-of-the-art techniques, including existing methods that may not yet be widely used in practice, and understand which existing methods they must compare their work against, and how.

In addition, SIGGRAPH papers are expected to have highly polished visuals, even if the core contribution of the work is not about visual quality. Authors who spend extra time improving all the visuals in their submissions therefore often receive more favorable evaluations from reviewers.

Furthermore, SIGGRAPH papers are often well written: clearly explaining the contributions of the work, justifying the technical steps taken, providing evaluations indicating that the proposed methods improve the state of the art, and discussing any limitations. It is crucial that the paper tells the right story for the proposed method and makes the right claims that are justified by the presented results.

Feeling inspired? SIGGRAPH 2025 Technical Papers submissions are open! Submit your research today.

Elie Diaz is a fourth-year Ph.D. student in the University of Utah’s Graphics lab, working with Dr. Cem Yuksel. His research interests revolve around physics-based simulation: he has worked on hair simulation and on using the Material Point Method to simulate snow, and he is now working to extend the Vertex Block Descent method. His previous experience includes internships at Roblox as well as graphics research at the Georgia Institute of Technology.

Eisen Montalvo is a Principal Computer Engineer at RTX, working at its Immersive Design Centers. After completing his Master’s degree in 2023, Eisen started a Ph.D. in Graphics and Visualization under the guidance of Dr. Cem Yuksel. His research interests include realistic hair rendering, physics-based animation, and ray tracing.

Gaurav Bhokare is a second-year Ph.D. student at the University of Utah, conducting graphics research under the guidance of Dr. Cem Yuksel. His research focuses on high-performance graphics, advanced image synthesis techniques, and hardware ray tracing.

Cem Yuksel is an associate professor in the Kahlert School of Computing at the University of Utah and the founder of Cyber Radiance LLC, a computer graphics software company. Previously, he was a postdoctoral fellow at Cornell University after receiving his Ph.D. in Computer Science from Texas A&M University. His research interests are in computer graphics and related fields, including physically based simulation, realistic image synthesis, rendering techniques, global illumination, sampling, GPU algorithms, graphics hardware, modeling complex geometries, knitted structures, and hair modeling, animation, and rendering.