DrawmaticAR © Yosun Chang

The SIGGRAPH 2020 Real-Time Live! event was exciting for many reasons — including an appearance by Caramel the corgi. We connected with contributor and Real-Time Live! Audience Choice award recipient Yosun Chang to learn more about the development of her two 2020 selections, PaperMATA: Build Habitats in AR for AI (Appy Hour) and DrawmaticAR (Real-Time Live!), hear about her process as a one-woman creator, and get to know her corgi, who stole the live show.

SIGGRAPH: Share some background about your 2020 selections, PaperMATA and DrawmaticAR. What inspired these projects?

Yosun Chang (YC): I’ve started to break down my decision paralysis on what to work on into three degrees of freedom: practical, mad science, and artistry.

Practical

These selections were my “hot” projects around the February SIGGRAPH submissions time frame, so minutes before the 11:59 pm GMT deadline, I submitted both! At the time, I was very keen on turning both into self-sustaining endeavors. Both were the then-current incarnations of my explorations on paper-based creation for augmented reality — an area I remain very interested in.

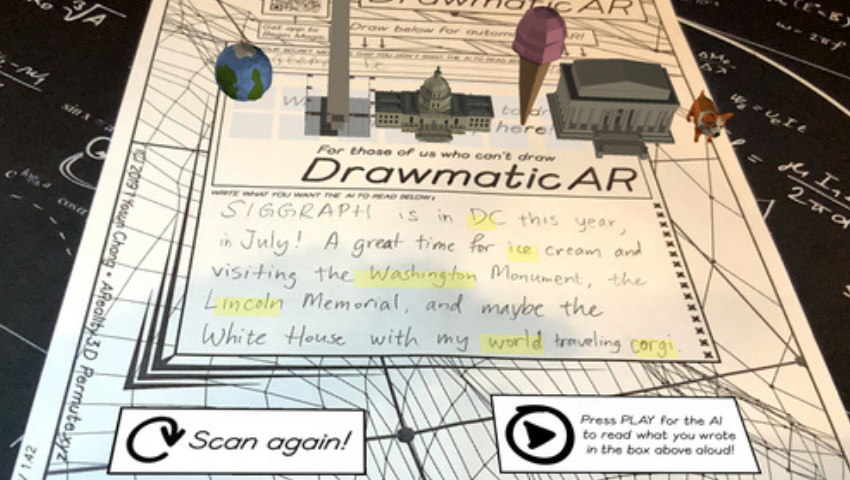

DrawmaticAR started as a hackathon project in September that didn’t win. Its first incarnation was a bit disjointed and used Microsoft Azure Cognitive Services. On the day of the contest, I rapidly built the entire thing, going from a crazy burning idea (one that swept me off my feet that morning) to an App Store submission in eight hours, before the 5 pm ET submission deadline. Because I’d somehow hacked it together fast enough to submit it to the App Store in time, an EdTech influencer discovered it in February and kept cajoling me to fix it up so she could share it at a conference. I revitalized it and added new features, including TensorFlow handwriting-to-text, Stanford NLP, and Google Poly. I applied my zero-marketing-spend test, and DrawmaticAR passed with flying colors.

I crashed TCEA remotely to soft-launch DrawmaticAR and got trending tweet status, plus DMs from education coordinators who wanted to volume-license it for their schools. A Twitter account attracted hundreds of education-industry-specific followers, and I submitted PaperMATA and DrawmaticAR to SIGGRAPH, which continues to be a home for my projects and brings them new life.

Mad Science

What if, instead of the usual learning environment, you could have Mary Poppins be your governess and “magic what you write into reality”? The vision of DrawmaticAR is literally that, or the ultimate tutor-as-an-app you always wished you had growing up. For a long time, I had wanted to try breaking up language into command structures that can be mapped (through an intermediary stochastic model, for serendipity) directly to 3D-animated models and into instantly interactive experiences. By now, we’ve all seen the standard “visual coding” paradigm ad nauseam. These approaches are disjointed and even antiquated, considering the modern advances in ML/AI and the processing power available on smartphones. Why not connect the dots, generate your AR scene automatically straight from your words, and automagically turn certain grammatical structures into mini games? That’s actually what you were thinking of when you said it!

Artistry

PaperMATA came about as a “new vision of Walden” (I think I’d just rewatched “La Planète sauvage”), and I thought about a creative, meritocracy-driven valuation of real estate that induces “natural learning” for “AI refugees”. A “habitat for humanity” of sorts, created by humans, for AI “homunculi”. What if, instead of glutting your model with meaningless streams of data, you expose your AI to a “gentler, more humane” source of learning? What if AI could learn by living a life? I also wondered about the old Spielberg movie where AI was (fictionally) capable of love, and about an inspiring conversation at a midsummer wedding about a transcendent “virtual being” real enough for one to truly fall in love with.

SIGGRAPH: Tell us about the process of creating PaperMATA and DrawmaticAR. How long did each take? What were the biggest challenges you faced?

YC: Both started as what I now call “software sketches” for half-day hack days (though my standard for that nowadays includes finishing the app and submitting it to the App Store).

DrawmaticAR was actively in development from most of February until late March. It was a pleasure to work with educators to integrate all sorts of fun school events, like the Valentine’s Day “turn my words into candy hearts” special (below), the St. Patrick’s Day “hunt for lucky charms” special, the Women’s Day “famous woman AR” newsprint popup, and dozens more fun celebratory features. Both the foreign-language mini-AR flashcards and the feature that pops the corresponding 3D model directly onto its written word came from educators’ requests.

The biggest challenge was battling my fear of venturing too deep into a rabbit hole of happy fans who, despite their numbers, couldn’t justify the financial cost of my time. Eventually, those real-life constraints led me to close down both projects in April. I’m grateful for the opportunity to revamp these projects, albeit just the weekend before SIGGRAPH [2020] in August!

SIGGRAPH: Beyond UV-mapping, PaperMATA recognizes objects drawn on paper and assigns characteristics to identified objects. How did you achieve this?

YC: It’s now very easy to do transfer learning for object recognition. In fact, these days, you literally just drag and drop your data into the Create ML app on your Mac: no obscure Python versions, libraries, or install-fests needed. All you need is a sleek Apple UI, no console, and ML made accessible for script kiddies. I collected a ton of hand-drawn common objects to train a model. (OK, since I can only draw about 100 unique things in an hour and I needed a training set of thousands, I cheated a bit by automatically converting a number of photos to sketches with Photoshop actions.)
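The photo-to-sketch step above was done with Photoshop actions, which aren't described here. As a rough illustration only, the following is a minimal sketch of one common substitute recipe (the "invert, blur, color dodge" pencil-sketch trick), written in plain Python over a grayscale image stored as a 2D list of values in [0, 255]. The function names `box_blur` and `photo_to_sketch` and the `radius` parameter are hypothetical, and this is not the pipeline used for PaperMATA.

```python
def box_blur(img, radius):
    """Average each pixel with its neighbors inside a (2*radius+1)^2 window,
    ignoring positions that fall outside the image."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def photo_to_sketch(gray, radius=2):
    """Hypothetical pencil-sketch filter: color-dodge the grayscale image
    with a blurred copy of its inverse. Input and output are in [0, 255]."""
    inverted = [[255.0 - p for p in row] for row in gray]
    blurred = box_blur(inverted, radius)
    sketch = []
    for row_g, row_b in zip(gray, blurred):
        out_row = []
        for g, b in zip(row_g, row_b):
            # Color dodge: base * 255 / (255 - blend), clamped to white.
            if b >= 255.0:
                out_row.append(255.0)
            else:
                out_row.append(min(255.0, g * 255.0 / (255.0 - b)))
        sketch.append(out_row)
    return sketch
```

With this filter, flat regions wash out to white while intensity edges stay dark, which is roughly why the output resembles a line drawing. A real augmentation pipeline would more likely use an image library and a Gaussian blur instead of nested loops.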

SIGGRAPH: How will DrawmaticAR influence the future of interactive storytelling?

YC: Do you remember those young author writing contests for first graders, where kids got to write and illustrate their own story, the teacher would read the winning story, and everyone would be in awe? And then the critical, judgmental, artsy kid (me) would judge the winning entry for being bad at illustration. Well, what if you could take the words straight from the story itself and instantly turn them into a wondrous, 3D-animated feature film that could be mistaken for a Pixar production? If I’m still working on this project 10 years in the future, that’s what it’d become!

SIGGRAPH: DrawmaticAR took home the Audience Choice award at Real-Time Live!, congratulations! Tell us about your experience presenting the demo during the event.

YC: We all signed into a speakers’ Zoom, with each device on its own separate Zoom account session, and the conference software combined these sources. The teams had an initial test session in late July. On the day of the event, we did a 30-minute equipment-check rehearsal and a full dress rehearsal. I’m very grateful for the feedback I received from the Real-Time Live! team in these sessions. I am incredibly bad at improv (because my mind’s often everywhere), and it was the first time I’d had a dress rehearsal before a live pitch.

I was worried about the latency from Zoom. I was worried about accidentally disconnecting. I was worried that my mind would wander, that I’d ramble incoherently, and that caffeine and sleep loss would get the best of me. But somehow (after a few seconds of stunned “OMG it’s time, go, go, go!”), I got into it, which for me feels like being immersed in my own charmed story and fantasy world. Time flew by, and I had to live-edit my final takeaway: “Imagine a future of storytelling where you can go straight from words to an interactive 3D AR story, mistaken to be a multimillion dollar production, automagically! Beloved by thousands of kids worldwide, DrawmaticAR — live on the App Store and Google Play — is a first iteration in that seamless direction.”

When the last presentation ended, I was about to sign off to walk my corgi. Due to area wildfires, the AQI in San Francisco had been bad but improved during [the] Real-Time Live! [event]. I had already unplugged the Shure microphone I’d purchased for my at-home presentation setup. I didn’t expect my indie, zero-budget project to win.

I don’t remember what I said in my acceptance speech. I usually have a lot to say, and I always run out of time. For once, I think I ended early. I considered blurting out, “Support me on patreon.com/yosun,” but I didn’t want to sound too indie (though I am).

I rationalized my win by noting that mine was the only demo with live audience participation and that, instead of presenting technology achieved as an end in itself, mine was a vision not yet realized. Hoping primarily to inspire, I was presenting an early vision of what things could be.

That, and I have my secret high-rise window corgi weapon loaded and ready!

SIGGRAPH: What do you hope virtual SIGGRAPH participants will take away from PaperMATA and DrawmaticAR?

YC: Both are early incarnations of a future generation of software that tries to make the process of digital creation as natural and easy for humans as possible. Traditional infrastructures have students spending years mastering a piece of digital software, but that model predates advances in both AI and HCI that synergize with real-time data. Soon, we may arrive at a tech norm of instant, seamless 3D digitization of any real-world art or object, or of going from a story concept automagically to a feature-length animated 3D production, all with software that has become transparent, with no learning curve.

I hope that my indie, one-woman projects can inspire folks working from home to take the initiative to build their own crazy projects. Perhaps we’ll see a trend of indie projects winning future Real-Time Live! awards. I do believe it’s a Moore’s Law of some sort.

SIGGRAPH: Your corgi stole the show during Real-Time Live! Tell readers about your pup!

YC: You can find my corgi on Instagram.

She also has several apps, including one that overlays AR videos on her Instagram photos, as well as a fun ARKit/ARCore app dubbed Volumetric Corgi. And … she has her own API, so you can get your corgi photo fix!

She hosted a SIGGRAPH Zoom creativity jam (draw, model, make music) and is starting her own virtual escape room designed for conferences.

If you missed SIGGRAPH 2020, you can still think beyond with us — and check out Yosun’s sessions — when you register by 11 September.

Yosun Chang is an augmented reality hacker-entrepreneur-artist who has been writing software as a freelance professional and artiste since 1997. She loves to build innovative software demos that turn emerging technologies into Arthur C. Clarke-style magic. Indie for life, she has won too many hackathons to count, including two TechCrunch Disrupt grand prizes, three Intel Perceptual Computing first places, an AT&T IoT Hackathon grand prize, a Warner Brothers Shape Hackathon grand prize, and hundreds more. Her work has been shown at SIGGRAPH 2015–2020 and Ars Electronica, Art Basel Miami, Augmented World Expo, AWS re:Invent, CES, CODAME, Developer World Congress, GDC, Exploratorium, Maker Faire, Mobile World Congress, MoMath, MoMA, O’Reilly Where Conference, Rubin Museum, Tech Museum of Innovation, Twilio Signal, and more.