“AnisoMPM: Animating Anisotropic Damage Mechanics” © 2020 University of Pennsylvania

The SIGGRAPH 2020 Technical Paper “AnisoMPM: Animating Anisotropic Damage Mechanics” offers a robust, general approach that couples anisotropic damage evolution and anisotropic elastic response to animate the dynamic fracture of isotropic, transversely isotropic, and orthotropic materials. To learn more about this research, we sat down with Joshuah Wolper, Jiecong “Jacky” Lu, Minchen Li, and Chenfanfu “Fanfu” Jiang of the University of Pennsylvania, four of the eight researchers behind the project. Rounding out the team are Meggie Cheng, Yu Fang, and Ziyin Qu of the University of Pennsylvania, and Yunuo Chen of the University of Pennsylvania and the University of Science and Technology of China.

SIGGRAPH: Talk a bit about the process for developing this research. What inspired the project?

Joshuah Wolper (JW): For these types of physical simulation projects, we usually start with a very simple idea, or a very simple effect that we want to model. We spend a lot of time talking in the lab about different materials we want to explore or different effects we want to see in graphics; we have somewhat of a running list of these ideas, and as CG research expands and grows in different directions, we start to see certain ideas from this pool stand out as promising targets for future projects! As for the inspiration, there are a few clear influences. Personally, I’ve always been so excited and inspired by the natural world: taking a closer look at the way the grass sways in the wind, or how raindrops collect and move down a window pane, or, of course, how bread or meat slowly stretches and then tears apart as you pull. It was this excitement about the natural world that led us down the path of modeling material fracture last year when we presented CD-MPM, our isotropic predecessor to AnisoMPM. However, this year, we were additionally inspired by a drive to expand on CD-MPM: to not only bring exciting fracture approaches to graphics, but also make them extremely generalizable to different types of materials with different underlying structures. Research is so very iterative, and by the time a paper wraps up we already have an outpouring of ideas on how we would’ve wanted to expand on it or change it, and I think that this drives ideas, too. Finally, as one of the few groups focusing on the Material Point Method (MPM), we are also extremely motivated and inspired by all of the incredible MPM works coming out in the mechanics and CG communities alike!

But back to the process… Once we become excited about some physical effect (e.g., anisotropic fracture), we face the unique challenge of exploring what has already been done in mechanics and engineering, and then figuring out which parts would be useful to introduce to the computer graphics community (through the CG-specific lens of speed and visual fidelity). Fortunately, hundreds of years of scientific research have given us a deep understanding of myriad physical processes. It is this expansive body of knowledge and theory that we seek to explore and develop new computational models for. For example, continuum damage mechanics (the core of our damage theory in both AnisoMPM and CD-MPM) has its roots in the mid-20th century, but only in recent years have we developed the computing power and techniques to model these theories using millions of particles in MPM!

SIGGRAPH: Let’s get technical. How did you develop the animation technique in terms of execution? Our audience loves details!

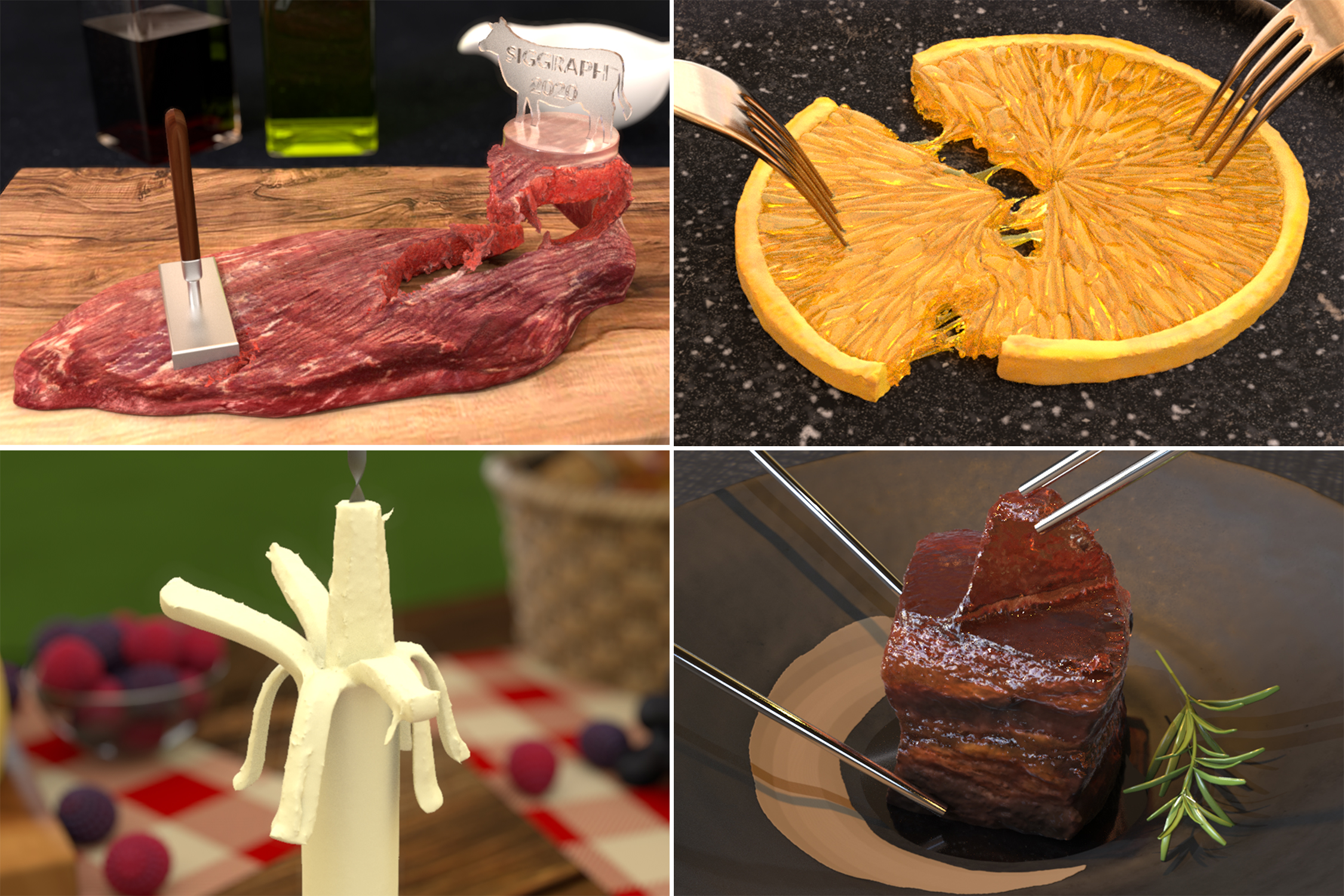

JW: AnisoMPM has eight official authors. As a large group, we benefited tremendously from the breadth of perspectives, expertise, and foci that we span together. Each contributing author brought something unique to the project, and I couldn’t be more humbled by my co-contributors. Outside of the official authors, we also had helpful conversations with one of our frequent collaborators, Johan Gaume of EPFL, as well as some artistic help in making key figures from my partner Matt Alexander. I also worked with Matt to develop the Technical Papers Fast Forward video for AnisoMPM, and we are SO excited to share it with [everyone]! I won’t say too much, but think ’70s and ’80s horror movies. I was very inspired by some of the feedback we’ve gotten on the demos being kind of … gross. In fact, we have so many food-related demos because MPM gives its best results when modeling soft, elastic materials, and food makes up the most palatable subset of soft, squishy materials for us to tear apart. We dabbled in some non-food demos, but they tended to be a little horrifying. I tried some really crazy things when making demos this year, and so many were scrapped because they were too wild or gross. There’s one that still makes me laugh where I just had a Thanksgiving turkey in an enclosed cube and set up this wild barrage of bullet collision objects to go through it from all these different directions. It was just way too insane and didn’t make the cut.

The development of AnisoMPM really picked up toward the end of last summer [in 2019], when I was exploring the mechanics literature for work on modeling anisotropic elasticity and anisotropic damage. Choosing an approach for anisotropic damage was probably the most difficult part, because there are just so many approaches that could work. In mechanics, fracture is usually approached through either “fracture mechanics” or “continuum damage mechanics.” The former models the stresses at the crack tip, which requires rigorously tracking and modeling the discontinuity as it forms during simulation. Conversely, the latter (CDM) models a field of damage variables that evolves over time and yields a “smeared” crack based on those damage values. We chose to stick with CDM because it obviates the need for complex remeshing techniques and fits so well within the framework of MPM. As for the elasticity approach, we actually began with a simpler model, but realized it had some flaws that we wished to correct. We therefore based our anisotropic elasticity largely on the QR decomposition-based elasticity used to simulate cloth back in 2017, because of its robust formulation and natural pairing with MPM. However, we found that this model lacks a treatment for extremely stiff materials, so to remedy this shortcoming we developed an additional inextensibility approach that is more suitable for rigid materials.
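To make the QR idea concrete, here is a minimal 2D sketch, assuming the first column of the deformation gradient F is aligned with the material's fiber direction and that det F > 0. It computes F = QR by Gram-Schmidt with a positive diagonal and shows that R, the quantity an energy would be written in, is unchanged when the deformed configuration is rigidly rotated. The function names and the quadratic energy in `fiber_energy` (with stiffnesses `k_fiber`, `k_shear`, `k_cross`) are hypothetical illustrations, not the energy used in AnisoMPM or in the 2017 cloth work.

```python
import math


def qr_2x2(F):
    """Gram-Schmidt QR of a 2x2 deformation gradient F (list of rows).

    Assumes column 0 of F is the deformed fiber direction and det F > 0,
    so Q is a rotation and R is upper triangular with a positive diagonal.
    """
    c0 = (F[0][0], F[1][0])  # deformed fiber direction
    c1 = (F[0][1], F[1][1])  # deformed cross-fiber direction
    r11 = math.hypot(c0[0], c0[1])  # stretch along the fiber
    q0 = (c0[0] / r11, c0[1] / r11)
    r12 = q0[0] * c1[0] + q0[1] * c1[1]  # shear of column 1 onto the fiber
    w = (c1[0] - r12 * q0[0], c1[1] - r12 * q0[1])
    r22 = math.hypot(w[0], w[1])  # stretch normal to the fiber
    q1 = (w[0] / r22, w[1] / r22)
    Q = [[q0[0], q1[0]], [q0[1], q1[1]]]
    R = [[r11, r12], [0.0, r22]]
    return Q, R


def rotate(theta, F):
    """Left-multiply F by a rotation of angle theta (a rigid motion)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * F[0][0] - s * F[1][0], c * F[0][1] - s * F[1][1]],
            [s * F[0][0] + c * F[1][0], s * F[0][1] + c * F[1][1]]]


def fiber_energy(R, k_fiber=10.0, k_shear=1.0, k_cross=2.0):
    """Hypothetical quadratic energy in the entries of R (illustration only)."""
    return (0.5 * k_fiber * (R[0][0] - 1.0) ** 2
            + 0.5 * k_shear * R[0][1] ** 2
            + 0.5 * k_cross * (R[1][1] - 1.0) ** 2)


if __name__ == "__main__":
    F = [[1.2, 0.3], [0.0, 0.9]]
    _, R = qr_2x2(F)
    _, R_rot = qr_2x2(rotate(0.7, F))
    print(R)
    print(R_rot)  # same entries up to round-off: R ignores rigid rotation
    print(fiber_energy(R), fiber_energy(R_rot))
```

Because R does not change under rigid rotation, any energy written in terms of R is frame-indifferent, and R[0][0] isolates the stretch along the fiber, which is the natural quantity to penalize or constrain if one wanted that direction to be nearly inextensible.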

After the success of CD-MPM, I was really driven to expand dynamic fracture animation to anisotropic materials, especially since most materials we experience in our daily lives are anisotropic! Whether it’s the shirt on your back or the steak you’re cutting into, anisotropy follows us every day. So, naturally, it was a really exciting goal to develop an approach that can model not only isotropic materials, but also transversely isotropic and orthotropic ones. This flexibility gives AnisoMPM the ability to model just about any type of anisotropic material. Interestingly enough, the non-local geometric CDM approach that we take in AnisoMPM is also faster and arguably more intuitive than our phase field fracture approach in CD-MPM. The two theories are closely linked and ultimately boil down to very similar system solves; however, the approach we take in AnisoMPM allows for both explicit and implicit damage solves (giving serious speed improvements compared with CD-MPM), and it also lets us very intuitively add the underlying anisotropic structure that guides fracture. Ultimately, damage can be thought of as an energy balance: a material undergoes stresses that locally weaken its elastic response, and, as fractures form, energy is released from the system. Specifically, our damage evolution is posed as a balance between a crack driving force, which is anisotropically driven by underlying structural tensors, and a geometric resistance to fracture, which is related to the damage Laplacian and the diffuse crack regularization parameter.
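The balance described above can be illustrated with a minimal 1D sketch. It assumes a generic, normalized phase-field-style (AT2-like) damage equation, (1 + D) d - l² d'' = D, equivalently D(1 - d) = d - l² d'', where D ≥ 0 is a given crack driving force per cell and l is the diffuse crack regularization length: the left side is the driving force, the right side the geometric resistance built from the damage Laplacian. This is a stand-in for the structure described in the answer, not AnisoMPM's exact equation, and the structural-tensor weighting that makes D anisotropic is left out (D is simply an input array). The names `damage_step` and `solve_tridiagonal` are hypothetical. The sketch performs one implicit solve with zero-flux boundaries, then enforces irreversibility and d ≤ 1.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n
    g = [0.0] * n
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    g[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        g[i] = (rhs[i] - lower[i] * g[i - 1]) / denom
    x = [0.0] * n
    x[-1] = g[-1]
    for i in range(n - 2, -1, -1):
        x[i] = g[i] - c[i] * x[i + 1]
    return x


def damage_step(driving, d_prev, l, h):
    """One implicit damage update on a uniform 1D grid of spacing h.

    Solves (1 + D_i) d_i - (l/h)^2 (d_{i-1} - 2 d_i + d_{i+1}) = D_i
    with zero-flux ends, then keeps damage monotone and capped at 1.
    """
    n = len(driving)
    a = (l / h) ** 2  # strength of the geometric (Laplacian) resistance
    lower = [-a] * n
    upper = [-a] * n
    diag = [1.0 + D + 2.0 * a for D in driving]
    # Zero-flux boundaries: mirror the end value into the missing neighbor.
    lower[0] = 0.0
    upper[-1] = 0.0
    diag[0] -= a
    diag[-1] -= a
    d = solve_tridiagonal(lower, diag, upper, list(driving))
    # Damage is irreversible: never let it heal below the previous value.
    return [min(1.0, max(dn, dp)) for dn, dp in zip(d, d_prev)]
```

For example, `damage_step([0.0] * 10 + [3.0] + [0.0] * 10, [0.0] * 21, l=2.0, h=1.0)` produces damage that peaks at the loaded cell and decays smoothly on both sides. Raising `l` spreads the same driving force over more cells, giving a wider, more “smeared” crack; an anisotropic model would shape `driving` with the structural tensors before this solve.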

Jiecong “Jacky” Lu (JL): One of the biggest challenges was to create photorealistic renderings of the simulation, especially of the fracture surfaces, because of the abundance of intricate details in those areas. For example, the texture of the fracture surface of the flank steak needs to match that of the outer surface closely, yet organically, in order to be consistent with the grain direction. At the same time, it also needs to be distinguishable from the outer surface because of the unique muscle and fat distribution in the internal structure. Similarly, the Dong Po pork belly has very fine, interwoven layers of skin, fat, and muscle tissue with very different material properties, and presenting these details accurately and realistically proved to be very challenging. Different layers have very different levels of translucency, reflection, and refraction, and the combination of all the layers together creates a variety of subtle details. To ensure photorealistic and accurate rendering of the torn surface, we collected and studied a large number of reference photos and videos. We also captured high-quality 3D photogrammetry data of an actual Dong Po pork belly (cooked by Fanfu’s step-mother) with submillimeter detail to serve as the starting point of the simulation and rendering.

After covering the digitally simulated pork belly with many layers of maps that encode specific texture and material information, we used physically based rendering with real-world camera lens and lighting parameters to produce the footage shown in the video. For the orange, we actually modeled every single grain of the pulp, in combination with procedural elements that follow an orange’s structural details based on reference images and videos. It’s worth pointing out that the material properties of the individual grains in an orange slice also vary greatly, and we made sure to capture all these details in the final rendering. Overall, in addition to producing photorealistic renderings that match reality as closely as possible, we aimed to create a synthetic experience for our audience, as we believe every image tells a unique story.

SIGGRAPH: What do you find most exciting about the final research you are presenting?

JW: The most exciting part of AnisoMPM to me is just how completely it eclipses PFF-MPM (the phase field based fracture approach in my last work, CD-MPM) in terms of flexibility and speed. Not only can it model isotropic materials faster and more efficiently than PFF-MPM, but it does all of this while offering great flexibility. AnisoMPM models all types of anisotropy with fewer parameters to tune and offers both explicit and implicit damage solves, allowing for extreme speed improvements with explicit damage and larger time steps with implicit damage, all while adding embedded inextensibility for super-stiff materials. The only thing from CD-MPM that we cannot replicate is the gorgeous debris-laden fracture associated with our Non-associated Cam-Clay plasticity (e.g., the pumpkin and watermelon demos), but that’s a small price to pay for all of these improvements!

Minchen Li (ML): The most exciting thing is that our results match reality so well without having to tune many parameters or do the tedious post-processing work that some traditional methods usually require.

JL: I am most excited about the vast number of potential applications of this new technology in various industries beyond the field of traditional computer graphics.

SIGGRAPH: What’s next for “AnisoMPM: Animating Anisotropic Damage Mechanics”?

JW: Most realistically, I would love to see AnisoMPM adopted into graphics software like Houdini, as well as in-house simulation software, so that we can start seeing more and more creative uses for anisotropic fracture in animation! More quixotically, it’s really exciting to muse about AnisoMPM’s tech being used in real time for hard-hitting, real-world applications, such as virtual surgery or even video games. However, the relatively slow speed of MPM simulation severely limits real-time simulation on a single workstation, but I’m really excited for the day when that’s no longer the case. I think there’s also huge potential for AnisoMPM to lend itself to other realms where physical accuracy is important; for example, we found that AnisoMPM produces bone fractures that match qualitatively with known bone fracture patterns, and I would love to see this expanded on in the future.

I’m so elated whenever our work gets some buzz, like in the recent Gizmodo article, because it’s just so gratifying seeing people excited about our work and about computer graphics! It’s hard being tight-lipped about the projects I’m working on, but it is so worth it in the end when we can finally reveal it all. I think the article raises a couple of interesting points, the first being that they acknowledge that AnisoMPM is nowhere near real-time applications yet (despite the headline way overpromising our real-time capabilities [laughs]). The second is this sort of age-old question of ethics in research. It’s hard to see this research and not imagine all of the unsavory applications of something so focused on destruction; this is certainly something I’ve grappled with, and a huge part of why we chose food for almost all of our demos. I’m a huge fan of horror movies and games, and am not at all opposed to realistic depictions of gore for effect, but I would hate to see this used for other ill-intentioned applications, such as ballistics testing. As researchers on the forefront of scientific and technological progress, it’s our responsibility to hold these questions of ethics close to heart.

ML: Gizmodo’s view is certainly interesting; we believe that in the near future, applications of our methods in virtual surgery, gaming, visual effects, and more are certainly possible and will make a bigger impact.

JL: Gizmodo’s take on this research raises a controversial yet important ethical question with regard to how far the boundaries should be pushed in terms of realistic depictions of gore and violence in video games. I think this topic deserves further discussion and assessment by industry professionals and within existing regulatory frameworks.

That said, I would like to highlight a few other interesting potential applications of the technology introduced by AnisoMPM. For example, because it is physics-based, AnisoMPM can potentially be used for non-destructive food grading and food processing simulation. In addition, as mentioned at the end of the Gizmodo article, AnisoMPM would be of great use in virtual simulation and training for medical professionals and healthcare workers. AnisoMPM could also contribute positively to environmental protection and the prevention of animal cruelty. The techniques introduced by AnisoMPM can be integrated into existing CGI and visual effects pipelines to further reduce unnecessary injury and harm to animals during the production of films and TV programs. In terms of environmental protection, AnisoMPM can be used to reduce the need to dissect real animals for educational purposes. As an alternative, this new technology can create digital experiences that are not only physically accurate, but also more accessible and less costly for instructors and students.

Chenfanfu Jiang (CJ): Convincingly simulating anisotropic, inhomogeneous, and heterogeneous materials has always been an intriguing yet challenging topic in both physics-based animation and computational science. One of our major motivations for developing AnisoMPM was precisely to make it a prototype whose extensions can directly benefit areas beyond computer graphics. As my students mentioned, in addition to food processing, this research direction also naturally shows a lot of promise for better simulation of the human body. Muscles, porous structures in human bones, and the vascular system form extremely complex patterns that are very difficult to resolve with most traditional simulation techniques. Our group will keep pushing in this direction to see its use in healthcare and to help eliminate animal experiments. Furthermore, glacier calving, where ice breaks off into the ocean, is predicted to be one of the largest future contributors to sea-level rise. Ice fracture-induced phenomena can be dramatic, as illustrated by the recent collapse of ice shelves in Antarctica. With Josh and other collaborators, we have in fact already been investigating new models, building on what we learned from AnisoMPM, that can predict ice sheet breakup.

SIGGRAPH: Share your all-time favorite SIGGRAPH memory. If you have never participated, tell us what you are most excited about!

JW: I’ve attended SIGGRAPH twice, but last year, with CD-MPM, was my first time presenting. It was a WHIRLWIND. It is so incredibly satisfying going from spending 6–8 months on a project that you can’t talk about to finally sharing it with the world! It really is so special that all of a sudden there’s a whole community of people eager to talk with you about your work and share their work, and I just got so much out of the whole experience. I also absolutely love the interdisciplinary focus of SIGGRAPH and the computer graphics community as a whole. It really feels like this wild, artistic, brilliant family of eccentric aunts and uncles all working together; I can’t get enough! I think my specific favorite memory, though, was during my presentation on CD-MPM: one of our key demos was a Jello T-Rex that we shot with a high-speed bullet. You could see the debris-laden aftermath with the internal debris spraying out the back, and I just remember the most audible GASP from the audience upon showing that clip. Garnering such a visceral reaction was really cool, especially since good animation always seeks to evoke some emotion or response.

ML: My favorite memory about SIGGRAPH is the chance to network with computer graphics researchers and practitioners all over the world. It is always wonderful to see old friends, make new friends, and chat about what we all have passion for.

JL: I have not attended SIGGRAPH before, but I already have a fond memory of SIGGRAPH since another research project that I worked on, “CD-MPM: Continuum Damage Material Point Methods for Dynamic Fracture Animation”, was presented at SIGGRAPH 2019. I am very excited about my first SIGGRAPH, especially since it’s the very first time it will be held virtually!

CJ: I have attended SIGGRAPH every year since I was a Ph.D. student. My favorite thing about SIGGRAPH is the once-a-year chance to meet, talk, and party with friends, research colleagues, and talented students. A lot of fabulous research ideas are born over lunch (or sometimes at a bar) during SIGGRAPH while chatting with all the smart people who share the same background and enthusiasm. Some of this will be less convenient in this year’s virtual format, but I look forward to seeing the new opportunities that virtual conferences could bring us.

SIGGRAPH: What advice do you have for someone looking to submit to Technical Papers for a future SIGGRAPH conference?

JW: I think the first key is to pick research topics that you’re excited and passionate about, because the work will reflect that. I think the trickiest parts of writing a good paper are totally separate from the theory. A good paper should tell a story, make its contributions clear, make past efforts clear to highlight why your work matters, and make the theory sections as universally accessible as you can. Clear writing and didactic flow are huge! Having a breadth of demos helps, too: a handful of demos to wow your audience, as many demos as you need to illustrate your key points, and then even more for good luck. Figures should be clear, concise, and each tell a story. I also think that it’s worth it to put the extra effort into the video, the Fast Forward, and the supplement to make them just as high quality as your amazing new research. Think of the video and the abstract as your first impressions to the world! I like leaving a project feeling like every layer has the same amount of polish and effort, but it’s also pivotal to know where you can cut corners — especially in a pinch. Ultimately, if I know my work will be read over and over for years to come, I want to make sure it’s a good read.

ML: My advice would be to make sure there are enough experiments in the paper to solidify the work and persuade people of how compelling it is. This will really make the paper stand out and be very convincing to potential users and reviewers.

CJ: Publishing a good SIGGRAPH paper requires working on a research topic diligently and thoroughly. To achieve this, it is extremely important to pick a research topic for which you would happily try everything to overcome the challenges and make things work. It is also crucial to make sure you can keep gaining a feeling of satisfaction (and a sense of achievement) as you progress on the chosen topic.

Registration for the virtual SIGGRAPH 2020 conference will open soon.

Joshuah Wolper is a fourth-year Ph.D. student and Harlan Stone Fellow at the University of Pennsylvania. A graduate of Swarthmore College with a B.S. in engineering and a B.A. in computer science, he hopes to pursue scientific computing and modeling with a social conscience. Forever fascinated and motivated by the natural world, he currently focuses his research on modeling natural phenomena such as fracture and topology change using MPM discretization.

Minchen Li is a Ph.D. candidate at the University of Pennsylvania advised by Professor Chenfanfu Jiang. His research interests include numerical optimization and simulation for computer graphics and beyond, especially physics-based animation and geometry processing. He is a recipient of the 2020 Adobe Research Fellowship. Minchen’s current goal is to devise robust, accurate, and efficient methods that benefit visual effects, geometric design, robotics, engineering, virtual reality, games, and more.

Jiecong “Jacky” Lu is a rising senior pursuing a BSE in computer science with a concentration in digital media design and a minor in Spanish at the University of Pennsylvania. He has also submatriculated into the graduate program in Computer Graphics and Game Technology at Penn Engineering. Jacky’s passions and interests lie at the intersection of technology and business. With a strong interdisciplinary background in computer graphics, computational science, and the humanities, Jacky seeks to use his knowledge and skills to solve meaningful problems and create a positive impact in various fields and industries.

Chenfanfu Jiang has been an assistant professor of Computer and Information Science at the University of Pennsylvania since June 2017. He is a faculty member in Penn’s SIG Center for Computer Graphics, the Penn Institute for Computational Science (PICS), and the Graduate Group in Applied Mathematics and Computational Science (AMCS). Before joining Penn, he was a postdoctoral researcher at UCLA. He received a Ph.D. (2015) in computer science from UCLA, jointly advised by Professor Joseph Teran and Professor Demetri Terzopoulos, and a B.S. (2010) in plasma physics in the Special Class for the Gifted Young (SCGY) program from the University of Science and Technology of China. He was the recipient of UCLA’s Edward K. Rice Outstanding Doctoral Student Award in 2015 for his Ph.D. work on photorealistic physics-based simulation. His research in the Finite Element Method and the Material Point Method improves the robustness, efficiency, and scope of simulations of continuum solid and fluid materials, leading to impacts in computer graphics, computational physics, high-performance computing, computer vision, and robotics. He received the NSF CRII award in 2018 and the NSF CAREER award in 2020.