© 2019 Yosun Chang

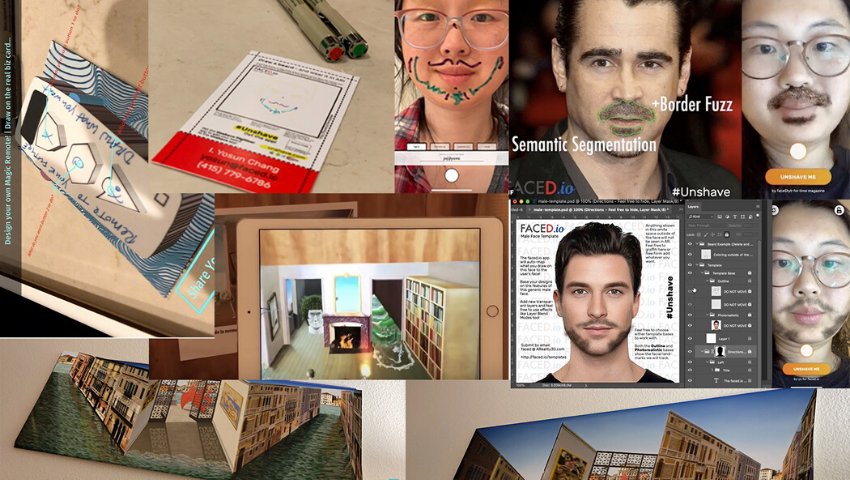

At SIGGRAPH 2019, Yosun Chang (AReality3D, Permute.xyz) shared Project SUR from Sur.faced.io during Appy Hour. Project SUR allows users to draw on a 2D medium, like paper or Photoshop, and instantly create face masks and other special effects in AR. We caught up with Chang to learn about the inspiration behind the project, how the app was developed, and how it can be used moving forward.

SIGGRAPH: What inspired you to create Sur.faced.io as an app?

Yosun Chang (YC): I see Sur.faced.io as a generalized way of treating real-world surfaces as art canvases that can be instantly digitized for AR (and also augmented on). Colloquially, I call the entire process, a two-way street between physical reality and spatial computing worlds, “getting surfaced.”

I wanted to try something different and also more down to earth. Paper is good for so many things other than just being a fiducial. Also, the real world is the domain of real randomness, chaos effects, and tangible art-creation mediums: you can tie-dye, spill coffee, splatter sprinkles, press flowers, and more to decorate a piece of paper. So, why not utilize what’s available and fuse it all together?

SIGGRAPH: Tell us about how you developed the app.

YC: At a high level, the app relies on this generalizable mechanism:

- Start with your AR fiducial marker: it gives you an a priori, fully deterministic 2D grid that can be mapped 1:1 to, say, the UV-mapped surface of a 3D model.

- It’s more fun when there is apparent open-endedness in the mapping. Segment out the “white space,” where tracking markings aren’t printed, and turn that into “creative space” to be able to 1:1 map whatever the user draws (for example, spills, flower-presses, or splatters) to your digital entity.
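The two steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Sur.faced.io’s actual code: it assumes the AR tracker has already rectified the camera view of the marker into a top-down image the same size as the texture, and that the marker designer knows a priori which pixels carry printed tracking features (the `printed` mask). All names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]   # rows of pixels; row 0 is the top of the rectified marker
Mask = List[List[bool]]   # True where the marker design prints tracking features


def pixel_to_uv(x: int, y: int, width: int, height: int) -> Tuple[float, float]:
    """Map the center of marker pixel (x, y) to UV space, with v pointing up."""
    return (x + 0.5) / width, 1.0 - (y + 0.5) / height


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Inverse lookup (nearest pixel), clamped to the marker bounds."""
    x = min(width - 1, max(0, int(u * width)))
    y = min(height - 1, max(0, int((1.0 - v) * height)))
    return x, y


def extract_creative_space(marker: Image, printed: Mask, fill: RGB = (255, 255, 255)) -> Image:
    """Copy the user's marks 1:1 into a texture, blanking the printed tracking features."""
    return [
        [fill if printed[y][x] else pixel for x, pixel in enumerate(row)]
        for y, row in enumerate(marker)
    ]


# Toy 2x2 "marker": two pixels are printed tracking marks, two are creative space.
marker = [[(10, 20, 30), (0, 0, 0)],
          [(0, 0, 0), (200, 100, 50)]]
printed = [[False, True],
           [True, False]]
texture = extract_creative_space(marker, printed)
```

Because the marker grid and the texture share one pixel lattice here, the mapping is just the identity plus a flip of the vertical axis; whatever the user adds in the white space lands on the matching spot of the model’s UV layout.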

And, in this case, the “digital entity” can be anything, such as:

- Your real face. The popular AR face mask is simply a simplified mesh built in real time from feature points recognized on your face, most commonly dlib’s 68 facial landmarks. This mesh can also be UV-mapped in real time, so the texture maps 1:1 accordingly.

- Note, in its current incarnation, I haven’t figured out how to wrangle OpenCV for Unity to work with Vuforia. So, the faced.io app with the AR try-on for your face is separate from the faced.io digitizer app for onboarding content. One easy way to connect content between two separate apps you make is to have one app save content to a server that the other can access through a keycode.

- The classic case: a 3D-model incarnation of the 2D fiducial, where the mapping goes from paper to the UV-mapped texture (likely with some assumptions, such as the back-facing parts of the 3D character having the same color as parts of the front).

- The AR preview of a folded origami model, where the fiducial is the AR crease pattern, as in PlayGAMI.

- The AR preview of a T-shirt or shoe, where the fiducial is the fabric pattern, as in the new example of Sur.faced.io I showed at SIGGRAPH 2019.
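For the face case described above, the key idea is that vertex positions change every frame while the UVs stay fixed. The sketch below assumes a landmark detector (for example, dlib’s 68-point shape predictor) supplies both the landmarks of the generic face printed on the card and the live landmarks each frame; the triangle list stands in for a hypothetical fixed triangulation, and none of these names come from faced.io.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Triangle = Tuple[int, int, int]


def template_uvs(template: Sequence[Point], width: int, height: int) -> List[Point]:
    """Fixed UV per landmark, from its pixel position on the printed template face.

    v is flipped because image rows grow downward while UV v usually grows upward.
    """
    return [(x / width, 1.0 - y / height) for x, y in template]


@dataclass
class FaceMesh:
    positions: List[Point]     # live landmark positions, updated every frame
    uvs: List[Point]           # fixed per-vertex UVs taken from the template
    triangles: List[Triangle]  # fixed vertex indices (hypothetical triangulation)


def update_mesh(live: Sequence[Point], uvs: Sequence[Point],
                triangles: Sequence[Triangle]) -> FaceMesh:
    """Rebuild the mask mesh for one frame: new positions, same UVs and topology."""
    if len(live) != len(uvs):
        raise ValueError("live landmarks and template UVs must have the same count")
    return FaceMesh(list(live), list(uvs), list(triangles))


# Toy 3-point "face" on a 100x100 card instead of the full 68 landmarks.
uvs = template_uvs([(0, 0), (100, 0), (50, 100)], 100, 100)
frame = update_mesh([(310, 200), (420, 205), (365, 330)], uvs, [(0, 1, 2)])
```

Since every frame reuses the same UVs, whatever was drawn at a landmark’s spot on the paper card stays glued to that landmark as the face moves.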
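The keycode handoff between the digitizer app and the try-on app could look roughly like this. As a hedged sketch, an in-memory dictionary stands in for the server; in a real deployment `save` and `load` would be HTTP endpoints, and the class and method names are hypothetical.

```python
import secrets
import string
from typing import Dict, Optional

ALPHABET = string.ascii_uppercase + string.digits


class KeycodeStore:
    """Stand-in for the shared server: one app uploads, the other fetches by keycode."""

    def __init__(self, code_length: int = 6) -> None:
        self._items: Dict[str, bytes] = {}
        self._code_length = code_length

    def save(self, content: bytes) -> str:
        """Store content (e.g. a texture PNG) under a fresh random keycode."""
        while True:
            code = "".join(secrets.choice(ALPHABET) for _ in range(self._code_length))
            if code not in self._items:  # retry on the rare collision
                self._items[code] = content
                return code

    def load(self, code: str) -> Optional[bytes]:
        """Fetch content by keycode, tolerating stray whitespace and lowercase input."""
        return self._items.get(code.strip().upper())


store = KeycodeStore()
code = store.save(b"texture-png-bytes")  # digitizer app uploads, shows the code
fetched = store.load(code.lower())       # try-on app downloads using the typed code
```

A short uppercase-and-digits code keeps it easy to type on a phone; a production service would also want expiry and rate limiting.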

SIGGRAPH: Where did the idea of allowing users to draw on a 2D medium, like paper or Photoshop, before the creation is transferred to AR come from?

YC: Here is an attempt at an origin story, though, as is common with creative people, the sources and prior projects might be many … In 2012, I created an iOS app prototype for a client that let people color in a coloring book page to instantly visualize what a color-textured 3D model of the subject might look like, augmented on that same colored page. (Of course, the coloring book page was actually an appropriately high-fidelity QCAR image target; think of it as a generalized AR marker/fiducial that allows for robust designs without requiring a bulky, thick black border.)

In 2015, I made PlatoAR, which featured “magical graph paper.” Effectively, these were “math worksheet fiducials” designed to be robust enough that tracking would still work if a student made notes on them. This let me explore quite a bit about how to create high-quality, sufficiently robust fiducials.

With Tango, HoloLens, and ARKit (rebranded Metaio), 2016 and 2017 were mostly about SLAM, plus marker-based tracking with OpenCV/ArUco on HoloLens. At the time, Vuforia did not support HoloLens.

In September 2018, I spent a few weekends remotely collaborating with origami artist Uttam Ghandhi on building innovative AR apps centered on origami. Three apps emerged from the collaboration: Origamiji (origami emoji for iPhone X), FurniGAMI (AR on origami furniture), and PlayGAMI (draw on AR origami crease patterns to create game characters). That was the start of my revitalized interest in paper and AR, with a focus on direct content creation.

In October 2018, I decided to create business cards featuring generic faces that people could draw on and then wear in AR with my faced.io app. Earlier, in September, thanks to a terrific pitch by Jackie McGuire, I won the Grand Prize at the TechCrunch Disrupt Hackathon (again) for a ModiFace-inspired AR styling platform (“Try anything on your face — and buy it!”). A small part of that project featured makeup and beard extraction from any photo for instant face masking, and the Photoshop-onboarding feature grew out of that digital portion. Then, in December, I collaborated with futurist Zenka on Magic Remotes, and the onboarding generalized to projecting abstract metadata of one’s vision of the future onto any 3D model.

It’s a whole lot more than just “drawing on a 2D medium.” We later discovered at the flagship 2019 Maker Faire in San Mateo, California, that having a giant AR crease pattern meant you could onboard people and robots who roll and loll about on the marker as well! Add in footprints, food, rain, and more as real-world mediums to make art with.

SIGGRAPH: What problem or problems does “Sur.faced.io” solve?

YC: The digital gap is very real in art, and I wanted to create a solution to instantly “onboard” traditional, nondigital artists straight to AR.

Currently, it’s a bit canned: it’s just a direct texture mapping onto existing 3D models identified by the AR marker. The software architecture I’ve designed is very open to advances in machine learning, such as GANs that can more intelligently create new forms based on 2D art. In the future, I think this method could be generalized to instantly turn any 2D art into a 3D scene that one could “look into” from “the window of the painting,” as envisioned by Wanderlust, an Appy Hour exhibit I built in 2015.

In addition, there are many types and genres of art that one can easily create in real life, drawing on features of reality and real-world randomness, that just can’t be done on a computer. True randomness is still something digital can’t provide. For example, Jackson Pollock’s action paintings have been found, through box-counting analysis, to have a high fractal dimension. Or consider the effects of spilling multiple substances on paper or other real-world media, which reality renders perfectly and software can only approximate. Or, more practically, you can scatter found objects onto a 2D surface to easily digitize a “3D to 2D collage.”
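Box counting, the technique behind the Pollock analysis mentioned above, estimates a fractal dimension D from how the number N(s) of grid boxes of side s that touch a pattern scales: N(s) ∝ s^(-D), so D is the slope of log N(s) against log(1/s). The sketch below assumes a digitized drawing has already been thresholded into a set of filled pixel coordinates on a square canvas whose side is a power of two; the function names are hypothetical, and this is a minimal illustration rather than the procedure used in published Pollock studies.

```python
import math
from typing import Set, Tuple

Pixel = Tuple[int, int]


def box_count(points: Set[Pixel], box: int) -> int:
    """Number of box x box grid cells containing at least one filled pixel."""
    return len({(x // box, y // box) for x, y in points})


def box_counting_dimension(points: Set[Pixel], size: int) -> float:
    """Least-squares slope of log N(s) versus log(1/s) for s = size, size/2, ..., 1."""
    if not points or size < 2:
        raise ValueError("need at least one filled pixel and a canvas of side >= 2")
    sizes = []
    s = size
    while s >= 1:
        sizes.append(s)
        s //= 2
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(points, s)) for s in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


square = {(x, y) for x in range(64) for y in range(64)}  # filled area
line = {(x, 0) for x in range(64)}                        # thin stroke
print(round(box_counting_dimension(square, 64), 3))  # prints 2.0
print(round(box_counting_dimension(line, 64), 3))    # prints 1.0
```

A filled area scores 2 and a thin stroke scores 1; a scanned drip painting would fall somewhere in between, with higher values meaning the marks fill the canvas in a more intricate, space-filling way.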

SIGGRAPH: How do you hope your app will be used moving forward? What do you want users to take away from it?

YC: Sur.faced.io is a software API that enables all kinds of 2D-to-3D mapping apps. I’d love to collaborate with artists and designers to bring their 2D media into AR.

I’m working on a new platform, informally called PostcardAR, to enable more general “draw on paper” to instant 3D AR creations. [Readers can] follow my Twitter for updates on that. I’m also hoping that more people explore clever uses of marker-based AR, with an emphasis on what paper and other real-world mediums can easily do. I’d rather it not become a lost art. Mainstream AR seems to be moving toward markerless tracking: the SLAM kind found in Metaio, ARKit, and ARCore, where you have to walk around and wave your phone for tracking to happen. In fact, DrawmaticAR, another marker-based app I made that only works when pointed at the marker, was rejected from the [Google] Play Store because the review didn’t seem to understand that some AR apps are about drawing on paper rather than walking around. Quoting Google’s policy-based rejection: “Special Restrictions: If your app uses augmented reality, you must include a safety warning immediately upon launch of the AR section. The warning should contain the following: A reminder to be aware of physical hazards in the real world (e.g., be aware of your surroundings).”

SIGGRAPH: Tell us about your experience presenting during Appy Hour at SIGGRAPH 2019.

YC: SIGGRAPH is often headlined by behemoth institutions whose productions require large teams (or budgets that could easily fund one indie developer for decades). Appy Hour reminds the industry that Moore’s Law has progressed far enough that indie developers, with much humbler budgets, can build creations of value.

Do you want to demo your latest app among the brightest minds in the industry? Submit your latest creation for the SIGGRAPH 2020 Appy Hour by 11 February.

Yosun Chang is an augmented reality hacker-entrepreneur-artist who has been writing software as a freelance professional and artiste since 1997. She loves to build innovative software demos that turn emerging technologies into Arthur C. Clarke-style magic. Indie for life, she has won too many hackathons to count, including two TechCrunch Disrupt grand prizes, three Intel Perceptual Computing first places, an AT&T IoT Hackathon grand prize, a Warner Brothers Shape Hackathon grand prize, and hundreds more. Her work has been shown at SIGGRAPH 2015–2019, Ars Electronica, Art Basel Miami, Augmented World Expo, AWS re:Invent, CES, CODAME, Developer World Congress, GDC, Exploratorium, Maker Faire, Mobile World Congress, MoMath, MoMA, O’Reilly Where Conference, Rubin Museum, Tech Museum of Innovation, Twilio Signal, and more.
