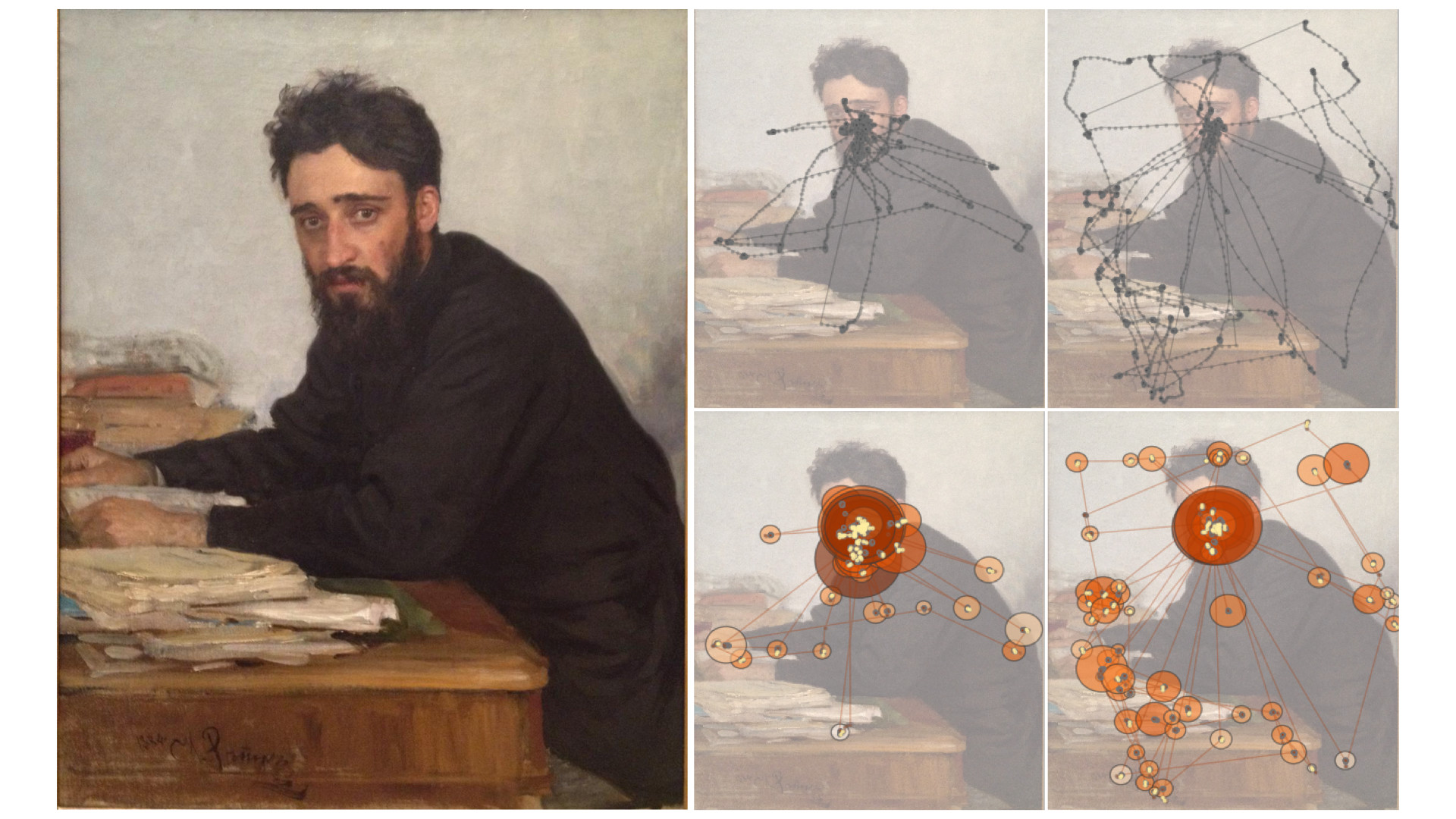

Ilya Efimovich Repin’s Vsevolod Mikhailovich Garshin (1855–1888), 1884, oil on canvas, Gift of Humanities Fund, Inc., 1972, The Metropolitan Museum of Art, New York, NY, superimposed with raw gaze data and scan paths, including microsaccades.

A continuation of a passion spurred two decades ago, “Eye-Based Interaction in Graphical Systems: 20 Years Later,” a SIGGRAPH 2020 Course, explores eye-tracking in the form of four applications. We caught up with instructor Andrew Duchowski to learn more about his interest in eye-tracking, get an exclusive preview of the Course content, and learn the key to a successful SIGGRAPH submission.

SIGGRAPH: Talk a bit about the process for developing your Course. What inspired you to begin exploring eye tracking in computer graphics?

Andrew Duchowski (AD): Well, 20 years ago, similar to now, I was inspired by the excitement of combining research on computer graphics (for stimulus creation and visualization), human-computer interaction (for real-time use), psychology (for experimental design and analysis), and neuroscience (for human vision). Today, computer vision (for augmented reality) has also become much more prominent, and machine learning and AI are all being woven into the eye-tracking mix.

[At the time], I found the combination of these many topics particularly compelling, and that’s still the case today. Due to technology advancements, this niche area is much more accessible than ever before … and a lot more fun!

SIGGRAPH: Let’s get technical. How did you select the four applications you’ll cover (diagnostic, active, passive, and expressive)?

AD: The four application areas started out as just two: diagnostic (offline) and interactive (online). Based on recent funding from the National Science Foundation for eye movement animation, and an invitation to present a keynote address at VS-Games in Athens, I decided that this taxonomy needed to expand to include expressive, active, and passive (online) applications. The expressive application deals with eye movement synthesis, that is, generating artificial eye movements procedurally, as opposed to analysis.
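To make the idea of procedural synthesis concrete, here is a minimal Python sketch that generates an artificial gaze path between two look points. It is only an illustration, not the model used in the Course: the function name `synthesize_saccade`, the smoothstep easing curve, and the optional Gaussian jitter are all assumptions chosen for brevity, and a realistic model would use an empirically grounded velocity profile.

```python
import random


def synthesize_saccade(start, end, duration_s, sample_rate_hz=60,
                       jitter_deg=0.0, seed=None):
    """Return a list of (t_seconds, x_deg, y_deg) samples from start to end.

    Hypothetical helper: smoothstep easing stands in for a real saccade
    velocity profile, and jitter_deg adds assumed Gaussian positional noise.
    """
    rng = random.Random(seed)
    # At least two samples so the path always has a start and an end point.
    n = max(2, int(round(duration_s * sample_rate_hz)) + 1)
    path = []
    for i in range(n):
        u = i / (n - 1)                  # normalized progress in [0, 1]
        s = u * u * (3.0 - 2.0 * u)      # smoothstep: ease in, ease out
        x = start[0] + s * (end[0] - start[0]) + rng.gauss(0.0, jitter_deg)
        y = start[1] + s * (end[1] - start[1]) + rng.gauss(0.0, jitter_deg)
        path.append((i / sample_rate_hz, x, y))
    return path


if __name__ == "__main__":
    # A 10-degree horizontal movement lasting 50 ms, sampled at 60 Hz.
    for t, x, y in synthesize_saccade((0.0, 0.0), (10.0, 0.0), 0.05):
        print(f"{t:.4f}s  x={x:.2f}  y={y:.2f}")
```

Feeding the resulting look points to a rigged eye model, one per frame, would be one way to drive an avatar's gaze from such a path.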

SIGGRAPH: Can you provide any insight into what kinds of analysis the instruction will touch on? What was the biggest challenge?

AD: Due to time restrictions, the Course largely focuses on reviewing eye-based applications. Interspersed within that is instruction on how to conduct signal processing on eye movement data that is foundational to all applications. The last 10 minutes is a lightning round on writing a program that talks to the eye tracker. After this, I’m out of time. The time restriction was the biggest challenge: how to fit it all in… I couldn’t!
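As a rough illustration of the kind of signal processing on eye movement data mentioned above, the following Python sketch labels gaze samples as fixations or saccades using a simple velocity threshold, a widely used textbook approach. The interview does not say which methods the Course teaches, so treat everything here as an assumption: the function names, the `(t, x, y)` sample layout in seconds and degrees of visual angle, and the 30 deg/s threshold are illustrative choices only.

```python
import math


def classify_ivt(samples, velocity_threshold=30.0):
    """Label each sample 'fixation' or 'saccade' by point-to-point velocity.

    samples: list of (t_seconds, x_deg, y_deg) tuples sorted by time.
    velocity_threshold: deg/s; 30.0 is an assumed starting value to tune.
    """
    if not samples:
        return []
    labels = ["fixation"]  # first sample has no predecessor to compare with
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            labels.append(labels[-1])  # bad timestamp: reuse previous label
            continue
        velocity = math.hypot(x1 - x0, y1 - y0) / dt
        labels.append("saccade" if velocity > velocity_threshold else "fixation")
    return labels


def _summarize(run):
    """Reduce a run of samples to (start_t, end_t, mean_x, mean_y)."""
    n = len(run)
    return (run[0][0], run[-1][0],
            sum(s[1] for s in run) / n, sum(s[2] for s in run) / n)


def group_fixations(samples, labels):
    """Collapse consecutive fixation samples into one summary per fixation."""
    fixations, run = [], []
    for sample, label in zip(samples, labels):
        if label == "fixation":
            run.append(sample)
        elif run:
            fixations.append(_summarize(run))
            run = []
    if run:
        fixations.append(_summarize(run))
    return fixations


if __name__ == "__main__":
    demo = [(0.00, 0.0, 0.0), (0.01, 0.1, 0.0), (0.02, 0.1, 0.1),
            (0.03, 10.0, 0.0), (0.04, 10.1, 0.0), (0.05, 10.0, 0.0)]
    demo_labels = classify_ivt(demo)
    print(demo_labels)
    print(group_fixations(demo, demo_labels))
```

Real tracker data is noisy, so in practice the signal would usually be smoothed before differentiation; this sketch skips that step to stay short.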

SIGGRAPH: How did you redevelop the Course for a virtual audience?

AD: I actually had my Course notes done before submitting the Course proposal, except that I had promised to do a live demo of an eye-tracking app, which I had intended to do in person at SIGGRAPH 2020, just like we did 20 years ago. Today, it would have been with more modern hardware (e.g., Gazepoint) and software (e.g., in Python, for its ease of development). Unfortunately, I had to table the already written, interactive app demo when SIGGRAPH went virtual. Perhaps I’ll still have a chance to do it this year during the live Q&A, or maybe next year.

I recorded the Course with my laptop pointed at me, and with a tablet to the side to allow annotations. The execution isn’t perfect since I move out of the frame quite a bit (sorry!), and some of the soundtrack didn’t initially record. It took me about four days to record the Course, fitting it into one hour, and then another four days to redub the missing audio and reformat the automatically generated subtitles.

In the end, I suspect a lot more work went into this than if I had done it live, but then again it’s SIGGRAPH, so it’s worth the effort and good practice learning to deal with video production, transcoding, and subtitling. (Pro tip: If you find I’m speaking too quickly in the video, just turn on the subtitles!)

SIGGRAPH: Who is the optimal attendee for your Course?

AD: Anyone interested in eye tracking really — from the novice to the seasoned expert. This year, I would also really like people involved in avatar animation to see that eye motions are important and not too hard to simulate. I really want to see better eye animation “in the wild,” whether it’s gaming, film, or VR. If anyone is interested in providing better avatar models that have rigged eyes (using a look point), please get in touch with me.

One aspect that I unfortunately had to cut for the reduced, 1-hour time slot is an entire section on experimental design, which I think anyone doing perceptual graphics at SIGGRAPH would have been interested in. Perhaps SIGGRAPH 2021?

SIGGRAPH: What takeaways can attendees expect to walk away with when you virtually present the Course — at 12:30 pm PDT on Thursday, 27 August — during SIGGRAPH 2020?

AD: Primarily, I hope that participants will walk away inspired by the breadth of work that uses gaze as a primary or secondary input modality, and having learned that gaze can be used to evaluate graphics (e.g., perceptual graphics, which, unfortunately, I don’t cover much in the current Course). They’ll see that it’s relatively easy and now inexpensive to get started.

SIGGRAPH: Can you share your all-time favorite SIGGRAPH memory?

AD: I have many fond SIGGRAPH memories, so it’s hard to pick just one. My Course from 20 years ago ranks high on the list because it was one of my first big presentations, maybe my biggest ever. This year, I wanted to both mark that 20-year anniversary and update SIGGRAPH conference goers on how substantially this area has progressed.

One of the special things about SIGGRAPH is its sheer size and variety — that’s really what sticks out as a prominent memory and one that I hope will persist even in its virtual instantiation. I am really looking forward to SIGGRAPH 2020 and am super happy to be contributing to it.

SIGGRAPH: What advice do you have for someone looking to submit to Courses for a future SIGGRAPH conference?

AD: I think my main advice for Course submitters is to know your audience. That also involves knowing SIGGRAPH. What I mean by that is: If you’re going to propose a Course, know what kinds of topics have been presented before, which Courses you liked most as an attendee, and which aspects you found appealing and would like to emulate. Try to imagine what SIGGRAPH attendees already know or would like to know, and ask yourself if you’ll be bringing something that will both attract these attendees and be useful enough that they can use your material in their own work, whether they’re researchers, production artists, gamers, makers, technical directors, or whoever.

I hope my Course will offer something of interest to a large cross-section of [virtual] SIGGRAPH conference goers — maybe even some who were there 20 years ago in New Orleans.

Want more Courses content? Enjoy on-demand presentations 17–23 August at SIGGRAPH 2020. Live, scheduled sessions will take place 24–28 August.

Dr. Andrew Duchowski is a professor of computer science at Clemson University. His research and teaching interests include visual attention and perception, computer vision, and computer graphics. He is a noted research leader in the field of eye tracking, having produced a corpus of related papers and a monograph on eye-tracking methodology, and has delivered courses and seminars on the subject at international conferences. He maintains Clemson’s eye-tracking laboratory and teaches a regular course on eye-tracking methodology, attracting students from a variety of disciplines across campus.