Physics Forests: Real-time Fluid Simulation using Machine Learning © 2017 Disney Research, ETH Zürich, and Microsoft

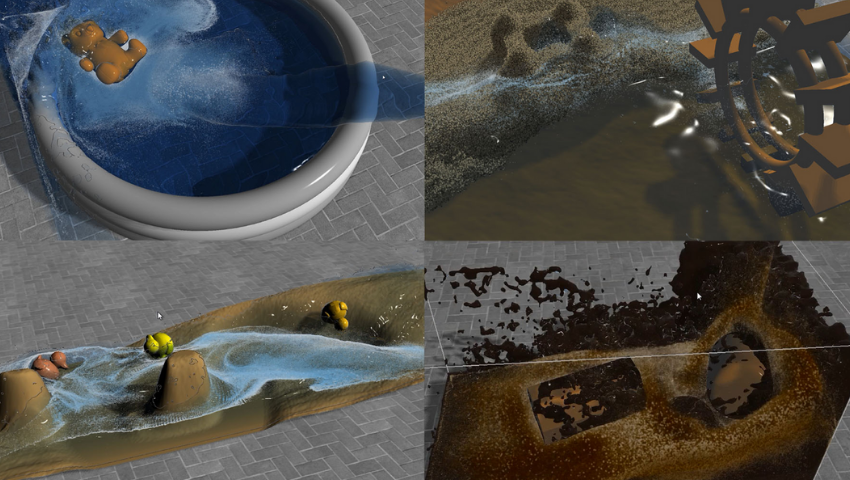

During our last trip to Los Angeles, conference participants experienced a thrilling real-time machine-learning demonstration as part of the SIGGRAPH 2017 Real-Time Live! showcase: Physics Forests. This data-driven fluid simulation, which includes surface generation, foam, coupling with rigid bodies, and rendering, can simulate several million particles in real time. It uses a regression forest to estimate the behavior of the particles and the rendered surfaces. The method can handle a wide range of fluid parameters.

Since then, Physics Forests has continued to grow, building on the capabilities it showed in 2017. We connected with ETH Zürich’s Ľubor Ladický, one of the masterminds behind the project, to learn what makes machine learning and real-time graphics such a winning combination, and what has happened to Physics Forests over the past two years.

SIGGRAPH: Tell us a bit about Physics Forests. What was novel about it when you brought it to SIGGRAPH 2017’s Real-Time Live! program?

Ľubor Ladický (LL): We developed a new method for real-time fluid simulation which, unlike all other approaches, does not directly solve the underlying Navier-Stokes equations. Instead, it predicts the behavior of fluid particles using a model trained on a large set of simulations. Basically, we transformed a well-known mathematical problem into a regression task, in the hope that it would lead to a significant speed-up over traditional solvers.

SIGGRAPH: What edge does machine learning/AI have over standard solvers with regard to physics simulations?

LL: Our method is two to three orders of magnitude faster than existing solvers. This enables real-time simulations at an unprecedented scale of up to 10 million particles. Because the predictions always stay within the ranges observed in the training set, we also gained a benefit we did not originally expect: better stability of the solver.
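The stability argument rests on a general property of regression forests: every prediction is an average of training targets, so it can never leave the range seen during training. The toy sketch below illustrates that property and the "simulation as regression" framing; it is not the Physics Forests method. Everything in it is an assumption for illustration: the hypothetical `reference_step` (a single particle under gravity with linear drag) stands in for an expensive fluid solver, the features (velocity, drag coefficient) and hyperparameters are made up, and the forest is a generic bagged regression-tree model that tries a few random split thresholds per feature.

```python
import random

# Hypothetical stand-in for an expensive reference solver (assumption for this
# sketch): one explicit Euler step of a particle under gravity with linear drag.
GRAVITY = -9.81
DT = 0.01


def reference_step(v, k):
    """Velocity change over one time step for velocity v and drag coefficient k."""
    return DT * (GRAVITY - k * v)


def mean(values):
    return sum(values) / len(values)


def sse(values):
    """Sum of squared deviations from the mean (the split criterion)."""
    m = mean(values)
    return sum((y - m) ** 2 for y in values)


def split(xs, ys, f, t):
    """Partition samples by whether feature f is below threshold t."""
    lx, ly, rx, ry = [], [], [], []
    for x, y in zip(xs, ys):
        if x[f] < t:
            lx.append(x)
            ly.append(y)
        else:
            rx.append(x)
            ry.append(y)
    return lx, ly, rx, ry


def build_tree(xs, ys, depth, rng, min_leaf=5, n_candidates=8):
    """Grow a regression tree. A leaf is a float (mean of its training targets);
    an internal node is a tuple (feature, threshold, left, right)."""
    if depth == 0 or len(ys) <= min_leaf:
        return mean(ys)
    best = None
    for f in range(len(xs[0])):
        values = [x[f] for x in xs]
        lo, hi = min(values), max(values)
        if lo == hi:
            continue
        # Evaluate a few random thresholds per feature instead of every one.
        for _ in range(n_candidates):
            t = rng.uniform(lo, hi)
            _, ly, _, ry = split(xs, ys, f, t)
            if not ly or not ry:
                continue
            score = sse(ly) + sse(ry)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:
        return mean(ys)
    _, f, t = best
    lx, ly, rx, ry = split(xs, ys, f, t)
    return (f, t,
            build_tree(lx, ly, depth - 1, rng, min_leaf, n_candidates),
            build_tree(rx, ry, depth - 1, rng, min_leaf, n_candidates))


def predict_tree(node, x):
    """Walk from the root to a leaf and return the stored mean."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] < t else right
    return node


def train_forest(xs, ys, n_trees=10, depth=8, seed=0):
    """Train each tree on a bootstrap sample of the training set."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in range(len(xs))]
        trees.append(build_tree([xs[i] for i in idx], [ys[i] for i in idx], depth, rng))
    return trees


def predict_forest(trees, x):
    """Average the tree predictions; the result stays within the training target range."""
    return mean([predict_tree(tree, x) for tree in trees])


if __name__ == "__main__":
    rng = random.Random(42)
    # Training set: (velocity, drag) samples labelled by the reference solver.
    train_x = [(rng.uniform(-10, 10), rng.uniform(0, 2)) for _ in range(1000)]
    train_y = [reference_step(v, k) for v, k in train_x]
    forest = train_forest(train_x, train_y)

    test_x = [(rng.uniform(-10, 10), rng.uniform(0, 2)) for _ in range(200)]
    mae = mean([abs(predict_forest(forest, x) - reference_step(*x)) for x in test_x])
    print(f"held-out mean absolute error: {mae:.4f}")

    # Far outside the training data the reference step is about -5.1, but the
    # forest's prediction is clamped to the range of targets it was trained on.
    print(predict_forest(forest, (100.0, 5.0)), min(train_y), max(train_y))
```

The flip side of this bounded behavior is that such a model cannot extrapolate: inputs far outside the training distribution get a plausible but not physically accurate answer, which matches the trade-off of favoring runtime and stability over exactness.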

SIGGRAPH: It’s been some time since the project was shown at SIGGRAPH, and we’ve noticed it is still ongoing. What progress have you made in the past couple of years?

LL: It is always a long road from a prototype to a product. As with any other machine learning problem, the devil is in the details, and there is always room for improvement. Last summer we released a demo (http://apagom.com/physicsforests/downloads) and videos (http://apagom.com/physicsforests/videos/) so anyone can download it and see how far we’ve pushed the quality since 2017.

SIGGRAPH: What are some of the ways Physics Forests can be used in the world?

LL: In the last few years, fluid simulation has made its way into many offline applications in fields ranging from robotics, civil engineering, and aerodynamics to the movie industry and medicine. Our method is suited to cases where the real-time nature of the application leaves no alternative and runtime matters more than physical exactness: for example, computer games, VR/AR applications, virtual training, or art exhibitions.

SIGGRAPH: What are you currently focused on improving about Physics Forests and working toward?

LL: We are developing a general framework for simulating multiple physical phenomena, built around the machine-learning paradigm. Currently, we are focusing on rigid bodies, fracture, and destruction, aiming to achieve a speed-up over existing methods similar to the one we achieved for fluid simulation. In parallel, we are developing plugins for existing SFX frameworks and game engines.

SIGGRAPH: How, if at all, did showcasing Physics Forests at SIGGRAPH impact your work?

LL: Showcasing our work at SIGGRAPH 2017 Real-Time Live! gave us a lot of visibility and drew interest from potential future customers. Without showing our work at a major conference, we would most likely have struggled to get enough attention.

Don’t miss your chance to submit a project that you’ve been working on in the exciting realm of real time. Submit your work to the SIGGRAPH 2019 Real-Time Live! program by Tuesday, 9 April.

Meet the Creators

Ľubor Ladický received a Ph.D. in computer vision from Oxford Brookes University in 2011. He worked as a researcher in the Visual Geometry Group at the University of Oxford and in the Computer Vision and Geometry Lab at ETH Zürich. His current research interest is speeding up physics simulation using machine learning. In 2017, he co-founded Apagom AG, where he serves as CEO, to transfer this line of research to industry.

SoHyeon Jeong worked on physics simulation of natural phenomena for her Ph.D. at Korea University and then joined the computer graphics lab at ETH Zürich. During her postdoctoral studies, she worked on real-time fluid simulation using machine learning and later co-founded Apagom AG.