“Visual Knitting Machine Programming” © 2019 Carnegie Mellon University, University of Utah

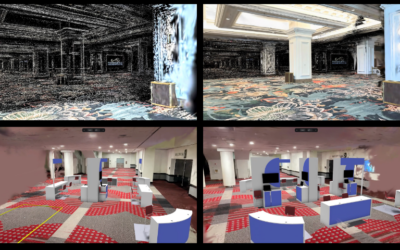

This summer, the Carnegie Mellon Textiles Lab, in collaboration with the University of Utah, presented its technical paper “Visual Knitting Machine Programming” at SIGGRAPH 2019. The paper demonstrates a new data structure, the augmented stitch mesh, and a knitting machine programming tool built atop it. We sat down with the researchers themselves to learn more about the project and its applications.

SIGGRAPH: Talk a bit about the process for developing the research for your Technical Paper “Visual Knitting Machine Programming.” What inspired the idea?

Jim McCann (JM): Vidya and I had been working on building machine knitting patterns automatically, and we knew the next step was to build a system which allowed user editing of the patterns. Cem and Kui had been developing great tools for pattern editing, and were just getting into making them knittable (at that time, just hand-knittable). So it was a natural fit.

SIGGRAPH: Let’s get technical. How did you develop the technology in terms of execution?

JM: Visual Knitting Machine Programming combines ideas that date back to our earliest knitting machine projects at my lab at Disney (approximately 2014–2015) and to Cem’s work on stitch meshes at Cornell (approximately 2012). So, even though the project itself took only about six months and four people, it could not have been done without the ideas, algorithms, and inspiration developed by 10–20 collaborators over the last 5–7 years.

SIGGRAPH: You’ve developed a mechanism to create a knittable augmented stitch mesh. Why was that important?

Vidya Narayanan (VN): For historical reasons, traditional knitting tools work in a 2D layout. This means the designer has to maintain a mental map between 3D shapes and their 2D layouts on the machine. But knitting machines can build 3D shapes directly and seamlessly (not just paste or sew together flat pieces)! Because the output is 3D, the ideal scenario would be to design these objects in 3D as well. Knitting machines do have some constraints, though, and cannot knit every 3D shape. So, it was important to come up with a data structure (the augmented stitch mesh) that could both track “knittability” and be intuitive to edit in 3D.

Kui Wu (KW): With existing industrial knitting design tools, like KnitPaint, designers need background knowledge of the knitting machine in order to create nice textures. They also have to work on a 2D plane, which is not intuitive for designing the appearance of 3D shapes. Our work provides a framework in which users can design creatively without understanding how the knitting machine works. The system automatically rules out unknittable designs using a “knittability test” and lets the user edit texture and shape intuitively.

SIGGRAPH: For the 3D knit structures you initially produced, what made you choose the structures you did (like the bunny covering or faux spindles)?

VN: Well, it is SIGGRAPH and we absolutely had to knit the Stanford Bunny. In some of our previous work, we concentrated on complex shapes with high genus to showcase the capabilities of machine knitting. For this work, many of the results were chosen to showcase that such a system could indeed be a good way to produce knits. So, we made the choice to use a lot of traditional knit shapes (sweaters, caps, socks) with various edited styles that could be designed relatively quickly with our system.

SIGGRAPH: How did you introduce “cable faces” into the programming tool?

VN: Cables are a very common class of knitting patterns, and our system allows users to easily introduce these structures into their designs. The system itself can also be easily extended by the user to create other, similar multi-stitch operations by adding more “face types” to the library of stitch types we support.

JM: Another way to think about this is that we designed the system with the idea of faces as “wrappers” around fragments of knitting machine code. Cable faces emerge naturally when you consider the dependencies of code that is transfer-only (that is, it moves loops but doesn’t make new loops).

KW: Cabling is a knitting technique that creates beautiful textures with large deformations. Its core is crossing loops over one another within a row. Although the original stitch mesh already supported cables, in this work we changed how cable faces are represented, so that our system can support them in a general way.
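JM’s description of faces as wrappers around fragments of machine code, together with VN’s point that users can add new face types, can be illustrated with a small Python sketch. This is not the authors’ implementation: the `Face` and `FaceLibrary` classes are hypothetical, the instruction names (`knit`, `xfer`, `rack`) are borrowed from the knitout format as an assumption, and the cable fragment is only an illustrative loop crossing whose racking signs have not been checked on a real machine. The sketch shows how a face library could flag transfer-only faces, the kind of code JM says cable faces emerge from.

```python
from dataclasses import dataclass, field

# Assumed op names, borrowed from knitout; the interview does not say
# which instruction set the tool uses internally.
LOOP_MAKING_OPS = {"knit", "tuck", "split"}  # ops that create new loops
TRANSFER_OPS = {"xfer", "rack"}              # ops that only move loops or beds


@dataclass
class Face:
    """Hypothetical face type: a named wrapper around a machine-code fragment."""
    name: str
    code: list[str] = field(default_factory=list)

    def ops(self) -> list[str]:
        # The first token of each non-blank instruction is the operation name.
        return [line.split()[0] for line in self.code if line.strip()]

    def makes_new_loops(self) -> bool:
        return any(op in LOOP_MAKING_OPS for op in self.ops())

    def is_transfer_only(self) -> bool:
        # Moves existing loops but never makes new ones (and is not empty).
        ops = self.ops()
        return bool(ops) and all(op in TRANSFER_OPS for op in ops)


class FaceLibrary:
    """Hypothetical user-extensible library of face types."""

    def __init__(self) -> None:
        self._faces: dict[str, Face] = {}

    def register(self, face: Face) -> None:
        self._faces[face.name] = face

    def transfer_only(self) -> list[str]:
        return sorted(n for n, f in self._faces.items() if f.is_transfer_only())


lib = FaceLibrary()
lib.register(Face("knit", ["knit + f10 5"]))
lib.register(Face("purl", ["xfer f10 b10", "knit + b10 5", "xfer b10 f10"]))
# Illustrative crossing of two loops (racking signs not verified).
lib.register(Face("cable_1x1", [
    "xfer f10 b10", "xfer f11 b11",
    "rack 1", "xfer b10 f11",
    "rack -1", "xfer b11 f10",
    "rack 0",
]))
print(lib.transfer_only())  # ['cable_1x1']
```

Keeping each face as an opaque code fragment is what makes the library extensible in this sketch: a new face type only needs a name and its instructions, and properties such as “transfer-only” are derived from the code rather than hard-coded per type.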

SIGGRAPH: What do you find most exciting about the final experience you’ve created?

JM: When I first saw the programming tools used for knitting machines, I pictured how we could make a more intuitive interface that would combine the stitch-level editing of the existing tools with 3D visualization of the target shapes. All of our knitting work up to this point has been trying to get closer to that vision, and “Visual Knitting Machine Programming” has finally, I think, arrived.

More succinctly, this is the knitting machine programming interface that I have always wanted to use.

Cem Yuksel (CY): When we published our first paper on the stitch mesh modeling interface, it attracted a lot of interest outside the SIGGRAPH community as well. There was a strong desire to use this approach to make tangible knitted artifacts, which we could not produce. Though our work is not nearly done, showing that we could connect this type of intuitive modeling with machine knitting is the most exciting outcome of this work for me.

SIGGRAPH: What’s next for “Visual Knitting Machine Programming”? (For example, what kind of application do you hope to see long term? Do you feel this will be used by the larger knitting community?)

VN: I would really like this to be a system that casual designers can use to create custom knit shapes, much as they would with a 3D printer. With consumer-grade knitting machines, like Kniterate, and the growing interest in soft fabrication, building good design tools to support 3D machine knitting is key. Hopefully, such systems will also encourage more applications built on top of them: optimizing stitch patterns, structures, color work, motion, etc.

SIGGRAPH: If you’ve attended SIGGRAPH conferences in the past, share your all-time favorite SIGGRAPH memory. If you have not, tell us what you most enjoyed about your first SIGGRAPH!

JM: SIGGRAPH is at its best when it expands your conception of what is possible in the world. Sometimes this comes from a technical paper or a poster, and sometimes it comes from Emerging Technologies, the Art Gallery, or the Exhibition. One strong memory I have from the Exhibition is seeing BrightSide Technologies’ prototype local-dimming HDR display. I had never seen a display with such an amazing dynamic range, and I’m pleased that the technology is now so widely deployed.

CY: I like the diversity of SIGGRAPH. It covers the computer graphics community and expands beyond it. It is an event where you see some of the most impressive research related to computer graphics, masterfully prepared courses on advanced topics, and clever ideas and solutions to various production problems. My favorite part, however, has always been the Electronic Theater.

VN: Apart from Technical Papers and Production Sessions, I’ve really enjoyed all the exhibits and demos. At my very first SIGGRAPH (2016), I found the “Zoematrope” project at Emerging Technologies, which visually blended 3D-printed materials to physically render material combinations, really neat.

SIGGRAPH: What advice do you have for someone looking to submit to Technical Papers for a future SIGGRAPH conference?

JM: Graphics — and, really, computer science in general — is about building tools that enhance our human capabilities. Make something that the world needs.

CY: SIGGRAPH papers are a lot of work for the authors. A successful SIGGRAPH Technical Papers submission typically goes far beyond the minimum effort needed to show that the proposed ideas are viable. It all starts with a good idea in computer graphics or a related area, but one must have the desire and commitment to spend the time and energy to demonstrate how far they can push their ideas.

The SIGGRAPH 2020 Technical Papers call for submissions is now open. See the call for details on how to submit, including deadlines (the first is 22 January 2020), requirements, and more.

Jim McCann is an Assistant Professor in the Carnegie Mellon Robotics Institute. He is interested in systems and interfaces that operate in real-time and build user intuition; lately, Jim has been applying these ideas to textiles fabrication and machine knitting as the leader of the Carnegie Mellon Textiles Lab.

Cem Yuksel is an associate professor in the School of Computing at the University of Utah. Previously, he was a postdoctoral fellow at Cornell University, after receiving his Ph.D. in computer science from Texas A&M University in 2010. His research interests are in computer graphics and related fields, including physically based simulations, realistic image synthesis, rendering techniques, global illumination, sampling, GPU algorithms, graphics hardware, modeling complex geometries, knitted structures, hair modeling, animation, and rendering.

Vidya Narayanan is a third-year graduate student in the computer science department at Carnegie Mellon University (CMU), advised by Jim McCann. She is broadly interested in fabrication, graphics, and visualization. Before joining CMU, she was a research associate at Disney Research, Pittsburgh. Vidya earned her Master’s degree at the Indian Institute of Science, focusing on graphics and scientific visualization, where she was advised by Vijay Natarajan.

Kui Wu is a postdoctoral associate in the Computational Fabrication Group under the guidance of Professor Wojciech Matusik at MIT CSAIL. He received a Ph.D. in computer science from the University of Utah, advised by Professor Cem Yuksel. Wu graduated with a B.A. in mathematics and a B.S. in software engineering from Qingdao University in China. His research focuses on computer graphics, especially mesh processing, real-time rendering, and physically based simulation.