SIGGRAPH 2014 has released its list of 2014 Emerging Technologies highlights to be featured at this year's conference at the Vancouver Convention Centre. Out of almost 90 submissions to the program, 24 were accepted, with more than 20 pieces from submitters outside of the United States.

Each year, SIGGRAPH-selected emerging technologies allow conference attendees to play with the latest interactive and graphics developments. The program also presents demonstrations of research in several fields, such as displays, input devices, collaborative environments, and robotics, through Talks and a close partnership with the SIGGRAPH Studio.

“This year's content is very diverse,” explained Thierry Frey, SIGGRAPH 2014 Emerging Technologies Chair. “For example, we brought forward a call for invisible technologies, i.e., installations and projects that leave technology in the background to focus on the user/usage, and have a selection of pieces that fall close to that science.”

Access to the 2014 Emerging Technologies program is available with Full Conference, Select Conference, and Exhibits Plus passes. Prices start as low as $50.00 USD.

SIGGRAPH 2014 Emerging Technologies Highlights:

Birdly

Authors: Max Rheiner, Fabian Troxler, Thomas Tobler, Thomas Erdin, Zürcher Hochschule der Künste

Birdly is an installation that explores the experience of a bird in flight. It tries to capture the mediated flying experience with several methods. Unlike a common flight simulator, users do not control a machine. Instead, they embody a bird, the Red Kite. To evoke this embodiment, the system mainly relies on sensory-motor coupling. Participants control the simulator with their hands and arms, which directly correlate to the wings and the primary feathers of the bird. Those inputs are reflected in the flight model of the bird and displayed physically by the simulator through nick, roll, and heave movements.

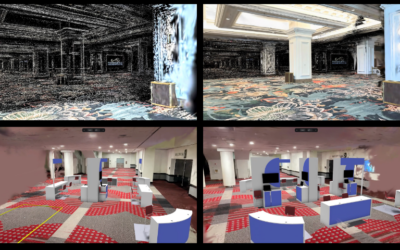

Spheree: A 3D Perspective-Corrected Interactive Spherical Scalable Display

Authors: Fernando Teubi Ferreira, Marcio Cabral, Olavo da Rosa Belloc, Roseli de Deus Lopes, Marcelo Zuffo, Universidade de São Paulo; Gregor Miller, Sidney Fels, The University of British Columbia; Celso Kurashima, Universidade Federal do ABC; Junia Anacleto, Universidade de São Carlos; Ian Stavness, University of Saskatchewan

Spheree is a personal spherical display that arranges multiple blended and calibrated mini-projectors to transform a translucent globe into a high-resolution perspective-corrected 3D interactive display. It tracks both the user and Spheree to render user-targeted views onto the surface of the sphere. This provides motion parallax, occlusion, shading, and perspective depth cues to the user. One of the emerging technologies that makes Spheree unique is that it uses multiple mini-projectors, calibrated and blended automatically to create a uniform pixel space on the surface of the sphere. The calibration algorithm allows for as many projectors as needed for virtually any size of sphere, providing a linear scalability cost for higher-resolution spherical displays.

Above Your Hand: Direct and Natural Interaction with an Aerial Robot

Authors: Kensho Miyoshi, Ryo Konomura, Koichi Hori, The University of Tokyo

Above Your Hand is a new application of a quadcopter that follows hand directions with two onboard cameras. The system is unique because it does not require external devices such as controllers or motion tracking. All the processing is executed within the onboard computer. You and the quadcopter can freely walk around without worrying about external-camera views or Wi-Fi networks.

Monsters in the Orchestra: A Surround Computing VR Experience

Authors: Rémi Arnaud, Emanuel Marquez, Bill Herz, AMD, Inc.

Monsters in the Orchestra is an interactive and immersive demonstration of surround computing as experienced in a VR environment. Participants are immersed in a stereoscopic world of monsters playing real-world instruments with positional audio and 3D x 360-degree gesture control. The original system, demonstrated at CES 2014, allowed participants to be fully immersed in a 30-foot-diameter dome driven by six HD projectors, creating a 360-degree view from floor to apex. Real-time positional audio was introduced via hidden 32.4 speakers in three rings at different heights. Audio equalization and reverb were processed using AMD True Audio. The monsters were conducted or controlled by a human conductor using 3D x 360-degree gestures. At SIGGRAPH 2014, participants will be immersed in the same experience using virtual reality headsets, such as the new Morpheus VR head-gear from Sony, with 3D spatialized audio delivered to each participant using stereo headphones.

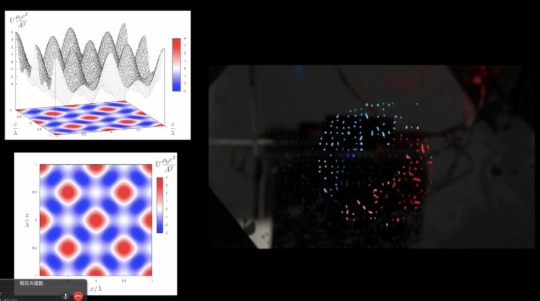

Pixie Dust: Graphics Generated by Levitated and Animated Objects in Computational Acoustic-Potential Field

Authors: Yoichi Ochiai, Jun Rekimoto, The University of Tokyo; Takayuki Hoshi, Nagoya Institute of Technology

This novel graphics system is based on an expansion of 3D-acoustic-manipulation technology. In the conventional study of acoustic levitation, small objects are trapped in the acoustic beams of standing waves. Here, this method is expanded by changing the distribution of the acoustic-potential field (APF), which generates the graphics using small levitated objects. The system enables many forms of expression, such as expression through materials and a nondigital appearance.