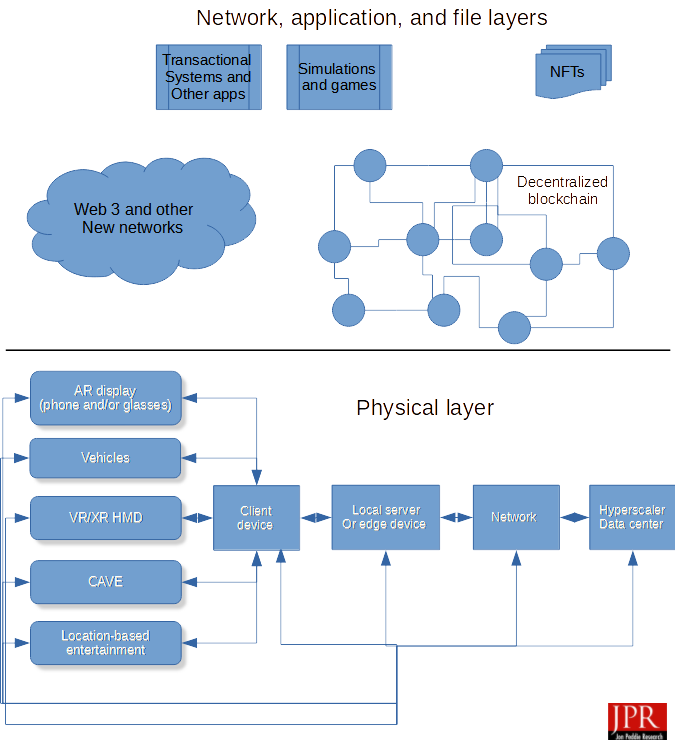

Image via Jon Peddie Research

How will you know you’re there if you can’t see stuff?

Today’s big buzzword is the metaverse, which, as most people know, originated with Neal Stephenson’s epic “Snow Crash” in 1992. A popular second novel, Ernest Cline’s “Ready Player One” (2011), and its 2018 film adaptation, along with the famous 1999 movie “The Matrix,” helped people visualize the concept of a connected virtual world. But fiction is fiction, and while it is nice to embrace and fantasize about an all-encompassing metaverse, the frequently cited real-world examples, Second Life and Sony’s PlayStation Home, don’t quite live up to expectations.

The definition of the metaverse is in flux, and various organizations are trying to define it in ways that support their own products and visions. That’s normal for a new technology. We saw it with the PC, the smartphone, and the internet. We also saw promising technologies fail, like 3DTV and various media devices, and we watched the repeated promise of VR fall into the practicality gap several times. Some people think, and others espouse, that the metaverse is the third coming of VR. It’s not, though it could incorporate VR. Some think and advocate that the metaverse is NFTs, blockchain, and cryptocurrency. It’s not, though it could incorporate them. The one thing the metaverse is, and will be, is visual.

You will be able to experience the metaverse with your smartphone, AR smart glasses, a PC, in a CAVE, and, yes, with a VR headset. Lots of pixels and lots of choices. And the metaverse will be persistent, maintaining the state of your activity regardless of which device you use to engage with it.

The metaverse is an extensive and extensible network, not just the display device. But the display device is the portal to the metaverse.

Readers of my blog may recall I’m fond of saying the more you can see, the more you can do. In the metaverse that is truer than ever, because seeing more will be critical. Billions of pixels will make up the metaverse. You may only be able to see a few million of them at a time due to the limitations of your display device, but wherever you look in the metaverse, the pixels will be there waiting for you, and they won’t be static.

The challenges and opportunities we face now in display devices are magnified in the metaverse as we try to substitute a new reality for our current one, or at the very least have a view of another reality.

One of the concepts of the metaverse is that it will be the vehicle for digital twins: virtual replicas of the real world, with megabytes of data behind every element. That data, including the element’s physical properties, describes its capabilities, how much stress it can take, how its material will withstand its environment, and, most importantly, how it is supposed to look.

And then there is the ultimate virtual extreme: the nefarious NFT. Here again, visual acuity is critical. The buyer (or renter, as some say) of an NFT wants assurance that they got what they saw when they bought it: what you see is what you get. That includes color fidelity as well as spatial fidelity. Pixels. Millions of them. Deep pixels, at least 10 bits per color channel, and fast pixels, an order of magnitude faster than the fastest human reaction or recognition time.

And while those blazingly fast, physically accurate pixels are swimming in front of billions of people’s eyes, they are also traveling through networks and in and out of storage systems, and sometimes — maybe most of the time — into the ether, never to be seen again.

The metaverse will be built with the parts we have today. First efforts, which I like to call “proto-verses,” such as Fortnite and NVIDIA’s Omniverse, show us what is possible and at the same time expose the weak points and barriers. That’s the plumbing we will have to work on, and the caution is that we must do it in an open ecosystem, employing sanctioned standards, so it can be replicated anywhere by almost anyone. That raises two questions: Where will the standards come from, and will the regulatory bodies be able to move fast enough to satisfy the demand for this brave new world? One organization, the Khronos Group, is trying to bring all interested parties and standards groups together to build the foundations for this pixel-driven metaverse.

It could be a decade before we have a metaverse of the type envisioned in Stephenson’s book. We might just end up with a lot of variations on AR and XR experiences, and the stupendous achievements of AI helping to idiotproof selfies and make social media posts pop. However, the limiting factors are not imagination. They are interoperability challenges, caused by parochial attitudes among governments and shortsighted, selfish stake-claiming by corporations, combined with technological barriers in bandwidth, semiconductor manufacturing, and software development.

It is not a case of “if you build it, they will come.” It is a case of “they are already here: when will you build it?” And because the metaverse will be pixel-based, you’ll know it when you see it.

Dr. Jon Peddie is a recognized pioneer in the graphics industry, president of Jon Peddie Research, and has been named one of the world’s most influential analysts. Peddie has been an ACM Distinguished Speaker and is currently an IEEE Distinguished Speaker. He lectures at numerous conferences and universities on graphics technology and emerging trends in digital media technology. A former president of SIGGRAPH Pioneers, he serves on the advisory boards of several conferences, organizations, and companies, and contributes articles to numerous publications. In 2015, he received the Lifetime Achievement Award from the CAAD society. Peddie has published hundreds of papers and has authored or contributed to eleven books, including “Augmented Reality: Where We Will All Live.” His latest book is “Ray Tracing: A Tool for All.”

Jon Peddie Research (JPR) is a technically oriented marketing, research, and management consulting firm. Based in Tiburon, California, JPR provides specialized services to companies in high-tech fields including graphics hardware development, multimedia for professional applications and consumer electronics, entertainment technology, high-end computing, and Internet access product development.