Assassin’s Creed 2020; Image credit: Ubisoft

It’s doubtful we could have forecast 20 years ago the performance and sophistication we have today.

You’ve heard the old joke about how to boil a frog: slowly raise the temperature of the water. In a way, we’ve been the frogs, and Moore’s Law has been raising the water temperature. The improvements in processing power, and the cost reductions due to mass consumption, have resulted in a virtuous circle: software developments exploit the improvements in processors and then demand more.

But we’ve barely noticed and have seldom looked back and made comparisons. We’ve been too busy consuming and enjoying the results. So much so that we’ve taken them for granted. But, let’s pause for a moment and look at how far we’ve come.

One of the biggest applications, if not the biggest, that uses every FLOP a CG system can deliver is gaming, specifically PC gaming. Real-time, high-frame-rate, high-fidelity graphics and smooth HDR gameplay across more and more advanced games, combined with audio that has gone from 5.1 to 7.1 to Atmos, enable a new level of immersion. A triple-A first-person shooter (FPS) is a masterpiece of simulation, with open worlds, independent AI bots, real-time and realistic physics, and 4K imagery. Flight and car simulators are on par, and real-time ray tracing adds another dimension. You can have such a simulation system for $2,000, something that would have cost $2 million just a few short years ago.

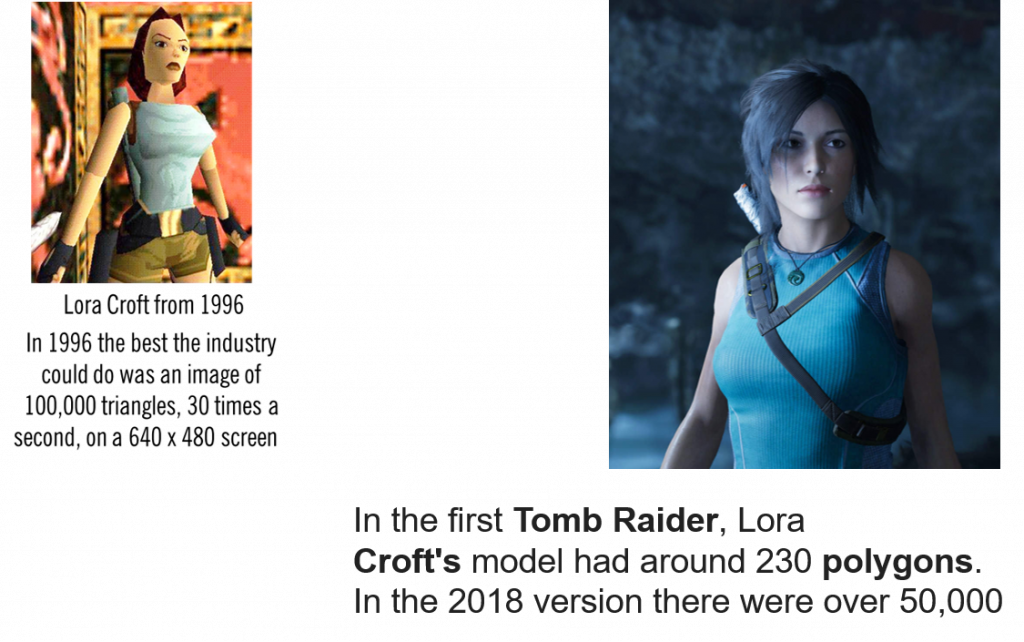

Lara Croft, pictured above, is a great example of what can be, and has been, done today. The jump from 230 polygons in 1996 to over 50,000 in 2018 was mostly in her head; her hair (and now other parts of her body) has been famously misquoted. Hair is probably one of the great accomplishments of CG, along with smoke and waves. Real-time realism makes it possible for you to be in the movie or game, not just looking at it.

Twenty years ago, the best screen resolution was 1024 × 768, as users and the industry slowly moved from the venerable VGA (640 × 480) and SuperVGA (800 × 600). By 2010, HD (1920 × 1080) was starting to be used and 1366 × 768 was popular. And five years ago, 4K was just becoming interesting. I’m writing this on an 8K Dell monitor.

Color depth moved even more slowly. Twenty years ago, we had remnants of 6-bit color, but 8 bits per channel (24-bit RGB) was the norm, which, when properly implemented, gave us 16.7 million colors. Full color or high dynamic range (HDR10), with 30-bit RGB and 1.07 billion colors, didn’t get established and supported in monitors until 2019, although graphics add-in boards (AIBs) and GPUs had been able to support it for some time. Display makers lagged in their support for mainstream HDR screens because high color depth requires much more sophisticated backlighting to deliver more levels of grey and black, which in turn adds cost. But once they did step up, display makers also went wider, moving from a 5:4 aspect ratio to 16:9 at 27 inches and 32:9 on a 49-inch curved monitor. (An example screen is pictured below.)
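The color counts quoted above follow directly from the bit depth: each of the three RGB channels gets the same number of bits, and the number of displayable colors is two raised to the total. The short Python sketch below works through that arithmetic; the function name `color_count` is purely illustrative and not taken from any graphics API.

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth.

    Assumes every channel (R, G, B) uses the same number of bits.
    """
    return 2 ** (bits_per_channel * channels)


if __name__ == "__main__":
    # 6, 8, and 10 bits per channel are the depths mentioned in the text.
    for bits in (6, 8, 10):
        total_bits = bits * 3
        print(f"{bits} bits/channel ({total_bits}-bit RGB): "
              f"{color_count(bits):,} colors")
```

Going from 8 to 10 bits per channel adds only six bits per pixel, yet multiplies the palette by 64, which is why the jump from 16.7 million to 1.07 billion colors looks so dramatic on paper.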

With each step in resolution and color depth, the processing load rose sharply, and frame-rate expectations slid from a required 60 fps to an acceptable 30 fps, with 60 fps still the desired target. Video bandwidth demand went from 18 MHz to 1 GHz or more, and the end is not in sight.

The numbers are fantastic: 8.3 megapixels in a 4K screen with 1 billion colors and 533 MP/sec of data to feed it. A modern GPU can handle billions of triangles a second, and we are so blasé we take it in stride, expect it, and demand more. Even armed with the power of Moore’s Law, it’s doubtful that many people 20 years ago could have forecast the level of performance and sophistication we have today in computer graphics.
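For a rough sense of where figures like these come from, the sketch below multiplies out the pixel counts for the resolutions mentioned in this article and the visible-pixel rate at an assumed 60 Hz refresh. The function names are illustrative, and the 60 Hz value is an assumption, not something stated above. The active-only rate it computes for 4K comes out somewhat below the 533 MP/sec quoted; one plausible reading is that the quoted figure also counts display blanking intervals, but that interpretation is likewise an assumption.

```python
def pixel_count(width: int, height: int) -> int:
    """Visible pixels in one frame."""
    return width * height


def active_pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Visible pixels per second, ignoring any blanking overhead."""
    return pixel_count(width, height) * refresh_hz


if __name__ == "__main__":
    REFRESH_HZ = 60  # assumed refresh rate; not given in the article

    xga = pixel_count(1024, 768)    # "best" resolution 20 years ago
    uhd = pixel_count(3840, 2160)   # 4K UHD

    print(f"1024 x 768 : {xga:,} pixels")
    print(f"3840 x 2160: {uhd:,} pixels ({uhd / 1e6:.1f} MP)")
    print(f"4K has {uhd / xga:.1f}x the pixels of 1024 x 768")
    rate = active_pixel_rate(3840, 2160, REFRESH_HZ)
    print(f"4K at {REFRESH_HZ} Hz: {rate / 1e6:.1f} MP/sec (active pixels only)")
```

The same two functions can be pointed at any other resolution in the article, such as 1920 × 1080, to compare generations.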

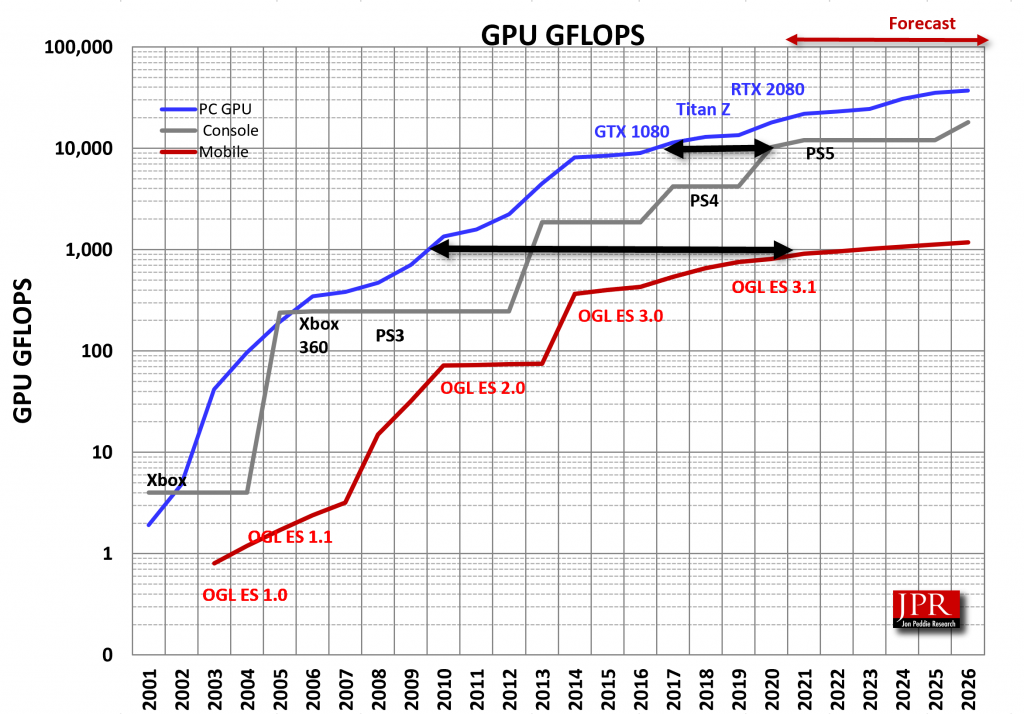

Moore’s Law is slowing down, as illustrated in the following chart. Slowing, not stopping. So the gains in hardware performance will not be as frequent or as great as we’ve enjoyed for the past 50 years.

However, software development will continue, and programmers and artists will squeeze even more capabilities out of the hardware. In addition to even better hair and smoke, skin tones and muscular movement will be improved to further reduce distraction and add to the suspension of disbelief. Ray tracing will add soft shadows and reflections that are optically perfect, and we will feel the images viscerally and not just as flat images on a screen. The emotive and evocative engagement will be breathtaking, and we may never want to leave. We will be boiled frogs — happy, boiled frogs.

Jon Peddie Research is a technically oriented marketing, research, and management consulting firm. Based in Tiburon, California, JPR provides specialized services to companies in high-tech fields including graphics hardware development, multimedia for professional applications and consumer electronics, entertainment technology, high-end computing, and Internet access product development.
