Copyright Robert Twomey

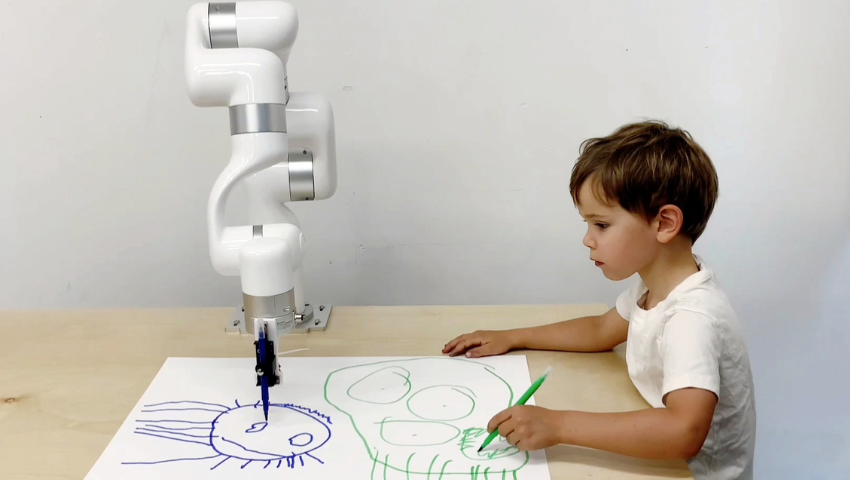

This Art Papers project, “Three Stage Drawing Transfer,” creates a mental-physical-visual circuit between a generative adversarial network (GAN), a co-robotic arm, and a 5-year-old child. Moving from training images to the latent space of a GAN, through pen on paper, to a final human interpreter, it establishes multiple translations, confounding easy definitions of learning, creativity, and expression. Robert Twomey presents during an Art Papers roundtable session at SIGGRAPH 2022 on Monday, 8 August, from 2:30-2:35 pm PDT. His work is also on display in the Art Gallery, 8-11 August, in the Experience Hall.

SIGGRAPH: Tell us about the process of developing “Three Stage Drawing Transfer.” What inspired your project?

Robert Twomey (RT): This project began with a simple desire to train a generative adversarial network (GAN) to produce children’s drawings. It builds on my long-term interest in children’s drawing and children’s babble as proto-language: evocative, expressive, but lacking the formal structures of written language and the learned conventions of graphic representation. I had been working with contemporary GAN architectures, and I trained one of these networks (StyleGAN2) on a well-known collection of children’s art (the Rhoda Kellogg Child Art Collection). This generative network is the core of the project, explicitly juxtaposing human creativity (the thousands of children’s drawings) with machine creativity (the GAN).

The second major aspect of the work, using the GAN in an interactive robot-child performance, came later. I was interested in working with my 5-year-old son (a very enthusiastic artist!) on a project, and I had access to a robotic arm that could render these GAN-generated images and work with my son as a partner to make collaborative drawings. The framing and title for the piece come from a Dennis Oppenheim performance in which the artist collaborated with his son in drawing performances. Here I believe I am doing something similar, with a greater degree of technological estrangement/entanglement. I viewed this project as a way to think about human learning and imagination and to develop machines for living, exploring the new ways we might incorporate robots and other emerging technologies into our everyday domestic experiences.

SIGGRAPH: What was the biggest challenge you faced in its development?

RT: This project is driven by a rather complex chain of processes: generating images from a fine-tuned machine-learning (ML) model, using those images to drive custom motion-control software for a co-robotic arm, incorporating feedback from some kind of vision or perception system, and motivating a 5-year-old child to participate in the project. You can guess that motivating the 5-year-old was challenging, though we solved that with M&Ms. The rest of the piece is made of elements I have worked with individually (mechatronic automation, robot control, computer vision, GANs), but lashed up into a new configuration. Much of the difficulty had to do with the interoperability of software platforms, toolchains, hardware, etc., between the robotics, the ML, and the concrete physical drawing setup.

SIGGRAPH: How can “Three Stage Drawing Transfer” contribute to current thinking about the design and use of AI systems?

RT: This project investigates ideas of co-production and co-habitation between humans and machines. In a loose sense, it relates to GAN-guided fabrication and design processes, though this project is less driven toward particular practical outcomes (say, plans for a building or designs for shoes) and instead roves freely across a broad space of creative activity: childlike drawings and scribbles. These outputs don’t have use value in and of themselves, but they do function as evocative expressions. Current text-to-image tools, such as Midjourney and DALL·E, demonstrate ways that practitioners incorporate AI into the design cycle and the visualization of ideas, but the creative AI architecture itself is seldom explicitly juxtaposed with the human creativity and imagination that drive the system. Here I wanted to make that juxtaposition explicit, to stage a concrete encounter between GAN-based and human-based imagination, child and robot, natural and artificial intelligence and creativity. I believe these kinds of experimental interactions can help us reflect on the capabilities (and qualities) of each of those parts.

SIGGRAPH: What did you learn about your son’s thought processes, and what did he learn about robotics? How did that inform the project?

RT: My son is captivated by the idea of robots, so the physical presence of the robot added a particularly compelling dimension for him. In a different experiment, I had printed out GAN-generated variations of his drawings on paper as starting points for collaborative drawings, and I found that he had no interest in building on those static images. However, he is compelled by the robot as a presence, and he had no problem responding to it when it was drawing in their shared space. I’ve become particularly interested in the semantic dimensions of the output: both how he might label or describe the drawings made by the robot (or any samples from the GAN), and how he might title and narrate his own drawings. For him, at least, the drawings coexist with this supplementary narrative text, which might work with (or against) my impressions of the drawings themselves. He also viewed drawing with the robot as a competition and was curious whether he had beaten it. Obviously, drawing isn’t a zero-sum game, and there are no winners, but it was interesting that he was motivated by this kind of John Henry-versus-the-machine framing. I think he will be eager to interact with the robot in more varied ways.

SIGGRAPH: What do you find most exciting about the final product that you will present at SIGGRAPH 2022?

RT: The initial stages of this project focused on machine learning and human imagination in a relatively static and narrow way: how would a neural network learn to represent this diverse set of children’s drawings (which are themselves varied, concrete records of human imagination and expression on the edge of intelligibility)? And how would my son (a human child) respond to those machine-generated replicas? The drawings and documentation presented at SIGGRAPH show the results of those first dataset-to-GAN/robot-to-child interactions and also provide some insight into how the neural network learns to make the images. I’m very excited to share these results. I will also show new work facilitating more fluid, real-time interaction. Equipping the project with a vision system and building some basic gestures and embodied behaviors into the arm opens up new possibilities for more natural, real-time collaborative drawing. This interactive installation will be on display in the Art Gallery program in addition to my talk.

SIGGRAPH: What’s next for “Three Stage Drawing Transfer”?

RT: In future development, I am interested in exploring the semantic dimensions of the content: what the images are. This can be approached both from the machine/AI perspective (using text-to-image translation and class-conditioned generation) and from the human perspective (working with the descriptions that the human collaborator provides of their work). I’d like to produce a series of these collaborative human-machine drawings around particular subjects or topics, prompting the process in more explicit ways. Beyond collaborative drawing and creative AI, I hope this work leads to a range of new experiments exploring roles for robots in our intimate spaces. I expect to stage other experimental encounters between robots and human and non-human occupants (pets, etc.) in domestic space to see how robots can alter, intervene in, and illuminate the conditions of our cohabitation with machines.

Want to learn more about this art research? Register to join us in Vancouver or virtually for SIGGRAPH 2022! Now through 11 August, save $30 on new registrations using the code SIGGRAPHSAVINGS.

Robert Twomey is an artist and engineer exploring poetic intersections of human and machine perception, particularly how emerging technologies transform sites of intimate life. He has presented his work at SIGGRAPH (Best Paper Award), CVPR, ISEA, NeurIPS, and the Museum of Contemporary Art San Diego, and he has been supported by the National Science Foundation, the California Arts Council, Microsoft, Amazon, HP, and NVIDIA. He is an assistant professor of emerging media arts with the Johnny Carson Center, University of Nebraska-Lincoln, and an artist in residence with the Arthur C. Clarke Center for Human Imagination, UC San Diego. Find Twomey on Instagram and Twitter.