Over the past year, how often has a “frozen” (virtually, at least) colleague or friend caused frustration in an otherwise great meeting or discussion? NVIDIA’s SIGGRAPH 2021 Real-Time Live! Best in Show-winning demo, “I am AI: AI-driven Digital Avatar Made Easy,” sought to solve that frustration and wowed our virtual audience and judges in the process. We caught up with Ming-Yu Liu (director of research, NVIDIA) to learn more about the deep learning-based system.

SIGGRAPH: Share a bit about the background of this project. What inspired the initial research?

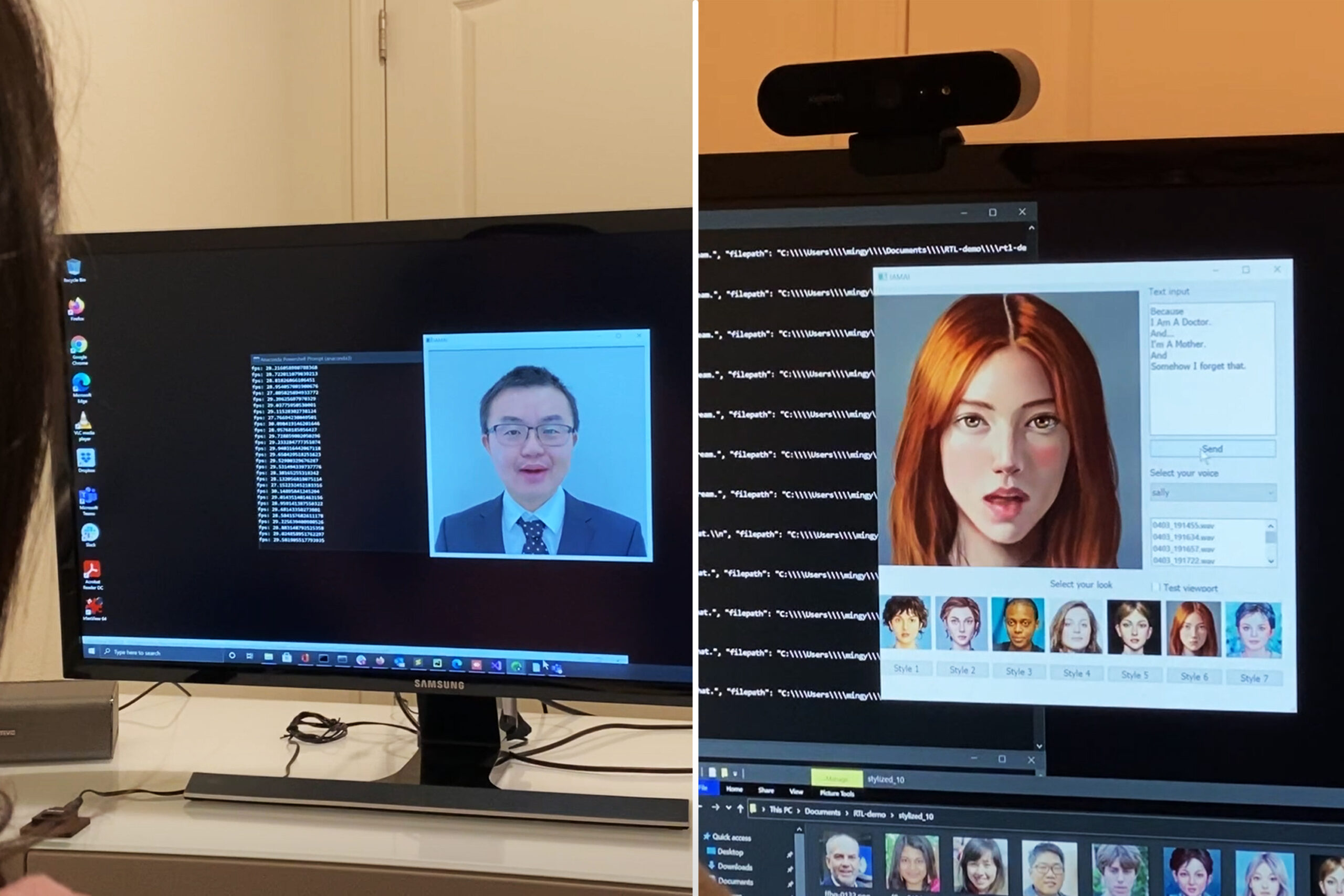

Ming-Yu Liu (ML): We relied on video conferencing for brainstorming and collaboration, and one of my colleagues had a poor internet connection at his place. Sometimes, we’d be talking about an exciting research idea when the connection dropped and the video feed froze. This caused some frustration. Our research was on synthesizing visual content, so we started to imagine how we could use it to make video conferencing better. We hypothesized that if we could create a digital avatar from a single photo of a person, we could achieve very low-bandwidth video conferencing, because we would only need to send the information required to animate the avatar during a video call. Beyond video conferencing, the technology can be used for storytelling, virtual assistants, and many other applications. (Watch the demo below!)

SIGGRAPH: When it came to executing the idea for your demonstration, talk about the challenges faced and overcome. What was the most difficult part to produce/practice? How did you work across locations?

ML: We faced a couple of challenges. Our demo was about using our AI digital avatar creation technology for video conferencing, and we had two main demonstrators. One played the role of the interviewer, and the other played the role of the interviewee. This setting required four important view angles: the two screen views of the two demonstrators’ computers and the two frontal views of the two demonstrators. As these two demonstrators had to be physically located in two different rooms, we had to use multiple cameras and screen captures for filming the demo. We spent a lot of time testing different camera views and transitions just to make the demo more interesting. All of the above required a large team operating in the same location. Fortunately, all the team members were very excited about the demo.

SIGGRAPH: Explain the technologies that were used to produce the end product.

ML: There are three main technologies:

- The first is a text-to-speech technology called RAD-TTS. It uses AI to convert user-input text to speech audio.

- The second is an audio-to-CG-face-video technology called Audio2Face. It uses a deep network to convert speech audio to the facial motions of a CG character. The output is a CG face video.

- The third is a facial motion retargeting technology that uses a GAN to transfer the facial motion in the CG face video to a still photo of a target person or cartoon avatar. The output is a face video of the target person or cartoon avatar performing the motion of the CG character in the CG face video. The AI model creates realistic digital avatars from a single photo of the subject, with no 3D scan or specialized training images required.
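For readers who think in code, the way the three stages chain together can be sketched roughly as below. This is only an illustration of the data flow described above, not NVIDIA's implementation: the function names (`text_to_speech`, `audio_to_cg_face`, `retarget_to_photo`, `run_pipeline`), the data classes, the sample rate, the frame rate, and the placeholder outputs are all assumptions made up for this sketch, and no real RAD-TTS or Audio2Face API is called.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Audio:
    samples: List[float]
    sample_rate: int


@dataclass
class FaceVideo:
    frames: List[str]  # placeholder frame labels instead of real images
    fps: int


def text_to_speech(text: str, sample_rate: int = 16000) -> Audio:
    """Hypothetical stand-in for stage 1 (text to speech audio).

    Emits silence, 0.1 s per character, purely so the sketch runs.
    """
    samples_per_char = sample_rate // 10
    return Audio(samples=[0.0] * (len(text) * samples_per_char),
                 sample_rate=sample_rate)


def audio_to_cg_face(audio: Audio, fps: int = 30) -> FaceVideo:
    """Hypothetical stand-in for stage 2 (speech audio to CG face video).

    Produces one labeled placeholder frame per 1/fps seconds of audio.
    """
    n_frames = len(audio.samples) * fps // audio.sample_rate
    return FaceVideo(frames=[f"cg_frame_{i}" for i in range(n_frames)], fps=fps)


def retarget_to_photo(cg_video: FaceVideo, photo_path: str) -> FaceVideo:
    """Hypothetical stand-in for stage 3 (retarget CG motion onto one photo).

    Pairs the single still photo with each CG frame's motion.
    """
    frames = [f"{photo_path}<-{frame}" for frame in cg_video.frames]
    return FaceVideo(frames=frames, fps=cg_video.fps)


def run_pipeline(text: str, photo_path: str) -> FaceVideo:
    """Chain the three stages: text -> audio -> CG face video -> avatar video."""
    audio = text_to_speech(text)
    cg_video = audio_to_cg_face(audio)
    return retarget_to_photo(cg_video, photo_path)


if __name__ == "__main__":
    video = run_pipeline("Hello", "portrait.png")
    print(len(video.frames), "frames at", video.fps, "fps")
```

The point of the sketch is the shape of the pipeline: only text goes in at one end and only a single photo is needed at the other, with each stage consuming the previous stage's output.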

To learn more about these technologies go here.

SIGGRAPH: What’s next for “I am AI”? What do you see as the future for the technology? Will it be made available publicly?

ML: We are working on improving the technologies in the “I am AI” demo. A version of the audio-to-face conversion technology that animates a face mesh using audio inputs is available in the NVIDIA Audio2Face app.

SIGGRAPH: Tell us about a favorite SIGGRAPH memory from a past conference.

ML: My favorite SIGGRAPH memory is “The Making of Marvel Studios’ ‘Avengers: Endgame’” session at SIGGRAPH 2019. As a big fan of Marvel superheroes and a beginner in computer graphics, I was very excited to see producers from world-renowned VFX studios share their experiences and journeys in making the “Endgame” movie. I was deeply impressed and motivated to create an amazing technology that could be used in a movie one day.

SIGGRAPH: What advice do you have for someone who wants to submit to Real-Time Live! for a future SIGGRAPH conference?

ML: Do dry runs and try to make each one better than the previous one. Real-Time Live! is the best platform to demonstrate your coolest real-time computer graphics technology. People expect unseen, brand-new technologies, and you only get a few minutes to present yours live. As new technologies are often unstable, the dry runs will help you identify issues so that you can improve the robustness of your demo. You also need to work hard to make sure the demo helps your audience understand the tech and why it is important. Watching the recordings of the dry runs helps improve the presentation.

Registration to access the virtual SIGGRAPH 2021 conference closes next Monday, 18 October. Don’t miss your chance to check out other award winners like “I am AI”! Plus, you can hear more from Liu and his team for free when you sign up for GTC, 8-11 November.

Ming-Yu Liu is a distinguished research scientist and a director of research at NVIDIA. His research focuses on deep generative models and their applications, and his ambition is to give machines super-human imagination so that they can better assist people in creating content and expressing themselves. Liu likes to put his research into people’s daily lives, and NVIDIA Canvas/GauGAN and NVIDIA Maxine are two products enabled by his research. He also strives to make the research community better: he frequently serves as an area chair for various top-tier AI conferences, including NeurIPS, ICML, ICLR, CVPR, ICCV, and ECCV, and organizes tutorials and workshops at these conferences. Empowered by many, Liu has won several major awards in his field, including two SIGGRAPH Best in Show awards.