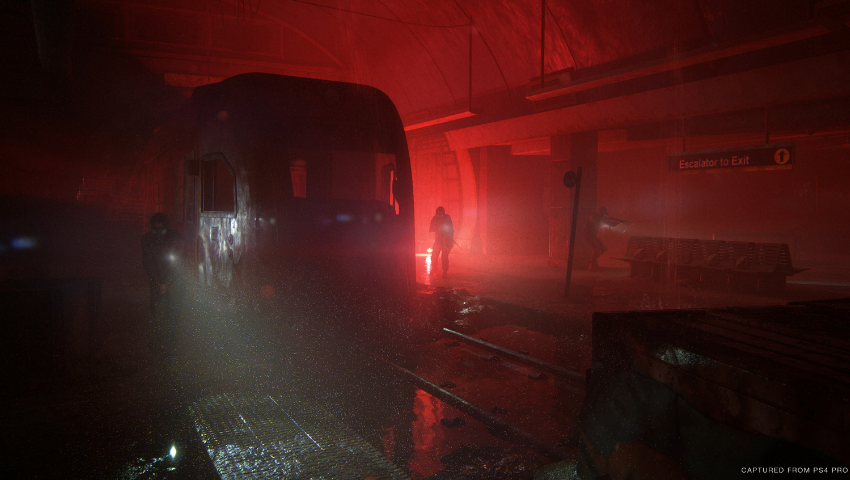

Image credit: Naughty Dog LLC. Screenshot from “The Last of Us Part II”, captured on a PlayStation 4 Pro system.

After the success of Naughty Dog’s “The Last of Us,” a 2013 game in which characters Joel and Ellie travel across a post-apocalyptic United States, “The Last of Us Part II” was released in June 2020. “Part II” was also featured during several SIGGRAPH 2020 presentations this summer. We caught up with Artem Kovalovs (game programmer, Naughty Dog) to learn more about what the team did differently in developing “Part II,” how visual effects like volumetric fog were created, and how fans are receiving this highly anticipated sequel.

SIGGRAPH: Share some background on the making of “The Last of Us Part II.” What did you do differently to develop Part II?

Artem Kovalovs (AK): A lot of new technology development went into the second installment of “The Last of Us.” As a studio, we always push our engine technology forward. From the beginning, we knew that we needed a lot of new features to achieve the desired look and feel of the game. And that applied not only to graphics but to all other game engine systems, both offline and real-time. Whole engine systems were improved. This project was our biggest and most ambitious yet, and pulling it off on PlayStation 4 took a lot of innovation and effort from everyone at the studio.

As for my contributions to the game, I was responsible for the technology supporting visual effects such as atmosphere and fog, particles, character blood effects, and snow. Of course, each aspect of the game is the result of collaboration between multiple disciplines. I am grateful to the many talented people who worked with me on these features.

SIGGRAPH: Tell us about the GPU particle framework you developed for this game. How does it add to the experience of “The Last of Us Part II”?

AK: We started porting particles from the CPU to the GPU for performance reasons. Particles are great candidates for offloading work to the GPU. As that work came along, we started seeing that new techniques were possible with what the GPU gave us access to, specifically screen-space textures. A great deal of useful information is written into screen-space buffers as a frame is rendered, and we realized we could use that information for spawning and simulating particles.
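
As a rough illustration of spawning from screen-space data, the sketch below reconstructs view-space positions from a depth buffer and spawns particles only on pixels that actually contain geometry. It is a minimal CPU-side sketch, not Naughty Dog's implementation: the `Camera` fields, the assumption that the buffer stores linear view depth, the right-handed view space, and the helper names are all hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Camera:
    width: int    # render target width in pixels
    height: int   # render target height in pixels
    fov_y: float  # vertical field of view in radians
    far: float    # depth value written for "no geometry" (sky/cleared)


def pixel_to_view(cam, px, py, depth):
    """Reconstruct a view-space point from a pixel and its linear depth.

    Assumes a right-handed view space looking down -Z, a symmetric
    perspective projection, and a top-left pixel origin.
    """
    ndc_x = 2.0 * (px + 0.5) / cam.width - 1.0
    ndc_y = 1.0 - 2.0 * (py + 0.5) / cam.height
    tan_half = math.tan(cam.fov_y * 0.5)
    aspect = cam.width / cam.height
    return (ndc_x * depth * tan_half * aspect,
            ndc_y * depth * tan_half,
            -depth)


def spawn_on_visible_surfaces(cam, depth_buffer, count, rng, max_tries=10):
    """Pick random pixels that contain geometry and return view-space
    spawn positions lying on the visible surface."""
    spawns = []
    for _ in range(count * max_tries):
        if len(spawns) == count:
            break
        px = rng.randrange(cam.width)
        py = rng.randrange(cam.height)
        d = depth_buffer[py][px]
        if d < cam.far:  # skip sky / cleared pixels
            spawns.append(pixel_to_view(cam, px, py, d))
    return spawns
```

On a GPU the same idea would typically run one thread per spawn request, reading the depth (and possibly normal) buffers the renderer has already produced, which is what makes it cheap.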

Dynamic drip simulation is a great example of this. We used the screen-space information to simulate liquid drips on complex geometry, such as a moving character. The drips were strings of connected GPU particles living on the surface of the geometry. This approach was very cheap because it reused existing screen-space information, yet it enhanced character effects such as water dripping off characters in the rain or realistic blood flowing from wounds. We spawned droplets when a dynamic drip reached a certain geometry curvature.
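
The interview does not spell out the drip update, so the following is only a hypothetical sketch of the idea: the head of a drip chain slides along the surface by projecting gravity onto the tangent plane given by the surface normal, and a droplet is spawned when the normal turns sharply between steps, used here as a stand-in for "reaching a certain curvature." The `sample_normal` callback is an assumed placeholder for reading a screen-space normal buffer; all names and parameters are illustrative.

```python
import math

GRAVITY = (0.0, -9.8, 0.0)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(c / length for c in v)


def slide_direction(normal):
    """Project gravity onto the surface's tangent plane so the drip runs
    along the surface instead of falling through it."""
    g_n = dot(GRAVITY, normal)
    return normalize(tuple(g - g_n * n for g, n in zip(GRAVITY, normal)))


def step_drip(chain, sample_normal, speed, dt, curvature_threshold):
    """Advance the head of a drip one step.

    chain: list of connected points, head last (a stand-in for a string of
        GPU particles).
    sample_normal: hypothetical stand-in for reading the normal buffer at
        a point's screen position.
    Returns a droplet spawn position when the surface normal turns by more
    than curvature_threshold radians between steps, otherwise None.
    """
    head = chain[-1]
    n0 = sample_normal(head)
    direction = slide_direction(n0)
    new_head = tuple(p + d * speed * dt for p, d in zip(head, direction))
    chain.append(new_head)
    n1 = sample_normal(new_head)
    turn = math.acos(max(-1.0, min(1.0, dot(n0, n1))))
    return new_head if turn > curvature_threshold else None
```

On a surface facing straight down, the projected gravity vanishes and the drip stops advancing, which is roughly where a real drop would detach; a fuller version would also keep the trailing points of the chain attached to the moving surface.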

Dynamic snow that falls and attaches to characters and the environment is another great example. We used screen space for the collision and attachment simulation. Collisions with real 3D geometry are expensive, but transferred to screen space, they become much cheaper.
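
Kovalovs doesn't describe the exact collision test. A common screen-space scheme, assumed here, projects each particle to a pixel, compares its depth with the depth buffer, and treats a particle that has just slipped behind the visible surface as a hit, snapping it back onto that surface to "attach" it. The `thickness` parameter and all names below are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    width: int
    height: int
    fov_y: float  # vertical field of view in radians


def project_to_pixel(cam, p):
    """Project a right-handed view-space point (camera looks down -Z) to
    (px, py, linear_depth), or None if it is behind the camera or off-screen."""
    x, y, z = p
    depth = -z
    if depth <= 0.0:
        return None
    tan_half = math.tan(cam.fov_y * 0.5)
    aspect = cam.width / cam.height
    ndc_x = x / (depth * tan_half * aspect)
    ndc_y = y / (depth * tan_half)
    if abs(ndc_x) > 1.0 or abs(ndc_y) > 1.0:
        return None
    px = min(int((ndc_x + 1.0) * 0.5 * cam.width), cam.width - 1)
    py = min(int((1.0 - ndc_y) * 0.5 * cam.height), cam.height - 1)
    return px, py, depth


def collide_and_attach(cam, depth_buffer, p, thickness):
    """Screen-space collision: a particle that has moved just behind the
    depth-buffer surface is treated as hitting it and is snapped back onto
    the surface along the view ray. Returns the attached position or None.

    thickness guards against false hits on objects far in front of the
    particle; off-screen particles get no collision because the depth
    buffer holds no information for them.
    """
    hit = project_to_pixel(cam, p)
    if hit is None:
        return None
    px, py, depth = hit
    surface = depth_buffer[py][px]
    if surface <= depth <= surface + thickness:
        scale = surface / depth  # same pixel, moved to the surface depth
        return tuple(c * scale for c in p)
    return None
```

The trade-off is the one the answer hints at: a single texture read replaces a 3D geometry query, at the cost of missing anything that is off-screen or hidden behind other geometry.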

At Naughty Dog, we pay close attention to detail. No single effect makes something look good; the final look comes from many systems working together. GPU particles and the new techniques they enabled allowed us to add detail and fidelity to the game that we couldn’t before.

SIGGRAPH: What techniques did you use to produce the volumetric fog? What challenges did you face in this process?

AK: We started out with a standard approach: fitting a 3D texture into view space, voxelizing it with a “froxel” (frustum voxel) grid. The main issue for us was not just implementing this approach but pushing for higher resolution and fidelity within our performance constraints. Our previous implementation looked good and produced sharp rays, but it was limited because its final result was stored in a 2D texture. It looked great on its own, but it was challenging to integrate with the rest of the world, such as transparent objects and particles. The challenge was adding a third dimension without losing much of the quality we had, while keeping performance reasonable.
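
To make the froxel idea concrete, here is a small sketch of how a screen pixel and a view depth might map to a froxel index. The exponential depth slicing is an assumption (a common choice because it spends more slices near the camera); the interview does not say how Naughty Dog distributes its slices, and the function names are hypothetical.

```python
import math


def depth_to_slice(depth, near, far, num_slices):
    """Map linear view depth to a froxel slice using an exponential
    distribution, which spends more slices close to the camera."""
    depth = min(max(depth, near), far)
    t = math.log(depth / near) / math.log(far / near)
    return min(int(t * num_slices), num_slices - 1)


def slice_to_depth(k, near, far, num_slices):
    """Inverse mapping: linear depth at the near boundary of slice k."""
    return near * (far / near) ** (k / num_slices)


def froxel_index(px, py, depth, screen, grid, near, far):
    """Return the (i, j, k) froxel that contains a pixel at a given depth.

    screen: (width, height) in pixels; grid: (w, h, d) froxel counts.
    Any shaded point, including a transparent surface or a particle, can
    use this to look up fog at its own depth.
    """
    sw, sh = screen
    gw, gh, gd = grid
    i = min(int(px * gw / sw), gw - 1)
    j = min(int(py * gh / sh), gh - 1)
    return i, j, depth_to_slice(depth, near, far, gd)
```

The depth index `k` is what a 2D fog texture lacks: with only a screen-space result, a transparent surface halfway into the fog has no correct value to read.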

A major aspect of this game is indirect lighting. A lot of levels depended on ambient light for their look. A big part of this was volumetric fog, which created the atmosphere that tied everything together.

We also had a mixture of dynamic lights, such as flashlights and torches, as well as sunlight, all of which the new system had to support.
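
The interview doesn't detail how light contributions are combined. A common approach in froxel-based fog, sketched here as an assumption, is to inject the ambient, sun, and local-light scattering into each froxel and then integrate front to back along each column of slices. The result stores accumulated light and transmittance per slice, so opaque surfaces, transparents, and particles can all composite fog at their own depth. Single-channel values and all names are illustrative.

```python
import math


def integrate_froxel_column(scattering, extinction, boundaries):
    """Front-to-back integration of one column of froxels.

    scattering[k]: light scattered toward the camera per unit length in
        slice k, i.e. the sum of ambient, sun and local-light terms that
        were injected into that froxel.
    extinction[k]: extinction coefficient in slice k (1 / world units).
    boundaries: slice boundary depths, len(scattering) + 1 entries.
    Returns, for each slice, (accumulated_light, transmittance) measured
    from the camera to the far boundary of that slice.
    """
    light = 0.0
    transmittance = 1.0
    result = []
    for k, (s, sigma) in enumerate(zip(scattering, extinction)):
        length = boundaries[k + 1] - boundaries[k]
        slice_t = math.exp(-sigma * length)
        # Integrate the constant in-scattering analytically over the slice;
        # unlike multiplying by the slice length, this stays energy-
        # conserving for thick slices with dense fog.
        if sigma > 0.0:
            slice_light = s * (1.0 - slice_t) / sigma
        else:
            slice_light = s * length
        light += transmittance * slice_light
        transmittance *= slice_t
        result.append((light, transmittance))
    return result


def apply_fog(surface_radiance, fog_entry):
    """Composite fog over a surface (opaque or transparent) at a slice."""
    light, transmittance = fog_entry
    return surface_radiance * transmittance + light
```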

A lot of optimization went into making the system perform well on PlayStation 4. We were able to push the resolution of the fog grid quite high, sometimes to more than 5 million froxels, which gave us good-looking, sharp light shafts when needed.
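
For a sense of scale, the arithmetic below shows one hypothetical grid that lands just above 5 million froxels and what a single volume of that size costs in memory. The tile size, slice count, and 8-byte RGBA16F format are assumptions for illustration, not Naughty Dog's actual configuration.

```python
def froxel_budget(grid_w, grid_h, grid_d, bytes_per_froxel):
    """Return (froxel_count, bytes) for one 3D fog volume."""
    count = grid_w * grid_h * grid_d
    return count, count * bytes_per_froxel


# Hypothetical grid: 8x8-pixel tiles at 1920x1080 with 160 depth slices,
# stored as RGBA16F (8 bytes per froxel).
count, size = froxel_budget(1920 // 8, 1080 // 8, 160, 8)
# count = 240 * 135 * 160 = 5,184,000 froxels
# size  = 41,472,000 bytes, about 39.6 MiB for a single volume
```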

SIGGRAPH: This sequel was highly anticipated. What feedback have you received so far from “The Last of Us Part II” players?

AK: Across the board, everyone was blown away by the achievements of this game. To be honest, I was surprised by how well the final product ended up looking. Naughty Dog has really pushed the boundaries of what is possible, and that is evident from fan reactions.

The game has received many awards, and that is a testament to the quality of this game.

SIGGRAPH: What do you hope players and developers take away from the work you’ve done on “The Last of Us Part II”?

AK: I am a proponent of sharing technological advancements in the developer community. I see it as yet another advantage of working in the games industry. In my SIGGRAPH 2020 Talks, I shared a lot of technical detail on what it took to get the results, and I hope that this experience will help others to push their games forward and will ultimately become another tiny step pushing the whole industry forward.

SIGGRAPH: Share a bit about your experience attending the virtual SIGGRAPH 2020. How did it go? Any favorite memories or sessions you enjoyed?

AK: Virtual SIGGRAPH was definitely a new experience. In general, I believe it went very well. I enjoyed the interactive chat window on the Talks’ webpages, where I could engage with viewers and answer questions. It was a great way to supplement the technical information in the Talks. There were many great Talks, and I walked away inspired and humbled by how much knowledge there is in the graphics field and how much is left to learn.

Are you ready to inspire others with your discoveries and advancements in computer graphics and interactive techniques? Submit your Games-related content to SIGGRAPH 2021.

Artem Kovalovs graduated from the University of Southern California (USC) and has been part of the games industry for more than 10 years. He has worked at Naughty Dog for the last eight years as a game programmer, most recently focusing on graphics for “The Last of Us Part II.” He has also been an adjunct faculty member at USC for more than nine years.