All images are property of The Walt Disney Company.

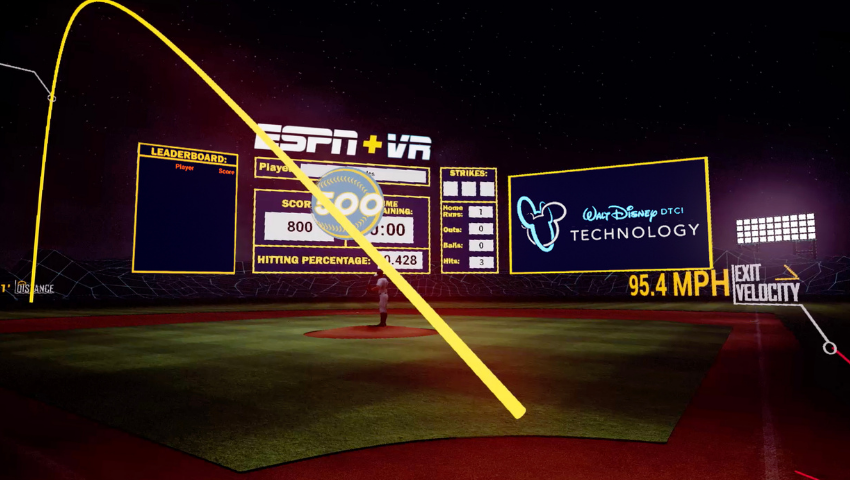

The Immersive Pavilion brought baseball to SIGGRAPH 2020 with ESPN VR Batting Cage, an immersive, interactive experience that engages sports fans with live pitch data. We connected with project contributors from the Content Technology team of The Walt Disney Company’s Direct-to-Consumer & International (DTCI) segment to learn more about the inspiration behind and development of the experience and how it aimed to create a dynamic mixed reality experience for baseball fans.

SIGGRAPH: Share some background on ESPN VR Batting Cage. What inspired the development of this immersive experience?

The Team: The VR Batting Cage was initially a side project — we were curious about how well the K-Zone 3D and Hit Track projects and assets would translate to a different perspective from the more traditional, two-dimensional (2D) content we were producing for broadcast and digital. Because the projects were built in Unreal Engine, and because the engine supports XR development, it was a natural direction for our exploratory work with data and real-time rendering. Initially, the VR experience was more about exploring the virtual stadium than interacting with data. Our team realized, though, as we considered how best to combine the K-Zone and Hit Track experiences, that the answer was simple: a baseball game. We narrowed our concept from a baseball game to a batting challenge, and decided to create an immersive experience that allowed players to connect with real baseball data in a new way — by stepping up to the plate themselves.

SIGGRAPH: Tell us about the process of developing the experience. How many people were involved? How long did it take?

The Team: The game itself was created from continuous iteration and development on one initial concept — can the player hit a professional pitch? First, we created a virtual bat and associated it with a VR hand controller. Then, we defined the collision between virtual bat and ball as the glue, binding together K-Zone pitches with Hit Track hits. Once a player was able to swing and hit the incoming baseballs, we were ready to refine the gaming experience. Our team’s approach to iterative development for the project was to informally test the VR experience as often as possible. With each new addition or change to the game’s logic, we had opt-in demos for whoever on the team was available that day. The time limit for batting rounds, outfield leaderboard, and tournament competition mode were all created because of team input.
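To make the bat-and-ball "glue" concrete, here is a minimal Python sketch of one common way to test whether a ball touches a bat: treat the bat as a capsule (a line segment with a radius) and the ball as a sphere. This is an illustration only, not the team's Unreal implementation. The function names, coordinates, and radii are all assumptions introduced for the example.

```python
import math

def closest_point_on_segment(p, a, b):
    """Return the point on segment a-b that is closest to point p (3D tuples)."""
    ab = tuple(bi - ai for ai, bi in zip(a, b))
    ap = tuple(pi - ai for ai, pi in zip(a, p))
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        return a  # degenerate segment: handle and tip coincide
    # Project p onto the segment and clamp to its endpoints.
    t = sum(x * y for x, y in zip(ap, ab)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    return tuple(ai + t * c for ai, c in zip(a, ab))

def bat_hits_ball(ball_center, ball_radius, bat_handle, bat_tip, bat_radius):
    """Sphere-vs-capsule overlap test (hypothetical helper, units in meters)."""
    closest = closest_point_on_segment(ball_center, bat_handle, bat_tip)
    return math.dist(ball_center, closest) <= ball_radius + bat_radius

# Assumed sizes: a 1 m bat with a 3 cm radius and a ball with a 3.7 cm radius.
handle, tip = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
print(bat_hits_ball((0.5, 0.05, 0.0), 0.037, handle, tip, 0.03))  # ball grazing the barrel
print(bat_hits_ball((0.5, 0.20, 0.0), 0.037, handle, tip, 0.03))  # ball well clear of the bat
```

In a game engine, a test like this would typically be handled by the built-in physics and collision system; the sketch only shows the geometry behind such a check.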

SIGGRAPH: What was the most challenging aspect of developing ESPN VR Batting Cage?

The Team: Besides hitting one of the full-speed pitches? (This actually was an issue early in development! We weren’t able to test our method for calculating the ball’s exit velocity until we slowed the pitches down to quarter and half speeds so we could actually hit them.)
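A rough back-of-the-envelope sketch shows why slowing the pitches helps. It assumes a hypothetical 90 mph pitch, a release point about 55 feet from the plate, and constant speed with no drag; none of these figures come from the team.

```python
MPH_TO_FTPS = 5280 / 3600  # feet per second in one mile per hour

def time_to_plate(speed_mph, distance_ft=55.0, speed_scale=1.0):
    """Seconds for a constant-speed pitch to cover distance_ft.

    speed_scale mimics slowing the pitch down (0.5 = half speed).
    All default values are illustrative assumptions.
    """
    return distance_ft / (speed_mph * speed_scale * MPH_TO_FTPS)

for scale in (1.0, 0.5, 0.25):
    seconds = time_to_plate(90.0, speed_scale=scale)
    print(f"{scale}x speed: {seconds:.2f} s to reach the plate")
```

Under these assumptions the batter has roughly 0.42 seconds at full speed, 0.83 seconds at half speed, and 1.67 seconds at quarter speed.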

But really, we think the most challenging aspect of the project was making the leap from a virtual to mixed reality experience. As a team, we had combined experience with individual elements of the setup, like optimizing our Unreal project for VR, working with broadcast technologies like green screens and camera tracking, and exporting video from Unreal with an AJA I/O card, but bringing them together into one smooth solution was difficult. Finding a way to output two separate feeds with different frame rates into the VR headset and to the AJA card was particularly challenging.

We solved the frame rate problem by creating a separate blueprint actor to house the spectator camera and by forcing this blueprint to “tick” every 60th of a second, rather than every 90th. Ticking every 90th of a second had been necessary to provide the best (and least nauseating) experience in the headset, but the video output needed only 60 frames per second. This method allowed us to capture the spectator camera’s current view with each tick, to interpret these frames as a 60-fps video output, and to export that video from Unreal using an AJA media card.
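The idea of running one component at a slower tick rate than the main loop can be sketched in a few lines of Python. This is a simplified simulation, not Unreal code and not the team's blueprint: the `SpectatorCamera` class, its `advance` method, and the accumulator approach are assumptions made for illustration.

```python
from fractions import Fraction

class SpectatorCamera:
    """Hypothetical stand-in for the actor that owns the spectator camera."""

    def __init__(self, capture_hz):
        self.interval = Fraction(1, capture_hz)  # time between captures
        self.accumulated = Fraction(0)           # time since the last capture
        self.captured_at = []                    # timestamps of captured frames

    def advance(self, now, delta):
        """Called once per headset frame; captures only when the interval has elapsed."""
        self.accumulated += delta
        if self.accumulated >= self.interval:
            self.accumulated -= self.interval
            self.captured_at.append(now)

def run(render_hz=90, capture_hz=60, seconds=1):
    """Step a render loop at render_hz while the camera captures at capture_hz."""
    camera = SpectatorCamera(capture_hz)
    delta = Fraction(1, render_hz)  # exact fractions avoid float rounding drift
    for frame in range(1, render_hz * seconds + 1):
        camera.advance(frame * delta, delta)
    return camera

camera = run()
print(len(camera.captured_at))  # captures taken during 90 headset frames
```

Over one simulated second, the loop steps 90 headset frames while the camera records 60 captures. In this simple version the captures land on two of every three headset frames, so they are not evenly spaced in time; a production pipeline would need to account for that when treating the frames as a steady 60-fps feed.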

SIGGRAPH: Since the COVID-19 pandemic, professional baseball has looked a bit different than usual. How can an immersive experience like ESPN VR Batting Cage fill the void for sports fans?

The Team: A baseball fan’s sports experience may be confined to a TV or phone screen for the foreseeable future, but real-time data visualizations like K-Zone 3D and Hit Track can help create a more immersive 3D experience within a medium that’s typically passive and 2D. As XR experiences become a more typical way of engaging, we think that interactive and immersive projects like the Batting Cage may gain further traction as professional sports leagues, broadcast companies, and technologists are challenged to create more accessible, engaging content for fans.

SIGGRAPH: What’s next for ESPN VR Batting Cage?

The Team: There were quite a few ideas for improving the Batting Cage that our team brainstormed during development but haven’t yet implemented. We really would like to revisit the motion-capture sequences we used to animate the pitcher’s movements and capture different sequences for different types of pitch (e.g., fastball, curveball, or changeup). With motion capture gloves that can provide more detail than general finger position, we actually could vary the pitch sequences by body movement and specific finger placement on the baseball prior to release.

Right now, our team is applying the same recipe to other content, using Unreal Engine to generate real-time, data-driven renderings of other sports.

SIGGRAPH: Share a bit about your experience attending the virtual SIGGRAPH 2020. How did it go? Any favorite memories or sessions you enjoyed?

The Team: One of our favorites from SIGGRAPH 2020 was Maxon’s Exhibitor Session with Gavin Shapiro, “Cinema 4D as a Tool to Create Happiness Through Mathematics and Dancing Flamingos.” Learning about the thought process behind each of the mathematically driven, repeating animations that Gavin described in his talk was fantastic. The computer science drew us in because of the way it fuses creativity and art with technology, mathematics, and analytical thinking. Gavin also did a great job demonstrating that math concepts can not only be part of implementing an animation, but also can share the stage with dancing flamingos as its subject matter! His explanations and demonstrations were so engaging and easy to understand that he made his talk as entertaining as watching the flamingos.

We also really loved “The Future of Immersive Filmmaking: Behind the Scenes at Intel Studios” with Philip Krejov and Ilke Demir. It was fascinating to learn about the immersive filmmaking tools and how pieces of that workflow fit together. We all enjoy discovering more about how technology can immerse people in different experiences and allow people to interact and engage with stories and each other.

Over 250 hours of SIGGRAPH 2020 content is available on-demand through 27 October. Not yet registered? Registration remains open until 19 October — register now.

Meet the Team

Eliza D. McNair is a software engineer with the Content Technology team in The Walt Disney Company’s Direct-to-Consumer & International (DTCI) division. She graduated from Wellesley College with a B.A. in computer science, with honors in computer science for her thesis research in the field of educational virtual reality systems. Over the course of her time at ESPN and Disney DTCI, Eliza’s enthusiasm for the synergistic fields of computer vision, computer graphics, and data visualization has found an outlet in virtual storytelling.

Katherine Ham is a software engineer with the Content Technology team at The Walt Disney Company’s Direct-to-Consumer & International (DTCI) division. She received a B.A. in computer science with a minor in marine sciences from the University of North Carolina at Chapel Hill (Go Heels!). She started with the company as a member of ESPN’s VFX team. As a current member of the Content Technology team, she channels her enthusiasm for the power and art of storytelling into exploring the ways in which data can be used in visual storytelling.

Jason M. Black is a software engineer who creates data-driven experiences with content partners across The Walt Disney Company’s Direct-to-Consumer & International (DTCI) division. After spending nearly a decade building XR experiences and working in VFX for linear broadcast at ESPN, Jason has received multiple awards for his contributions to innovation, most recently the George Wensel Technical Achievement Emmy in 2019. Jason is excited about the future of personalized, interactive content and experiences. Jason holds a B.S. in computer animation from Full Sail University.

Christiaan A. Cokas is an idea generator with the Content Technology team in The Walt Disney Company’s Direct-to-Consumer & International (DTCI) division. He graduated from Roger Williams University with a B.A. in computer science. Over the course of his time at ESPN and Disney DTCI, Christiaan’s enthusiasm for real-time data and visual graphics has culminated in projects that tell stories in very visual ways.
