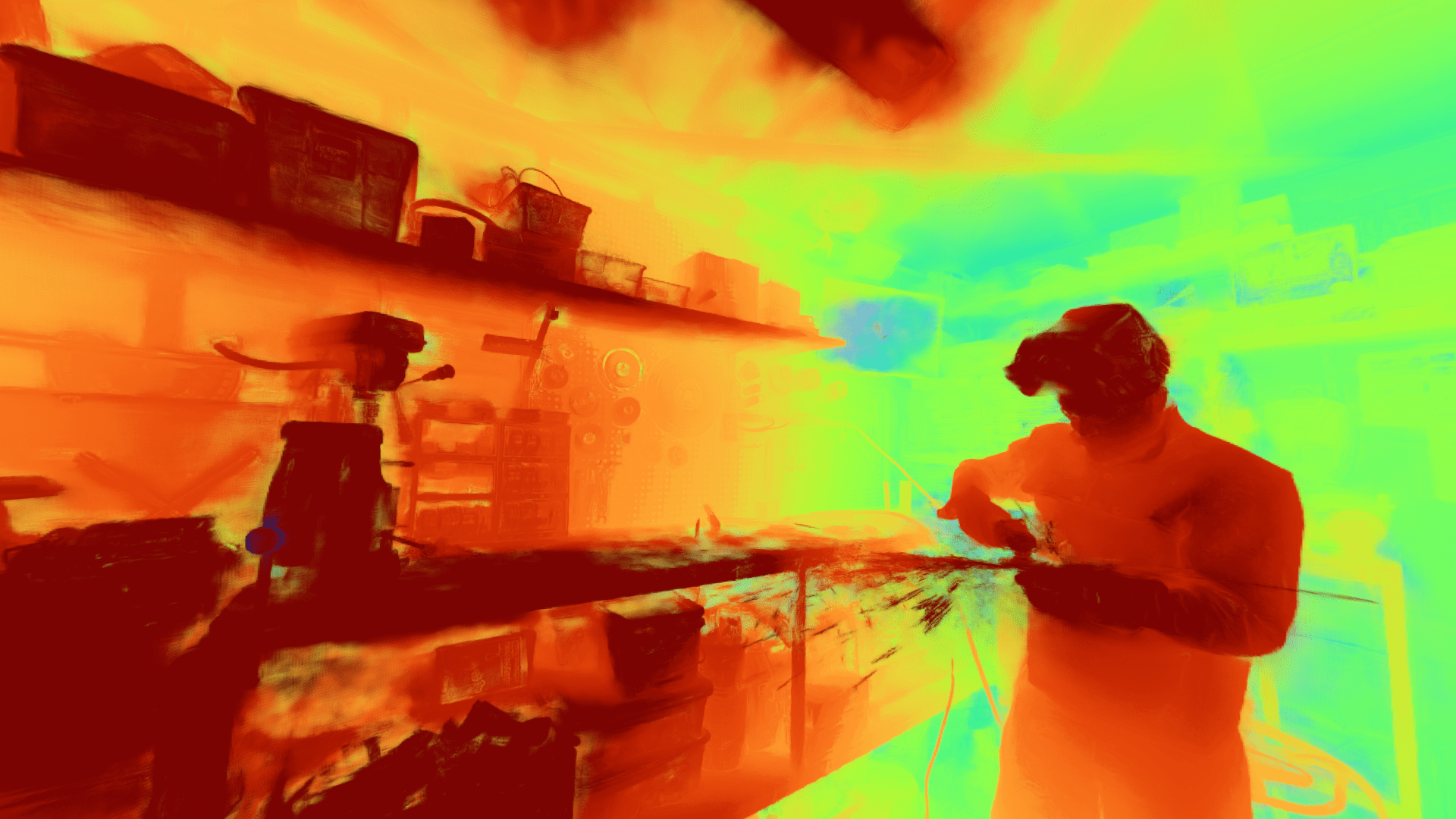

A colorized depth map used to compress the multi-sphere images generated by DeepView.

After announcing last week that Google’s Immersive Pavilion project, “DeepView Immersive Light Field Video”, has been named the Best in Show – Immersive Experience award winner for SIGGRAPH 2020, we caught up with one member of the team to dive into the origins of the project and their incredible progress toward making streamable light field video a reality.

SIGGRAPH: Talk a bit about the process for developing your project, “DeepView Immersive Light Field Video”. What was the inspiration?

Paul Debevec (PD): We wanted to create the most immersive and comfortable virtual reality experience possible, and we knew it would be crucial for users to not only be able to turn their head to look around, but to be able to move their head left/right and forward/back and see the appropriate changes in perspective in the scene. We knew that the light field research shown in the mid-1990s at SIGGRAPH offered the right way to think about recording the scenes with camera arrays, but we needed to figure out how to make this into a practical and immersive VR media solution. We showed our first results in this area at the SIGGRAPH 2018 Immersive Pavilion, where we demoed our SteamVR app “Welcome to Light Fields”. That work could immerse you in photoreal, 3D versions of amazing places like The Gamble House and Space Shuttle Discovery, but it could only record and display still scenes. To bring dynamic immersive light field content to life, we’ve had to develop new video camera rigs, view interpolation algorithms, and compression techniques to bring live-action immersive content — people and animals doing interesting things in interesting places — into people’s VR headsets.

SIGGRAPH: Let’s get technical. How did you use technology to develop the project? We understand there is also a Technical Paper that ties back to this project as well as a SIGGRAPH Labs demo. Can you share how they are related? Different?

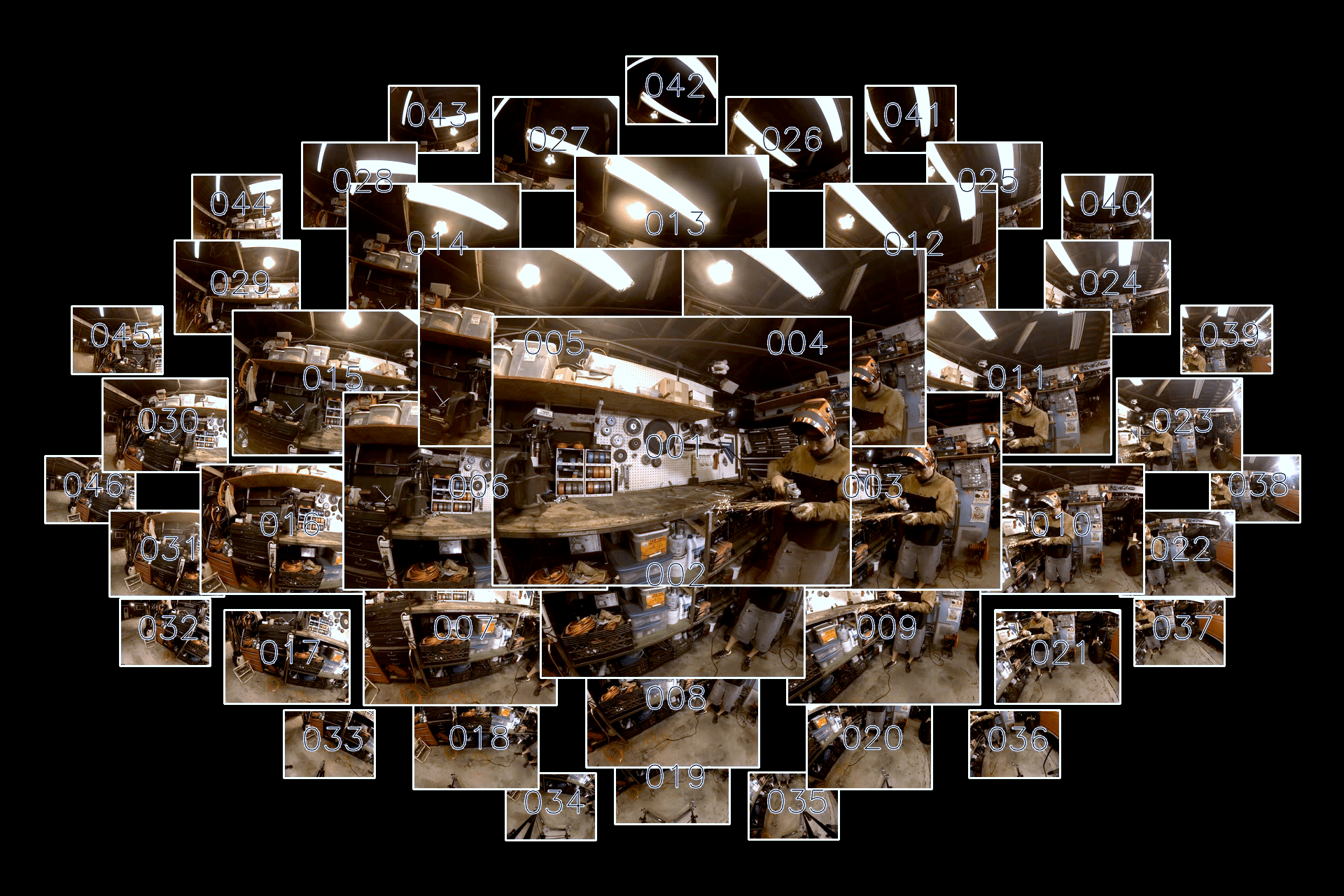

PD: There were several technical challenges to making streamable light field video a reality. One is that we needed to work from far fewer viewpoints distributed around the spherical viewing volume than with light field stills: around 50 images per hemisphere rather than around 500. Dealing with a greater number of cameras would be expensive and impractical; we were happy we found a way to build a 1 m diameter hemispherical rig for about $6K of parts. It has 46 cameras about 15 cm apart from each other.

Interpolating smoothly between these more widely spaced views needed more advanced computer vision techniques, and we turned to the DeepView technique of Flynn et al. that we published at CVPR 2019. It uses machine learning to regress to a multi-plane image representation of the scene (a stack of RGB-Alpha textures rendered back-to-front), which smoothly interpolates the photographed viewpoints. To make this technique work for immersive content, we modified its geometry to work with spherical shells rather than planes.
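To illustrate what "rendered back-to-front" means for a stack of RGB-Alpha layers, here is a minimal sketch of the standard "over" compositing operator applied along a single viewing ray. It is not the DeepView implementation: the function name `composite_back_to_front`, the three sample values, and the black background are assumptions chosen for illustration, and a real renderer would do this per pixel on the GPU.

```python
def composite_back_to_front(layers):
    """Composite RGBA samples along one viewing ray with the "over" operator.

    `layers` is ordered from farthest to nearest. Each entry is
    (r, g, b, alpha) with straight (non-premultiplied) color and all
    values in the range [0, 1].
    """
    out = (0.0, 0.0, 0.0)  # assumption: start from a black background
    for r, g, b, a in layers:
        # Each nearer layer covers what is behind it in proportion to alpha.
        out = tuple(a * c + (1.0 - a) * o for c, o in zip((r, g, b), out))
    return out


# Three hypothetical samples along one ray, farthest first.
ray = [
    (0.0, 0.0, 1.0, 1.0),  # opaque blue on the outermost layer
    (0.0, 1.0, 0.0, 0.5),  # half-transparent green in the middle
    (1.0, 0.0, 0.0, 0.0),  # fully transparent red nearest the viewer
]
pixel = composite_back_to_front(ray)
print(pixel)
```

The fully transparent nearest layer contributes nothing, and the half-transparent middle layer blends evenly with the opaque layer behind it. The same blend applies whether the layers are planes or spherical shells; only the geometry used to find where a ray hits each layer changes.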

Our biggest challenge was to find a way to compress the videos from 46 cameras down into a single six-degrees-of-freedom immersive experience, where you can explore the “in between” views seamlessly as the video plays back. Even though this is a huge amount of data, we were able to reduce it to below one gigabit per second of streamable volumetric video. The novel representation that we use consists of Layered Meshes: typically 16 layers of dynamic low-res polygon meshes, each textured with semi-transparent red-green-blue-alpha video textures. We also found a way to compress our representation into a temporally stable texture atlas that leverages industry-standard video codecs such as VP9 and H.264.
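For a rough sense of scale, the figures above can be turned into a back-of-the-envelope budget. The sketch below uses only the one gigabit per second ceiling and the 46 cameras mentioned in the interview; the 30 frames per second playback rate is an assumption made for illustration and is not stated in the source, and the helper names are hypothetical.

```python
TOTAL_BITS_PER_SECOND = 1_000_000_000  # upper bound quoted in the interview
NUM_CAMERAS = 46                       # cameras on the rig, per the interview
ASSUMED_FPS = 30                       # assumption: not stated in the source


def per_camera_mbps(total_bps, cameras):
    """Stream bitrate divided evenly across source cameras, in Mbit/s."""
    return total_bps / cameras / 1e6


def per_frame_megabytes(total_bps, fps):
    """Upper bound on the compressed size of one frame, in megabytes."""
    return total_bps / fps / 8 / 1e6


camera_share = per_camera_mbps(TOTAL_BITS_PER_SECOND, NUM_CAMERAS)
frame_budget = per_frame_megabytes(TOTAL_BITS_PER_SECOND, ASSUMED_FPS)
print(f"at most {camera_share:.1f} Mbit/s per source camera")
print(f"at most {frame_budget:.2f} MB per frame at {ASSUMED_FPS} fps")
```

Because the quoted rate is a ceiling, both numbers are upper bounds rather than measured values.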

SIGGRAPH: Congrats on winning the SIGGRAPH conference’s second-annual Best in Show – Immersive Experience award! The winner was chosen by members of this year’s Unified and VR Theater juries. What does this honor mean to you as creators and to the Google family as a whole?

PD: We are thrilled and humbled to receive the Immersive Experience Best In Show award, especially because of the strong field of Immersive content at SIGGRAPH this year. We have a shelf in our Playa Vista building picked out for the award statue next to our first light field rigs.

SIGGRAPH: You shared during a media event last week that there will be an exclusive demo of the project released during SIGGRAPH 2020. Can you share more about this demo and how participants can view it?

PD: Yes! We are making a first-of-its-kind light field demo available to download from our DeepView Video research project page at https://augmentedperception.github.io/deepviewvideo/. It will play back the light field video clips you can see in our paper’s video in a desktop-driven VR headset, such as an HTC Vive or Oculus Rift.

SIGGRAPH: What’s next for “DeepView Immersive Light Field Video”?

PD: Like any medium, it will have its unique strengths, and some challenges. We want to discover how to make the most compelling content for this new form of free-viewpoint immersive VR video.

SIGGRAPH: Share your all-time favorite SIGGRAPH memory (or, tell us what you’re most looking forward to as you participate virtually).

PD: We’re a diverse team with a wide range of SIGGRAPH experiences, but we all look to SIGGRAPH as the forum for the most groundbreaking techniques in the art, technology, and industry of computer graphics. For some this will be our first or second SIGGRAPH, and our earliest SIGGRAPH attendee went in 1994! It was extremely exciting (and exhausting) to show the SIGGRAPH community our “Welcome to Light Fields” experience in VR headsets at SIGGRAPH 2018 in Vancouver. Many of the greats of the field came by to have a look, and we got a special invitation to record light fields at the Ivan Sutherland [ACM SIGGRAPH] Pioneers of VR event (you can see these here). Even in its virtual 2020 form, SIGGRAPH remains the place to learn about the greatest advances in the field from the people making them.

SIGGRAPH: What advice do you have for someone looking to submit to a future SIGGRAPH conference?

PD: First, just do it! Put your all into both the work and the submission packet that presents it. If the venue seems intimidating, like the Technical Papers program or the Electronic Theater, reach out to someone experienced with the venue for advice! You will very likely find that the Computer Animation Festival [director], or an experienced papers author, or your favorite immersive artist would be happy to review early versions of your work and give advice for submitting it to a particular venue. SIGGRAPH is an active community of creative individuals that guides and fosters the next generation of pioneering visual creators.

You can still catch sessions and on-demand content from the “DeepView Immersive Light Field Video” team when you register now for SIGGRAPH 2020. Upcoming sessions include: “SIGGRAPH Labs Demo” (SIGGRAPH Labs) on Wednesday, 26 August, at 10:30 am PDT, and “Advances in XR” (Immersive Pavilion) at 9 am PDT and “Q&A: Systems and Software” (Technical Papers) at 1 pm PDT on Friday, 28 August.

Paul Debevec is a senior staff scientist with Google Research.