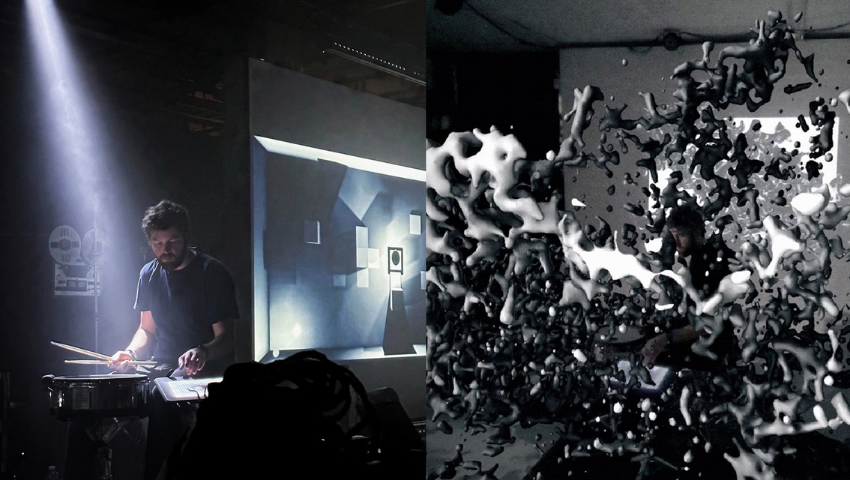

Image credit: Bent Stamnes – MUTEK Montreal 2023 + submitting authors

The “Phases: Augmenting a Live Audio-Visual Performance with AR” project offered Real-Time Live! viewers an experience that merged digital visuals with the real world, balancing technical precision with social connection. By syncing real-time AR with live music, its creators built a performance that was immersive yet shared, enhancing audience engagement without isolating viewers the way VR can. The team presented their Real-Time Live! performance at SIGGRAPH 2024, and we caught up with the contributors to hear about the development of their project.

SIGGRAPH: What challenges did you face in making real-time visuals work seamlessly for the audience?

Christian Sandor (CS) and Dávid Maruscsák (DM): One challenge we faced was that the Canon HMD does not provide a depth map. We needed one to create a real-time mask of Brett (the drummer), so that AR visuals behind him would be properly occluded and depth perception in AR would be correct. We initially tried AI-based depth estimation from the HMD’s video feed, but the results were low quality, and the dynamic environment (e.g., background projections) degraded their accuracy further. In the end, we solved the problem with an Azure Kinect depth sensor.
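To illustrate why a depth map makes occlusion possible, here is a minimal sketch of a per-pixel depth test, not the team's implementation. It assumes the depth sensor's map has already been registered to the HMD camera's viewpoint and that real and virtual depths share the same units. The function names, the `0.0` "no reading" value, and the use of infinity for pixels with no virtual content are illustrative assumptions.

```python
from typing import List

INVALID_DEPTH = 0.0          # assumed value for pixels with no sensor reading
NO_VIRTUAL = float("inf")    # assumed depth where nothing virtual is rendered


def occlusion_mask(real_depth: List[List[float]],
                   virtual_depth: List[List[float]]) -> List[List[bool]]:
    """True where a real surface (e.g. the drummer) is closer than the virtual content."""
    return [
        # Pixels without a valid sensor reading never occlude virtual content.
        [r != INVALID_DEPTH and r < v for r, v in zip(real_row, virt_row)]
        for real_row, virt_row in zip(real_depth, virtual_depth)
    ]


def composite(real_rgb, virtual_rgb, virtual_depth, mask):
    """Show a virtual pixel only where virtual content exists and is not occluded."""
    return [
        [
            virtual_rgb[y][x]
            if virtual_depth[y][x] != NO_VIRTUAL and not mask[y][x]
            else real_rgb[y][x]  # otherwise keep the camera's view of the real world
            for x in range(len(mask[y]))
        ]
        for y in range(len(mask))
    ]
```

In a real pipeline this test would run per frame on the GPU, and edges of the mask would typically need filtering to hide sensor noise; the list-based version only shows the logic.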

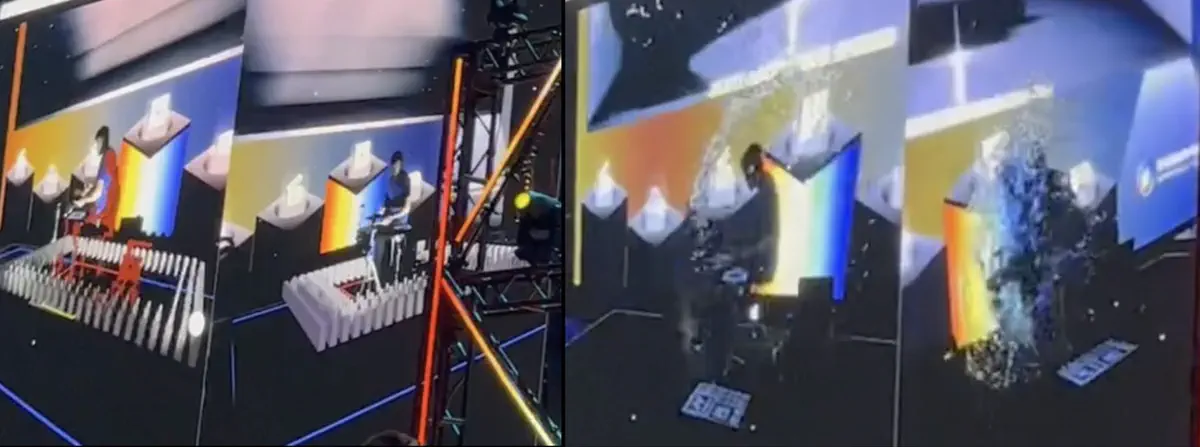

Image credit: Christian Sandor

SIGGRAPH: How do the Canon MREAL X1 AR glasses improve the viewer’s experience, and how do you ensure the AR visuals align with the real world while still keeping the performance social for non-AR viewers?

CS and DM: We chose this headset for several reasons. Most importantly, it is very “hackable”: it integrates easily with any rendering engine and gives access to low-level data (camera feed, gyroscope, tracking data, etc.). With other commercial headsets, you would need to jump through many hoops to achieve such basic tasks.

For the person wearing the headset, it also has several benefits over other commercial products:

- Comfortable to wear thanks to its light weight (~10x lighter than Apple Vision Pro).

- The real world remains visible at the periphery of the user’s visual field (unlike many XR displays, which completely block the view of the real world), helping the viewer stay connected to the real environment.

- Great image quality.

The main downside is the headset’s extremely high price, which makes it infeasible for mass deployment; for our prototyping purposes, however, this did not matter much.

To align the virtual and real worlds, we first initialize the system with a marker placed at a known position on the stage. After that, a sensor-fusion-based tracker keeps the two aligned over time.
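The interview does not describe the tracker in detail, so the following is only a generic illustration of the two stages: a yaw-only sketch of marker-based initialization followed by a complementary filter, a common and simple sensor-fusion technique swapped in here for illustration. The class name, the `alpha` weight, and the source of the absolute yaw correction are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


@dataclass
class YawTracker:
    """Hypothetical yaw-only tracker: marker initialization + complementary filter."""
    alpha: float = 0.98  # trust in the gyro-integrated prediction (0..1)
    yaw: float = 0.0     # headset yaw in stage coordinates, radians

    def init_from_marker(self, marker_yaw_in_stage: float,
                         marker_yaw_in_camera: float) -> None:
        # The marker's yaw on stage is known in advance; observing it from the
        # headset gives marker_in_stage = headset_in_stage + marker_in_camera.
        self.yaw = wrap_angle(marker_yaw_in_stage - marker_yaw_in_camera)

    def update(self, gyro_rate: float, dt: float,
               absolute_yaw: Optional[float] = None) -> float:
        # Predict by integrating the gyroscope (smooth, but drifts over time).
        predicted = self.yaw + gyro_rate * dt
        if absolute_yaw is not None:
            # Pull the prediction toward a drift-free absolute estimate, blending
            # the wrapped error so that angles near +/-pi are handled correctly.
            error = wrap_angle(absolute_yaw - predicted)
            predicted += (1.0 - self.alpha) * error
        self.yaw = wrap_angle(predicted)
        return self.yaw
```

Blending the wrapped error, rather than averaging angles directly, matters near the +/-pi boundary: a naive weighted average of 3.0 and -3.0 rad with `alpha = 0.75` lands at 1.5 rad, far from both readings, while this version stays near pi.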

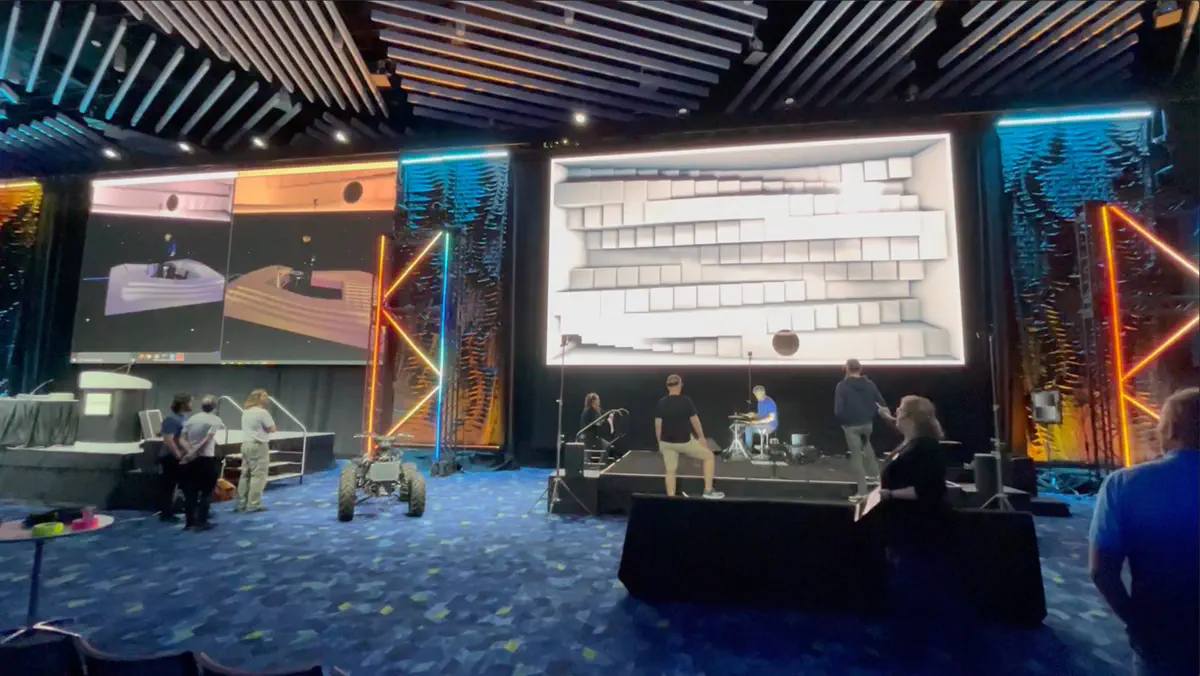

For non-AR viewers, it was crucial to display the AR user’s view on the big screens at the venue (see the left-most screen in the image above).

Image credit: Christian Sandor

SIGGRAPH: How do you think this project could change live performances or collaborations in the future? What role do you see AR playing in creating new experiences for audiences?

CS and DM: At live music events today, audience members often use their phones heavily, whether to stream to social media or simply to record videos and take photos. As a result, the event is perceived through the small window of a mobile phone, essentially disconnecting the audience from the musicians and from each other. What a dystopia! We believe that with the right visuals on AR headsets, we could encourage connections between audience members rather than impede them.

An obvious challenge for such visions is the current state of AR glasses, which are not yet comfortable enough to wear for extended periods or available at a reasonable price. However, technological progress will automatically fix that for us.

Nevertheless, we believe that even with the current state of technology it would be possible to construct compelling and commercially viable experiences. We are looking for collaborators to make this happen, so please don’t hesitate to contact us!

SIGGRAPH: What advice do you have for other Real-Time Live! submitters looking to showcase their project on stage?

CS and DM: We have three pieces of advice: rehearse, rehearse, rehearse!

Did this inspire you to submit your own project? Submit to the SIGGRAPH 2025 Real-Time Live! program by 8 April.

Dr. Christian Sandor is a Professor at Université Paris-Saclay and the leader of the ARAI team at CNRS (Centre National de la Recherche Scientifique). Since 2000, his foremost research interest has been Augmented Reality, which he believes will have a profound impact on the future of mankind.

In 2005, he obtained a doctorate in Computer Science from the Technische Universität München, Germany, under the supervision of Prof. Gudrun Klinker and Prof. Steven Feiner. He decided to explore the research world in the spirit of Alexander von Humboldt and has lived outside of Germany ever since, working with leading research groups at institutions including the University of Tokyo (Japan), Nara Institute of Science and Technology (Japan), Columbia University (New York, USA), Canon’s Leading-Edge Technology Research Headquarters (Tokyo, Japan), Graz University of Technology (Austria), University of Stuttgart (Germany), University of South Australia, City University of Hong Kong, and Tohoku University (Japan).

Together with his students, he won multiple awards at the premier Augmented Reality conference, the IEEE International Symposium on Mixed and Augmented Reality (IEEE ISMAR): best demo (2011, 2016) and best poster honorable mention (2012, 2013). Further awards include best short paper at the ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry (2018), best paper at the ACM Symposium on Spatial User Interaction (2018), and best demo (audience vote) at ACM SIGGRAPH Asia XR (2023).

He has given several keynotes and has acquired more than 3 million USD in funding. In 2012, he received an award from Samsung’s Global Research Outreach Program. In 2014, he received a Google Faculty Award for creating an Augmented Reality X-Ray system for Google Glass. In October 2020, he was appointed Augmented Reality Evangelist at the Guangzhou Greater Bay Area Virtual Reality Research Institute. In 2021, Dr. Sandor was named “Associate Editor of the Year” by IEEE Transactions on Visualization and Computer Graphics.

He serves as an editorial board member for IEEE Transactions on Visualization and Computer Graphics and as a steering committee member for the ACM Symposium on Spatial User Interaction. He has been program chair for numerous conferences, including IEEE ISMAR, ACM SIGGRAPH Asia XR, and the ACM SIGGRAPH Asia Symposium on Mobile Graphics and Interactive Applications.

Dávid Maruscsák is a multidisciplinary artist and PhD student exploring the intersection of audiovisual performance, interactive media, and emerging technology. A graduate of MOME Budapest (BA in Media Design) and an exchange student at the School of Creative Media in Hong Kong, he has contributed to independent and major theatrical productions, large-scale 3D mapping projects—including a mapping on the Hungarian Parliament—and diverse art exhibitions.

Fascinated by the synergy between light, technology, and space, Dávid crafts installations that blend computer 3D animation, AR, and projection techniques to create immersive environments. His work, driven by the interplay between digital and physical realms, has evolved from mapping projects to AR installations.

Expanding his exploration of human-machine interaction, Dávid worked at the AR lab led by Christian Sandor at the School of Creative Media in Hong Kong and pursued MA studies in human-computer interaction at Université Paris-Saclay. He is currently a PhD student at CNRS in the ARAI team, investigating applications of artificial intelligence to AR and HCI under the supervision of Prof. Christian Sandor and Prof. Takeo Igarashi.