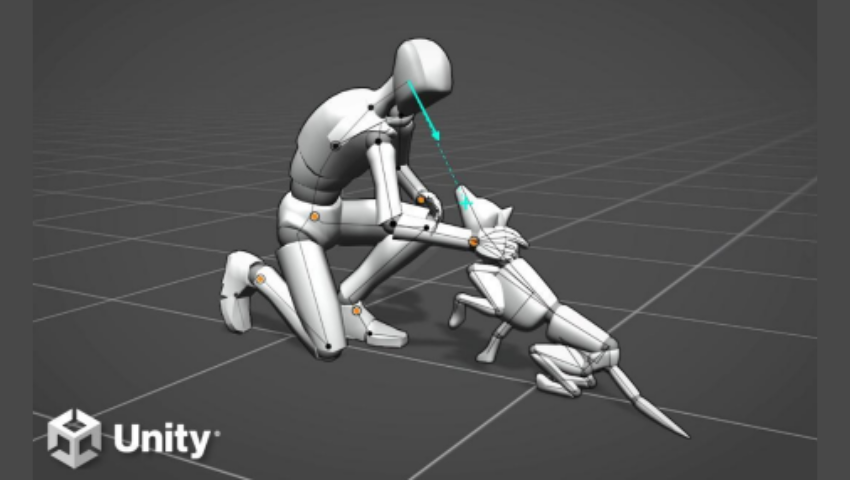

“AI and Physics Assisted Character Pose Authoring” (c) 2022 Unity Technologies

Character animation is no simple task. What if the process could be simplified, and what would that mean for the world of animation? Researcher Florent Bocquelet and his team have created a new method that reduces the steps it takes to compose an animated character and cuts the time required by more than half. The method also won the SIGGRAPH 2022 Real-Time Live! Audience Choice Award. Read on to learn more about this character animation process and how Bocquelet felt about being a SIGGRAPH award winner.

SIGGRAPH: You won the Real-Time Live! Audience Choice Award for your project “AI and Physics Assisted Character Pose Authoring.” Congratulations! Can you briefly explain what this tool is used for?

Florent Bocquelet (FB): Thanks! Our mission is to make character animation easier for beginners. So far, we have focused on tools to quickly pose a character from just a few inputs. This means that you can place just one foot and one hand, and our tool places the rest of the body using what it has learned from seeing hundreds of thousands of poses beforehand. Thanks to machine learning, our tool preserves the naturalness of poses while letting the artist remain in control.

SIGGRAPH: What is your biggest takeaway from winning this award?

FB: We did not expect to get this award. Since it is the audience's choice, we take this as a strong hint that our work is meaningful and relevant to people in the industry. This motivates us to push it further and make it real!

SIGGRAPH: “AI and Physics Assisted Character Pose Authoring” allows users with no artistic experience to author character poses. How does this tool impact users, specifically the SIGGRAPH community? What problems does it solve?

FB: Animating a character is still an expert job today, and it might even require several people to get a final animation: once the character is modeled, you need to skin it, rig it, pose it, tween it, etc. For nonexperts, it is very hard and time-consuming to make a convincing character animation. Our tool tries to solve that by leveraging machine learning to capture the subtle details that make a pose look natural while letting the user be in control. We also believe it could speed up some professional workflows, especially for early prototypes. For the SIGGRAPH community, we released a paper describing our model architecture and training process, which we hope will serve as a foundation for future research.

SIGGRAPH: Can you describe how you trained a machine learning model to predict a full-character pose?

FB: We first collected a large dataset of motion capture data that we retargeted onto our custom skeleton so that all animations shared the same structure. We then developed a specific model architecture that could handle partial inputs. This was very important to us so that users could iteratively add more constraints to specify the pose in any order they like. Our model was then trained to minimize a set of losses that describe the overall goal of posing a character, like a look-at loss. During training, we simulated user inputs by randomly generating a few constraints from the ground truth pose, for instance by picking a few joint positions as input constraints. We also employed a set of regularization tricks to make the training process better and help the model generalize, which are all explained in our paper.
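The constraint-sampling step described above can be illustrated with a minimal sketch. This is not the team's code: the joint names, the `sample_constraints` and `position_loss` helpers, the cap of three constraints, and the squared-distance loss are all assumptions chosen for illustration. The idea is only that a few joint positions are drawn at random from a ground truth pose to stand in for user input, while the loss still compares the predicted pose against the full ground truth.

```python
import random


def sample_constraints(pose, rng, max_constraints=3):
    """Simulate user input by picking a few joints from a ground-truth pose.

    pose: dict mapping a joint name to its (x, y, z) position.
    Returns a dict containing only the randomly chosen joints.
    The cap of 3 constraints is an illustrative assumption.
    """
    joint_names = sorted(pose)
    count = rng.randint(1, min(max_constraints, len(joint_names)))
    chosen = rng.sample(joint_names, count)
    return {name: pose[name] for name in chosen}


def position_loss(predicted, target):
    """Mean squared distance between predicted and ground-truth joints.

    A stand-in for one term of a training objective (hypothetical).
    """
    total = 0.0
    for name, (tx, ty, tz) in target.items():
        px, py, pz = predicted[name]
        total += (px - tx) ** 2 + (py - ty) ** 2 + (pz - tz) ** 2
    return total / len(target)


# Hypothetical ground-truth pose with a handful of joints.
ground_truth = {
    "head": (0.0, 1.7, 0.0),
    "left_hand": (-0.5, 1.0, 0.2),
    "right_hand": (0.5, 1.0, 0.2),
    "left_foot": (-0.2, 0.0, 0.0),
    "right_foot": (0.2, 0.0, 0.0),
}

rng = random.Random(0)
constraints = sample_constraints(ground_truth, rng)

# A model would receive only `constraints` and predict every joint;
# here the ground truth itself stands in for a perfect prediction.
loss = position_loss(ground_truth, ground_truth)
```

In a real training loop, a new set of constraints would be drawn for each sample, so the model sees many different partial inputs for the same pose.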

SIGGRAPH: What other SIGGRAPH 2022 Real-Time Live! projects did you find fascinating?

FB: There were a lot of impressive demos at Real-Time Live! this year. I am fascinated by the pace of progress around NeRFs (neural radiance fields) in general, and it was amazing to see one train live on stage! But it is hard to pick; they were all very interesting.

SIGGRAPH: What was the best part of participating in Real-Time Live! at SIGGRAPH 2022?

FB: Everything was super well organized, and it was truly amazing to see the public react during the demonstration. The public was really into it, and that made it way more interesting than a pre-recorded demo. We also had a few laughs that were not planned. The fact that demos are performed live makes it more real for users.

SIGGRAPH: What advice do you have for those submitting to Real-Time Live! in the future?

FB: Make it simple to follow, and have fun!

Want to know more about the world of animation and watch Real-Time Live! on-demand? You can still register and access these exciting sessions in the virtual conference platform through 31 October!

Florent Bocquelet is a machine learning manager at Unity Labs working on innovative tools to improve 3D character animation workflows. His area of expertise is both machine learning and software development. Before joining Unity Technologies in 2019, Florent was doing research on brain computer interfaces in a neuroscience lab for several years. During that time, he also released several games as an independent game developer.