© 2019 Weta Digital

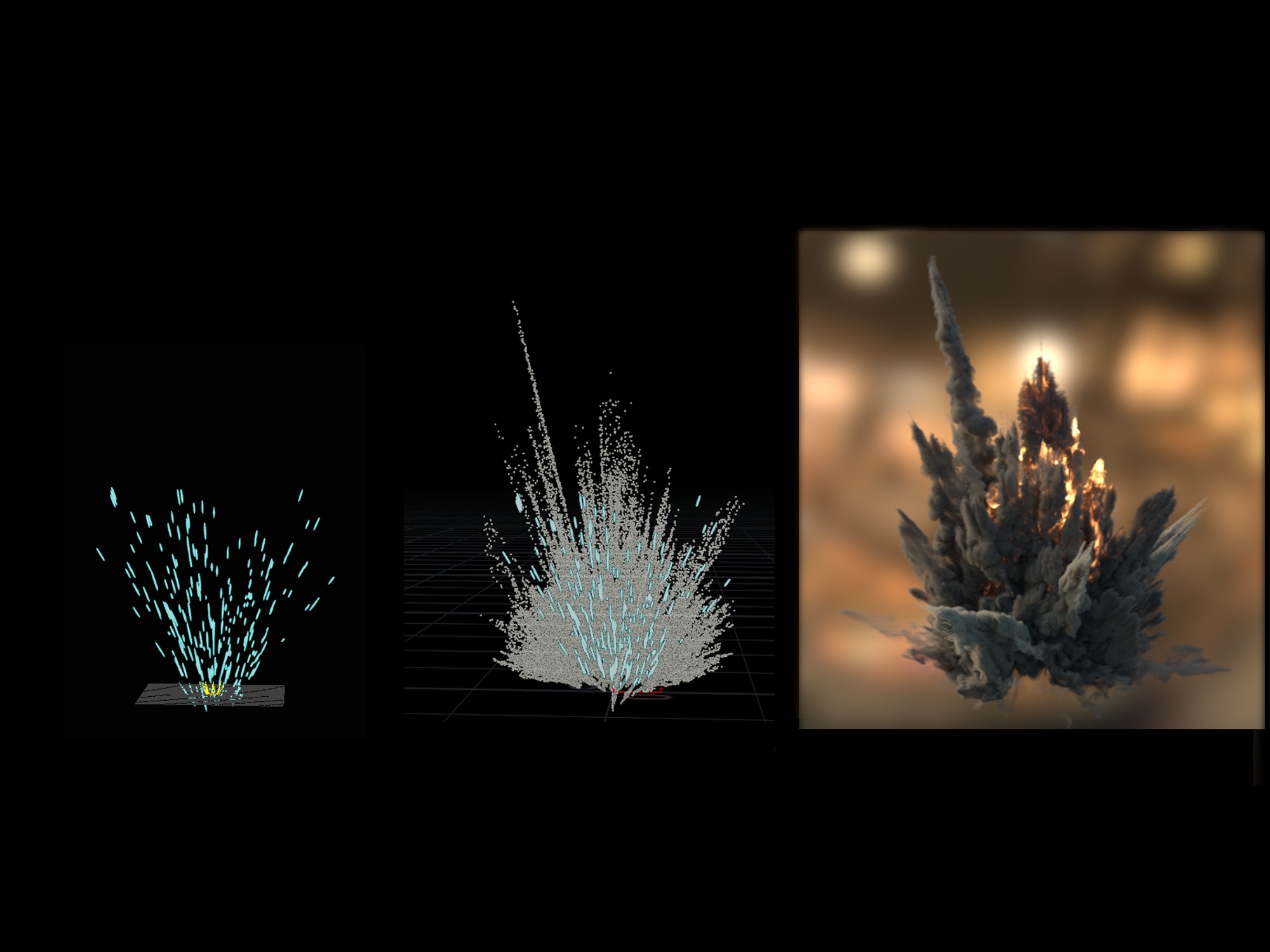

On Wednesday, 13 May 2020, the SIGGRAPH 2020 conference hosted its first-ever, complimentary webinar. The session, “Procedural Approach to Animation-Driven Effects for ‘Avengers: Endgame’”, encompassed a 20-minute Talk followed by Q&A with participants. Though we were unable to record the session, what follows is an extended Q&A with presenters Gerardo Aguilera (FX Supervisor) and Tobias Mack (Software Engineer) of Weta Digital. For those who may have missed the original webinar, the video below offers a look at the work the studio did on the film, “Avengers: Endgame”.

Q: What does an FX TD need more, an artistic eye or a technical mind? How can we improve our artistic eye? —Anonymous

A:

Gerardo – In my years in the industry, I have seen all combinations: FX TDs who are strong in both areas, as well as TDs who are much more technical or much more artistic. The good thing is that you can always learn and improve on either side. If you want to improve your artistic side, you can take up something like photography, which can help you develop an eye for detail. If you are already working in the industry, something I did when I was starting out was to pay really close attention whenever a VFX Supervisor gave a note, regardless of whether it was for FX. Sometimes it was a note for lighting or comp, but I would try to “see” what the VFX Supervisor was catching, and over time you can develop that eye for detail. On the technical side, you can learn scripting in Python, for example.

Q: How integrated are real-time render engines in your pipeline? —Roberto O.

A:

Tobias – Weta Digital developed its own in-house, real-time renderer, and we have been working with it for many years now. This means we have very deep integration at all stages of the pipeline.

Q: Any advice for a teacher, teaching FX? More so along the lines on how I can prepare my students for FX work in the industry? —Bobby G.

A:

Gerardo – Hi, Bobby. First of all, thank you for your question; this is great to hear. My recommendation would be to keep current with the software and techniques the industry is moving toward. This is a rapidly evolving industry, and teaching your students knowledge that they can apply right away will be invaluable. Also, try to teach them real-world scenarios, drawn either from your own experience working on a production or from consulting with colleagues in the industry.

Q: Having the experience from the film, what would you change for the next time you use AnimFX for a project? —Anonymous

A:

Tobias – More than changes to AnimFX, I would say expanding the toolset. I would like to see more “governor” features to quality control (QC) some of animation’s work. Also, on the Houdini side of things, there is still room to automate some of the processes even more.

Q: Can I get some tips about making a good FX showreel? —Bharath H.R.

A:

Gerardo – Keep it short. If you can keep it to around two minutes, that’s typically good. It’s always better to have a small amount of high-quality work in your reel than a large amount of average work. And don’t make the music too specific. As an extreme example, you might like a very heavy, fast-paced song, but the person watching your reel might hate it, which can affect how they view your work (or they will simply mute it).

Q: How do you ensure consistency between your asset preview in Maya and the final assets in Houdini, especially when they are not strictly, physically accurate? Do you end up having to program the same setups twice, or do you use Houdini Engine on the Maya end to reuse the same setup? —Nikita P.

A:

Gerardo and Tobias – As we mentioned in the presentation, all the computation and visualization is done outside of any host, using our in-house evaluation engine, Koru, and the real-time renderer, Gazebo. This way, the data provided to the host is always consistent and no work is duplicated.

Q: In terms of dynamics and FX, what were both of your favorite and most challenging shots to work with? And, how did you approach those challenges? —Anonymous

A:

Gerardo – My favorite shot would probably be the whole blip sequence at the end, when Thanos and his army get blipped away. There is a nice breakdown uploaded to the Weta YouTube [embedded below] that talks about this. One of the more challenging ones would probably be the destruction of the building that Giant-Man comes out of. We have proprietary tools in Houdini that helped us accomplish this shot.

Q: How many FX artists are actually still working on the shots if everything is automated? What is their daily work: creating assets or do they still do actual shot work sometimes? —Jonas S.

A:

Gerardo – Oh, a lot. The only reason we developed and used the AnimFX approach was so that we could actually accomplish delivering the rest of the sequence. There was so much work involved that wasn’t or couldn’t be automated that we had over 40 FX artists at Weta working on the final sequence of the film.

Q: Do you use Houdini to make those gaseous/smoke effects, or does Weta have its own simulation tool? —Anonymous

A:

Tobias – We used our proprietary volumetric solver.

Q: How were these automated sequences reviewed? For example, would an FX artist be assigned to a series of shots to oversee the automated results, then were those submitted to a dailies review? —James F.

A:

Gerardo – Once we established the look of the library elements in a couple of shots, we did have an FX artist checking that the automation process was triggered correctly and that lighting was receiving our publishes correctly. In FX, we had a process to create flipbooks so we could review that we were ingesting the data correctly, and that the propagation for background (BG), midground (MG), and foreground (FG) elements was correct. We got to a point where we didn’t need to do renders; the work could go directly to lighting.

Q: I heard that Netflix is developing an AI-for-VFX platform. What would the future of VFX be? —Sakthiveli I.

A:

Tobias – No one knows for sure, but AI is definitely a useful tool to have, and we are always doing research in many directions.

Q: What do you use for crowd simulation? In the battle with so many characters, did you animate everyone one by one in Maya? Or did you use Houdini? —Hernaldo H.N.

A:

Gerardo – We use Massive at Weta.

View a list of all past SIGGRAPH Now webinars and sign up for upcoming sessions here.