Computer-generated dinosaurs walk the Earth. Source: Universal Pictures Studios

Written by Adam Bargteil, University of Maryland, Baltimore County

Republished with permission of The Conversation

With 25 years of hindsight, “Jurassic Park” marks a pivotal point in the history of visual effects in film. It came 11 years after 1982’s “Star Trek II: The Wrath of Khan” debuted computer-generated imagery for a visual effect with a particle system developed by George Lucas’s Industrial Light and Magic to animate a demonstration of a life-creating technology called Genesis. And “Tron,” also in 1982, included 15 minutes of fully computer-generated imagery, including the notable light cycle race sequence.

The Genesis demonstration from ‘Star Trek II: The Wrath of Khan.’

Yet “Jurassic Park” stands out historically because it was the first time computer-generated graphics, and even characters, shared the screen with human actors, drawing the audience into the illusion that the dinosaurs’ world was real. Even back then, upon seeing the initial digital test shots, George Lucas was stunned: He’s often quoted as saying “it was like one of those moments in history, like the invention of the light bulb or the first telephone call … A major gap had been crossed and things were never going to be the same.”

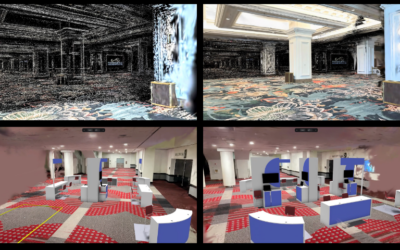

Since then, computer graphics researchers have been working to constantly improve the realism of visual effects and have achieved great success, scholarly, commercial and artistic. Today, nearly every film contains computer-generated imagery: Explosions, tsunamis and even the wholesale destruction of cities are simulated, virtual characters replace human actors and detailed 3D models and green-screen backgrounds have replaced traditional sets.

Years of progress

I have been researching computer animation for nearly two decades and witnessed the transition from practical to virtual effects; it didn’t happen overnight. In 1993, the film industry didn’t really trust computer graphics. For decades, filmmakers had relied on physical models, stop motion and practical special effects, many of them provided by ILM, which was founded to create the effects in the original “Star Wars” trilogy and, notably, provided effects for the “Indiana Jones” movie series. When he made “Jurassic Park,” therefore, director Steven Spielberg approached computer-generated sequences with caution.

By some counts, computer-generated dinosaurs were on screen for only six minutes of the two-hour movie. They were supplemented with physical models and animatronics. This juxtaposition of computer-generated and real-world imagery gave audiences the illusion of realism because the computer-generated images were on screen along with real footage.

Computers bring the extinct back to life.

The 3D animated movies that followed in the late 1990s, like the “Toy Story” series and “Antz,” were stylized, cartoonish films, limited by what even the era’s best computing power, lighting models, and geometric modeling and animation packages could achieve.

The bar for realism is much higher when computer-generated images are mixed with live-action footage: Audiences and critics complained that mapping an actor’s face onto a younger virtual body didn’t work well in 2010’s “Tron: Legacy.” (Even the director admitted the effect wasn’t perfect.) In fact, small inaccuracies can be especially jarring when an image looks quite close to reality but just a little bit off.

Early successes of computer special effects — such as “Starship Troopers,” “Armageddon” and “Pearl Harbor” — focused on adding events like explosions and other large-scale destruction. Those can be less true to real life because most of the audience hasn’t experienced similar events in person. Over the years, though, computer graphics researchers and practitioners tackled cloth, water, crowds, hair and faces.

Learning to use the innovations

There were important practical advances as well. Consider the evolution of performance capture for virtual characters. In the early days, live-action actors would have to imagine their interactions with computer-generated characters. The people playing the computer-generated characters would stand nearby, describing their actions out loud, as the live-action actors pretended to see them happening. Then the actors playing the virtual characters would record their performances in a motion capture lab, supplying data to 3D animators, who would refine each performance and render it to be incorporated into the scene.

The process was painstaking and especially difficult for the live-action actors, who couldn’t interact with the virtual characters during filming. Now, more advanced performance capture systems allow virtual characters to be interactive on the set, even on locations, and provide much richer data to the animators.

Performance capture pioneer Andy Serkis explains how his work has transformed over the years.

With all this technological ability, directors have to make big choices. Michael Bay is famous, among fans and critics, for extensive use of computer-generated special effects. True masters remember Spielberg’s lesson and skillfully combine the virtual and real worlds. In the “Lord of the Rings” movies, for example, it would have been easy to use computer graphics techniques to make the hobbit characters seem smaller than their human counterparts. Instead, director Peter Jackson used carefully chosen camera locations and staging to achieve this effect. Similarly, the barrel escape scene from “The Hobbit: The Desolation of Smaug” combined footage from real river rapids with computer-generated liquids.

More recently, makeup and computer magic were combined to create a merman lead actor in much-lauded “The Shape of Water.” Looking toward the future, as synthetic images and video become ever more realistic and easy to produce, people will need to be on guard that those techniques can be used not just for entertainment but to mislead and misinform the public.

This article was originally published on The Conversation.