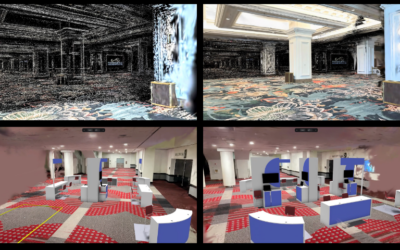

Image Credit: Qixuan Zhang, Longwen Zhang, Jiawei Wang, Lan Xu, Jingyi Yu

As we count down the days to SIGGRAPH 2024, we caught up with Qixuan Zhang to chat about his Real-Time Live! presentation "Rodin: 3D Asset Creation With a Text/Image/3D-Conditioned Large Generative Model for Creative Frontier." Join us for a thrilling discussion about his real-time technology and the problems it solves in this rapidly evolving field.

SIGGRAPH: The world of artificial intelligence is evolving every day. What inspired the research and development of this real-time technology?

Qixuan Zhang (QZ): In the past two years, we have witnessed the rapid development of generative AI across a wide range of applications. Text, images, audio, and video have all been transformed by generative AI. As it has enabled more people to take part in creative work, we have seen both skepticism and enthusiasm. 3D generative AI research is also attracting significant attention. However, previous research has not fit well with the workflows of the 3D industry, which has kept 3D generation from becoming a widespread trend.

At last year's Real-Time Live!, we introduced ChatAvatar, a production-ready 3D character generation product that was very well received. ChatAvatar has already produced hundreds of thousands of high-quality character assets for more than 50,000 artists. Building on this success, we aim to bring the technology to a broader range of fields, which led us to create Rodin Gen-1, a general 3D GenAI model.

SIGGRAPH: Give our audience a sneak peek at how you developed this work. What problems does this technology solve?

QZ: In a nutshell, Rodin Gen-1 is the 3D version of Stable Diffusion + ControlNet + LoRA. At its core is a native 3D large generative model built on a Diffusion Transformer architecture similar to Sora's. Just as Sora generates an entire video at once, surpassing earlier methods that used Stable Diffusion to output consecutive frames, Rodin Gen-1 trains directly on 3D data and generates an entire 3D model at once, with remarkable results.

I believe the biggest question Rodin addresses is whether the creative process should be led by artists or by AI. Through ControlNet and LoRA, Rodin Gen-1 makes the entire generation process more controllable, letting artists steer their creations in a human-in-the-loop workflow. This ensures that artists have meaningful input at every stage.

SIGGRAPH: How does Rodin empower creators to focus on their artistic vision and less on the technical complexities of digital content creation?

QZ: From conversations with dozens of artists who have used Rodin Gen-1, I believe its greatest benefit is helping artists move quickly through the prototyping stage. Rodin speeds up the overall idea iteration process, allowing artists to rapidly produce rough versions of their concepts. This helps them focus on their creativity without first needing to master complex technical skills.

Additionally, we hope that Rodin will lower the barrier to learning digital content creation (DCC) software, allowing more people to participate in the creative process. By removing these technical hurdles, Rodin opens up artistic creation to a broader range of people.

SIGGRAPH: What does the future hold for Rodin? How do you see it evolving?

QZ: Compared to other modalities, 3D generation is still in its early stages. In terms of quality, I believe Rodin Gen-1 is comparable to Midjourney V3 in the image generation field, and it wasn't until V4 that Midjourney truly shone. Another apt comparison is that Rodin's current parameter count is similar to GPT-2's, while the true power of GPT was only felt with GPT-3.5.

We believe that as the technology advances, Rodin will revolutionize the way models are created, saving artists significant time in the modeling process. More importantly, it will democratize creativity by allowing more people to participate in the creative process, bringing greater equality to artistic creation.

SIGGRAPH: What do you hope the SIGGRAPH 2024 audience takes away, personally or professionally, from your live presentation?

QZ: From our live presentation at SIGGRAPH 2024, we hope the audience takes away a deep understanding of how Rodin integrates seamlessly into creative workflows, drastically reducing the time and technical barriers involved in creating high-fidelity 3D assets. By seeing Rodin in action, they will understand how it lets artists focus on their creative vision rather than on the complexities of digital content creation. We aim to offer a glimpse into the future of 3D content creation in the GenAI era and to show how Rodin can democratize the field by making advanced 3D modeling accessible to more people. Our goal is for the audience to leave excited and inspired, both personally and professionally, by the possibilities Rodin opens up for the future of digital art and design.

SIGGRAPH 2024 will be here before you know it. Don’t miss out on this thrilling Real-Time Live! presentation along with a wide variety of computer graphics content presented in Denver and virtually. Register for SIGGRAPH 2024 today.

Qixuan Zhang is a graduate student at the Intelligent Vision and Data Center at ShanghaiTech University. He founded the 3D GenAI startup DeemosTech and served as its CTO. He specializes in computer graphics, computational photography, and generative AI.