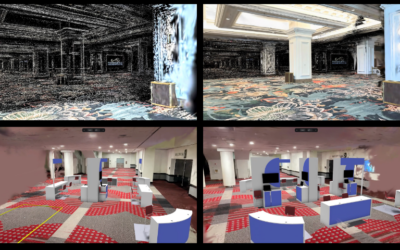

Photo by Andreas Psaltis © 2023 ACM SIGGRAPH

We caught up with the team behind the SIGGRAPH 2023 Real-Time Live! selection “Suit Up: AI MoCap.” Argyris Chatzitofis, Georgios Albanis, Nikolaos Zioulis, and Spiros Thermos make up the team at Moverse. Here, they share what inspired their AI-powered, marker-based motion capture (MoCap) method and what is next for them and their project.

SIGGRAPH: What inspired you to create an AI-powered, marker-based motion capture method?

Argyris Chatzitofis (AC): Our experience with the friction and complexity of contemporary marker-based systems inspired us to develop an AI-powered method that combines the precision of traditional marker-based systems with the efficiency of deep learning.

SIGGRAPH: Tell us how you developed your AI system to keep performing even when an object is in front of the camera.

AC: Our system uses 3D information at every stage, from the single-sensor measurements acquired through infrared and depth sensing to a data-driven model trained on synthetic data, which can be corrupted during training to simulate challenging capture conditions.
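
To make "corrupting synthetic data" concrete, here is a minimal Python sketch of one common way to degrade clean marker positions during training. It is not Moverse's implementation: the function name `corrupt_markers`, its parameters, and the three corruption types (dropped markers for occlusion, Gaussian jitter for sensor noise, and spurious "ghost" markers) are illustrative assumptions.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float, float]


def corrupt_markers(
    markers: List[Point],
    rng: random.Random,
    occlusion_prob: float = 0.1,   # hypothetical: chance each marker is hidden
    noise_std: float = 0.005,      # hypothetical: jitter std. dev. (e.g. meters)
    ghost_count: int = 2,          # hypothetical: number of spurious detections
    bound: float = 1.0,            # hypothetical: half-size of the ghost cube
) -> List[Point]:
    """Illustrative augmentation of clean synthetic marker positions."""
    corrupted: List[Point] = []
    for (x, y, z) in markers:
        # Occlusion: drop this marker with probability occlusion_prob.
        if rng.random() < occlusion_prob:
            continue
        # Measurement noise: add independent Gaussian jitter on each axis.
        corrupted.append((
            x + rng.gauss(0.0, noise_std),
            y + rng.gauss(0.0, noise_std),
            z + rng.gauss(0.0, noise_std),
        ))
    # Ghost markers: spurious detections placed uniformly inside a cube.
    for _ in range(ghost_count):
        corrupted.append((
            rng.uniform(-bound, bound),
            rng.uniform(-bound, bound),
            rng.uniform(-bound, bound),
        ))
    # Shuffle so a model cannot rely on the order of the markers.
    rng.shuffle(corrupted)
    return corrupted


if __name__ == "__main__":
    rng = random.Random(42)
    clean = [(float(i) * 0.01, 1.0, 0.0) for i in range(40)]
    noisy = corrupt_markers(clean, rng)
    print(f"{len(clean)} clean markers -> {len(noisy)} corrupted observations")
```

Applying a fresh random corruption to each training sample lets a model see many plausible failure cases drawn from the same clean synthetic motion.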

SIGGRAPH: This is an alternative to optical marker-based motion capture, built on real-time, low-latency inference of data-driven models. Why did you create an alternative? What problem does it solve, and what are its benefits?

AC: Our goal is to make robust marker-based MoCap more widely available while reducing its complexity and high cost, so that smaller or leaner teams can benefit from a solution that lets them better manage the trade-offs between hardware and complexity.

SIGGRAPH: How would the motion capture operate with more or fewer markers or cameras?

AC: The demonstrated system scales with both sensor count and marker count. At the low end, it can operate with just two sensors and deliver robust MoCap with just 40 markers attached to straps.

SIGGRAPH: What’s next for “Suit Up: AI MoCap”?

AC: Our last demo was just the start of our team’s work on MoCap and 3D animation. This year, we will be removing the need for suits, and even for capture sessions!

SIGGRAPH: Tell us about your experience presenting during Real-Time Live! at SIGGRAPH 2023. What advice do you have for those planning to submit to Real-Time Live! at SIGGRAPH 2024?

AC: We had a great time. Real-Time Live! is a unique show that goes beyond flat scientific stories. Our advice for submitters is to rethink their presentation to make it more engaging, interactive, and fun. Enjoy these special six minutes!

Ready for your six minutes of fame? Submit to Real-Time Live! by 9 April for your chance to take the stage at SIGGRAPH 2024.

Argyris Chatzitofis is co-founder and CEO at Moverse. He received his degree and Ph.D. from the National Technical University of Athens. For more than 10 years, including his Ph.D. research, he has focused on human motion capture using computer vision and machine learning. He has a strong background in, and passion for, AI, motion capture, volumetric video, and 3D vision.

Georgios Albanis is a co-founder of Moverse, which specializes in advanced MoCap technologies and human synthesis, integrating disciplines such as computer vision, multiple-view geometry, machine learning, and computer graphics. Alongside his role at Moverse, he is pursuing a Ph.D. in human motion capture and machine learning at the University of Thessaly. Moverse’s projects and research center on applying cutting-edge technological solutions to practical challenges in 3D character animation, pushing the boundaries of the field.

Nikolaos Zioulis is the CTO of Moverse. He is a scientist and engineer with diverse experience in computer vision, graphics, and machine learning. He got his degree from the Aristotle University of Thessaloniki (AUTH) and his Ph.D. from the Universidad Politécnica de Madrid (UPM). At Moverse, he is rethinking MoCap at the intersection of body simulation technology, volumetric capture, and machine learning.

Spyridon (Spiros) Thermos joined the startup quest as a co-founder of Moverse. He received his diploma in computer engineering (2013), his M.Sc. in computer science (2015), and his Ph.D. (2020) from the ECE department of the University of Thessaly. More recently, he was a postdoctoral researcher at the University of Edinburgh and an adjunct lecturer at the University of Thessaly. At Moverse, he is exploring the boundaries of generative AI in human motion.