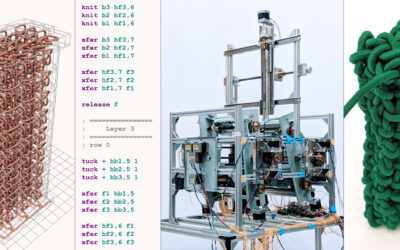

Image Credit: Qixuan Zhang

“DreamFace: Progressive Generation of Animatable 3D Faces Under Text Guidance” is truly a dream come true! “DreamFace” is a tool for generating personalized 3D faces under text guidance, allowing for easy customization of facial assets compatible with computer graphics pipelines. It produces realistic, high-quality 3D facial assets with rich animation capabilities. We sat down with Qixuan Zhang, a member of the team behind the magic, to learn more about how the team turned their dream into the next AR reality.

SIGGRAPH: Share some background about “DreamFace: Progressive Generation of Animatable 3D Faces Under Text Guidance.” What inspired this advancement in generating personalized 3D faces?

Qixuan Zhang (QX): “DreamFace” originates from a collaboration between ShanghaiTech University and Deemos Tech, a startup I co-founded when I was an undergraduate student. We embarked on this project with a clear mission: Make digital human technology affordable and accessible to every creator. The stark reality in the industry was that such technology was expensive and inefficient, primarily available only to big Hollywood studios who could afford it and produce impressive results.

This disparity motivated us to develop a more accessible, affordable, and democratizing technology. Recognizing AI’s potential as a game-changer, we envisioned using generative AI to empower creators at all levels. Our approach aimed to provide swift and effortless access to digital character assets, allowing creators to focus on their artistic expression and storytelling. This would not only free them from technical complexities but also open the graphics community to a wider audience. The “DreamFace” project is built upon this aspiration, reflecting our commitment to accessible digital creation and our passion for leveraging cutting-edge technology to transform the arts and entertainment sector.

SIGGRAPH: Why is it important to have variety in facial animation? How does it advance animation capabilities?

QX: Variety in facial animation is important because it allows for more realistic and relatable characters. Diverse expressions add depth to storytelling. With “DreamFace,” our focus isn’t limited to static, neutral faces. We aim to provide a robust animation framework that offers a wide range of expressions, enhancing the emotional depth and realism of digital characters.

SIGGRAPH: Tell us how you developed this work. What challenges did you face? What surprised you most during this process?

QX: In developing “DreamFace,” we embarked on a journey that intersected with the evolving narrative of generative AI and its potential intellectual property (IP) issues. Prior to starting our research, we had amassed a collection of production-ready facial assets, all legally authorized by models and actors, for AI training. However, these alone could only lead us to parameterized models, falling short of the richness we aspired to achieve. Our breakthrough came with the discovery of the Latent Diffusion Model, which, paired with CLIP technology and the LAION dataset, demonstrated remarkable versatility in text-to-image tasks. Utilizing this model, we built a Mix of Expert generation framework to create 3D avatars, forming the essence of “DreamFace.”

The second major challenge we faced was surprisingly non-technical. While preparing our “DreamFace” Technical Paper, we needed to generate 100 distinct avatars to showcase its capabilities. This meant writing 100 unique prompts to describe each character’s appearance. After struggling to create diverse descriptions past the fifth avatar, we turned to AI for help, specifically GPT-3 from OpenAI. Before the release of ChatGPT, GPT-3’s continuation capabilities became our assistant, guiding us through the creation of these 100 facial descriptions. This experience not only reiterated the power of generative AI but also highlighted the difficulty of prompt writing for average users. We integrated this insight into “DreamFace,” leading to the development of what we initially termed the Prompt User Interface, which later evolved into the Language User Interface.

SIGGRAPH: “DreamFace” can generate realistic 3D facial assets with rich animation ability from video footage, for fashion icons or exotic characters in movies. Impressive! What is next for “DreamFace”? Do you envision any further advancements or developments for the technology?

QX: It’s been a year since we completed the “DreamFace” project, and we’ve continuously improved it since. For instance, the process of generating each avatar, which took tens of minutes in our initial research, is now down to just 30 seconds. This efficiency has opened the door to the commercialization of “DreamFace.” The technology is now available on hyperhuman.top, where anyone can generate avatars from text or images and use them directly in platforms like Unity, Unreal, Maya, and Blender. We were thrilled to showcase this progress at SIGGRAPH 2023’s Real-Time Live! session.

Looking ahead, our goal is to bring even more innovation to the computer graphics community. We’re expanding the “DreamFace” framework to generate a wider range of 3D assets directly from text or images, creating production-ready assets. We’ve made significant strides in this area, and those interested can follow our latest developments at x.com/deemostech.

SIGGRAPH: What advice do you have for someone who wants to submit to Technical Papers for a future SIGGRAPH conference?

QX: My first SIGGRAPH submission in 2022 didn’t make the cut, but after months of refining our work, it was accepted at SIGGRAPH Asia. In just about two years, our team has had seven papers accepted by SIGGRAPH. My key advice is to not get discouraged by initial setbacks. Think from a creator’s perspective. Focus on technologies that the industry needs and that can draw more people into the computer graphics community. Remember, in graphics, there’s always a touch of magic.

In case you missed it: Submissions are open for a variety of programs! Visit the website to stay up to date on upcoming deadlines as we prepare to gather in Denver, 28 July–1 August.

Qixuan Zhang is a graduate student at ShanghaiTech University and the CTO of DeemosTech, a digital human AI company. QX specializes in computer graphics, computational photography, and GenAI. QX’s research has been accepted by top academic conferences like SIGGRAPH, GDC, and CVPR, and their work has been applied to various film and game projects.