Image credit: “Split-Lohmann Multifocal Displays” © 3D scene model courtesy of “Entity Designer” on Blender Market under “Royalty free license” (See: https://blendermarket.com/products/dark-interior-scene)

The Technical Papers program has been at the core of SIGGRAPH since the very first conference 50 years ago. It is the premier international venue for disseminating and discussing innovative scholarly work in animation, simulation, imaging, geometry, modeling, rendering, human-computer interaction, haptics, fabrication, robotics, visualization, audio, optics, programming languages, immersive experiences, and machine learning for visual computing, to name a few.

Following the success of SIGGRAPH 2022, SIGGRAPH 2023 accepted submissions to two integrated paper tracks: Journal (ACM Transactions on Graphics) and Conference. Additionally, SIGGRAPH 2023 continues the new tradition of awarding Best Papers and Honorable Mentions. These papers were selected for their research prominence and for their novel contributions to the future of research in computer graphics and interactive techniques.

SIGGRAPH 2023 Technical Papers Chair Alla Sheffer is thrilled to highlight these award-winning papers and thanks the selection committee, which chose the Best Papers and Honorable Mentions from a pool of hundreds.

Learn more about the Best Papers and Honorable Mentions below, and get ready to explore what’s next in research at SIGGRAPH 2023. Plus, read about the new ACM SIGGRAPH Test-of-Time Award and its recipients. But first, check out the SIGGRAPH 2023 Technical Papers Trailer!

Best Papers

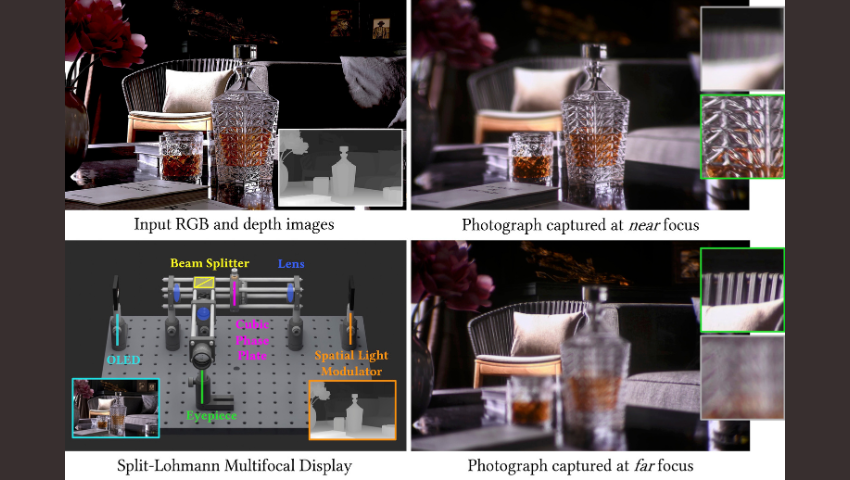

Split-Lohmann Multifocal Displays

This work describes a near-eye 3D display that instantaneously creates a virtual world, fully supporting the human eye’s native ability to focus on content placed at different distances. This capability enables a viewer to experience 3D videos and interactive games at a previously unattainable level of immersion.

Yingsi Qin, Wei-Yu Chen, Matthew O’Toole, Aswin C. Sankaranarayanan, Carnegie Mellon University

Differentiable Stripe Patterns for Inverse Design of Structured Surfaces

We introduce Differentiable Stripe Patterns, a computational approach for the automated design of physical surfaces structured with stripe-shaped, bi-material distributions. We propose a gradient-based optimization tool that automatically computes stripe patterns that best approximate macromechanical performance goals.

Juan Sebastian Montes Maestre, Yinwei Du, Ronan Hinchet, Stelian Coros, Bernhard Thomaszewski, ETH Zürich

Globally Consistent Normal Orientation for Point Clouds by Regularizing the Winding-number Field

We propose a smooth objective function that characterizes the requirements of an acceptable winding-number field, which allows one to find globally consistent normal orientations starting from a set of completely random normals.

Rui Xu, Shandong University; Zhiyang Dou, The University of Hong Kong; Ningna Wang, The University of Texas at Dallas; Shiqing Xin, Shandong University; Shuangmin Chen, Qingdao University of Science and Technology; Mingyan Jiang, Shandong University; Xiaohu Guo, The University of Texas at Dallas; Wenping Wang, Texas A&M University; Changhe Tu, Shandong University

3D Gaussian Splatting for Real-time Radiance Field Rendering

Our method allows real-time rendering (≥ 30 fps) of radiance fields with high visual quality. We represent scenes accurately with 3D Gaussians that allow efficient optimization. Our visibility-aware rendering accelerates training, making it as fast as the fastest previous methods at equivalent quality. An additional hour of training provides state-of-the-art quality.

Bernhard Kerbl, Inria, Université Côte d’Azur; Georgios Kopanas, Inria, Université Côte d’Azur; Thomas Leimkuehler, Max-Planck-Institut für Informatik; George Drettakis, Inria, Université Côte d’Azur

DOC: Differentiable Optimal Control for Retargeting Motions Onto Legged Robots

We present a Differentiable Optimal Control (DOC) framework that facilitates the computation of analytical derivatives of optimal control and state trajectories with respect to user-defined parameters. We demonstrate the utility of DOC by retargeting mocap and animation data onto a family of legged robots of varying proportions and mass distribution.

Ruben Grandia, Disney Research Imagineering; Farbod Farshidian, ETH Zürich; Espen Knoop, Disney Research Imagineering; Christian Schumacher, Disney Research Imagineering; Marco Hutter, ETH Zürich; Moritz Bächer, Disney Research Imagineering

Honorable Mentions

GestureDiffuCLIP: Gesture Diffusion Model With CLIP Latents

We introduce GestureDiffuCLIP, a CLIP-guided, co-speech gesture synthesis system that creates stylized gestures in harmony with speech semantics and rhythm using arbitrary style prompts. Our highly adaptable system supports style prompts in the form of short texts, motion sequences, or video clips and provides body-part-specific style control.

Tenglong Ao, Zeyi Zhang, Libin Liu, Peking University

Word-as-image for Semantic Typography

Word-as-image is a technique where a word illustration presents a visualization of the meaning of the word, while also preserving its readability. We present a method to create word-as-image illustrations automatically. We optimize the outline of each letter to convey the desired concept, guided by a pretrained Stable Diffusion model.

Shir Iluz, Tel Aviv University; Yael Vinker, Tel Aviv University; Amir Hertz, Tel Aviv University; Daniel Berio, Goldsmiths, University of London; Daniel Cohen-Or, Tel Aviv University; Ariel Shamir, Reichman University

Sag-Free Initialization for Strand-Based Hybrid Hair Simulation

This paper proposes a novel four-stage, sag-free initialization framework that solves for stable quasistatic configurations of hybrid, strand-based hair dynamic systems. Our results show that the method successfully prevents sagging on various hairstyles and has minimal impact on hair motion during simulation.

Jerry Hsu, University of Utah, LightSpeed Studios, Tencent America; Tongtong Wang, LightSpeed Studios, Tencent America; Zherong Pan, LightSpeed Studios, Tencent America; Xifeng Gao, LightSpeed Studios, Tencent America; Cem Yuksel, University of Utah, Roblox Research; Kui Wu, LightSpeed Studios, Tencent America

Deployable Strip Structures

C-meshes capture kinetic structures deployable from a collapsed state. They enjoy rich geometry and surprising relations to differential geometry, in particular surfaces with the linear Weingarten property. We provide tools for designing and exploring the shape space of C-meshes, and we present architectural paneling applications.

Daoming Liu, King Abdullah University of Science and Technology (KAUST); Davide Pellis, ISTI-CNR; Yu-Chou Chiang, National Chung Hsing University; Florian Rist, King Abdullah University of Science and Technology (KAUST); Johannes Wallner, TU Graz; Helmut Pottmann, King Abdullah University of Science and Technology (KAUST)

Towards Attention-Aware Rendering

Existing perceptual models used in foveated graphics neglect the effects of visual attention. We introduce the first attention-aware model of contrast sensitivity and motivate the development of future foveation models, demonstrating that tolerance for foveation is significantly higher when the user is concentrating on a task in the fovea.

Brooke Krajancich, Stanford University; Petr Kellnhofer, TU Delft; Gordon Wetzstein, Stanford University

Random-access Neural Compression of Material Textures

This work introduces a neural compression technique for mipmapped material texture sets, offering significantly better compression than BCx at comparable quality and even surpassing entropy-coded AVIF and JPEG XL at low bitrates. Our method uses small, optimized neural networks for efficient compression, real-time decompression, and random access on GPUs.

Karthik Vaidyanathan, Marco Salvi, Bartlomiej Wronski, Tomas Akenine-Möller, Pontus Ebelin, Aaron Lefohn, NVIDIA

Learning Physically Simulated Tennis Skills From Broadcast Videos

We present a system that learns diverse and complex tennis skills for physically simulated characters by leveraging large-scale but lower-quality motions harvested from broadcast tennis videos. The simulated characters hit the ball to target positions with high accuracy and successfully conduct competitive rally play that includes a range of shot types and spins.

Haotian Zhang, Stanford University; Ye Yuan, NVIDIA; Viktor Makoviychuk, NVIDIA; Yunrong Guo, NVIDIA; Sanja Fidler, NVIDIA, University of Toronto; Xue Bin Peng, NVIDIA, Simon Fraser University; Kayvon Fatahalian, Stanford University

Min-Deviation-Flow in Bi-directed Graphs for T-Mesh Quantization

Integer optimization problems for T-mesh quantization are central to state-of-the-art quad-meshing methods. We show how their structure allows them to be modeled as generalized network flow problems in multiple ways. Our novel approximate and exact solvers achieve dramatic speed-ups over general-purpose solvers and have applications beyond T-mesh quantization.

Martin Heistermann, University of Bern; Jethro Warnett, University of Oxford; David Bommes, University of Bern

Test-of-Time Awards

ACM SIGGRAPH is delighted to announce the 2023 Test-of-Time Award papers, which have had a significant and lasting impact on computer graphics and interactive techniques over at least a decade. This is the first year of this annual award. For 2023, the Test-of-Time Award committee considered papers presented at SIGGRAPH conferences from 2011 to 2013 and selected four winning papers.

Functional Maps: A Flexible Representation of Maps Between Shapes (2012)

Establishing correspondences between pairs of shapes is a fundamental step in shape inference and manipulation. This paper introduced functional maps, a new representation of maps between shapes, and has sparked a large volume of follow-on research on shape matching. Read the paper on the ACM Digital Library.

Maks Ovsjanikov, Mirela Ben-Chen, Justin Solomon, Adrian Butscher, Leonidas Guibas

Eulerian Video Magnification for Revealing Subtle Changes in the World (2012)

This paper shows that cameras can capture important motion that is too subtle for the human eye to see, and that this motion can be revealed by magnifying it in video. Follow-on studies have found many applications, including video surveillance, visual vibrometry, and visual microphones. Read the paper on the ACM Digital Library.

Hao-Yu Wu, Michael Rubinstein, Eugene Shih, John Guttag, Frédo Durand, William Freeman

HDR-VDP-2: A Calibrated Visual Metric for Visibility and Quality Predictions in All Luminance Conditions (2011)

The metric presented in this paper includes a calibrated model of human vision across different luminance conditions and has become the de facto standard metric for predicting the visibility and quality of images across a wide range of intensities. Read the paper on the ACM Digital Library.

Rafal Mantiuk, Kil Joong Kim, Allan G. Rempel, Wolfgang Heidrich

Optimizing Locomotion Controllers Using Biologically-based Actuators and Objectives (2012)

This paper introduced an innovative way to simulate human locomotion at the musculoskeletal level and inspired new research directions in how we view human movements and the extent to which they can be simulated. Read the paper on the ACM Digital Library.

Jack M. Wang, Samuel R. Hamner, Scott L. Delp, Vladlen Koltun

Thank you to Jehee Lee for gathering information about the Test-of-Time Awards.

Register for SIGGRAPH 2023, taking place 6–10 August in Los Angeles, to access the best of the best scholarly research in computer graphics and interactive techniques. Don’t miss a moment of Technical Papers excellence. Visit the full program to begin adding Technical Papers sessions to your schedule, and be sure to attend the Papers Fast Forward on Sunday, 6 August at 6 pm PDT.