“Image Features Influence Reaction Time: A Learned Probabilistic Perceptual Model for Saccade Latency” © Budmonde Duinkharjav, New York University; Praneeth Chakravarthula, Princeton University, University of North Carolina at Chapel Hill; Rachel Brown, Anjul Patney, NVIDIA Research; Qi Sun, New York University

Technical Papers is a pillar of the annual SIGGRAPH conference, where scientists and researchers come to disseminate new scholarly work and propel the industry forward. This year, the program offered two ways to submit research: Journal Papers, which continue the program from previous years, and Conference Papers, which share ideas in a shorter format but can still make a significant impact.

The new format is not the only addition to SIGGRAPH 2022: this year also introduces the Technical Papers awards for Best Papers and Honorable Mentions. These papers were selected for the prominence of their research and their innovative contributions to the future of computer graphics and interactive techniques. The Best Papers Award winners and the Honorable Mentions will be honored during the SIGGRAPH 2022 closing session in Vancouver on Thursday, 11 August.

SIGGRAPH 2022 Technical Papers Chair Niloy Mitra is thrilled to showcase these award-winning papers and thanks the selection committee, made up of SIGGRAPH 2023 Technical Papers Chair Alla Sheffer and several senior members of the International Papers Committee, who chose the Best Papers and Honorable Mentions from a pool of hundreds.

Celebrate the winners and honorable mentions below to get a taste of what to expect during SIGGRAPH 2022.

Best Papers

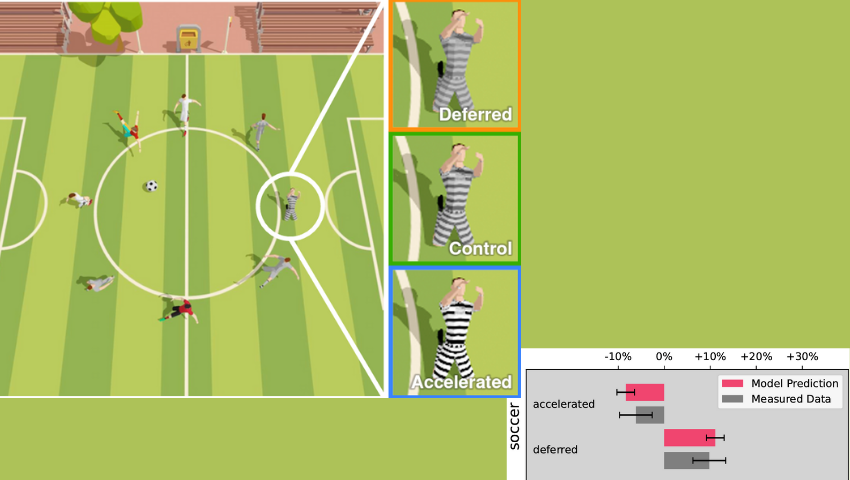

Image Features Influence Reaction Time: A Learned Probabilistic Perceptual Model for Saccade Latency

We present a neurologically inspired perceptual model to predict eye reaction latency as a function of observed image characteristics on screen. Our model may serve as a metric for predicting and altering reaction latencies in esports and AR/VR applications.

Budmonde Duinkharjav, New York University; Praneeth Chakravarthula, Princeton University, University of North Carolina at Chapel Hill; Rachel Brown, Anjul Patney, NVIDIA Research; Qi Sun, New York University

CLIPasso: Semantically Aware Object Sketching

We present CLIPasso, a method for sketching objects at different levels of abstraction. We define a sketch as a set of strokes and use a differentiable rasterizer to optimize the strokes’ parameters with respect to a CLIP-based perceptual loss. The abstraction degree is controlled by varying the number of strokes.

Yael Vinker, Tel Aviv University, École Polytechnique Fédérale de Lausanne; Ehsan Pajouheshgar, École Polytechnique Fédérale de Lausanne; Jessica Y. Bo, École Polytechnique Fédérale de Lausanne, ETH; Roman Christian Bachmann, École Polytechnique Fédérale de Lausanne; Amit Bermano, Tel Aviv University; Daniel Cohen-Or, Tel Aviv University; Amir Zamir, École Polytechnique Fédérale de Lausanne; Ariel Shamir, Reichman University

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

Neural networks have emerged as high-quality representations of graphics primitives, such as signed distance functions, light fields, textures, and the like. Our approach can train such primitives in seconds and render them in milliseconds, allowing their use in the inner loops of graphics algorithms where they previously may have been discounted.

Thomas Müller, Alex Evans, Christoph Schied, Alexander Keller, NVIDIA

Spelunking the Deep: Guaranteed Queries on General Neural Implicit Surfaces

This work develops geometric queries for neural implicit surfaces, such as ray casting, closest-point queries, and intersection testing, along with operations like building spatial hierarchies. The key tool is range analysis, which automatically computes local bounds on the neural network output. The resulting queries have guaranteed accuracy, even on randomly initialized networks.
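To give a feel for what "local bounds on the network output" means, here is a minimal sketch of plain interval arithmetic, the simplest form of range analysis, pushed through a small fully connected ReLU network. It is not the paper's implementation (the authors may use a tighter bounding scheme), and every function name here is hypothetical. The bounds are sound: if the interval for a box excludes zero, the implicit surface f(x) = 0 cannot pass through that box, which is the kind of guarantee that lets queries skip empty space safely, even for a randomly initialized network.

```python
import random


def interval_affine(lo, hi, W, b):
    """Bound y = W x + b over the box lo <= x <= hi (exact for each output)."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l = h = bias
        for w, xl, xh in zip(row, lo, hi):
            # A positive weight attains its minimum at xl; a negative one at xh.
            if w >= 0:
                l += w * xl
                h += w * xh
            else:
                l += w * xh
                h += w * xl
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi


def interval_relu(lo, hi):
    """ReLU is monotone, so applying it to both endpoints stays sound."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def mlp_bounds(layers, lo, hi):
    """Return (min, max) bounds on the scalar network output over a box."""
    for i, (W, b) in enumerate(layers):
        lo, hi = interval_affine(lo, hi, W, b)
        if i < len(layers) - 1:  # no activation after the last layer
            lo, hi = interval_relu(lo, hi)
    return lo[0], hi[0]


def mlp_eval(layers, x):
    """Ordinary pointwise evaluation of the same network."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x[0]


def random_mlp(sizes, seed=0):
    """A randomly initialized network, given as a list of (W, b) layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        b = [rng.uniform(-1, 1) for _ in range(n_out)]
        layers.append((W, b))
    return layers


def box_may_contain_surface(layers, lo, hi):
    """False means the zero level set is guaranteed to miss the box."""
    f_lo, f_hi = mlp_bounds(layers, lo, hi)
    return f_lo <= 0.0 <= f_hi
```

A ray caster or spatial-hierarchy builder could call `box_may_contain_surface` on progressively smaller boxes and discard any box that returns `False`. Interval bounds loosen as boxes grow, so subdivision trades extra work for tighter, still-guaranteed results.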

Nicholas Sharp, University of Toronto; Alec Jacobson, University of Toronto, Adobe Research

DeepPhase: Periodic Autoencoders for Learning Motion Phase Manifolds

We present the Periodic Autoencoder, which can learn the spatial-temporal structure of body movements from unstructured motion data. The network produces a multi-dimensional phase manifold that improves neural character controllers and motion matching for a variety of tasks, including diverse locomotion, style-based movements, dancing to music, and football dribbling.

Sebastian Starke, University of Edinburgh, Electronic Arts; Ian Mason, University of Edinburgh; Taku Komura, University of Hong Kong

Honorable Mentions

Joint Neural Phase Retrieval and Compression for Energy- and Computation-efficient Holography on the Edge

We propose a framework that jointly generates and compresses phase-only holograms and achieves high remote transmission efficiency and image reconstruction quality on edge devices. By distributing computation asymmetrically between the information-encoding process and the phase-decoding procedure, we demonstrate low computation and energy costs on edge holographic display devices.

Yujie Wang, Shandong University, Peking University; Praneeth Chakravarthula, Princeton University, University of North Carolina at Chapel Hill; Qi Sun, New York University; Baoquan Chen, Peking University

Computational Design of High-level Interlocking Puzzles

We present a computational approach for designing high-level interlocking puzzles according to a user-specified puzzle shape, number of puzzle pieces, and level of difficulty. Our key idea is to leverage a new disassembly graph to represent all possible configurations of an interlocking puzzle and to guide the puzzle design process.

Rulin Chen, Singapore University of Technology and Design; Ziqi Wang, ETH Zürich; Peng Song, Singapore University of Technology and Design; Bernd Bickel, IST Austria

Sparse Ellipsometry: Portable Acquisition of Polarimetric SVBRDF and Shape With Unstructured Flash Photography

We present sparse ellipsometry, a portable polarimetric acquisition method that captures both polarimetric SVBRDF and 3D shape simultaneously. We also develop a complete polarimetric SVBRDF model that includes diffuse and specular components, as well as single scattering, and devise a novel polarimetric inverse rendering algorithm.

Inseung Hwang, Daniel S. Jeon, Korea Advanced Institute of Science and Technology (KAIST); Adolfo Muñoz, Diego Gutierrez, Universidad de Zaragoza – I3A; Xin Tong, Microsoft Research Asia; Min H. Kim, Korea Advanced Institute of Science and Technology (KAIST)

Umbrella Meshes: Volumetric Elastic Mechanisms for Freeform Shape Deployment

Umbrella meshes are bending-active structures that can be optimized to deploy from a compact fabrication state into a freeform design surface. We propose a complete inverse-design pipeline incorporating physics-based simulation to accurately model the elastic deformation of the structure when solving for the geometric parameters of the constituent unit-cell mechanisms.

Yingying Ren, Uday Kusupati, EPFL; Julian Panetta, UC Davis; Florin Isvoranu, Davide Pellis, EPFL; Tian Chen, University of Houston; Mark Pauly, EPFL

Sketch2Pose: Estimating a 3D Character Pose From a Bitmap Sketch

Artists often capture character poses in raster sketches, then use these drawings as a reference while painstakingly posing a 3D character in 3D animation software. We propose the first system for algorithmically inferring a 3D character pose from a single bitmap sketch, producing poses consistent with viewer expectations.

Kirill Brodt, Mikhail Bessmeltsev, University of Montreal

Facial Hair Tracking for High Fidelity Performance Capture

Facial hair is a largely overlooked problem in facial performance capture, which typically requires actors to shave clean before a capture session. We propose the first method that can reconstruct and track 3D facial hair fibers and approximate the underlying skin during dynamic facial performances.

Sebastian Winberg, ETH Zürich, DisneyResearch|Studios; Gaspard Zoss, Prashanth Chandran, Paulo Gotardo, Derek Bradley, DisneyResearch|Studios

Towards Practical Physical-optics Rendering

We present a practical framework for physical light transport, capable of reproducing global diffraction and wave-interference effects in rendering. Unlike existing methods, ours can render realistic, elaborate scenes with complex coherence-aware materials while accounting for light's wave properties throughout, at a performance approaching that of radiometric renderers.

Shlomi Steinberg, Pradeep Sen, Ling-Qi Yan, University of California Santa Barbara

Free2CAD: Parsing Freehand Drawings Into CAD Commands

We present Free2CAD, wherein the user can sketch a shape and our system parses the input strokes into CAD commands that reproduce the sketched object. Technically, we cast sketch-based CAD modeling as a sequence-to-sequence translation problem where input pen strokes are grouped to correspond to individual CAD operations.

Changjian Li, INRIA, University Côte d’Azur, University College London (UCL); Hao Pan, Microsoft Research Asia; Adrien Bousseau, INRIA, University Côte d’Azur; Niloy Mitra, University College London (UCL), Adobe Research

Grid-free Monte Carlo for PDEs With Spatially Varying Coefficients

We describe a method to solve partial differential equations (PDEs) with spatially varying coefficients on complex geometric domains without any approximation of the geometry or coefficient functions. Our main contribution is to extend the walk on spheres (WoS) algorithm by drawing inspiration from Monte Carlo techniques for volumetric rendering.
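For readers unfamiliar with walk on spheres, the following minimal sketch shows only the classic constant-coefficient algorithm that the paper extends, not the paper's spatially varying method. It estimates the solution of the Laplace equation with Dirichlet boundary values on the unit disk, a domain chosen here purely for illustration because its distance to the boundary is simply 1 - |x|. Each walk repeatedly jumps to a uniformly random point on the largest circle that fits inside the domain, stops once it is within `eps` of the boundary, and returns the boundary value there; averaging many walks gives a Monte Carlo estimate of u(x) with no mesh or grid. The function names and parameters are hypothetical.

```python
import math
import random


def wos_laplace_disk(x, y, g, eps=1e-4, rng=random):
    """One walk-on-spheres sample of u(x, y) for Laplace's equation on the
    unit disk, with Dirichlet boundary data g(bx, by) on the unit circle."""
    while True:
        # Distance from (x, y) to the boundary of the unit disk.
        r = 1.0 - math.hypot(x, y)
        if r < eps:
            # Close enough: project onto the circle and read the boundary value.
            n = math.hypot(x, y)
            return g(x / n, y / n)
        # Jump to a uniformly random point on the largest empty circle.
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x += r * math.cos(theta)
        y += r * math.sin(theta)


def wos_estimate(x, y, g, n_walks=10000, seed=0):
    """Average many independent walks to estimate u(x, y)."""
    rng = random.Random(seed)
    total = sum(wos_laplace_disk(x, y, g, rng=rng) for _ in range(n_walks))
    return total / n_walks


if __name__ == "__main__":
    # g = x^2 - y^2 is harmonic, so the exact interior solution is the same
    # function; at (0.3, 0.2) it equals 0.05.
    g = lambda bx, by: bx * bx - by * by
    print(wos_estimate(0.3, 0.2, g))
```

The estimate is noisy but unbiased up to the small `eps` stopping error, and its error shrinks roughly as one over the square root of the number of walks. Handling spatially varying coefficients is where the paper borrows from volumetric rendering; this sketch does not attempt that part.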

Rohan Sawhney, Carnegie Mellon University; Dario Seyb, Wojciech Jarosz, Dartmouth College; Keenan Crane, Carnegie Mellon University

Implicit Neural Representation for Physics-driven Actuated Soft Bodies

We present an implicit formulation to control active soft bodies by defining a function that enables a continuous mapping from a spatial point in the material space to the control parameters. This allows us to capture the dominant frequencies of the signal, making the method discretization-agnostic and widely applicable.

Lingchen Yang, Byungsoo Kim, ETH Zurich; Gaspard Zoss, DisneyResearch|Studios; Baran Gözcü, Markus Gross, Barbara Solenthaler, ETH Zurich

Ready to experience the latest CG and interactive techniques research in person and online? Register for SIGGRAPH 2022 and gain access to 133 Journal Papers, 61 Conference Papers, and 53 ACM Transactions on Graphics papers to be presented across 31 sessions. Access the virtual pre-recorded presentations and participate in the virtual Technical Papers Fast Forward. Join us in Vancouver to participate in roundtable discussions with paper authors. View all Technical Papers content on the SIGGRAPH 2022 website to start planning your conference experience.