Image via Jon Peddie Research

It has taken 20 years to realize

The GPU, defined as a graphics device with a 3D transformation capability, became a commercial reality in the late 1990s. Leveraging Moore’s law, Nvidia was the first to offer a fully integrated T&L engine in a graphics controller in 1999, and it introduced the industry to the term “GPU”. Since then, GPUs have continued to exploit Moore’s law and, in 2021, boast more than 10,000 32-bit floating-point processors.

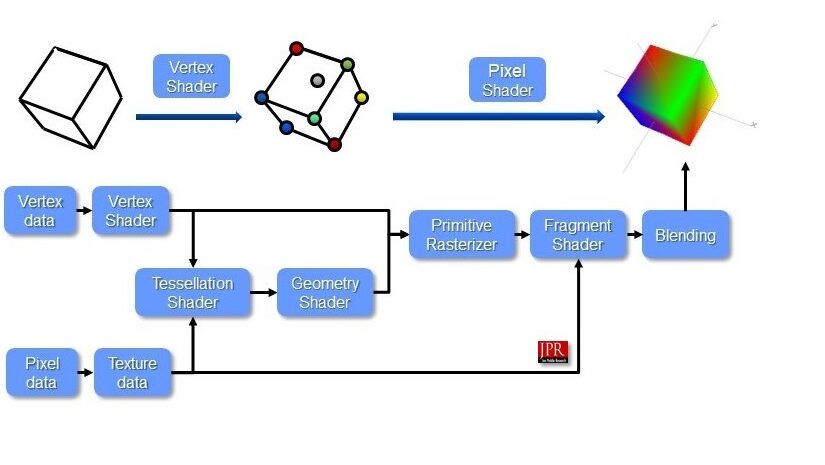

But, those processors were assigned to work in sequence, in what’s referred to as a pipeline, completing one operation and then passing the results down the line for the next operation.

By 2005, the GPU and the application program interfaces (APIs) had evolved to include a dizzying list of functions:

- Vertex shaders

- Geometry shaders

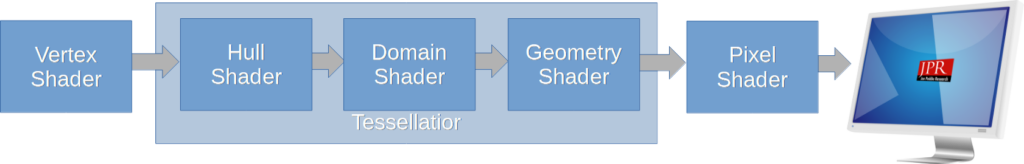

- Tessellation shaders

- Hull Shader Stage (only used for tessellation)

- Domain Shader Stage (only used for tessellation)

- Geometry Shader Stage

- Pixel Shader Stage

In addition to the major shading stages of the geometry pipeline, there are the setup processes.

One of the first things done when an object is drawn in 3D space is to run a shader that processes the vertices and places them in a common view space. Culling then determines whether each primitive is inside or outside of the view.
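The transform-then-cull step can be sketched in a few lines. This is a minimal illustration, not how any particular GPU or API implements it: the function names are hypothetical, the matrix is a plain row-major 4x4 list, and the clip-space test assumes the OpenGL-style convention that a point is inside the view when each of x, y, and z lies between -w and w.

```python
# Hypothetical sketch: transform vertices, then conservatively cull triangles.
IDENTITY = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

def to_clip_space(mvp, position):
    """Multiply a row-major 4x4 matrix by the homogeneous point (x, y, z, 1)."""
    x, y, z = position
    v = (x, y, z, 1.0)
    return tuple(sum(mvp[r][c] * v[c] for c in range(4)) for r in range(4))

def is_culled(clip_vertices):
    """True when every vertex lies outside the same clip plane.

    Assumes the convention -w <= x, y, z <= w for points inside the view.
    A primitive that straddles a plane is kept and left for later clipping.
    """
    for axis in range(3):
        if all(v[axis] > v[3] for v in clip_vertices):
            return True
        if all(v[axis] < -v[3] for v in clip_vertices):
            return True
    return False

def visible_triangles(mvp, triangles):
    """Return the triangles that survive the conservative view test."""
    kept = []
    for tri in triangles:
        clip = [to_clip_space(mvp, p) for p in tri]
        if not is_culled(clip):
            kept.append(tri)
    return kept

inside = ((0, 0, 0), (0.5, 0, 0), (0, 0.5, 0))
outside = ((2, 0, 0), (3, 0, 0), (2, 1, 0))
print(visible_triangles(IDENTITY, [inside, outside]))  # only `inside` survives
```

The test is deliberately conservative: it only rejects a triangle when all three vertices fall beyond one plane, so it never discards anything that might be visible.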

It became painfully obvious by 2005 that the GPU was being overburdened with too many specialized fixed-function shading operations and wasting resources in the process. The first graphics add-in board (AIB) with a programmable pixel shader was the Nvidia GeForce 3 (NV20), released in 2001. Geometry shaders were introduced with Direct3D 10 and OpenGL 3.2. Eventually, graphics hardware evolved toward a unified shader model.

Unified shader 2006–2010—DirectX 9.0 and OpenGL 3.3

A unified shader architecture allows every shader processor in a GPU to handle any type of shading task. This makes the GPU more efficient because more of its processing power is put to use. It also makes the design more scalable; a unified architecture saves power better than a non-unified architecture.
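A toy model makes the efficiency argument concrete. The sketch below is an assumption-laden illustration, not a description of real hardware scheduling: it treats a frame as a pile of vertex work and pixel work measured in abstract units, lets dedicated units process only their own kind of work, and lets unified units take anything. The function names and the workload numbers are made up for the example.

```python
import math

def dedicated_time(vertex_work, pixel_work, vertex_units, pixel_units):
    """Cycles needed when each unit type can only run its own kind of work."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))

def unified_time(vertex_work, pixel_work, total_units):
    """Cycles needed when any unit can run any kind of work."""
    return math.ceil((vertex_work + pixel_work) / total_units)

def utilization(total_work, total_units, cycles):
    """Fraction of the available unit-cycles that did useful work."""
    return total_work / (total_units * cycles)

# A pixel-heavy frame on 32 units, split 8 vertex / 24 pixel in the dedicated case.
vertex_work, pixel_work = 100, 900
split = dedicated_time(vertex_work, pixel_work, 8, 24)   # 38 cycles
pooled = unified_time(vertex_work, pixel_work, 32)       # 32 cycles
print(split, round(utilization(1000, 32, split), 2))     # 38 0.82
print(pooled, round(utilization(1000, 32, pooled), 2))   # 32 0.98
```

In the dedicated case the vertex units finish early and sit idle while the pixel units are still busy; pooling the same 32 units removes that idle time.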

In 2005, ATI (now part of AMD) introduced a unified architecture on the hardware it developed for the Xbox 360, which supported DirectX 9.0c. Nvidia quickly followed with its Tesla design for the GeForce 8 AIB series in November 2006. AMD introduced a unified shader in its TeraScale R600 GPU in 2007, used on the Radeon HD 2900 AIB.

The new unified shader functionality was based on a very long instruction word (VLIW) architecture in which the GPU executes operations in parallel.

The R600 core, like the Nvidia Tesla core, processed vertex, geometry, and pixel shaders as outlined by the Direct3D 10.0 specification for Shader Model 4.0, in addition to full OpenGL 3.0 support.

In DirectX, the first unified shader model was Shader Model 4.0, introduced in 2006 as part of Direct3D 10 in Windows Vista. In OpenGL, the unified shader model arrived with version 3.3 (2010). The instructions for all three types of shaders are now merged into one instruction set.

The die was cast, so to speak, and the industry moved closer to an all-compute programming model for the GPU.

Task to Amplifier to mesh to meshlets

In 2017, to accommodate developers’ increasing appetite for migrating geometry work to compute shaders, AMD introduced a more programmable geometry pipeline stage in their Vega GPU that ran a new type of shader called a primitive shader. According to AMD corporate fellow Mike Mantor, primitive shaders have “the same access that a compute shader has to coordinate how you bring work into the shader.” Mantor said that primitive shaders would give developers access to all the data they need to effectively process geometry, as well.

Primitive shaders led to task shaders, and that led to mesh shaders.

Mesh shaders 2018–2020—DirectX 12 Ultimate, Vulkan extension

Mesh shaders are really the future of the geometry pipeline because they reduce its reliance on the linear pipeline concept.

In 2019, Nvidia presented a paper on mesh and task shaders at SIGGRAPH. Khronos then announced it would use Nvidia's mesh shader code as an extension to Vulkan and OpenGL. Next, in November 2019, Microsoft said DirectX 12 (D3D12) would support mesh and amplification shaders. Finally, in March 2020, DirectX 12 Ultimate included mesh shaders, and in January 2021 Microsoft released code samples. The world shifted.

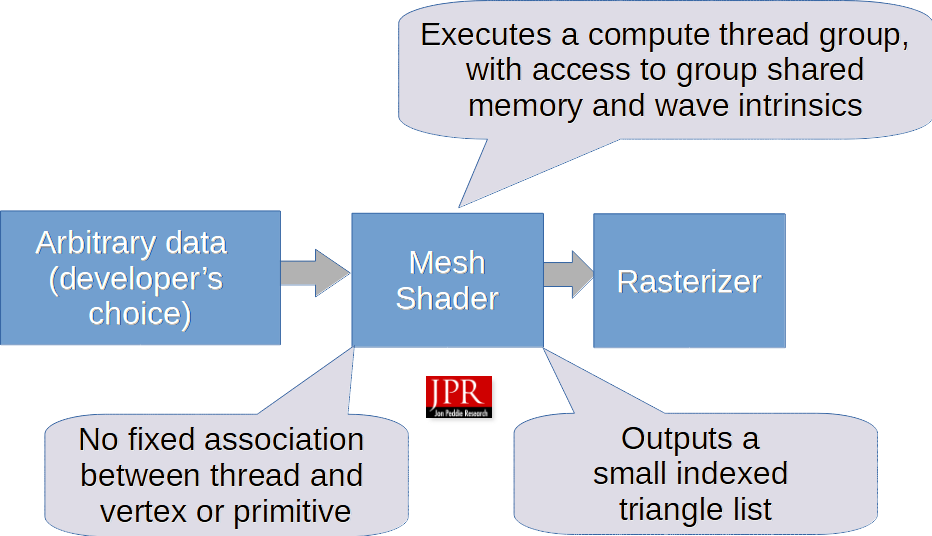

Mesh shaders will expand the capabilities and performance of the geometry pipeline. They incorporate the features of vertex and geometry shaders into a single shader stage by batch processing primitive and vertex data before the rasterizer. The shaders are also capable of amplifying and culling geometry.
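The culling half of that claim can be sketched as follows. This is an illustrative stand-in for the stage that runs ahead of the mesh shader (the task or amplification stage), written as ordinary Python rather than shader code. It assumes each batch of geometry carries a bounding sphere, and that the view volume is given as planes `(nx, ny, nz, d)` whose normals point inward; all names here are hypothetical.

```python
def sphere_visible(center, radius, planes):
    """True unless the sphere lies entirely behind one of the planes.

    Each plane is (nx, ny, nz, d) with a unit normal pointing into the
    view volume, so a signed distance below -radius means fully outside.
    """
    for nx, ny, nz, d in planes:
        distance = nx * center[0] + ny * center[1] + nz * center[2] + d
        if distance < -radius:
            return False
    return True

def select_batches(batch_bounds, planes):
    """Return the indices of the batches that should launch mesh work."""
    return [index
            for index, (center, radius) in enumerate(batch_bounds)
            if sphere_visible(center, radius, planes)]

# Two planes bounding the slab -1 <= x <= 1.
planes = [(1, 0, 0, 1), (-1, 0, 0, 1)]
bounds = [((0, 0, 0), 0.5), ((3, 0, 0), 0.5), ((1.2, 0, 0), 0.5)]
print(select_batches(bounds, planes))  # [0, 2]
```

Only the surviving indices would go on to spawn mesh shader work, so geometry that cannot be seen never reaches the rasterizer. Amplification is the mirror image: the same stage could return more entries than it was given.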

Graphics processors were originally imagined as a linear pipeline, but have evolved over time to incorporate parallel processors known as shading engines. Although the shaders are a SIMD design, the roles they play have expanded and they have been organized as a linear pipeline rather than as a true compute SIMD architecture. Mesh shaders change all that, but with some costs.

The mesh shader outputs triangles that go to the rasterizer. It is just a set of threads, and it is up to the developer how they work. With a mesh shader, you program a group of many threads that work together cooperatively rather than in isolation. When finished, the group outputs a small indexed triangle list. Each thread does its own part of the work, and the vertex data remains unchanged.

Both mesh and task shaders follow the programming model of compute shaders, using cooperative thread groups to compute their results and having no inputs other than a workgroup index.
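To show what that programming model looks like, here is a small CPU-side emulation. It is a sketch under stated assumptions, not real shader code: the group size of 32, the dictionary layout of a batch (`"vertices"` as indices into a shared position buffer, `"triangles"` as local index triples), and the function names are all hypothetical. The point is only that the sole input is the workgroup index, everything else is fetched from buffers, and the threads split the work between them.

```python
GROUP_SIZE = 32  # hypothetical number of threads per workgroup

def mesh_shader_workgroup(workgroup_index, batches, positions, transform):
    """Emulate one workgroup: its only input is the workgroup index.

    Returns (vertices, triangles), where triangles index into the returned
    vertex list. The shared `positions` buffer is read but never modified.
    """
    batch = batches[workgroup_index]
    out_vertices = [None] * len(batch["vertices"])
    out_triangles = [None] * len(batch["triangles"])

    # Each loop iteration stands in for one thread of the group. Threads
    # stride through the outputs so the work is shared cooperatively.
    for thread_id in range(GROUP_SIZE):
        for i in range(thread_id, len(out_vertices), GROUP_SIZE):
            out_vertices[i] = transform(positions[batch["vertices"][i]])
        for i in range(thread_id, len(out_triangles), GROUP_SIZE):
            out_triangles[i] = batch["triangles"][i]
    return out_vertices, out_triangles

positions = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
batches = [{"vertices": [0, 1, 2, 3], "triangles": [(0, 1, 2), (2, 1, 3)]}]
double = lambda p: (p[0] * 2, p[1] * 2, p[2] * 2)
print(mesh_shader_workgroup(0, batches, positions, double))
```

On real hardware the threads run concurrently and the outputs go straight to the rasterizer; the sequential loop here only mimics how the work is divided.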

Meshlets

GPUs and their associated APIs continued to evolve. In late 2020, a new general-purpose computational shader was developed based on Nvidia’s introduction of its Turing architecture GPU in the fall of 2018. The new GPU had task shaders, which Nvidia labeled as mesh shaders, and introduced the concept of meshlets. The new capabilities were accessible through extensions in OpenGL and Vulkan, and through DirectX 12 Ultimate.
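A meshlet is simply a small chunk of a larger mesh, sized so that one workgroup can process it. The following is a minimal greedy builder, offered as a sketch rather than as the algorithm any vendor or sample actually uses: it walks an index buffer in order and starts a new meshlet whenever adding the next triangle would exceed a vertex or triangle budget. The function name, the dictionary layout, and the default limits are assumptions chosen for illustration.

```python
def build_meshlets(indices, max_vertices=64, max_triangles=126):
    """Greedily split a triangle index buffer into meshlets.

    Each meshlet holds "vertices" (indices into the original vertex buffer)
    and "triangles" (triples of local indices into that vertex list).
    """
    if max_vertices < 3 or max_triangles < 1:
        raise ValueError("a meshlet must be able to hold at least one triangle")

    meshlets = []
    vertices, local_of, triangles = [], {}, []

    for start in range(0, len(indices) - len(indices) % 3, 3):
        tri = indices[start:start + 3]
        new_vertices = {v for v in tri if v not in local_of}

        # Close the current meshlet if this triangle would overflow a budget.
        if (len(vertices) + len(new_vertices) > max_vertices
                or len(triangles) + 1 > max_triangles):
            meshlets.append({"vertices": vertices, "triangles": triangles})
            vertices, local_of, triangles = [], {}, []

        for v in tri:
            if v not in local_of:
                local_of[v] = len(vertices)
                vertices.append(v)
        triangles.append(tuple(local_of[v] for v in tri))

    if triangles:
        meshlets.append({"vertices": vertices, "triangles": triangles})
    return meshlets

# A strip of four triangles split with deliberately tiny budgets.
strip = [0, 1, 2, 2, 1, 3, 2, 3, 4, 4, 3, 5]
for meshlet in build_meshlets(strip, max_vertices=4, max_triangles=2):
    print(meshlet)
```

Vertices shared across a meshlet boundary (2 and 3 in the example) are duplicated into both meshlets, which is the price paid for making each meshlet self-contained. Production builders typically also optimize for spatial locality, which this sketch ignores.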

A demonstration

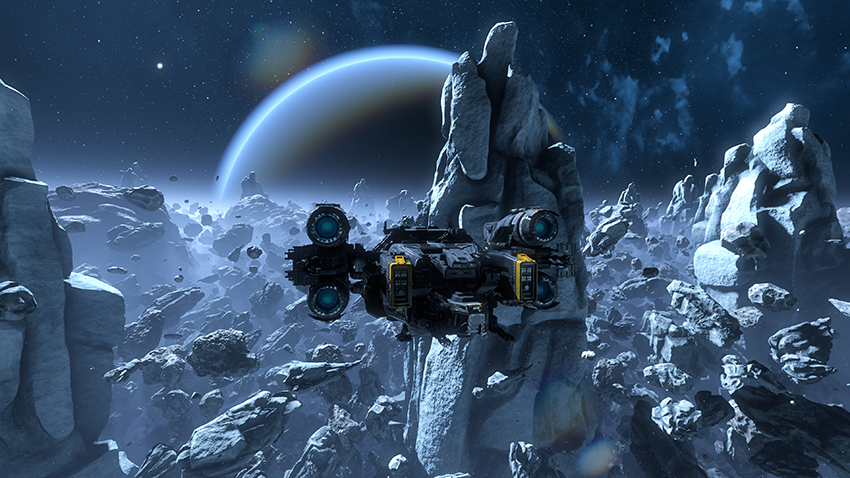

Epic developed a mesh shader demo for the PS5 called “Nanite virtualized micropolygon geometry”. It generates 20 million triangles.

There is an excellent demo and explanation of the Epic accomplishment that I’ve embedded below.

You can also see the Nvidia demo via the video below.

Mesh shaders replace the legacy pipeline with a new model that brings the power, flexibility, and control of a compute-programming model to the 3D pipeline.

Mesh techniques enable the expansion of models by orders of magnitude. This approach challenges ray tracing for realism and promises new frontiers for real-time.

Mesh shaders are fast, much faster than ray tracing.

And best of all—they are free.

Resources

- Reinventing the Geometry Pipeline: Mesh Shaders in DirectX 12

- Root Signatures

- How to deal with high-detail geometry

- Meshlet builder sample

- 3DMark Mesh Shader feature test

Dr. Jon Peddie is a recognized pioneer in the graphics industry, president of Jon Peddie Research, and has been named one of the world’s most influential analysts. Peddie has been an ACM Distinguished Speaker and is currently an IEEE Distinguished Speaker. He lectures at numerous conferences and universities on topics about graphics technology and the emerging trends in digital media technology. Former President of SIGGRAPH Pioneers, he serves on the advisory boards of several conferences, organizations, and companies, and contributes articles to numerous publications. In 2015, he was given the Lifetime Achievement award from the CAAD society. Peddie has published hundreds of papers to date and has authored and contributed to eleven books, including “Augmented Reality, where we all will live.” His latest book is “Ray Tracing, a Tool for all.”

Jon Peddie Research (JPR) is a technically oriented marketing, research, and management consulting firm. Based in Tiburon, California, JPR provides specialized services to companies in high-tech fields including graphics hardware development, multimedia for professional applications and consumer electronics, entertainment technology, high-end computing, and Internet access product development.