Image Credit: “Computational Interferometric Imaging” © 2023 Kotwal, Willomitzer, Gkioulekas

The realm of computational imaging continues to push the boundaries of possibility. We had the privilege of sitting down with the lecturers behind the SIGGRAPH 2023 Course “Computational Interferometric Imaging” — Alankar Kotwal, Florian Willomitzer, and Ioannis Gkioulekas — to uncover the inspiration behind their course, the fundamental principles of interferometric imaging, practical exercises that solidify understanding, and the real-world impact of this captivating domain. Join us on this journey to explore the convergence of optics, computation, and imaging, and how it’s shaping the future of technology and research.

SIGGRAPH: Interferometric imaging is a complex field that merges optics, computational techniques, and imaging. What inspired you to present a course on this topic at SIGGRAPH 2023?

Alankar Kotwal, Florian Willomitzer, Ioannis Gkioulekas (AK, FW, IG): Computational imaging, the joint design of optics and algorithms to make new imaging modalities possible, has always had a home at SIGGRAPH. However, interferometric imaging techniques specifically have not received as much attention from the SIGGRAPH computational imaging community as other imaging modalities. This is despite the tremendous potential of interferometric imaging methods for applications central to SIGGRAPH, such as micrometer-scale sub-surface and 3D imaging, especially in environments where the signal is buried in noise. Additionally, the guiding principles of interferometric imaging, namely the wave properties of light, are vital in designing the many display systems based on manipulating the coherence of light that are demonstrated at SIGGRAPH every year. Lastly, there is a lot of potential for the cross-pollination of ideas, techniques, and applications with other core research areas at SIGGRAPH, for example rendering and differentiable rendering, fabrication, and simulation. Bringing this imaging modality, relatively unknown but based on commonly used principles, to the community was the inspiration for this course.

SIGGRAPH: Share a brief overview of the fundamental principles, applications, and latest developments in computational interferometric imaging.

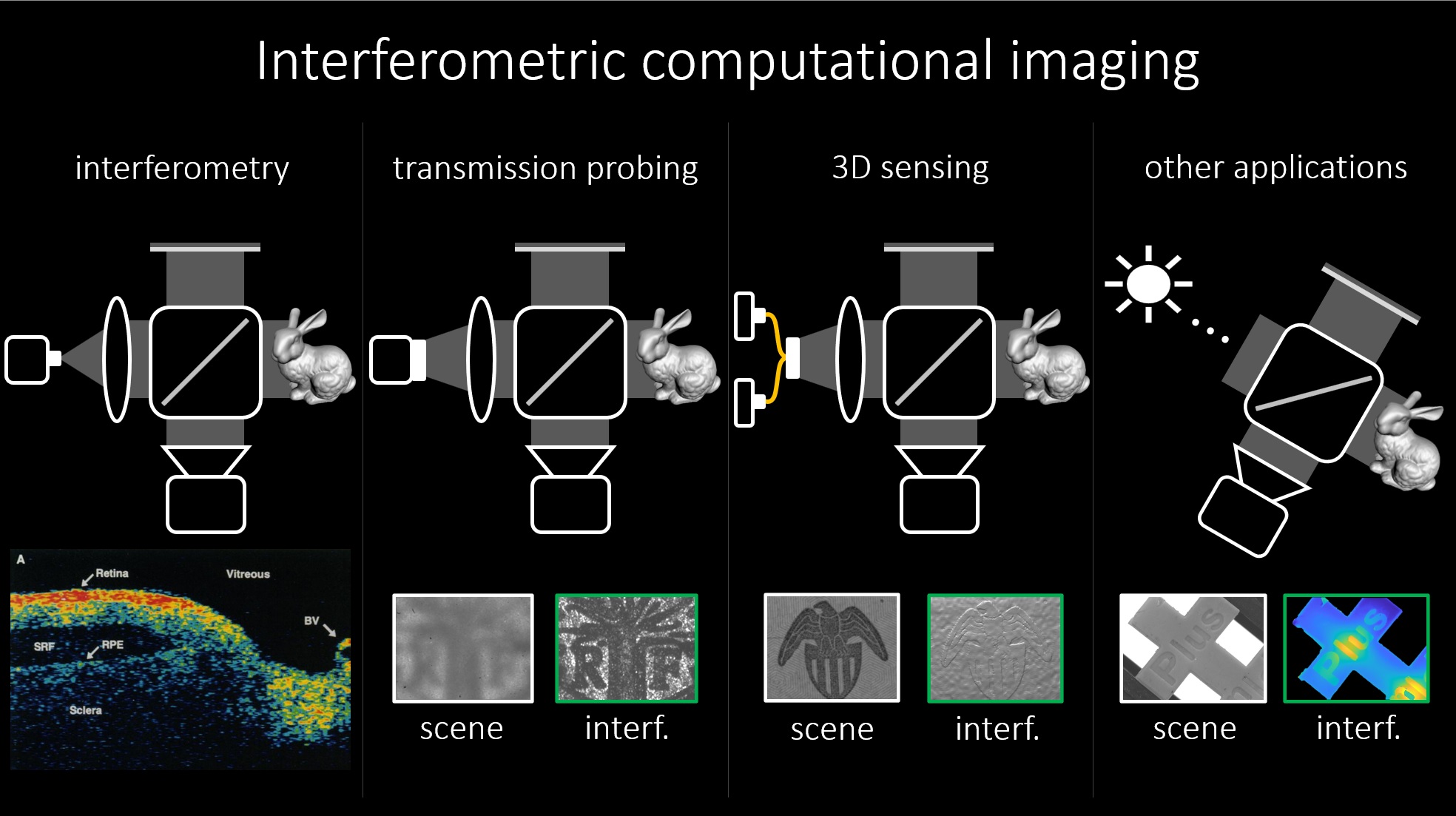

AK, FW, IG: Interferometric imaging systems are based on the wave nature of light. When two or more light beams are measured together, the total intensity is the sum of the individual intensities of the beams, plus a small variation called the interference between the beams. In everyday life, these variations are too tiny to see — but when engineered properly, interference is measurable and can provide a lot of information about the objects that these beams have interacted with. This engineering is the core concept in computational interferometric imaging. Variants of computational interferometric imaging have been around for several decades in medical imaging (such as optical coherence tomography used in ophthalmology) and industrial inspection (for high-resolution quality assurance in manufactured parts). Recent advances in the field include methods for high-resolution imaging through scattering media (like fog or tissue) and for imaging around corners.
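To make the "sum of intensities plus an interference term" idea concrete, here is a minimal Python sketch of the textbook two-beam case. It is an illustration only, not taken from the course materials, and it assumes two fully coherent, co-polarized beams. The function names and the 633 nm wavelength are hypothetical choices made for this example.

```python
import cmath
import math


def phase_from_path_difference(path_difference_m, wavelength_m):
    """Phase difference (radians) produced by an optical path difference."""
    return 2.0 * math.pi * path_difference_m / wavelength_m


def interference_term(i1, i2, phase_difference):
    """Cross term added to the plain sum of intensities (ideal coherent beams)."""
    return 2.0 * math.sqrt(i1 * i2) * math.cos(phase_difference)


def two_beam_intensity(i1, i2, phase_difference):
    """Intensity measured when two ideal coherent beams overlap on a detector.

    Each beam is modeled as a complex amplitude whose squared magnitude is its
    intensity; the detector measures the squared magnitude of their sum.
    """
    e1 = math.sqrt(i1)
    e2 = math.sqrt(i2) * cmath.exp(1j * phase_difference)
    return abs(e1 + e2) ** 2


if __name__ == "__main__":
    wavelength = 633e-9  # assumed wavelength in meters, chosen for illustration
    # Sweep the path difference over one wavelength in quarter-wave steps.
    for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
        phase = phase_from_path_difference(fraction * wavelength, wavelength)
        total = two_beam_intensity(1.0, 1.0, phase)
        cross = interference_term(1.0, 1.0, phase)
        print(f"path difference {fraction:.2f} wavelengths: "
              f"total = {total:.3f}, interference term = {cross:+.3f}")
```

In this idealized model the measured intensity swings between fully constructive and fully destructive as the path difference changes by half a wavelength, which is why interference is sensitive to sub-wavelength changes in the scene. Real systems have partial coherence and noise, so the measured variation is smaller than this sketch suggests.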

SIGGRAPH: Share some details about the practical exercises or projects that SIGGRAPH 2023 participants engaged in to solidify their understanding of the subject matter.

AK, FW, IG: Throughout the course, participants were encouraged to think about applications of the subject matter in their own fields. Early in the course, we listed applications of interferometry in a broad set of fields spanning ophthalmology, soft tissue imaging, lidar, inspection, fabrication, and non-line-of-sight imaging, covering a large set of research areas that the experienced audience would be interested in. Presenting the trade-offs between different interferometric methods was an exercise in thinking practically about how interferometry would fit into their work. For audience members new to these research areas, this was also an introduction to the exciting possibilities they offer. At the end, the audience received recommended resources and other courses to broaden their knowledge of graphics and wave optics, especially in rendering speckle, wave effects, and very general computational camera systems.

SIGGRAPH: What key takeaways did your course offer attendees so that they have the skills and knowledge to adapt to future developments in this area?

AK, FW, IG: The theoretical focus of the course was the wave properties of light and their use in imaging. However, the knowledge imparted along the way is useful to the broader graphics community as well. The course highlighted a new point of convergence between computer graphics, optics, imaging, robotics, and medicine, potentially leading to new research, engineering, and translational efforts within and across these areas. The course has the potential to benefit work in the non-research focus areas at SIGGRAPH as well, for example 3D acquisition for art and design, display systems for gaming and interactive applications, and wave-optics rendering for production and animation. We put together a website, linked here, with all of the course materials to make it easier for attendees and other interested members of the SIGGRAPH community to refer to our course.

SIGGRAPH: What are some real-world applications where computational interferometric imaging is making a significant impact today, and how can professionals and researchers leverage the insights gained from your course in their work?

AK, FW, IG: The biggest impact of computational interferometric systems has been on noninvasive clinical soft tissue imaging. Optical coherence tomography (OCT) systems, invented three decades ago, continue to be used for retinal imaging to detect disorders such as macular degeneration, diabetic retinopathy, and glaucoma (this is the machine you look into when an optometrist tests your eye). More recently, OCT has been adopted for monitoring atherosclerotic plaques in coronary arteries, monitoring osteoarthritis in joints, and microscopic visualization during surgery. Additionally, interferometric methods are used in shape sensing for quality assurance, in radio astronomy, and for detecting gravitational waves.

Despite delivering impressive image quality, these systems have drawbacks. Most interferometric systems are built on fiber optics because of its robustness and high signal-to-background and signal-to-noise ratios, but this precludes their use in applications requiring large fields of view and fast acquisition. In our course, we demonstrated how to build interferometric systems that reconcile these requirements, yielding fast, high-resolution 3D imaging systems with large fields of view that could be the baseline for developing further applications in shape sensing, seeing through scattering, and imaging around corners.

Alankar Kotwal is a postdoctoral researcher in neurosurgery at the University of Texas Medical Branch. His research focus is on building computational imaging systems to integrate and enhance visual feedback in neurosurgery. Previously, he completed his Ph.D. at Carnegie Mellon University working on interferometric imaging.

Florian Willomitzer is an associate professor at the Wyant College of Optical Sciences, University of Arizona, where he directs the Computational 3D Imaging and Measurement (3DIM) Lab. He graduated from the University of Erlangen-Nuremberg, Germany, where he received his Ph.D. degree with honors (summa cum laude) in 2017. During his doctoral studies, Florian investigated physical and information-theoretical limits of optical 3D-sensing for medical imaging and industrial inspection and implemented sensors that operate close to these limits. In the 3DIM Lab, Florian and his students work on novel methods to image hidden objects through scattering media (like tissue) or around corners, high-resolution holographic displays, unconventional methods for precise VR eye-tracking, and the implementation of high-precision metrology methods in low-cost mobile handheld devices. Moreover, Florian’s group develops novel time-of-flight and structured light imaging techniques working at depth resolutions in the 100 μm range. Florian has served as chair and committee member of several Optica COSI conferences; Optics Chair of the 2022 IEEE ICCP conference; committee member of Optica FiO conferences; and as a reviewer for Nature, Optica (OSA), SPIE, IEEE, and CVPR. He is a recipient of the NSF CRII grant and winner of the Optica 20th Anniversary Challenge, and his Ph.D. thesis received the Springer Theses Award for Outstanding Ph.D. Research.

Ioannis Gkioulekas is an associate professor at the Robotics Institute, Carnegie Mellon University (CMU). He is a Sloan Research Fellow and a recipient of the NSF CAREER Award and the Best Paper Award at CVPR 2019. He has Ph.D. and M.S. degrees from Harvard University, where he was advised by Todd Zickler, and a diploma from the National Technical University of Athens, where he was advised by Petros Maragos. He works broadly in computer vision and computer graphics, but focuses on computational imaging, the joint design of optics, electronics, and computation to create imaging systems with unprecedented capabilities. Some examples include imaging systems that can see around corners or through skin; passive 3D sensing systems with extreme resolution; ultrafast programmable lenses; and imaging systems that adapt to their environments. Technical keywords that often show up in his research include non-line-of-sight imaging, single-photon imaging, LiDAR, SONAR, interferometry, speckle, acousto-optics, physics-based rendering, differentiable rendering, Monte Carlo simulation, and probabilistic modeling.