Image credit: AXEREAL Co., Ltd., Keio University Graduate School of Media Design, Japan

SIGGRAPH 2020 Emerging Technologies selection SlideFusion is a novel, remote surrogacy wheelchair system that integrates implicit eye gaze modality communication between the wheelchair user and a remote operator. To learn more about SlideFusion, we connected with Ryoichi Ando, one of the team members on this project. Here, Ando shares the inspiration behind the project, how it was developed, and how he sees it assisting users in the future.

SIGGRAPH: Tell us about the process of developing SlideFusion. What inspired you and your team to pursue the project?

Ryoichi Ando (RA): We started this research based on the idea of adding human factors into assistive technologies and remote support. Our team has worked extensively on telepresence and remote collaboration, including Fusion, which we showcased in the SIGGRAPH 2018 Emerging Technologies program. We have also worked extensively on augmenting the wheelchair experience: one of our earlier projects, SlideRift, focused on enhancing the experience of wheelchair riding (Figure 1).

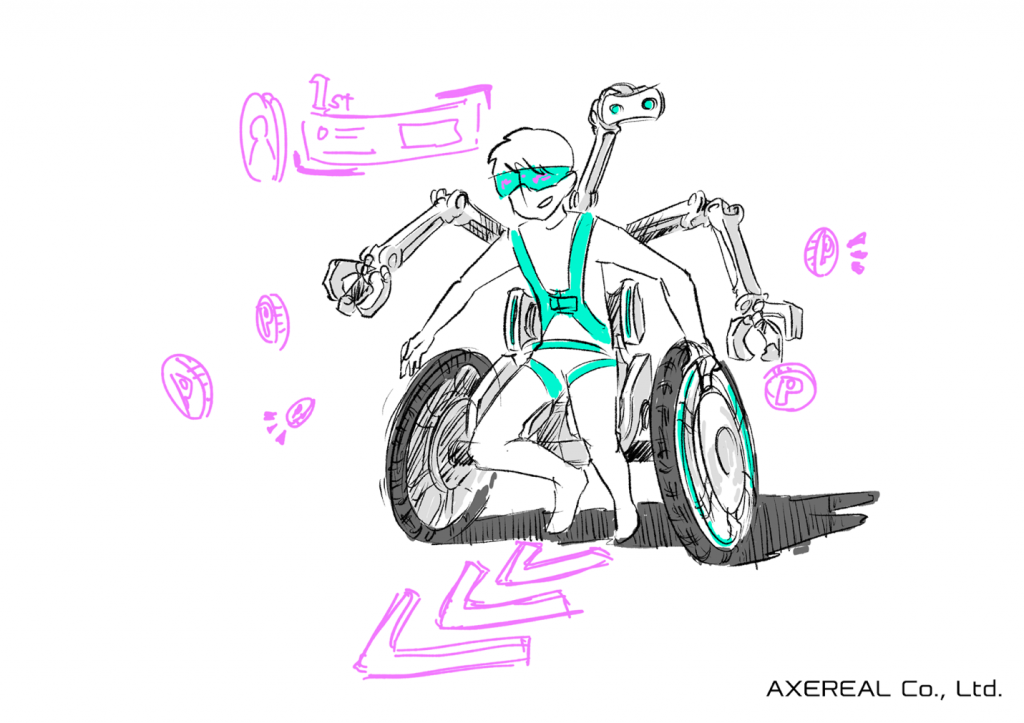

Early last year, we started building basic prototypes that augment the wheelchair with an anthropomorphic telepresence system, allowing remote users to engage with the wheelchair user. The core motivation was to rethink what an emerging wheelchair is and what collaborative technology in an assistive context may look like. See Figure 2 for a conceptual image of the system.

In our initial prototypes, we used only direct, explicit forms of communication, such as speech or hand gestures, to share the wheelchair user’s intentions with the remote operator. However, we realized that a person using a wheelchair relies more on vision and eye coordination, which hints at an implicit modality that could be exploited in collaborative assistive technology. Thus, we modified our prototype to include eye gaze as an input modality that can be shared with the remote user.

SIGGRAPH: Let’s get technical. How did you develop the technology?

RA: The wheelchair itself consists of three main systems:

- A motorized wheelchair that provides enhanced motion and navigation

- A surrogate system with two anthropomorphic arms and a three-axis head, equipped with stereo vision, binaural audio for hearing, and audio out

  - The overall design of the system is based on a previously developed system by Saraiji et al. [1]

- A pupil tracking system worn by the chair user, used to track eye movement

Saraiji and I carried out the hardware development, focusing on ergonomic factors and on the alignment of the surrogate system with the wheelchair user. Some technical details had to be considered, such as calculating the center of gravity so that the overall chair, including the mounted surrogate system, would remain balanced when the motorized wheelchair began moving.
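To make the balance consideration concrete, here is a minimal sketch of a static center-of-gravity check. It is not the team's actual calculation: the masses, positions, wheel-contact bounds, and the names `Component`, `center_of_gravity`, and `is_statically_balanced` are all illustrative assumptions, and the sketch ignores the acceleration effects that matter once the chair starts moving.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Component:
    """A rigid part of the chair, treated as a point mass (hypothetical model)."""
    name: str
    mass_kg: float
    position_m: Vec3  # (x forward, y left, z up), measured from the chair origin


def center_of_gravity(components: Sequence[Component]) -> Vec3:
    """Return the mass-weighted average position of all components."""
    total_mass = sum(c.mass_kg for c in components)
    if total_mass <= 0:
        raise ValueError("total mass must be positive")
    x, y, z = (
        sum(c.mass_kg * c.position_m[axis] for c in components) / total_mass
        for axis in range(3)
    )
    return (x, y, z)


def is_statically_balanced(
    cog: Vec3,
    x_bounds_m: Tuple[float, float],
    y_bounds_m: Tuple[float, float],
) -> bool:
    """Check that the ground projection of the center of gravity lies inside
    the rectangle spanned by the wheel contact points (assumed rectangular)."""
    x, y, _ = cog
    return (
        x_bounds_m[0] <= x <= x_bounds_m[1]
        and y_bounds_m[0] <= y <= y_bounds_m[1]
    )


# Illustrative numbers only; none of these values come from SlideFusion.
parts = [
    Component("wheelchair base", 60.0, (0.0, 0.0, 0.3)),
    Component("rider", 70.0, (0.05, 0.0, 0.6)),
    Component("surrogate system", 10.0, (-0.2, 0.0, 1.0)),
]
cog = center_of_gravity(parts)
balanced = is_statically_balanced(
    cog, x_bounds_m=(-0.3, 0.3), y_bounds_m=(-0.25, 0.25)
)
print(cog, balanced)
```

In this toy setup, mounting the surrogate system high and toward the rear shifts the combined center of gravity up and back, which is the effect a designer would want to keep inside the wheelbase.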

Other considerations in developing the software and control algorithm of the robot were safety measures to avoid potential collisions between the robotic system and the wheelchair user.
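One common way to express this kind of safety constraint is a keep-out zone around the rider. The interview does not describe the team's method, so the sketch below is only an assumed approach: it models the rider's head and torso as a sphere and pushes any commanded hand position that falls inside the sphere back to its surface. The function name `clamp_outside_sphere`, the coordinate frame, and the radius are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def clamp_outside_sphere(target: Vec3, center: Vec3, radius: float) -> Vec3:
    """Return `target` unchanged if it is outside the keep-out sphere;
    otherwise project it radially onto the sphere's surface."""
    offset = [t - c for t, c in zip(target, center)]
    dist = math.sqrt(sum(o * o for o in offset))
    if dist >= radius:
        return (target[0], target[1], target[2])
    if dist == 0.0:
        # No direction to push along; move straight up as an arbitrary choice.
        return (center[0], center[1], center[2] + radius)
    scale = radius / dist
    x, y, z = (c + o * scale for c, o in zip(center, offset))
    return (x, y, z)


# Illustrative values: a 0.4 m keep-out sphere centered on the rider.
rider_center = (0.0, 0.0, 0.0)
safe_target = clamp_outside_sphere((0.1, 0.0, 0.0), rider_center, 0.4)
print(safe_target)
```

A practical controller would also need to check the elbows and forearms, not only the hand target, and would likely limit speed near the rider; this sketch covers just the simplest case.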

On the remote operator side, we used a head-mounted display (HMD) to track head posture and present stereo vision to the operator. Similarly, we employed a tracking system for the operator’s hands and fingers to control the arms. The captured pupil position of the wheelchair user is translated into the operator’s head coordinates and then overlaid onto the operator’s visuals.
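The gaze overlay step can be sketched as a change of coordinate frame followed by a camera projection. The code below is an illustration under several assumptions that the interview does not confirm: the wheelchair user's gaze is already available as yaw and pitch angles in a chair-fixed frame, the surrogate head shares its origin with the user's eyes (parallax is ignored), the head has no roll, and the operator's view follows a simple pinhole model. All function names, the resolution, and the field of view are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def gaze_direction(yaw_rad: float, pitch_rad: float) -> Vec3:
    """Unit vector for the given angles in a frame with x right, y up,
    z forward. Positive yaw looks right, positive pitch looks up."""
    return (
        math.sin(yaw_rad) * math.cos(pitch_rad),
        math.sin(pitch_rad),
        math.cos(yaw_rad) * math.cos(pitch_rad),
    )


def chair_to_head_frame(direction: Vec3, head_yaw_rad: float,
                        head_pitch_rad: float) -> Vec3:
    """Express a chair-frame direction in the frame of a head that was
    rotated by yaw and then pitch (inverse rotation: undo yaw, then pitch)."""
    x, y, z = direction
    cy, sy = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    x1 = cy * x - sy * z
    z1 = sy * x + cy * z
    cp, sp = math.cos(head_pitch_rad), math.sin(head_pitch_rad)
    y2 = cp * y - sp * z1
    z2 = sp * y + cp * z1
    return (x1, y2, z2)


def project_to_pixels(direction: Vec3, width_px: int, height_px: int,
                      horizontal_fov_rad: float
                      ) -> Optional[Tuple[float, float]]:
    """Pinhole projection; returns None if the point is behind the view
    or falls outside the image."""
    x, y, z = direction
    if z <= 0.0:
        return None
    focal_px = (width_px / 2.0) / math.tan(horizontal_fov_rad / 2.0)
    u = width_px / 2.0 + focal_px * x / z
    v = height_px / 2.0 - focal_px * y / z  # image v axis points down
    if not (0.0 <= u < width_px and 0.0 <= v < height_px):
        return None
    return (u, v)


def gaze_overlay_pixel(gaze_yaw_rad: float, gaze_pitch_rad: float,
                       head_yaw_rad: float, head_pitch_rad: float,
                       width_px: int = 1280, height_px: int = 720,
                       horizontal_fov_rad: float = math.radians(90.0)
                       ) -> Optional[Tuple[float, float]]:
    """Pixel at which to draw the wheelchair user's gaze marker in the
    operator's view, or None if the gaze target is not visible."""
    direction = gaze_direction(gaze_yaw_rad, gaze_pitch_rad)
    in_head = chair_to_head_frame(direction, head_yaw_rad, head_pitch_rad)
    return project_to_pixels(in_head, width_px, height_px, horizontal_fov_rad)


# The user looks 10 degrees to the right while the surrogate head faces forward.
marker = gaze_overlay_pixel(math.radians(10.0), 0.0, 0.0, 0.0)
print(marker)
```

When the function returns `None`, the user is looking at something outside the operator's current view, which is a case where an interface might show an edge indicator instead of a marker.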

SIGGRAPH: SlideFusion aids in accessibility for wheelchair users. Why was it important for you to create an adaptive system?

RA: Our proposal was to socially reassess the potential value of the wheelchair as a mobile object, and to do so, we had to consider what existing wheelchair users need. When existing wheelchair users socially demonstrate the potential value of this vehicle, other people will see it and consider using a wheelchair themselves.

SIGGRAPH: Why is eye gaze modality such a significant part of your project? How did you develop the system to respond to a user’s eyes?

RA: As we mentioned earlier, we added the eye gaze modality after feedback from the remote user in our initial prototype and trials. It was due to two main factors:

- The difficulty the wheelchair user has in providing explicit feedback via hand gestures while driving the chair, as their hands are already occupied

- The cognitive load placed on the wheelchair user when describing their intentions

The eye gaze modality is not limited to such scenarios; it also has the potential to add implicit communication between multiple people. Some concerns arose during this experiment, such as privacy. To address this, the wheelchair user should have full control over whether or not to share eye gaze.

During several experiments, we found interesting uses of shared eye gaze, such as knowing that a person is not aware of particular events happening in their peripheral vision or outside their visual field. The remote operator can use such cues to prompt the wheelchair user or guide them when intervention is required.

SIGGRAPH: What’s next for SlideFusion? How do you envision it helping users in the future?

RA: With this system, we add another layer of embodiment and augmentation to the wheelchair in particular, and we make use of eye gaze, a modality that has not been well explored in assistive technology. This makes embodied support possible for people with physical or language impairments.

SIGGRAPH: Tell us what you’re most looking forward to in participating in the virtual SIGGRAPH 2020 conference.

RA: Our team has a lot of experience participating in SIGGRAPH “physically” in the past. This year will be unique for everyone because of its virtual format.

SIGGRAPH: What advice do you have for someone looking to submit to Emerging Technologies at a future SIGGRAPH conference?

RA: Although preparing a submission is quite tedious and takes over six months of prototyping, experimenting, and iterating over and over, it is thrilling and fun for those who enjoy research and tinkering. Submitting to the Emerging Technologies track requires not only a well-built system or prototype; it is also crucial to know how to present it in a self-contained, three-minute video that is easy to grasp yet covers all the required details of the system. Getting accepted is usually half the journey; the days at the conference are the real test and the reward!

Reference

[1] Saraiji, MHD Yamen, et al. “Fusion: full body surrogacy for collaborative communication.” ACM SIGGRAPH 2018 Emerging Technologies. 2018. 1-2.

SIGGRAPH 2020 registration is now open! Register here for the virtual conference and prepare to discover even more mind-blowing Emerging Technologies.

Ryoichi Ando studied at the Royal College of Art, Imperial College London, and the Pratt Institute in the 2015 KMD Global Innovation Design Program. In 2017, he completed the master’s program at Keio University Graduate School of Media Design. He is currently enrolled in the doctoral program at Keio University Graduate School of Media Design, and is the president of AXEREAL Co., Ltd., director of the Superhuman Sports Society, organizer of the augmented physical expression group IKA, and the executive committee chairman of SlideRiftCup.

MHD Yamen Saraiji is the director at AvatarIn, Tokyo, leading the development of the cutting-edge telepresence robotics platform. He received his M.Sc. and Ph.D. degrees in media design from Keio University in 2015 and 2018, respectively. His research, namely “Radical Bodies,” expands on the topic of machines as an extension of our bodies and emphasizes the role of technologies and robotics in reshaping our innate abilities and cognitive capacities. His work, which is experience-driven, has been demonstrated and awarded at various international conferences such as SIGGRAPH, Augmented Human, and ICAT.
