Copyright 2019 UMBC

Communicating with robots has become commonplace in many households, whether that means asking a smart home device to make a list or to adjust the room temperature. We can command our devices, but can we really communicate? Mark Murnane and his team from the University of Maryland, Baltimore County (UMBC) think these devices are capable of much more.

In his SIGGRAPH 2019 Posters submission “Learning From Human-Robot Interactions in Modeled Scenes,” Murnane explores how to model more sophisticated human-to-robot communication. Here, we dive into how the team accomplished this feat, where the research is headed, and how we can expect this to impact the future of robots in our everyday lives.

SIGGRAPH: Talk a bit about the process for developing your Poster, “Learning From Human-Robot Interactions in Modeled Scenes.” What inspired your team to pursue this research?

Mark Murnane (MM): We were inspired by the idea that humans and robots should be able to learn from each other, but we’ve been held back in the past by the simple logistics of the problem. Getting a human in the same room as a robot and having them interact with each other in real time to produce data is a lot more complicated than it may seem. Robots, especially those being developed in research, tend to be rather temperamental. When you are working on a robot that will talk to people, understand and respond with natural gestures, or otherwise perform in the social dance of human communication, you don’t want to have to worry that your batteries are overly sulfated or that one of your joint encoders has worked its way loose. These are relatively isolated problems with well understood solutions; however, they can stand in the way of gathering the data needed to teach a machine to handle scenarios that are still on the frontier of understanding.

Of course, roboticists faced this problem long before attempting to involve humans. To solve these issues, the community has produced an excellent set of simulators that allow software models of robots to navigate virtual worlds where randomness can be narrowed to only the variables needed for each test. However, while these existing simulators excel at modeling robot-world interactions, they are less adept at modeling robot-human interactions. In starting this project, we sought to construct a simulation environment that would accurately model a human and robot interacting with each other through speech, gaze, gestures, and motion, while building on existing systems to simultaneously model the robot interacting with the virtual world.

SIGGRAPH: Let’s get technical. How long did your research take? How many people were involved?

MM: This project took about four months from the first development to the release of the poster; however, it has formed the base of a much larger project that will be carried out through the end of 2020 and likely beyond. Two developers, one undergraduate student, and a research staff member were engaged for the initial development; however, the project that will continue forward is engaging two research labs with three principal investigators and roughly a dozen students.

SIGGRAPH: What technology was needed to develop your research? How did you combine the strength of two existing engines to create a simulation that collects the correct amount of data?

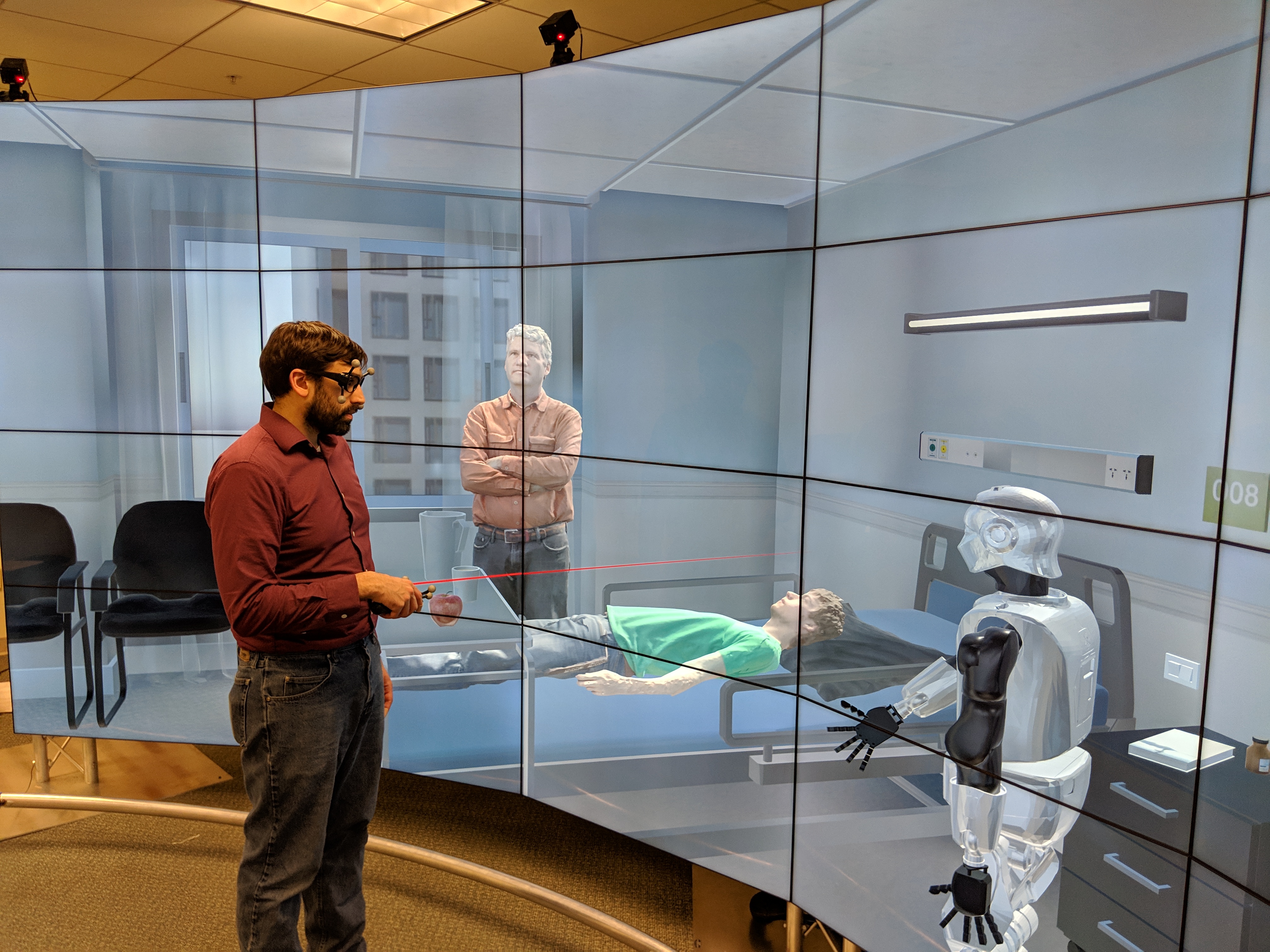

MM: In order to make the tool as broadly useful as possible, we started by choosing widely used tools. We are using Robot Operating System (ROS) as the underlying network transport and the Gazebo simulator as the physics simulator for the robot-world interactions. Using ROS and Gazebo allowed us to use existing models and APIs to simulate our research vehicle, including the Husky mobile robotics platform. On the human side of the equation, we decided to use virtual reality (VR) to immerse the test participant in the same world as the robot and to allow the participant to interact with the virtual robot. To that end, we used the Unity game engine to drive one of several VR systems, including the CAVE2 system, as pictured on our poster, and the Valve Index when a more isolated experience was needed.

With the human able to see the world through VR and the robot able to sense the world through ROS, the remaining task was to enable the human to see the robot and the robot to see the human. By using ROS-Sharp, we were able to pass messages in real time from the Unity world to the ROS API and back. From there, we were able to tie a scale model of the robot to the joint angles calculated by the Gazebo simulation. With this, commanding the robot’s controller to move forward would generate virtual motor control commands. This caused the simulated motors in Gazebo to generate torque and move the linkages of the robot, causing a kinematic update to be broadcast via the ROS-Sharp bridge to Unity. This mapped the joint angle to an actor in the scene, ultimately allowing the human in VR to see the motion that resulted from the original command.
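As a rough illustration of the last step in that chain, the sketch below shows the kind of conversion a bridge has to perform before a kinematic update can drive an actor in Unity. It is not the team's code and does not use the ROS-Sharp API. It assumes the usual axis conventions (ROS: x forward, y left, z up; Unity: x right, y up, z forward) and joint angles reported in radians. The joint names are made up, and any change of rotation sense between the two coordinate systems is left out.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def ros_to_unity_position(p: Vec3) -> Vec3:
    """Map a ROS point (x forward, y left, z up) onto Unity axes (x right, y up, z forward)."""
    x, y, z = p
    # Unity's "right" is the opposite of ROS "left"; "up" and "forward" are relabelled.
    return (-y, z, x)


def joint_angles_to_degrees(joint_state: Dict[str, float]) -> Dict[str, float]:
    """Convert joint angles from radians to degrees, keyed by joint name."""
    return {name: math.degrees(angle) for name, angle in joint_state.items()}


# Hypothetical kinematic update: joint names and values are illustrative only.
update = {"front_left_wheel": math.pi / 2, "front_right_wheel": math.pi}
unity_angles = joint_angles_to_degrees(update)
unity_position = ros_to_unity_position((1.0, 2.0, 0.5))
```

In a real bridge, each converted value would then be applied to the matching part of the robot model in the scene on every update.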

The robot is able to sense the world through virtual sensors modeled in the Gazebo simulator. When the wheels are commanded to turn, Gazebo calculates the resulting outputs for the corresponding encoders and sends signals back to the robot controller so that it can react to the change in position. This allows the robot to calculate its position within the virtual world. However, Gazebo is unaware of the motion of the human participant, and thus cannot generate any sensor data that includes the presence of the human. To allow the robot to sense the presence and actions of the human, we needed a model of the human participant. We used the UMBC photogrammetry system to scan people so that we had a real-world, scaled, full color, high-resolution model that could be used inside the Unity game engine. With this model hand-rigged in Maya and imported to Unity, we are able to use the VR tracking data to provide a real-time model of the participant that can generate realistic RGB camera data, as well as depth imagery. We outfitted the virtual robot inside the Unity game engine with an RGB camera as well as a depth camera, and then streamed the generated data back through ROS to the robot controller.
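The encoder-based position estimate described above can be sketched with simple dead reckoning. This is a minimal example that assumes a differential-drive model with one encoder per side. The Husky's actual drive geometry and controller are not described in the interview, so the tick count, wheel radius, and track width below are placeholder values.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x axis


def update_pose(pose: Pose, left_ticks: int, right_ticks: int,
                ticks_per_rev: int, wheel_radius: float, track_width: float) -> Pose:
    """Advance the pose estimate using encoder ticks counted since the last update."""
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_centre = (d_left + d_right) / 2.0          # distance travelled by the robot centre
    d_theta = (d_right - d_left) / track_width   # change in heading
    # Use the heading halfway through the step to reduce integration error.
    heading = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_centre * math.cos(heading),
        y=pose.y + d_centre * math.sin(heading),
        theta=pose.theta + d_theta,
    )


# Placeholder parameters, not taken from any real robot specification.
straight = update_pose(Pose(), 1000, 1000, ticks_per_rev=1000,
                       wheel_radius=0.1, track_width=0.5)
spin = update_pose(Pose(), -250, 250, ticks_per_rev=1000,
                   wheel_radius=0.1, track_width=0.5)
```

Equal tick counts on both sides move the estimate straight ahead, while opposite counts rotate it in place.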

Other sensors, such as audio to capture speech, were more straightforward. Since the headset-based VR systems we use already contain microphones, the audio can be captured directly through the headset. For our wall-based VR system, we added a USB microphone.

With the robot-world and physics calculations feeding the sensor data generated from Gazebo, and the robot-human sensor data coming from Unity, we are now able to fully model the scenario from the perspective of the robot, complete with a human participant. Additionally, as the performance of the human may be separated from the photogrammetry-acquired model, we are able to multiply our captured performance data, or even replay it with different robot controllers to study how they would react to precisely the same performance. From the human perspective, the robot is kept in the loop, potentially allowing more realistic reactions to be elicited from participants.
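The idea of replaying one captured performance against different robot controllers can be sketched as follows. Everything here is hypothetical: the `Frame` layout (head and two hand positions, with y as the up axis), the `replay` helper, and the two toy controllers are illustrative stand-ins, not the team's data format or control software.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Frame:
    """One sample of a recorded human performance (hypothetical layout)."""
    t: float          # seconds since the start of the capture
    head: Vec3        # tracked positions, y is up
    left_hand: Vec3
    right_hand: Vec3


Controller = Callable[[Frame], str]


def replay(frames: List[Frame], controller: Controller) -> List[str]:
    """Feed the same recorded frames, in time order, to a controller."""
    return [controller(f) for f in sorted(frames, key=lambda f: f.t)]


def cautious(frame: Frame) -> str:
    # Toy rule: treat a hand raised above the head as a signal to stop.
    return "stop" if frame.right_hand[1] > frame.head[1] else "idle"


def eager(frame: Frame) -> str:
    # Toy rule: treat the same gesture as an invitation to approach.
    return "approach" if frame.right_hand[1] > frame.head[1] else "idle"


recording = [
    Frame(0.5, (0.0, 1.7, 0.0), (-0.3, 1.0, 0.0), (0.3, 1.9, 0.1)),
    Frame(0.0, (0.0, 1.7, 0.0), (-0.3, 1.0, 0.0), (0.3, 1.0, 0.0)),
]
cautious_log = replay(recording, cautious)
eager_log = replay(recording, eager)
```

Because both controllers see exactly the same frames, any difference between the two logs comes from the controllers rather than from variation in the human performance.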

SIGGRAPH: What was your biggest challenge?

MM: The most challenging part of the project to date has been capturing the human performance through the sensors available in VR. While the currently available three-point tracking systems are able to capture a large gamut of gestures, we want our system to be able to capture as wide a range of motion as possible. We are beginning to investigate using other methods, such as a Kinect or a Vicon motion-capture stage, to capture a much higher-quality rig.

SIGGRAPH: What do you find most exciting about the final research you presented?

MM: I’m excited that it’s building into something bigger. With this initial work completed, we are beginning work on using the system we developed to train neural networks on object and speech recognition in situ, as well as beginning to work with physical hardware to verify that results produced in the simulator are able to transfer to the real world. Ultimately, this simulator could become a real force-multiplier for research in this area.

SIGGRAPH: What’s next for “Learning From Human-Robot Interactions in Modeled Scenes”? What kinds of applications could this lead to?

MM: We’ve become quite accustomed to interacting with machines through voice in the form of smart voice assistants like Amazon Alexa or Google Assistant; however, these systems aren’t aware of the many other modes through which humans communicate. For example, when we ask a smart speaker to turn on a light in our house, we cannot point at the light in question to specify it as the target. By producing a simulator that can model these types of natural interactions, we hope that, in the future, devices will become more aware of our language.

SIGGRAPH: What did you most enjoy about your first SIGGRAPH Conference?

MM: My first SIGGRAPH was an excellent conference. Getting to see the projects in the Experience Hall in person, rather than in the 30-second clips online, was great.

SIGGRAPH: What advice do you have for someone looking to submit to Posters for a future SIGGRAPH conference?

MM: Bring business cards with you when you present your poster. There were a lot of people who saw our poster and either had good questions about what we did, or were working on something similar. SIGGRAPH is a great place to talk shop with other attendees.

Do you have in-progress or completed research to share? Consider submitting to the SIGGRAPH 2020 Posters program now through 28 April.

Mark Murnane is a research assistant at the University of Maryland, Baltimore County where he develops software to operate a custom photogrammetry rig, as well as cluster-based systems to process the captured images. He also assists several researchers in developing custom embedded systems for interactive art installations and to solve various photogrammetry-related challenges.