Held in partnership with Microsoft and presented annually at the North American SIGGRAPH conference, the ACM Student Research Competition is a unique forum for undergraduate and graduate students to present their original research to a panel of judges and conference attendees. Each year, first-, second-, and third-place winners are chosen in both the undergraduate and graduate research categories.

Here, we caught up with SIGGRAPH 2017 first-place graduate winner Chloe LeGendre (whose recent research has been accepted to the SIGGRAPH 2018 Posters program) to learn about her experience.

SIGGRAPH: Your winning project was titled “Improved Chromakey of Hair Strands via Orientation Filter Convolution.” Tell us a bit about the project.

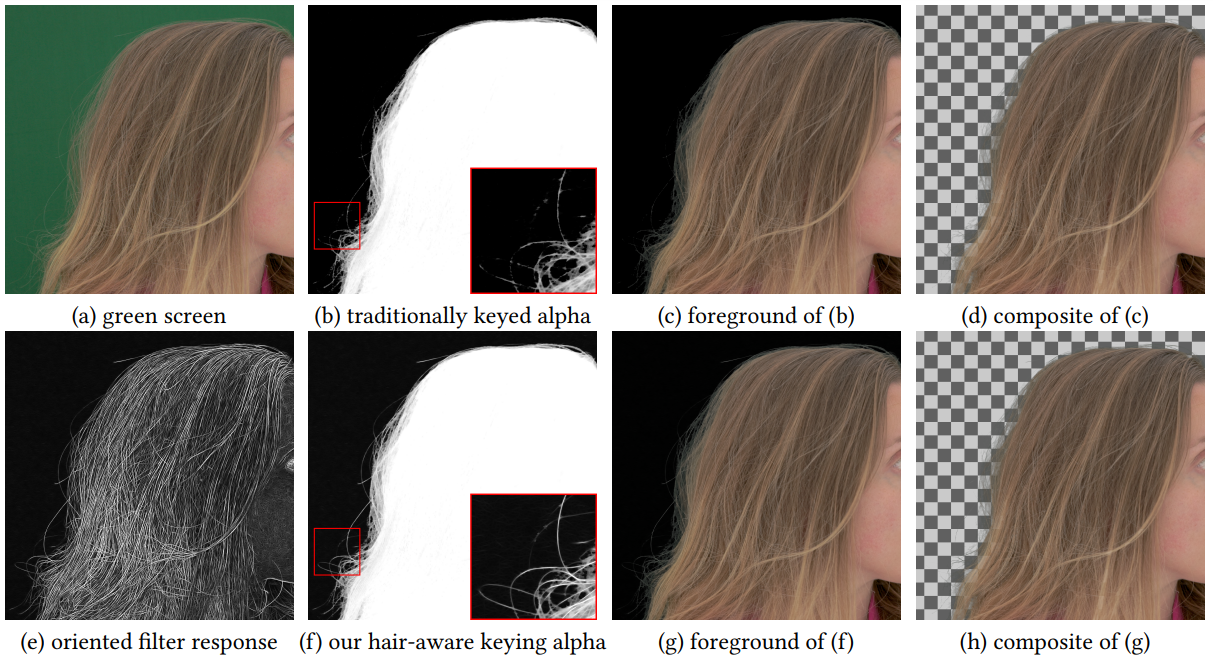

Chloe LeGendre (CL): “Chromakeying” is a widely employed visual effects technique for separating the foreground subject of an image or video from the background using color differences, with the goal of later compositing the foreground subject onto a new background. Actors are often filmed in a studio in front of a green or blue screen to ensure that color differences are large enough to easily detect which pixels are foreground and which are background.
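
The color-difference idea can be illustrated with a minimal sketch in plain Python. This is a generic textbook-style key, not the keyer used in this project or on NDT. It assumes 8-bit RGB pixels and a green backing whose reference color is known (the hypothetical `screen` default below). It then scales each pixel’s “green excess” (green minus the larger of red and blue) against the screen’s own green excess to produce an alpha (opacity) value.

```python
def key_alpha(pixel, screen=(20, 200, 30)):
    """Estimate foreground alpha for one 8-bit RGB pixel with a color-difference key.

    `screen` is a hypothetical reference color for the green backing (an assumption).
    Returns 0.0 for pure screen, 1.0 for solid foreground, and values in
    between for pixels that mix the two (e.g. partially covered by a hair strand).
    """
    r, g, b = pixel
    sr, sg, sb = screen
    screen_excess = sg - max(sr, sb)   # how strongly the backing itself reads as green
    if screen_excess <= 0:
        raise ValueError("screen color must be predominantly green")
    excess = g - max(r, b)             # how strongly this pixel reads as green
    alpha = 1.0 - excess / screen_excess
    return min(1.0, max(0.0, alpha))   # clamp to the valid alpha range


if __name__ == "__main__":
    samples = {
        "green screen": (20, 200, 30),
        "skin": (180, 150, 120),
        "gray hair": (200, 200, 200),
        "half-covered strand": (110, 200, 115),  # 50/50 mix of gray hair and screen
    }
    for name, px in samples.items():
        print(f"{name}: alpha = {key_alpha(px):.2f}")
```

The half-covered pixel lands at an alpha of 0.5, which is the kind of fractional value a thin strand needs. A keyer that clips or thresholds such in-between values too aggressively is one way fine strands get lost.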

Since hair strands are thin and can be semi-translucent, they’re especially hard to separate from backgrounds. These pesky strands can pose a major challenge to professional compositors, who have the difficult job of convincingly and realistically placing actors filmed in front of green screens into novel scenes. When hair strands are missing, our visual system tells us that something is wrong, and we immediately detect that the composite is “faked,” so visual effects production often includes a lot of manual compositing labor to keep those hair strand flyaways from, well, flying away with the background.
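
Why partial coverage matters can be written with the standard “over” compositing equation. This is general background rather than a formula specific to this project. For each pixel, the composite color $C$ blends the keyed foreground color $F$ with the new background color $B$ using the matte value $\alpha \in [0, 1]$:

$$
C = \alpha F + (1 - \alpha) B
$$

As an illustration, take a single channel where a gray strand has $F = 200$ and the new background has $B = 50$. Suppose the strand covers 30% of the pixel ($\alpha = 0.3$):

$$
C = 0.3 \cdot 200 + 0.7 \cdot 50 = 60 + 35 = 95
$$

If the key instead rounds that pixel down to $\alpha = 0$, then $C = B = 50$, and the strand disappears from the composite.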

In this project, we proposed an image processing technique that would improve automated chromakeying for hair strands by using “oriented image filters,” which function by detecting “strand-shaped” objects in an image. These image filters have been known to the image processing and computer vision research communities for decades, but to the best of our knowledge, no prior work had applied them to the problem of chromakeying.
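
To give a feel for what an “oriented image filter” does, here is a minimal sketch in plain Python. It is not the filter bank from the project. It uses a simplified zero-mean line filter, with a Gaussian profile across a line through the kernel center, in place of the Gabor or steerable filters common in the literature. The function names, the 7×7 kernel, σ = 1.0, and the eight orientations are all illustrative assumptions. At each pixel, the orientation whose kernel responds most strongly suggests the local direction of a strand-like structure, and the response size suggests how strand-like it is.

```python
import math


def oriented_kernel(theta, size=7, sigma=1.0):
    """Hypothetical zero-mean line filter tuned to thin structures at angle theta.

    Weights fall off with perpendicular distance from a line through the
    kernel center. Subtracting the mean makes flat regions respond with ~0.
    Angles are in image coordinates (x to the right, y downward).
    """
    half = size // 2
    ux, uy = math.cos(theta), math.sin(theta)   # unit direction of the line
    weights = []
    for dy in range(-half, half + 1):
        row = []
        for dx in range(-half, half + 1):
            dist = -dx * uy + dy * ux           # signed perpendicular distance to the line
            row.append(math.exp(-dist * dist / (2 * sigma * sigma)))
        weights.append(row)
    mean = sum(map(sum, weights)) / (size * size)
    return [[w - mean for w in row] for row in weights]


def filter_at(image, x, y, kernel):
    """Correlate kernel with image centered at (x, y); out-of-bounds pixels count as 0."""
    half = len(kernel) // 2
    total = 0.0
    for ky, krow in enumerate(kernel):
        for kx, k in enumerate(krow):
            iy, ix = y + ky - half, x + kx - half
            if 0 <= iy < len(image) and 0 <= ix < len(image[0]):
                total += k * image[iy][ix]
    return total


def strand_orientation(image, x, y, n_orientations=8):
    """Return (best_angle_radians, best_response) over a bank of oriented filters."""
    best = None
    for i in range(n_orientations):
        theta = i * math.pi / n_orientations    # angles in [0, pi)
        response = filter_at(image, x, y, oriented_kernel(theta))
        if best is None or response > best[1]:
            best = (theta, response)
    return best


if __name__ == "__main__":
    img = [[0.0] * 9 for _ in range(9)]
    for col in range(9):
        img[4][col] = 1.0                       # a horizontal one-pixel "strand"
    angle, strength = strand_orientation(img, 4, 4)
    print(math.degrees(angle), round(strength, 2))
```

Because each kernel sums to zero, uniform regions such as flat screen or skin produce almost no response, so only thin, line-like structures stand out. The sketch stops at detection. It does not show how a strand response would be merged back into the matte.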

SIGGRAPH: Share your favorite story from the research gathering process.

CL: This research project was initiated by an informal discussion at the USC Institute for Creative Technologies (ICT) Vision and Graphics Lab, where artists and researchers can work together on projects that fall somewhere in the middle along the spectrum of research to production.

My colleague David Krissman was a professional compositor working on a project for USC ICT and the USC Shoah Foundation called “New Dimensions in Testimony” (NDT). The goal of the project was to record, preserve, and display the testimony of Holocaust survivors in a way that continues the dialogue between them and learners far into the future. Part of David’s role was to chromakey several hundred hours of the survivors’ recorded testimony footage, since they had been filmed in front of green or blue screens. Many of the interviewees had white or gray, translucent hair, and David needed an automated way to ensure that the subjects didn’t get an accidental “algorithmic haircut” during chromakeying.

Separately, I had been working on detecting hair strands in images for another USC ICT research project (“Modeling Vellus Facial Hair from Asperity Scattering Silhouettes”), and David saw some of my preliminary results. Together, we had the idea to improve the compositing workflow for NDT using the image processing techniques that I had implemented for this other project. Essentially, the collaborative environment fostered by USC ICT enabled the birth of this project.

SIGGRAPH: Share what has happened with your research since it was presented at SIGGRAPH.

CL: David Krissman, Andrew Jones (former USC ICT senior researcher and technical director for NDT), and I worked to scale the method from this project so that it could be used in the NDT production workflow. It was applied to hundreds of hours of Holocaust survivor testimony footage, and the resulting interactive exhibit is on view at the Museum of Jewish Heritage in New York, among other locations.

SIGGRAPH: What is your best advice for someone pursuing a career in the science of computer graphics?

CL: Frequently, as a scientist, I feel like I ought to be spending time working in the lab in front of a computer, but sometimes taking in culture is what I really need for inspiration. I’ve found, in particular, that the online magazine fxguide, written and produced by Mike Seymour, is one of my favorite places for inspiration. It includes detailed technical breakdowns and interviews with some very inspirational folks behind the research and effects of some of my favorite scenes in films.

One of my early career mentors told me, when I quit my job to pursue a Ph.D., that moments of true scientific inspiration would come not only from time spent in the lab, but also from time spent elsewhere, out in the world. So, my best advice for those interested in the science of computer graphics is to always pay attention to the things in movies or games that excite you, and then later “deep dive” to understand how certain visual techniques were achieved and how those techniques might be improved.

SIGGRAPH: How did you first hear about SIGGRAPH, and what made you decide to submit?

CL: The ideas presented in a wide variety of technical papers drew me to SIGGRAPH. Before I started pursuing doctoral research at USC ICT, I was an imaging scientist at both Johnson & Johnson and L’Oreal USA, where I studied the interaction between light and materials, particularly between light and facial skin. This naturally led me to study the premier research on this topic presented at SIGGRAPH over the years, including “A Practical Model for Subsurface Light Transport” (Jensen et al., SIGGRAPH 2001) and many works by my current doctoral advisor, Professor Paul Debevec, such as “Acquiring the Reflectance Field of a Human Face” (Debevec et al., SIGGRAPH 2000) and “Multiview Face Capture using Polarized Spherical Gradient Illumination” (Ghosh et al., 2011).

Because the mission of this project fell somewhere between visual effects practice and applied research, SIGGRAPH seemed like the logical place to submit this work: both communities are widely represented at the conference.

SIGGRAPH: How do you think winning a SIGGRAPH award will affect your career post-graduation?

CL: The ACM Student Research Competition gave me a platform to show just the tip of the iceberg of what is possible when artists and visual effects practitioners work together with researchers. I aspire to work alongside artists like this in my future career, so I can point to the success of this project when applying for grants or exploring other future opportunities.

SIGGRAPH: Where do you currently work and what do you do in your position?

CL: I’m a graduate research assistant in the Vision and Graphics Lab at USC ICT in Playa Vista, California, where I’m pursuing a Ph.D. in computer science advised by Professor Paul Debevec. At USC ICT, I have the opportunity and privilege to develop new ideas (and, of course, I aspire to present them at various conferences, including SIGGRAPH). I also assist with high-resolution facial scanning for production; last year, that work included the films “Logan,” “Valerian and the City of a Thousand Planets,” and “Blade Runner 2049.” I also work as an educator, serving as a teaching assistant for undergraduate and graduate-level courses in computer graphics and multimedia at USC. Finally, I’m currently a part-time student researcher at Google Daydream, where I work on core technologies for mobile augmented reality.

SIGGRAPH: Since SIGGRAPH 2017, what has been your most exciting career accomplishment and why?

CL: Since SIGGRAPH 2017, I have been working as a teaching assistant in USC’s computer science department. In particular, two professors I worked with (Professor Hao Li and Professor Parag Havaldar) each trusted me to deliver a lecture in their courses, and both were very rewarding experiences. As a woman studying computer science, I have never had a woman as a CS instructor or professor at any point in my education. These lectures were important and special to me on a personal level, because they let me help fill this gap for the next generation of CS students.

Chloe LeGendre earned a B.S. in engineering in 2009 from the University of Pennsylvania and an M.S. in computer science from the Stevens Institute of Technology in 2015. From 2011 to 2015, she was an applications scientist in imaging and augmented reality for L’Oreal USA Research and Innovation. She is currently pursuing a Ph.D. in computer science at the University of Southern California’s Institute for Creative Technologies, advised by Professor Paul Debevec.