Image provided by Tate Johnson

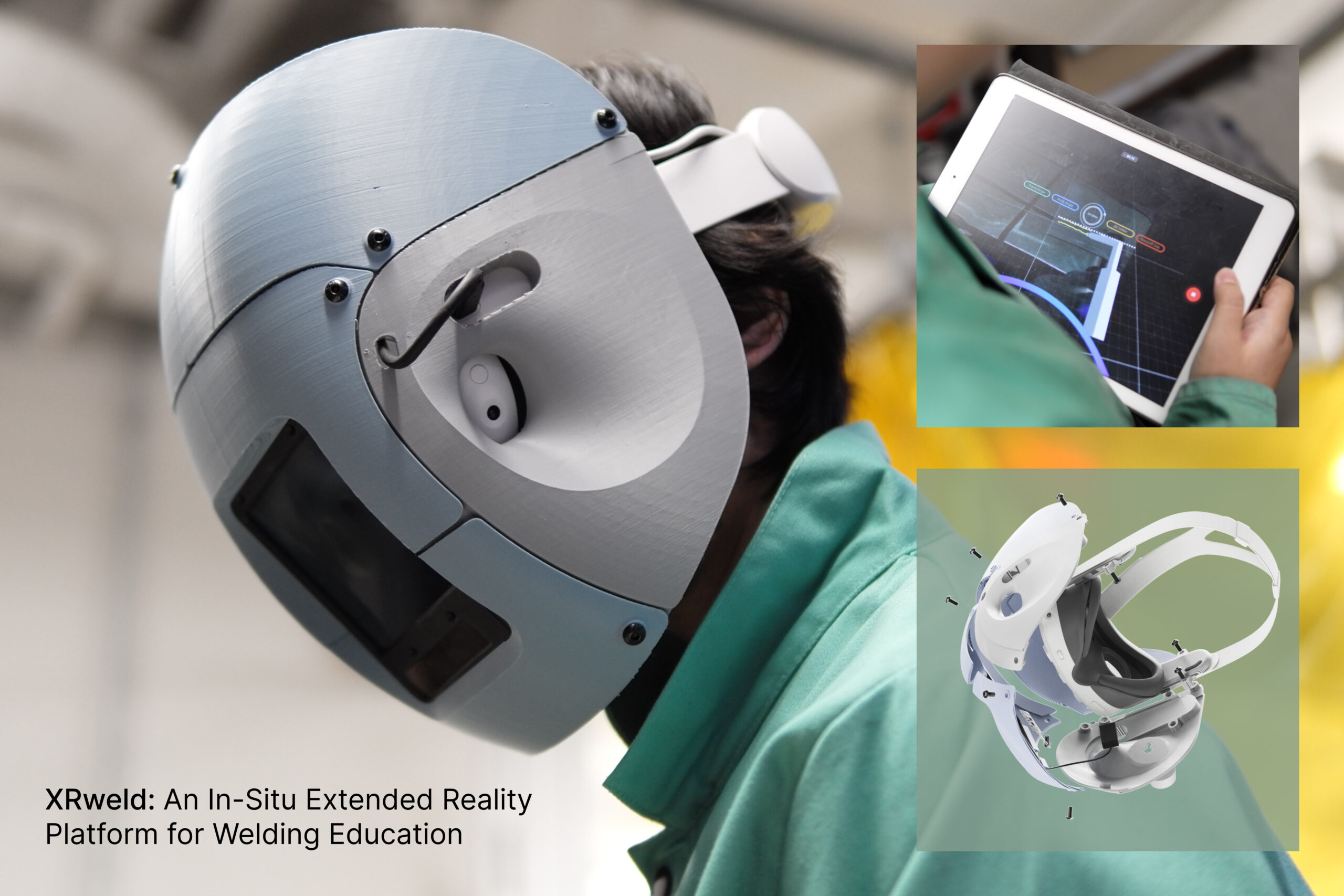

Explore the world of welding in extended reality with the SIGGRAPH 2024 Immersive Pavilion selection, “XRWeld: An In-situ Extended Reality Platform for Welding Education”. This innovative POV technology, inspired by predicted shortages of welders in the coming years, improves the efficiency, experience, and quality of welding education. Take a look behind the welding helmet in this interview with Tate Johnson and Dina El-Zanfaly.

SIGGRAPH: Tell us about your inspiration for XRWeld. Was this a niche that you found in the welding industry?

Tate Johnson (TJ) & Dina El-Zanfaly (DE): We looked into craft and manufacturing fields that could benefit from augmentative technology, and welding became a natural fit.

After seeing statistics predicting shortages of trained welders in the coming years, we saw a social benefit in improving the efficiency, experience, and quality of welding education. We conducted co-design sessions with trained welders at a local workshop, which helped us understand the current educational process and identify specific points where tracking and feedback could be valuable.

One key aspect was that welding already relies on a freeform, handheld tool (the welding torch) and a head-mounted optical device (the welding helmet). These two components map naturally onto a handheld tracker and a head-mounted display, so integrating an extended reality system into this workflow was very intuitive.

SIGGRAPH: Welding requires strong hand-eye coordination and precision. How does XRWeld replicate the real feel of welding?

TJ & DE: A key element of our project is that we don’t need to replicate anything. Because our system is designed for use with a live spark, the haptics, sounds, temperatures, friction, and sensations are all authentic. Even the auto-darkening display in front of the passthrough cameras dims correctly, just as it would on a non-XR helmet.

Our system provides real-time performance analytics by tracking the torch in space and overlaying augmentative UI onto the user’s POV during real welding. There are many situations unique to welding that beginners need to learn about, such as wire getting stuck, burning through material, positioning limitations, and configuring machine settings. None of these can be taught effectively by effortlessly waving VR controllers in the air, as previous simulators attempt to do.

SIGGRAPH: Tell us about the technology incorporated into the helmet and torch.

TJ & DE: To make our system accessible to smaller, community-based workshops and organizations, we’ve limited our technologies to the Quest 3 headset and Quest Touch Pro controllers. From our testing, we found that this system has the right balance of accessibility, development availability, and tracking performance.

An auto-darkening glass panel sits in front of the Quest 3’s front sensor array, protecting the cameras and allowing AR passthrough to continue during a live spark. While developing the project, we also explored auxiliary sensors and other technologies for monitoring user performance.

Still, our core system uses only the Quest hardware and our custom hardware that interfaces it with a functional MIG welding setup. We leverage the inside-out tracking of the headset and controllers so that users can enter any welding environment and have our system function fully, without setting up additional equipment they wouldn’t otherwise need for welding.

SIGGRAPH: Is the helmet used in XRWeld a modified welding helmet or a completely new design? What was the process for creating the look of this helmet?

TJ & DE: The helmet is an entirely bespoke, 3D-printed design that protects both the Quest and the user from the harsh, dangerous light and heat of welding. The design evolved into this 3D-printed version because we had to position the Quest 3, face shielding, and auto-darkening screen within specific tolerances of each other, which required a fully custom design.

The helmet’s look evolved through our testing and development process: the modular, panel-based sections allow the helmet to be prototyped quickly across multiple 3D printers, making it easy and fast to iterate on designs. The contoured cutouts on the sides allow the Quest 3’s auxiliary cameras to maintain tracking during active welding. The overall surfaces and sizing were based on the Quest 3’s dimensions and on reference welding helmets.

SIGGRAPH: What advice do you have for those submitting to the SIGGRAPH 2025 Immersive Pavilion?

TJ & DE: We benefited from broad, valuable feedback from SIGGRAPH attendees and have made great connections related to our project since exhibiting at the Immersive Pavilion. I think designing the experience to allow for dialogue and discussion is very important. We saw our exhibition as a proposal, or a work-in-progress snapshot of where our system was, and I think this contributed to many valuable discussions and much engagement with attendees.

With so many attendees, some of whom have limited time to stop at each project, I would also recommend considering how glanceable your submission is at different levels. We used printed posters and TV monitors to describe aspects of the project at a high level for those who might only have time to walk by and take a picture. We also streamed one of our Quest headsets to a TV monitor, which was very important. Seeing someone else’s perspective makes for a much more engaging viewer experience. Even if only one person can experience it firsthand, many others can understand the experience by watching the monitor.

Submissions for the SIGGRAPH 2025 Immersive Pavilion open later this year. Visit our website to learn more.

Tate Johnson is a multidisciplinary designer whose work spans industrial design, engineering, materials, and interaction design. His human-computer interaction research publications and demos have received awards at multiple ACM venues in the areas of fabrication, tangible interface design, and extended reality. His current work focuses on the use of composite materials in motorsports. He earned his bachelor’s degree in Industrial Design from Carnegie Mellon, with a minor in Physical Computing.

Dina El-Zanfaly is a computational design and interaction researcher. She was recently named the Nierenberg Assistant Professor of Design in the School of Design at Carnegie Mellon University (CMU), where she directs hyperSENSE: Embodied Computations Lab, a research lab she founded. At its core, her research asks how designed interactions with computational technologies shape us, and how we shape them. She explores how these interactions enable new forms of creativity, communities of design practice, and cultures of collaboration, while seeking new ways to accommodate their growth and variation within larger systems and contexts. She earned her Ph.D. from the Design and Computation group at MIT, where she also earned her Master of Science as a Fulbright scholar.