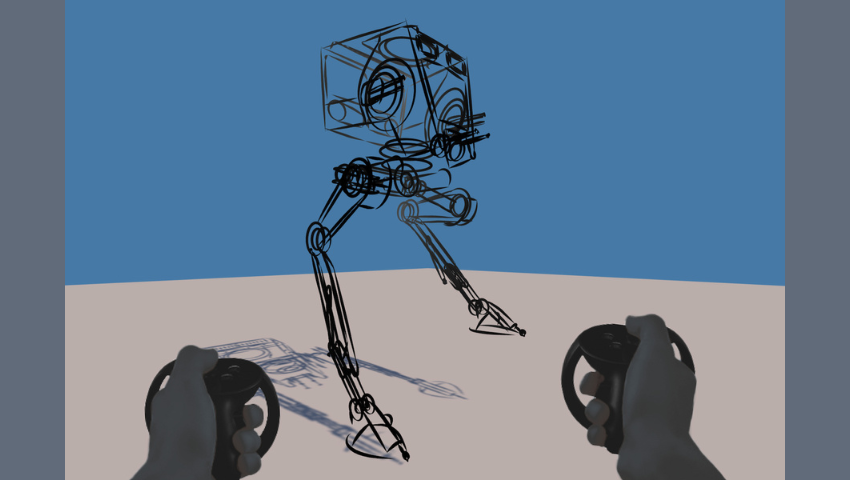

Image Credit: SketchLab, KAIST

Dive into how Joon Hyub Lee and his team developed “RobotSketch,” a groundbreaking system where robot designers can sketch robots in 3D through a transparent panel synchronized with a physical tablet in an immersive VR workspace.

SIGGRAPH: Tell us about the process of developing “RobotSketch: An Interactive Showcase of Superfast Design of Legged Robots.” What was your inspiration for this project?

Joon Hyub Lee (JHL): I am a postdoctoral researcher in SketchLab at the Department of Industrial Design, KAIST. Our team has been developing “3D sketching” tools that enable designers to quickly and effectively express and communicate 3D ideas through sketch-based interactions. The team has also been designing actual products with these tools and improving the 3D sketching technology through this process. A few years ago, a robot manufacturing company asked us to design the exterior of a new collaborative robot. At that time, our 3D sketching technology was not able to represent a moving robot, which led us to contemplate a 3D sketching tool specifically for robot design.

SIGGRAPH: Let’s get technical. How did you develop this project’s interactive system?

JHL: In our system, robot designers can directly sketch robots in 3D through a transparent panel synchronized with a physical tablet in an immersive VR workspace, allowing them to review the robot’s shape and structure at real scale. The sketched robots then learn to walk using reinforcement learning, and the robot designers can control them in real time with VR controllers. This system has been made possible by the recent development of three key component technologies. The first is a 3D sketching technology that allows for the quick and effective creation of the shape and structure of articulated objects like robots using intuitive pen and multi-touch gestures, which was presented at SIGGRAPH 2022. The second is a technique that uses a tablet device as a transparent window in VR to interact with distant objects using touch and pen, which was presented at UIST 2023. The third is a technology that enables robots to learn fluent locomotion skills through reinforcement learning within a sophisticated physical simulation. It is based on a core technology from Professor Jemin Hwangbo’s research team in the Department of Mechanical Engineering, KAIST, which was presented in Science Robotics in 2019.

SIGGRAPH: What was the biggest challenge you faced when developing this project? And how did you overcome it?

JHL: Integrating all the aforementioned component technologies into a smoothly functioning, organic system and workflow was the most challenging part, requiring a significant amount of time and effort. In particular, we went through a great deal of trial and error in aligning basic concepts, terminology, data formats, and communication protocols so that the latest technologies in robotics could be combined with 3D sketching and VR systems. The key to our success was close teamwork: we met frequently and approached one another’s fields with a willingness to learn, which allowed us to leverage the expertise of team members specialized in these fields.

SIGGRAPH: How does “RobotSketch: An Interactive Showcase of Superfast Design of Legged Robots” contribute to advancements in the robotics industry?

JHL: We believe that, soon, many AI-equipped robots that can provide valuable services will appear in people’s lives, much like the “Cambrian explosion.” Until now, robots have been developed primarily from a technical perspective, with a focus on sensing, locomotion, manipulation, and so on. However, to become great products, they will soon require a proper product design process similar to that of the automotive industry. “RobotSketch” has shown that such a process is possible in the robotics industry. It may also trigger the development of futuristic robot design tools that help explore and develop a wide range of alternative shapes, structures, movements, and services of robots in a short period, helping to enhance the experiences robots can offer.

SIGGRAPH: What does the future hold for RobotSketch?

JHL: Currently, it is possible to sketch a robot in an empty space and make it move in the desired direction. In the future, however, we will utilize 3D scanning and reconstruction to help design more realistic robots within VR environments that closely mimic real-life settings. In these VR environments, we will teach robots how to grasp and manipulate objects through demonstrations and natural language instructions. Finally, we will rapidly prototype the completed designs into physical models using modular structures and actuators. Recently, experts in robotics, computer graphics, and human-computer interaction have joined forces and established the “SketchTheFuture” Research Center at KAIST, led by Professor Seok-Hyung Bae, to realize this superfast robot design process that combines 3D sketching with AI and VR. That is very exciting for me.

SIGGRAPH: What do you hope SIGGRAPH 2024 participants take away from interacting with “RobotSketch” during Emerging Technologies?

JHL: How humans can effectively collaborate with generative AI is a major topic of interest. We believe that through sketching, the high creativity of humans can be married with the high productivity of AI. As demonstrated in our exhibition, if humans can effectively communicate key ideas and intentions to AI through sketching, AI can fill in the gaps and assist in pushing the design process forward. We hope that SIGGRAPH 2024 participants can witness the future of humans and AI through the harmonious collaboration that sketching facilitates in the field of advanced robot design.

There’s more where that came from. Explore all of the Emerging Technologies you can interact with at SIGGRAPH 2024.

Joon Hyub Lee is a postdoctoral researcher affiliated with SketchLab in the Department of Industrial Design, KAIST, and the DRB-KAIST SketchTheFuture Research Center. He earned his B.S. in mechanical engineering (’12), M.S. in industrial design (’18), and Ph.D. in industrial design (’23) from KAIST. He has developed intuitive spatial interactions that integrate advanced technologies in computer graphics, human-computer interaction, and robotics, and applied them to various design fields. He has 29 publications in international conferences and journals including ACM TOG, ACM SIGGRAPH, ACM CHI, ACM UIST, and IEEE ICRA. His paper “Rapid Design of Articulated Objects,” published in ACM TOG in 2022, received an Honorable Mention award at SIGGRAPH 2022 Emerging Technologies.