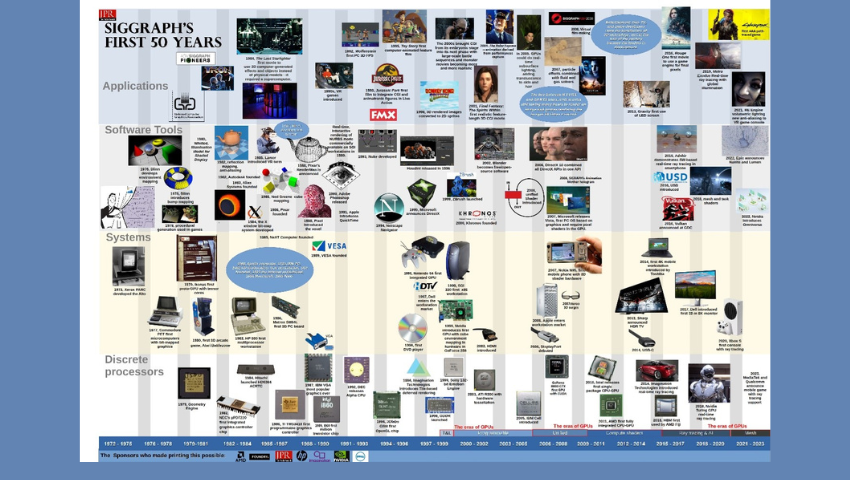

Image Credit: Jon Peddie

The changes astounding — the goals unchanged.

In 1973, a 24-bit frame buffer was considered big, maybe huge, at 1024 kB. GPUs hadn’t been invented yet, and VLSI was just getting mentioned. Displays for CAD were calligraphic stroke writers, also known as vector displays, and about eight companies were making them. The Tektronix 4014 19-inch graphics storage tube display had just been introduced and was making headway. Raster displays were still in the lab. Graphics controllers were large boards (18 × 18 inches) with a sea of discrete logic circuits and very little RAM. Moore’s law had been introduced, but most people thought of it only as an economic model for predicting memory. VGA hadn’t been introduced yet, and most graphics terminals were hung off a mainframe computer or a PDP-8 or PDP-11. APIs were not a thing yet, though some standards were emerging: Tektronix’s Plot 10 library and ACM’s CORE were the first industry and quasi-industry standard APIs.

By the mid-1980s, VLSI was taking off, and two integrated graphics controllers had emerged and become industry standards: the NEC μPD7220 and Hitachi’s HD63484. AMD had introduced the 2901 4-bit bit-slice processor, which Ikonas had successfully used in the late 1970s. Memory was getting larger (16 kb in one chip), and, adhering to Moore’s prediction, more meant less: memory prices started falling. Graphics lives on memory, and as memory prices dropped and capacity increased, so did frame buffers, performance, and overall capabilities.

The Catmull–Clark algorithm arrived in the late 1970s, followed by the introduction of accelerated ray tracing and a string of graphics procedures from Jim Blinn. The early ’80s saw the introduction of the Geometry Engine, leading to the founding of Silicon Graphics, one of the most important companies in the computer graphics industry.

In the late 1980s and early 1990s, RenderMan was announced, pixels got depth with the introduction of voxels, and NURBS made curved surfaces more manageable.

The first integrated OpenGL processor was introduced by 3Dlabs, and id Software released the first 3D game, “Wolfenstein 3D,” for the PC. “Toy Story” came out in 1995 as the first full-length computer-animated feature film, the goal and dream of so many early participants in the CG field.

Moore’s law was now recognized as a factor in all semiconductors, from memory to processors, communications, and even LCD panels. Things started getting smaller, less expensive, and faster, and as soon as a new processor was introduced, clever programmers found ways to exploit it.

The mid to late 1990s saw new CG programs like Houdini and ZBrush. The first fully integrated GPU showed up in the Nintendo 64 in 1996, and it was quickly followed by the first commercially available GPU, the Nvidia GeForce 256, in late 1999. This firmly entrenched the term GPU in our vocabulary.

In 2001, one of the pioneering and most ambitious virtual humans was created for “Final Fantasy: The Spirits Within,” an all-CG movie.

The processors in GPUs, originally fewer than five and known as shaders, now numbered in the hundreds and had evolved from fixed-function units into general-purpose compute engines known as unified shaders, first proposed by AMD. Subsurface lighting improved skin tones, and particle physics created smoke, flames, and waves so realistic they revolutionized special effects in movies and games. By the end of the first decade of the new century, virtual filmmaking wasn’t a discussion; it was big business.

3D graphics, aided by a universal API from Khronos, was now being run on first-gen smartphones and game consoles. In 2010, Intel and AMD successfully integrated a GPU with an x86 processor.

Virtual humans progressed to “Gravity,” an epic film in space, and “Rogue One” became the first film to use a game engine. Then ray tracing became real time, and one of the holy grails had been found. “Metro Exodus” was one of the first games to effectively use the new processing power of Nvidia’s breakout GPU.

Real-time ray tracing was introduced on a mobile device by Adshir and Imagination Technologies, and mesh shaders were introduced on GPUs. Epic demonstrated “Nanite” and “Lumen,” and volumetric lighting was shown in a VR game on a console.

Pixar gave its Universal Scene Description API to the world, and Nvidia embraced it and offered Omniverse. MediaTek and Qualcomm announced real-time ray tracing processors for smartphones, and Nvidia introduced real-time path tracing for games, which was used in “Cyberpunk 2077.”

But it’s not over. Moore’s law, often described as moribund, is far from it. Although the rate of transistor miniaturization has slowed, compute density has not, as semiconductors continue to scale using 3D stacking techniques. Experimental optical processors promise orders-of-magnitude improvements in performance, and communications and cloud computing are creating a vast network of local and remote computing resources for everyone. The science fiction scenario of instant data access anywhere, anytime is now realizable, albeit slightly in the future. But the future is racing toward us faster than ever, and CG will let us see it coming and allow us to use it successfully.

May the pixels be with you — forever.

This article was authored by Jon Peddie of Jon Peddie Research.