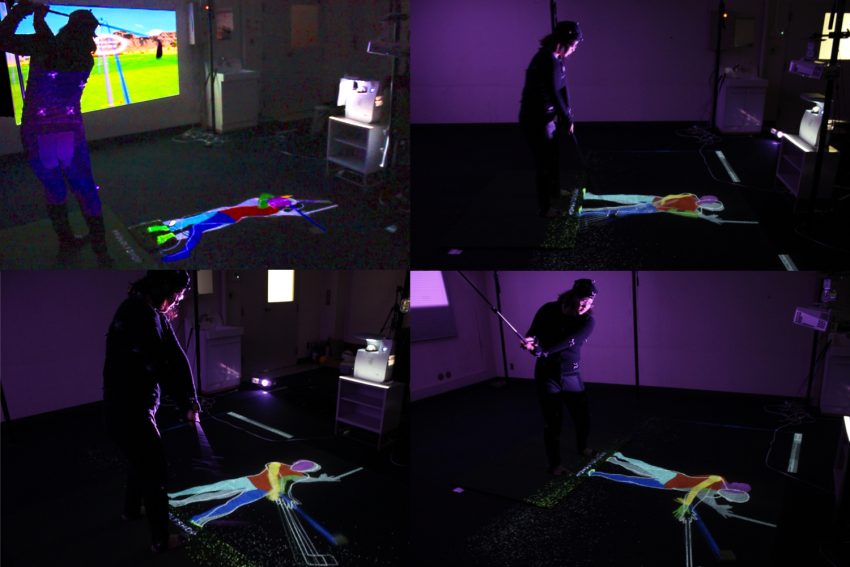

Golf Training System Using Sonification and Virtual Shadow © 2019 Tokyo Institute of Technology

Are you a golf novice? Expert? Spectator? No matter your level of interest, expertise, or knowledge, SIGGRAPH 2019 Emerging Technologies is bringing Tokyo Institute of Technology’s “Golf Training System Using Sonification and Virtual Shadow” to a convention center near you! This innovative approach to athletic training offers a glimpse of the future of almost any kind of physical training that could help the humans of tomorrow thrive. As a highlight of what’s next in Adaptive Technology, it shows that the possibilities are endless…

We sat down with contributor Atsuki Ikeda (M.S. student, Tokyo Institute of Technology School of Computing) to learn how the team brought this groundbreaking technology to life.

SIGGRAPH: Talk a bit about the process for creating “Golf Training System Using Sonification and Virtual Shadow.” Are you or any of your team members golfers yourselves? What inspired the idea?

Atsuki Ikeda (AI): Yes, a couple of our team members play golf. Two things inspired the idea: the desire to practice golf without changing where you look, and the fact that golfers often recognize their form from their shadows on the ground. Many golfers check and revise their swing using photos, mirrors, or videos. During an actual swing, however, a golfer must keep their eyes on the ball on the ground, so these traditional methods cannot be used mid-swing. To solve this problem, we designed our system around a “virtual shadow” that is displayed at the user’s feet.

Golfers also face another issue: they cannot tell whether the club head is in a good position when it is behind their back. Therefore, we added sonification, which lets the user hear the 3D position and posture of the club head.

SIGGRAPH: Let’s get technical. What was it like to develop the technology in terms of research and execution?

AI: Four researchers gathered to create this system. Two were in charge of visual feedback, and the other two were in charge of audio feedback.

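Implementation took about two months for each type of feedback and about two more months to integrate them. Performing motion matching in real time was a major barrier: in a simple form comparison, a golf swing looks much like swinging up and then back down through similar postures, so it is hard to tell which part of the swing the user is in.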

SIGGRAPH: How does the system determine and teach the perfect swing?

AI: The system uses pre-recorded swing motion data obtained from an expert golfer. The user’s motion is captured by an optical motion-capture system and sent to a PC, which uses the dynamic time warping algorithm to find, in real time, the expert posture closest to the user’s current posture. Both postures are projected on the floor as “virtual shadows.”
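The interview does not go into the matching details, so the following is only a minimal sketch of how an open-end dynamic time warping (DTW) matcher could track the expert frame one captured user frame at a time. It assumes each posture is a flat vector of joint coordinates; the `Pose` type, `OnlineDTWMatcher`, and `nearest_frame` are hypothetical names, not the team’s code. The sketch also illustrates the up-and-down problem mentioned earlier: a naive nearest-frame search can jump to a downswing frame when the user is still on the backswing, whereas DTW’s monotonic alignment keeps progress through the swing in order and tolerates a user who swings slower than the expert.

```python
import math
from typing import List, Sequence

# Hypothetical representation: one posture = a flat vector of joint coordinates.
Pose = Sequence[float]


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two postures of equal length."""
    return math.dist(a, b)


def nearest_frame(expert: List[Pose], pose: Pose) -> int:
    """Naive matcher: the closest expert frame, ignoring where the user is in the swing."""
    return min(range(len(expert)), key=lambda j: pose_distance(pose, expert[j]))


class OnlineDTWMatcher:
    """Open-end DTW that consumes one user frame at a time.

    Only the previous row of the accumulated-cost matrix is kept, so each
    update takes O(len(expert)) time and memory.
    """

    def __init__(self, expert: List[Pose]) -> None:
        self.expert = expert
        self.prev_row: List[float] = []  # accumulated costs after the previous user frame

    def update(self, user_pose: Pose) -> int:
        """Add one captured user frame and return the index of the matched expert frame."""
        local = [pose_distance(user_pose, e) for e in self.expert]
        row: List[float] = []
        for j, cost in enumerate(local):
            if not self.prev_row:
                # First user frame: the alignment starts at expert frame 0
                # and can only move forward through the expert recording.
                best = row[j - 1] if j > 0 else 0.0
            else:
                best = self.prev_row[j]  # user advanced, expert frame held
                if j > 0:
                    best = min(best,
                               row[j - 1],            # expert advanced, user frame held
                               self.prev_row[j - 1])  # both advanced
            row.append(cost + best)
        self.prev_row = row
        # Open end: the cheapest alignment of everything seen so far decides
        # which expert frame corresponds to the user's current posture.
        return min(range(len(row)), key=row.__getitem__)


if __name__ == "__main__":
    # Toy 1-D "posture": club height rising in the backswing, then falling.
    expert = [(float(v),) for v in [0, 1, 2, 3, 4, 3, 2, 1, 0]]
    slow_user = [p for p in expert for _ in range(2)]  # same swing at half tempo
    matcher = OnlineDTWMatcher(expert)
    print([matcher.update(p) for p in slow_user])
    # → [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5, 6, 6, 7, 7, 8, 8]
    print(nearest_frame(expert, expert[5]))  # → 3 (a backswing frame, not 5)
```

Because DTW allows an expert frame to be held while the user advances, the half-tempo swing still maps onto the whole expert recording in order, which is one plausible way to let users practice at their own tempo.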

The position and posture of the club head are also tracked. If the club head moves along the target trajectory, the system plays a sound that seems to come from the club head’s 3D position, and if the club head’s posture is wrong, the system adds noise to the sound.
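As a rough illustration of the two audio mappings described above (where the sound seems to come from, and how much noise is mixed in), here is a minimal sketch. It is not the team’s implementation: it substitutes simple constant-power stereo panning with distance attenuation for full 3D spatial audio, so it cannot convey front/back cues the way a head-related transfer function would. The tolerance angles, the axis convention, and all function names are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SonificationParams:
    # All values are hypothetical placeholders, not the project's settings.
    tolerance_deg: float = 10.0  # orientation error still treated as correct
    max_error_deg: float = 45.0  # orientation error at which output is pure noise
    ref_distance: float = 1.0    # metres; closer sources are not amplified further


def angle_between(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    cos_angle = dot / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def noise_mix(error_deg: float, p: SonificationParams) -> float:
    """0.0 = clean tone, 1.0 = pure noise, linear in between."""
    if error_deg <= p.tolerance_deg:
        return 0.0
    return min(1.0, (error_deg - p.tolerance_deg) / (p.max_error_deg - p.tolerance_deg))


def stereo_gains(club: Vec3, listener: Vec3, p: SonificationParams) -> Tuple[float, float]:
    """Constant-power left/right gains with 1/distance attenuation.

    Assumed axes: x points to the listener's right, z straight ahead.
    """
    dx, dy, dz = (c - l for c, l in zip(club, listener))
    azimuth = math.atan2(dx, dz)  # 0 = ahead, +pi/2 = right, -pi/2 = left
    # Plain stereo cannot tell front from back, so rear sources are mirrored to the front.
    if azimuth > math.pi / 2:
        azimuth = math.pi - azimuth
    elif azimuth < -math.pi / 2:
        azimuth = -math.pi - azimuth
    pan = azimuth / (math.pi / 2)      # -1 (left) .. +1 (right)
    theta = (pan + 1.0) * math.pi / 4  # 0 .. pi/2
    gain = p.ref_distance / max(math.hypot(dx, dy, dz), p.ref_distance)
    return gain * math.cos(theta), gain * math.sin(theta)


def render_block(club: Vec3, listener: Vec3, face_normal: Vec3, target_normal: Vec3,
                 p: SonificationParams, rng: random.Random, n_samples: int = 256,
                 freq_hz: float = 440.0, sample_rate: int = 48000,
                 start_sample: int = 0) -> List[Tuple[float, float]]:
    """One block of stereo samples: a tone placed at the club head, roughened by noise."""
    mix = noise_mix(angle_between(face_normal, target_normal), p)
    left, right = stereo_gains(club, listener, p)
    block = []
    for k in range(n_samples):
        t = (start_sample + k) / sample_rate
        tone = math.sin(2 * math.pi * freq_hz * t)
        s = (1.0 - mix) * tone + mix * rng.uniform(-1.0, 1.0)
        block.append((left * s, right * s))
    return block
```

The linear noise ramp is one simple choice: small orientation errors inside the tolerance leave the tone clean, and the sound grows steadily rougher as the club face turns further from the target orientation.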

From the shadow, the user understands their form. From the sound, the user understands the position and posture of the club head.

SIGGRAPH: What kind of cameras and microphones did you use for the video and audio?

AI: We used OptiTrack for motion capture. The audio is made by editing electronic sounds and is delivered to the user through earphones or headphones.

SIGGRAPH: Does the project need to be adapted based on which type of club the user swings with?

AI: This system uses a pre-recorded golf swing as model data; however, the ideal swing form differs depending on the user’s age, physique, the type of golf club, and so on. In this demonstration, we use a teacher’s swing, which assumes a full swing with an iron. We do, however, want to collect more golf-swing motion data and change our system so that users can select their own ideal form as teacher data.

SIGGRAPH: This seems like a project attendees will have a lot of fun with at the conference. Technologically, what do you find exciting about the final experience you will present to SIGGRAPH 2019 participants?

AI: We aim to let users intuitively understand their form and the club’s movement using real-time virtual shadows and stereophonic sound, and we devised an algorithm so that users can practice at their preferred tempo. We want them to experience golf as they know it.

SIGGRAPH: What’s next for “Golf Training System Using Sonification and Virtual Shadow”? What kind of application do you hope to see long term? Is this something for professional golfers to adopt, for training young children, etc.?

AI: Although this system currently teaches a single ideal motion, I think the optimal form differs depending on physique and other factors. Our aim is to build a motion database and teach each user a form suited to them, or at least the key elements that matter regardless of physique. Even so, I think the current system makes a lot of sense for professional golfers who want to compare their current and past swings.

SIGGRAPH: Tell us what you are most looking forward to at SIGGRAPH 2019.

AI: This is my first time attending SIGGRAPH. I am looking forward to seeing and experiencing many demos in the Emerging Technologies venue.

SIGGRAPH: What advice do you have for someone looking to present their work at SIGGRAPH?

AI: Training for a sport is not always pleasant. Hence, when we developed our system, we thought about how users could enjoy using it. I believe many systems presented at SIGGRAPH need to be designed for an enjoyable user experience.

The SIGGRAPH 2019 Emerging Technologies program is open to registrants with an Experiences pass and above. The conference runs 28 July–1 August.