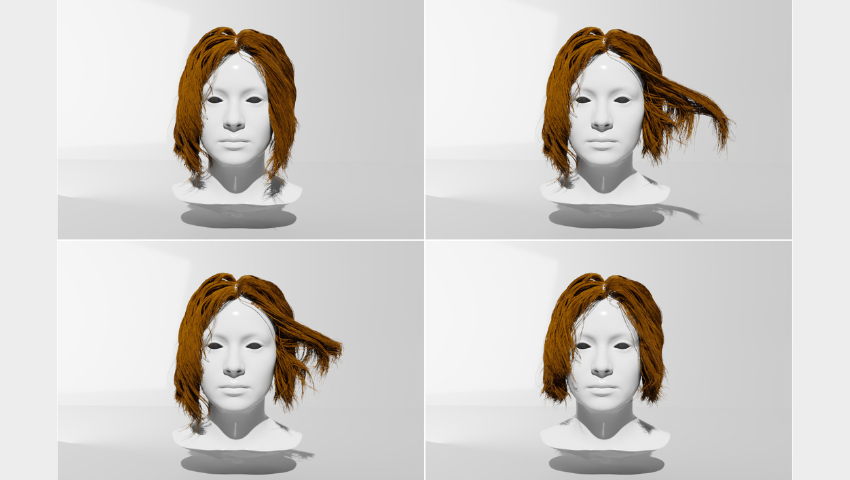

Image Credit © NVIDIA

All you need is a computer, modern GPUs, and great hair. The SIGGRAPH 2023 Technical Paper “Interactive Hair Simulation on the GPU Using ADMM” has been on everyone’s mind lately. To learn what all of the buzz is about, SIGGRAPH hopped in the barber’s chair and caught up with Gilles Daviet (research scientist, NVIDIA) to take a closer look at his research, why he chose to use ADMM for this project, and how this technology could transform the future.

SIGGRAPH: Share some background about “Interactive Hair Simulation on the GPU Using ADMM.” What inspired this research?

Gilles Daviet (GD): The inspiration came from a longstanding problem in hair animation: how to ensure that a digital groom — modeled to precisely match a reference model or photograph — behaves as expected when simulated. Traditional tools use a purely geometric modeling approach, meaning that the resulting groom doesn’t react to physical constraints and forces. Once simulated, it tends to sag under gravity, losing its artistry — and sometimes contains layering issues that prevent the hair from behaving naturally.

Our approach’s significant breakthrough is modeling hair in an always-on physics environment. As long as the grooming simulator and animation simulator are consistent (which we can assess by comparing their behavior in a set of controlled experiments), we know the overall look will not be degraded when simulated in real shots.

SIGGRAPH: This research is the first of its kind. What challenges did you face while pioneering this research?

GD: Hair simulation has been the subject of active research for many years, but extremely long computation times limited the usability of past attempts. The major breakthrough in this paper is an algorithm that leverages the potential of modern GPUs to provide faster feedback than was previously possible.

SIGGRAPH: Why did you choose ADMM to create this project?

GD: Hair simulation is challenging for two main reasons. First, hair strands are stiff and slender objects, exhibiting many nonlinearities that create numerical difficulties. One example is the coupling between the bending and twisting modes, which results visually in the formation of plectonemes. Second, hair comes in quantities of thousands of strands, all interacting through dry, frictional collisions.

ADMM (the alternating direction method of multipliers) allows us to separate these two difficulties and tackle each one with a dedicated, GPU-friendly optimizer, which makes each iteration of the algorithm more affordable. While many iterations are required to achieve final convergence, in a quasi-static environment, such as those used for physics-based editing, we can quickly provide intermediate results to the user before continuing optimization at the next frame. This leads to a more interactive experience for the developer.
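To make the splitting idea concrete, here is a minimal Python sketch of ADMM on a toy problem. It is not the solver from the paper. The quadratic pull toward `target` merely stands in for the elastic strand energy, the `x >= 0` constraint stands in for contact, and the names `admm_split`, `target`, and `rho` are assumptions made for illustration.

```python
def admm_split(target, rho=1.0, iterations=50):
    """Toy ADMM: minimize 0.5 * ||x - target||^2 subject to x >= 0.

    The problem is split into two easy subproblems that only
    communicate through the copy z and the scaled dual variable u.
    """
    n = len(target)
    z = [0.0] * n  # constrained copy of x
    u = [0.0] * n  # scaled dual variable (accumulated disagreement)
    for _ in range(iterations):
        # Subproblem 1 (stand-in for the elastic solve):
        # closed-form minimizer of the quadratic plus the coupling penalty.
        x = [(t + rho * (zi - ui)) / (1.0 + rho)
             for t, zi, ui in zip(target, z, u)]
        # Subproblem 2 (stand-in for the contact solve):
        # projection onto the feasible set z >= 0.
        z = [max(xi + ui, 0.0) for xi, ui in zip(x, u)]
        # Dual update: accumulate the remaining mismatch between x and z.
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z)]
    return z


result = admm_split([1.5, -2.0, 0.25])
few_steps = admm_split([1.5, -2.0, 0.25], iterations=3)
```

Every pass through the loop produces an estimate `z` that already satisfies the constraint, so `few_steps` is a usable, if less accurate, answer. That loosely mirrors the idea of showing intermediate results before full convergence.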

One challenge of ADMM is its hyperparameters, or constraint weights, which can drastically slow convergence if set improperly. However, within our context of hair simulation, the range of physical parameters we encounter is fairly narrow, making it possible to devise heuristics for setting those hyperparameters in a way that will keep the solver running smoothly.
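The sensitivity to the constraint weight can be seen even on a tiny example. The sketch below is a toy under stated assumptions, not the heuristics from the paper: it counts ADMM iterations for a two-variable quadratic problem with a non-negativity constraint, and the names `iterations_to_converge`, `stiffness`, and `rho` are hypothetical.

```python
def iterations_to_converge(rho, stiffness=1.0, targets=(1.0, -1.0),
                           tol=1e-8, max_iter=100_000):
    """Count ADMM iterations for:
    minimize 0.5 * stiffness * ||x - targets||^2 subject to x >= 0.
    """
    n = len(targets)
    z = [0.0] * n  # constrained copy of x
    u = [0.0] * n  # scaled dual variable
    for iteration in range(1, max_iter + 1):
        # Quadratic subproblem, solved in closed form.
        x = [(stiffness * t + rho * (zi - ui)) / (stiffness + rho)
             for t, zi, ui in zip(targets, z, u)]
        # Constraint subproblem: projection onto z >= 0.
        z_new = [max(xi + ui, 0.0) for xi, ui in zip(x, u)]
        # Scaled dual update.
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z_new)]
        # Standard ADMM stopping test on primal and dual residuals.
        primal = max(abs(xi - zi) for xi, zi in zip(x, z_new))
        dual = rho * max(abs(zn - zo) for zn, zo in zip(z_new, z))
        z = z_new
        if primal < tol and dual < tol:
            return iteration
    return max_iter


counts = {rho: iterations_to_converge(rho) for rho in (0.01, 1.0, 100.0)}
print(counts)
```

In this toy, the weight that matches `stiffness` converges in far fewer iterations than weights that are 100 times too small or too large. If the plausible stiffness range is narrow, a single well-chosen weight can therefore serve the whole range, which is the intuition behind setting it heuristically.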

SIGGRAPH: How do you imagine this technology will transform in the future? What do you envision it being used for?

GD: The original goal of this research is to make the creation of physics-ready digital grooms more tractable — but it has taken on an exciting life of its own, with some readers imagining it powering a hair salon simulator game. To make such applications possible, we’ll need to imagine new ways of interacting with the digital hair on top of the raw simulation technology to make the experience more lifelike.

SIGGRAPH: What are you looking forward to most at SIGGRAPH 2023?

GD: SIGGRAPH brings together a very diverse audience showcasing unique, unexpected ideas. I look forward to seeing the surprises in store.

SIGGRAPH: What advice do you have for researchers who are considering submitting to SIGGRAPH Technical Papers in the future?

GD: Submit your work! Feedback from the SIGGRAPH reviewers and community is invaluable. If the validation and comparisons required for the Journal Track sound too intimidating, a Dual-Track submission is a more approachable way to get your paper published.

SIGGRAPH 2023 is just around the corner. Explore high-quality research papers, such as this, when you join us this August in Los Angeles.

Gilles Daviet is a research scientist at NVIDIA developing interactive, high-fidelity simulations for NVIDIA Omniverse. Prior to NVIDIA, Daviet spent several years in the VFX industry, working on physics-based animation of virtual characters and their environments. He was a co-recipient of the Technical Achievement Award from the Academy of Motion Picture Arts and Sciences and received his Ph.D. in mathematics and computer science from Grenoble Alpes University.