Image via Jon Peddie Research

What do developers think of the mods?

When Nvidia introduced deep learning super sampling (DLSS) as a key feature of the GeForce RTX 20 series add-in boards (AIBs) in September 2018, it seemed like a miracle. You could run compute-intensive ray tracing in real time at ultra-high 4K resolution, and at a frame rate that was not merely acceptable but high. How could that be possible? It seemed to defy the laws of physics. The feature was released in February 2019.

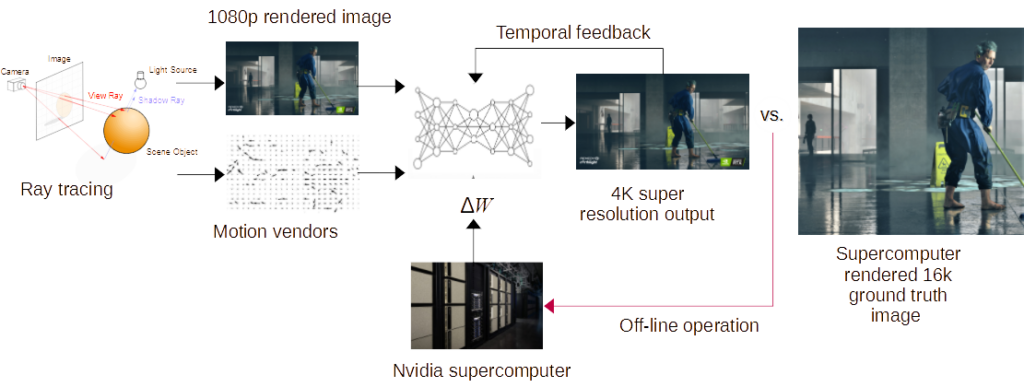

The trick was to render the frame at a reduced resolution, ray trace it at that lower resolution, and then scale the result up to the display resolution. It sounds simple once it’s all worked out, but it was not simple to do, and it took a team of people to make it work.
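A rough back-of-the-envelope sketch shows why rendering at a lower resolution helps so much. The 1080p internal resolution used here is an assumption chosen for illustration, not a figure from Nvidia, and the function name is hypothetical. It counts pixels only and says nothing about how the upscaling itself works.

```python
# Illustrative arithmetic only: pixel counts, not a model of DLSS.
def pixel_count(width, height):
    """Number of pixels that must be shaded and ray traced per frame."""
    return width * height

native_4k = pixel_count(3840, 2160)       # display resolution
internal_1080p = pixel_count(1920, 1080)  # assumed internal render resolution

ratio = native_4k / internal_1080p
print(native_4k, internal_1080p, ratio)   # 8294400 2073600 4.0
```

Under that assumption, the expensive ray tracing work touches one quarter of the pixels, and the upscaler has to fill in the rest.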

The first version did work, and the second version that added temporal feedback worked even better.

DLSS 2.0, released in April 2020, used the tensor cores in Nvidia’s RTX AIBs, but the AI no longer required specific training on each game. Although both versions carried the DLSS branding, they were significantly different: version 2.0 was not backward-compatible with version 1.0, but it was significantly faster.

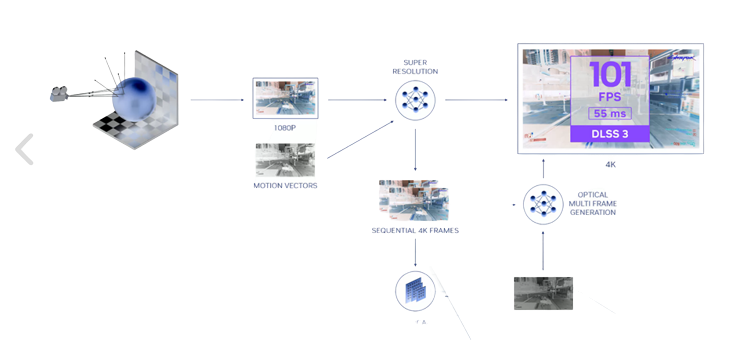

DLSS 3 moved a step beyond DLSS Super Resolution with a new DLSS Frame Generation convolutional autoencoder. The autoencoder generates an entire frame on its own, using optical flow fields calculated by an optical flow accelerator (OFA).
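The general idea of building an in-between frame from a flow field can be shown with a toy one-dimensional example. This is only a sketch of forward warping along a flow field, not Nvidia's autoencoder. The function name, the whole-pixel flow values, and the rule for filling holes are all assumptions made for illustration.

```python
def generate_midframe(prev_frame, next_frame, flow):
    """Forward-warp prev_frame halfway along a per-pixel flow field.

    flow[x] is how many whole pixels the content at x moves between
    prev_frame and next_frame (a simplification assumed for this sketch).
    """
    width = len(prev_frame)
    mid = [None] * width
    # Draw static pixels first and moving ones last, so moving content wins
    # when two pixels land on the same spot (a crude stand-in for occlusion).
    order = sorted(range(width), key=lambda x: abs(flow[x]))
    for x in order:
        target = x + flow[x] // 2  # halfway along the motion
        if 0 <= target < width:
            mid[target] = prev_frame[x]
    # Holes are positions nothing was warped into; borrow them from next_frame.
    return [next_frame[x] if value is None else value
            for x, value in enumerate(mid)]

prev_frame = [0, 0, 9, 0, 0, 0]  # bright object at index 2
next_frame = [0, 0, 0, 0, 9, 0]  # the object has moved to index 4
flow = [0, 0, 2, 0, 0, 0]        # only the object moves, by +2 pixels

print(generate_midframe(prev_frame, next_frame, flow))  # [0, 0, 0, 9, 0, 0]
```

The generated frame places the object at index 3, halfway between its two rendered positions, even though no renderer ever drew it there.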

Nvidia GPUs have had optical flow accelerators since the Turing architecture. However, the new AIBs have a more advanced and faster version of the OFA. DLSS 3, like the previous versions, is a feature of GeForce RTX AIBs, but it is available only on the GeForce RTX 40 series AIBs, so it’s not backward compatible, either.

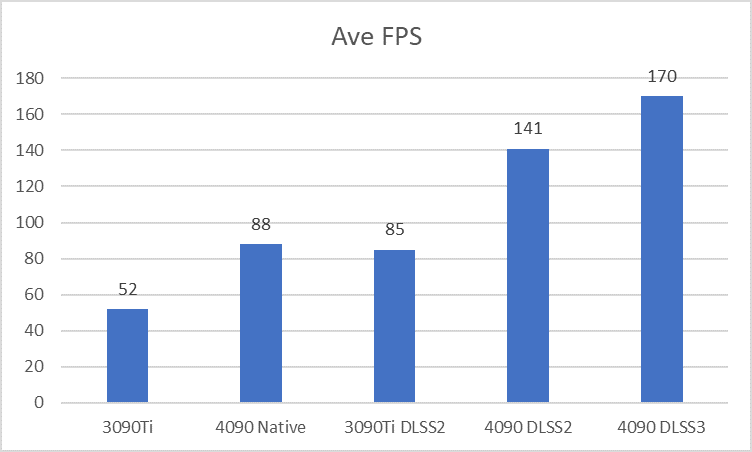

However, the results are truly amazing. Nvidia ran some tests using a popular and demanding game, “Cyberpunk 2077,” and got the following results.

Intel announced that it, too, has a DLSS-like capability in its Arc Xe GPUs, which it calls XeSS. Intel says machine learning is used to synthesize images that are remarkably close to the real image of a native ultra-high-res rendering. It does so by reconstructing subpixel details from neighboring pixels, as well as from motion-compensated vectors from previous frames. The reconstruction is completed by a neural network that has been trained on similar data.

However, unlike Nvidia’s proprietary solution, Intel says they have implemented XeSS using open standards to enable availability on many games and across a broad set of hardware from both Intel and other GPU vendors.

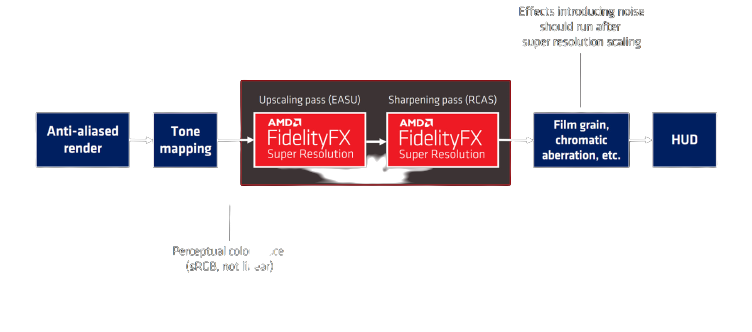

Currently, AMD is not offering a deep learning super sampling capability with its GPUs. Instead, the company has chosen to offer FidelityFX Super Resolution (FSR). AMD’s FSR requires less computational work than DLSS or XeSS. It is a post-processing step designed to upscale and enhance frames, and it doesn’t require tensor cores or extensive computation.

FSR upscales an image using a spatial algorithm (one that examines only the current frame): it applies Lanczos-based upscaling with edge detection, followed by sharpening filters, to improve the image. AMD’s solution is not processor specific and, if incorporated in a game, can be used on other companies’ AIBs or GPUs. AMD offers ray tracing, and presumably it could do the ray tracing at a lower resolution (ray tracing is very sensitive to resolution), then scale it up and offer frame rates comparable to the DLSS and XeSS results.
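To give a feel for what a purely spatial upscaler does, here is a minimal one-dimensional sketch of Lanczos resampling followed by a simple sharpening pass. It is not AMD's shader code: the function names, the kernel size `a=2`, and the unsharp-mask style sharpening are assumptions chosen to keep the example short, and the edge detection mentioned above is left out.

```python
import math

def lanczos_kernel(x, a=2):
    """Lanczos window: a sinc shaped by a wider sinc, zero outside |x| < a."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def lanczos_upscale_1d(samples, factor, a=2):
    """Upscale one row of pixel values by an integer factor."""
    n = len(samples)
    out = []
    for i in range(n * factor):
        # Map the output pixel centre back into source coordinates.
        src = (i + 0.5) / factor - 0.5
        base = math.floor(src)
        total = 0.0
        weight_sum = 0.0
        for k in range(base - a + 1, base + a + 1):
            w = lanczos_kernel(src - k, a)
            clamped = min(max(k, 0), n - 1)  # repeat edge pixels
            total += w * samples[clamped]
            weight_sum += w
        out.append(total / weight_sum)  # normalise so flat areas stay flat
    return out

def sharpen_1d(samples, amount=0.5):
    """Simple unsharp mask: push each pixel away from its local average."""
    n = len(samples)
    out = []
    for i, s in enumerate(samples):
        left = samples[max(i - 1, 0)]
        right = samples[min(i + 1, n - 1)]
        blurred = (left + s + right) / 3
        out.append(s + amount * (s - blurred))
    return out

row = [0, 0, 10, 10]  # a hard edge in a low-resolution row
upscaled = sharpen_1d(lanczos_upscale_1d(row, factor=2))
print([round(v, 2) for v in upscaled])
```

Everything here works from the current frame alone, which is why this class of upscaler needs no motion vectors, frame history, or neural network.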

Fast, but is it faithful?

Movie studios began experimenting with colorizing black-and-white films, such as “It’s a Wonderful Life” and Orson Welles’ “Citizen Kane,” in the 1970s. (It’s rumored that Welles had asked on his deathbed that “Kane” never be colorized. Ted Turner ignored that wish and ordered a colorized version of the film. The result was mediocre, and the project was scrapped.)

Colorization started as a hand-drawn process and developed into a digital technique. Today, photos and videos are colored by colorization artists and historians hoping to re-create classic moments. It has never been easier to colorize films: AI will colorize films in the public domain and release them to the internet, autonomously.

Artists, directors, actors, and photographers decry the colorization and say it violates their copyrights and trademarks and, more importantly, changes their intent and the meaning behind their work. If it had been meant to be in color, they would have made it in color.

Jordan Lloyd, a colorization artist, said, “These [colorization] things are not supposed to be substitutes for original documents. They sit alongside the original, but they’re not a substitute, they’re a supplement.”

The concept of using AI to imagine what the image might be is fascinating, to say the least. But is it faithful to the creator’s intention? Nvidia’s DLSS 3 can use just one pixel in eight to estimate the other seven. Those seven are not original pixels, which means about 88% of the image (seven-eighths) is fake, a forgery.
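The one-in-eight figure can be checked with a few lines of arithmetic. The split into two factors below is an assumption not stated in this article: Super Resolution rendering one quarter of each frame's pixels, and Frame Generation producing every other frame.

```python
from fractions import Fraction

# Assumed decomposition (not stated in the article): one quarter of the
# pixels are rendered per frame, and only every other frame is rendered.
rendered_per_frame = Fraction(1, 4)
rendered_frames = Fraction(1, 2)

rendered = rendered_per_frame * rendered_frames
generated = 1 - rendered

print(rendered)                   # 1/8
print(generated)                  # 7/8
print(f"{float(generated):.1%}")  # 87.5%
```

Seven-eighths is 87.5%, which rounds to the 88% quoted above.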

Will the game developers care? There are several points of view. The first is that scenes in a game change so quickly, especially in a first-person shooter, that no one has time to look at the scenery or admire the artwork, which is a shame because the artwork in games today is truly amazing. The second is that, since many games support modding, the game developers and publishers have already agreed to having their work altered. The third is that games are ephemeral, not eternal like a painting or film. A scene is generated and almost immediately abandoned for the next one; it has no life unless a screen capture is made. Finally, DLSS techniques almost never produce the same results twice, so frame 125 in “Cyberpunk 2077” rendered now will not look the same 10 minutes from now, and it will not look the same on anyone else’s machine. So, how is a lawyer going to prove the process actually tampered with the original copyrighted and trademarked work?

It’s probably a tempest in a teapot, but it certainly is going to be discussed on the internet.

This article was authored by Jon Peddie of Jon Peddie Research.