“The Human Touch: Measuring Contact with Real Human Soft Tissues” © 2018 The University of British Columbia, Vital Mechanics Research

What if there were a way to measure how objects, like clothing, wearables, or furniture, touch and interact with different parts of the human body?

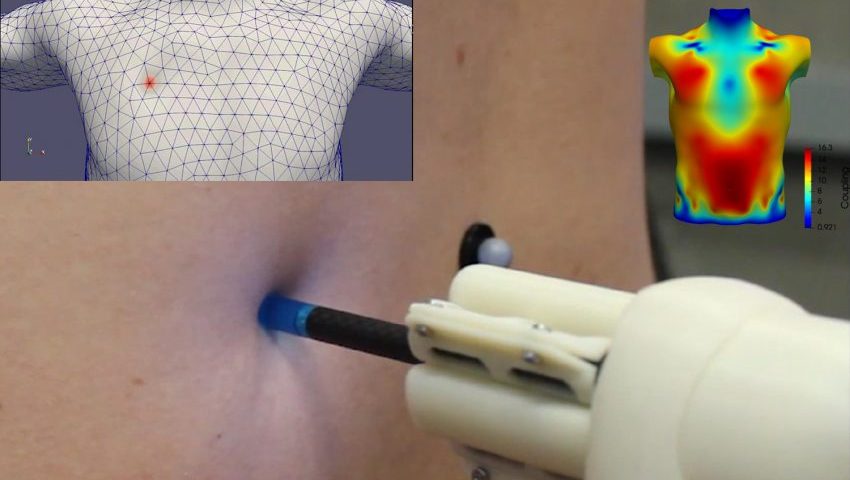

“The Human Touch: Measuring Contact with Real Human Soft Tissues,” presented in the SIGGRAPH 2018 Technical Papers program, claims to be able to do just that with a novel, hand-held skin probe. The University of British Columbia (UBC) and a spin-off company called Vital Mechanics worked together to develop measurement-based models of the human body, specifically what the human body feels like to the touch. While there are, of course, many measurements in medicine of specific parts of the body for diagnosing diseases like cancer, this is the first effort to make a distribution measurement of how the human body actually feels. See the video below for a quick explanation of the probe and process.

SIGGRAPH: Where did the idea for the project come from? What inspired the work?

Dinesh K. Pai (DP): The beginning of the process dates back at least 20 years, as we’ve been working on simulating soft bodies in my lab for quite some time. I think graphics, as a whole, has also been working on soft body simulation for at least 25 years, but it’s always bothered me that people have been selecting the material properties of the body at random just to get any effect. I’ve always been interested in seeing if we can measure the specifics.

Around 2001, we actually built a robotics system to measure material properties of things like toys and other objects you could simply place in the robot, which was part of the inspiration. The other part was that we are now focused on building this technology for humans. When we searched for data we looked everywhere, but there hasn’t been anyone who has actually conducted research on this kind of human measurement, so we said we would do it ourselves. We then wanted to create these digital human models to actually have predictive value, not just for visual effects or games, where just having a visually appealing model is sufficient.

We have a spin-off company from my lab called Vital Mechanics, which has been working to develop software that has this predictive value, and we realized that we really need to know what the body feels like: for example, how the stiffness of your shoulder is different from the stiffness of your chest or of your head. All of this varies dramatically throughout the body, and once we realized we had to measure this, we got started.

SIGGRAPH: How did the team for this project come together?

DP: A project this large and complex requires many different areas of expertise. Part of the team came from the spin-off company and they worked in collaboration with the university. We had great software people from Vital Mechanics, along with a few engineers who were more experienced with building the hardware, which was necessary because of our big challenge surrounding the probe. We had to build a new probe to make contact with the body, and measuring humans is no simple task. Unlike toys or other material objects, humans don’t sit still. They’re constantly moving around, and we also wanted to measure them as naturally as possible. This obstacle required expertise in trying to track how the body moves using motion capture, as well as how to design and 3D print this probe, which some of my students are experienced in.

Another major ingredient in designing a team that could take on such complexity was research. We carried out a research project that was focused on innovative and translational research, so we could take research from our lab and turn it into something useful. This came from a program that allowed us to fund such a large team.

Additionally, my lab has always been multi-disciplinary. We have a student in neuroscience, engineers, software people, and my background is in engineering and computer science. So, having worked on multi-disciplinary projects in the past made it relatively natural to put together such a multi-disciplinary piece.

SIGGRAPH: Walk us through the creation process. What technology came together to make this happen?

DP: One ingredient of the technology is just soft body simulation, which had been developed by Vital Mechanics. They developed this general model of a thick skin that could slide on top of the body, which we can use to simulate scenarios like how clothing will fit.

The second part is an actual probe to measure the tissue properties. It’s important to note that we are interested not just in how the tissue moves, but in how it feels under a light touch, for example, when you put on a tight shirt. This was a challenge because it isn’t possible to just use video-based measurements; you have to actually touch the body. You have to push on it and take measurements. For example, when you push just a millimeter into your shoulder, the forces rise quite a bit. To take this kind of contact measurement, we had to build a probe with force sensors and built-in cameras. This led us to build a probe that didn’t exist before, which my student Allister designed and developed, and then we 3D printed it. We made several prototypes before building the final one.

The third aspect was that we had to measure humans, and there’s a lot that goes into measuring humans, especially in large numbers. At this point, we had already measured 50 participants, but we had to get a population of people to come in because we needed different ages, since we didn’t want to just measure one type of person. We also had to bring them into the lab, make sure we were following all the ethics guidelines, and abide by a number of other standards. Due to the complexity, this type of human measurement is not as common in computer graphics as it is in other areas of science. We are still bringing real people in to measure, and we have continued adding new measurements to our system since the paper was published.

The fourth component is actually estimating the tissue properties from all this data, as data alone is not enough. One important thing we realized here is that when you work with awake, conscious humans, they move slightly when you touch them. We still wanted to make it as easy and natural as possible when taking these measurements. We didn’t want them to be fixed in place or anything of that nature. To account for this extra movement, we track the body, too. We have motion-capture markers on the body and on the handheld probe simultaneously, so we can let the participants who are being measured sit down and act natural. We also had to develop new tissue property estimation methods.
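
To make the idea of tracking both the body and the probe concrete, here is a minimal sketch in Python. It is not the team’s estimation method, which the interview does not describe. It assumes, purely for illustration, that one tracked body marker and the probe tip are reported in the same world coordinates, that the body only translates (no rotation), and that the force grows linearly with depth over a small push. The function names and all numbers are hypothetical.

```python
# Illustrative sketch only: names, data, and the linear model are assumptions,
# not the method used in the paper.

def indentation_depths(probe_tip, body_marker, inward_normal):
    """Depth of the probe tip into the tissue at each sample.

    Positions are measured relative to a tracked body marker, so that
    whole-body drift cancels out. Sample 0 is taken as first contact.
    """
    rest = [p - b for p, b in zip(probe_tip[0], body_marker[0])]
    depths = []
    for tip, marker in zip(probe_tip, body_marker):
        relative = [p - b for p, b in zip(tip, marker)]
        moved = [r - r0 for r, r0 in zip(relative, rest)]
        # Project the relative motion onto the inward surface normal.
        depths.append(sum(m * n for m, n in zip(moved, inward_normal)))
    return depths


def fit_stiffness(depths, forces):
    """Least-squares slope k for force = k * depth (line through the origin)."""
    numerator = sum(d * f for d, f in zip(depths, forces))
    denominator = sum(d * d for d in depths)
    return numerator / denominator


# Made-up samples (millimeters and newtons): the participant drifts 0.5 mm
# sideways per sample while the probe pushes 0.5 mm deeper per sample.
probe_tip = [(0.0, 0.0, 10.0), (0.5, 0.0, 9.5), (1.0, 0.0, 9.0)]
body_marker = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
forces = [0.0, 0.4, 0.8]

depths = indentation_depths(probe_tip, body_marker, (0.0, 0.0, -1.0))
k = fit_stiffness(depths, forces)
print(depths, k)
```

Subtracting the body marker’s position before measuring depth is what lets the participant sit naturally: the sideways drift in the sample data does not show up in the computed depths. Real tissue is not linear, so a single stiffness value like `k` is only a rough local summary.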

SIGGRAPH: How do you see your research being used? How might it be built upon in the future?

DP: We’re still getting more measurements. Additionally, we’re now measuring people’s body geometry and other skin properties at the same time. Our goal is to build a digital human model that could be used the same way computer-aided design is used in other areas, like for an airplane. Physical airplanes are not built until the people building them have simulated exactly how certain aspects will function. We want to do the same thing for objects that interact with the human body, starting with clothing. This way, when designers want to design clothing or see how it will fit, they can simulate this with our digital human models and predict how it will work before building any kind of physical prototype.

Clothing is just one example. The same idea applies to anything humans interact with. For example, this simulation could be used for a wristwatch or for furniture, like a chair a person would sit on. It makes sense to see how these objects work for real humans, instead of just building things that never fit, never work, and then end up wasted. My hope is that we can use our digital human models to build things that work for humans much better than they have in the past.

SIGGRAPH: What excites you about this work?

DP: Everything about this excites me! Specifically, we’re learning a lot about the human body, like how the body isn’t uniform. Some parts, such as the bones and the connective tissue, vary drastically in behavior from others. For example, sweating and oil on the body have a number of different effects on different areas.

Another exciting outcome is the practical application in the apparel industry. Clothing is designed and purchased without using too much of the 3D simulation technology that has been used in graphics for years, so I’m very excited to bring these techniques to the real world and make a difference. I’d love to have a broader impact and make these practices more efficient. If we can simulate these outcomes before we make the clothes, we can prevent a lot of waste in the industry.

Dinesh K. Pai is a Professor of Computer Science and Tier 1 Canada Research Chair at the University of British Columbia, where he directs the Sensorimotor Systems Laboratory. His research is multi-disciplinary, spanning computer graphics, scientific computing, computational mechanics, robotics, biomechanics, and the neural control of movement. He believes in building working hardware and software systems based on strong theoretical foundations. He founded Vital Mechanics, a startup that is building the first digital human models based on measurements of human skin, soft tissues, and clothing. Vital Mechanics’ mission is to make accurate predictions of how real human bodies behave when they interact with clothing, wearables, and the world around us.
