“Reflections” © 2018 Epic Games, Inc. All rights reserved.

Real-Time Live! is the highly anticipated showcase of real-time graphics, live and on stage at SIGGRAPH 2018! One of the projects that will be presented and deconstructed by its creators in Vancouver is “Ray-Traced, Collaborative Virtual Production in Unreal Engine,” a demo of “Reflections,” which is set in the Star Wars™ universe and was originally presented at GDC 2018. Creators Mohen Leo (director of content and platform strategy, ILMxLAB), Kim Libreri (CTO, Epic Games), and Gavin Moran (creative cinematic director, Epic Games) sat down with us to discuss ray tracing versus rasterization, the process behind “Reflections,” and the future of machine-learning tools. They also offer a sneak peek of their expanded demo for SIGGRAPH 2018.

From Left: Mohen Leo, Kim Libreri, and Gavin Moran

SIGGRAPH: “Reflections” utilizes ray tracing rendered in real time, which is very exciting. What has prevented ray tracing from entering real-time rendering until now? What changes (to processes/hardware/software) are making it possible now?

Kim Libreri (KL): Limited use of ray tracing actually has occurred in real time before, most often as screen-space ray tracing, but also in a few very heavily customized renderers. Two main things have changed recently. First, with Microsoft’s DirectX ray-tracing capabilities (DXR) there’s a formal specification for parts of ray tracing that can be standardized, and eventually, hardware-accelerated. Second, there have been massive improvements in performance in recent years, and now it is possible to trace hundreds of millions of rays per second with last-gen hardware. In addition, state-of-the-art denoisers have reached a point where they can produce high-quality images with very few samples per pixel, which makes them an essential tool for real-time ray tracing.
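To illustrate why a low sample count makes denoising so important, here is a minimal Python sketch of Monte Carlo noise in a single pixel. It is not taken from the “Reflections” renderer. The pixel model (each traced ray returns "lit" with probability 0.3), the function names, and the trial count are all assumptions made for illustration. The only general fact it relies on is that the noise of a Monte Carlo average shrinks roughly with the square root of the number of samples.

```python
import random
import statistics


def estimate_pixel(samples_per_pixel, rng):
    """Average a handful of random ray results for one hypothetical pixel."""
    # Assumption: each ray returns 1.0 (lit) with probability 0.3, otherwise 0.0.
    total = sum(1.0 if rng.random() < 0.3 else 0.0 for _ in range(samples_per_pixel))
    return total / samples_per_pixel


def noise(samples_per_pixel, trials=2000, seed=0):
    """Standard deviation of the pixel estimate across many independent trials."""
    rng = random.Random(seed)
    estimates = [estimate_pixel(samples_per_pixel, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)


for spp in (1, 4, 16):
    print(spp, "samples per pixel -> noise", round(noise(spp), 3))
```

In this toy model, going from 1 to 16 samples per pixel cuts the noise only by about a factor of four, which suggests why a real-time budget of a few rays per pixel needs a denoiser to clean up the remaining grain.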

SIGGRAPH: Tell us a bit about how this project started and what your roles were in its development.

KL: In 2017, NVIDIA approached Epic about the revolutionary ray-tracing technology they were working on, and we discussed collaborating on a demo that would show off this capability. We thought that Lucasfilm and their immersive entertainment division, ILMxLAB, would be the perfect partner because Star Wars is a great IP for demonstrating such forward-looking technology, especially given the possibilities with real-time ray tracing. Epic, NVIDIA, and ILMxLAB got together to produce the original “Reflections” demo for GDC 2018.

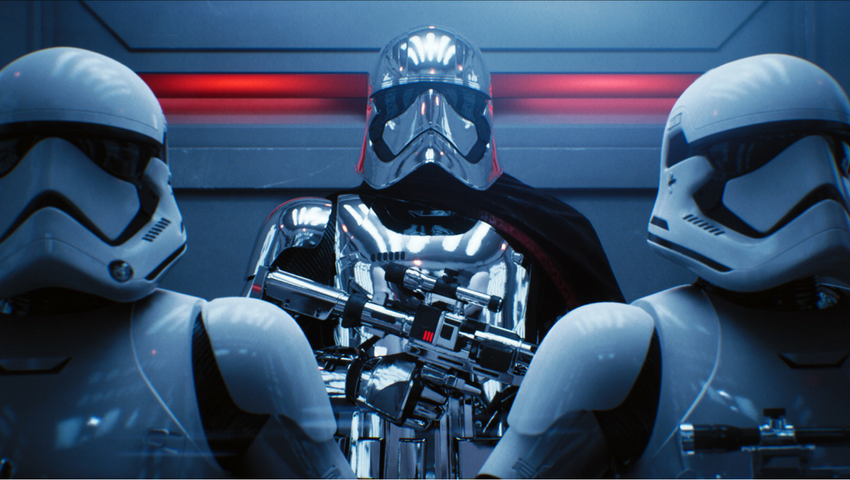

Mohen Leo (ML): I thought Captain Phasma would be the ultimate test of shiny reflectivity. We worked with Epic to craft a brief story that would feature Phasma and two stormtroopers, have some humor, yet still feel true to the Star Wars universe. That’s why it was really important to all of us that the rendered characters look just like they do in the movies.

Gavin Moran (GM): I was asked to work on a cinematic for the ray-traced demo that featured a fun interaction between the stormtroopers and Captain Phasma. I helped to write the cinematic, then directed it and acted out most of the parts. I was also the voice of one of the stormtroopers, which was a real bucket-list moment for me. That was the original demo, which we expanded upon for this year’s SIGGRAPH Real-Time Live! program.

KL: For SIGGRAPH, we always like to do something special. We thought it would be fun to give people an idea of how we made the initial demo, especially given how virtual production is a big part of how we make all of our cinematic pieces at Epic. Through the immersive capabilities of virtual reality, Gavin was able to act out all of the roles against himself. We thought this demonstration would be a great preview of many new features that we’ve been building for Epic and for all Unreal Engine developers — namely, live multi-user collaboration using the Unreal Editor.

SIGGRAPH: Tell us a bit more about the SIGGRAPH demo and what will be different.

KL: More than just a pure ray-tracing demo, this is actually a full virtual production demo. It’s a glimpse into what will be the future of filmmaking, where final pixels can be achieved on set, and that set can be virtual. This means that a team of creatives can work together on all phases of virtual production within the sandbox of the engine. Think about it: with multi-user collaboration and editing, you can have set decorators, greens people, camera operators, actors, DPs, and directors — all working together just like they would on a film set. Whether in VR or on a workstation, everyone is working together in the same environment, all in real time.

Not only will people be able to collaborate as they do on a real film set, but we’ll also be able to realistically achieve real-time, photorealistic pixels, effectively removing the “post” from “post-production.” And the real-time ray tracing is what gives you these final-quality pixels.

GM: This demo shows one way to approach multi-user virtual filmmaking. The workflow we used when creating “Reflections” is as follows:

- Record a performance using Unreal Engine 4’s Sequencer cinematic tool.

- Use Sequencer to play back the recording to an actor wearing a VR headset and body-tracking sensors.

- Have the actor perform against the action as a character, responding to the VR scene, while Sequencer captures everything in real time.

- Edit the new shot into the main sequence.

- Repeat the process within the virtual set until all performances achieve the desired creative vision. (It’s worth mentioning that you can also adjust a scene’s lighting, set dressing, material properties, and so forth between takes!)

ML: For me, one of the most exciting aspects of being on a real film set is the sense of collaboration. A bunch of really talented experts from different fields and professions get together to create something fantastic. Computer graphics content, on the other hand, has traditionally been done in more of an assembly-line approach, with each department doing its work on its own and then handing it off to the next department. With these new multi-user techniques, you can imagine how, in the future, CG artists from different departments can collaborate more closely. Lighting, camera layout, animation, and so on, will be able to work live within the same scene, seeing and discussing each other’s adjustments in real time, just like on a real film set.

SIGGRAPH: Traditionally, reflections and shadows have been rendered through rasterization. How does ray tracing differ from rasterization?

KL: Traditionally, the techniques that we use in real time, such as shadow maps and screen-space reflections, can produce visible artifacts that one would not see in the real world or in cinematic-quality rendering. By having a ray tracer at the core of our rendering system, we are able to imitate reality with much more fidelity. Reflections can be accurate and represent the whole scene. We can have cinematic lighting through textured area lights and their corresponding ray-traced shadows. And these pixels will be final quality without the need for a long and expensive post-production process.
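As a rough illustration of how a ray-traced reflection can "represent the whole scene," the Python sketch below bounces one view ray off a mirror and finds an object that sits behind the camera. A screen-space technique only has data for what is already visible on screen, so it could not find this object. This is a toy example, not Unreal Engine or DXR code. The scene layout, function names, and values are assumptions made for illustration. The mirror formula r = d - 2(d·n)n and the ray-sphere test are standard.

```python
import math


def reflect(d, n):
    """Mirror direction r = d - 2 (d . n) n, for a unit-length normal n."""
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))


def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0.0 else None


# Hypothetical scene: the camera sits at the origin looking down -z,
# a mirror faces it at z = -5, and a sphere sits behind the camera at z = +3.
view_dir = (0.0, 0.0, -1.0)
mirror_point = (0.0, 0.0, -5.0)
mirror_normal = (0.0, 0.0, 1.0)

bounce = reflect(view_dir, mirror_normal)
distance = hit_sphere(mirror_point, bounce, (0.0, 0.0, 3.0), 1.0)
print(bounce, distance)  # (0.0, 0.0, 1.0) 7.0
```

The reflected ray travels back toward the camera and hits the off-screen sphere after 7 units, so the mirror can show it even though the sphere never appears in the camera's own view.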

SIGGRAPH: Players would love to see this kind of photorealism in their games. When might we see ray tracing in games?

KL: At the end of this year, we are planning to have official Unreal Engine 4 support for DXR checked in and available to the GitHub community. As the first official feature, this will include ray-traced area light shadows. You could see hybrid features such as ray-traced area lights on high-end hardware next year, depending on developer adoption. More features and algorithms will come online as people become more familiar with the API and performance characteristics.

SIGGRAPH: Thinking ahead, could machine-learning tools potentially be used to optimize the creation of even more accurate real-time ray tracing?

KL: Machine learning will play a vital role in improving existing denoising algorithms; there are already implementations available that produce impressive results. In the future, machine learning could be used for many other purposes, such as better understanding where light comes from and sampling lights and materials more efficiently. Beyond ray tracing, machine learning could revolutionize many other aspects of filmmaking. Pulling a green screen for a composite live-action, CG shot is extremely detailed and time-consuming work, and I am confident that machine learning will be able to revolutionize that process. Similarly, animation and motion-capture processing and cleanup are also extremely lengthy processes, and I believe that machine learning will absolutely yield huge gains in this field.