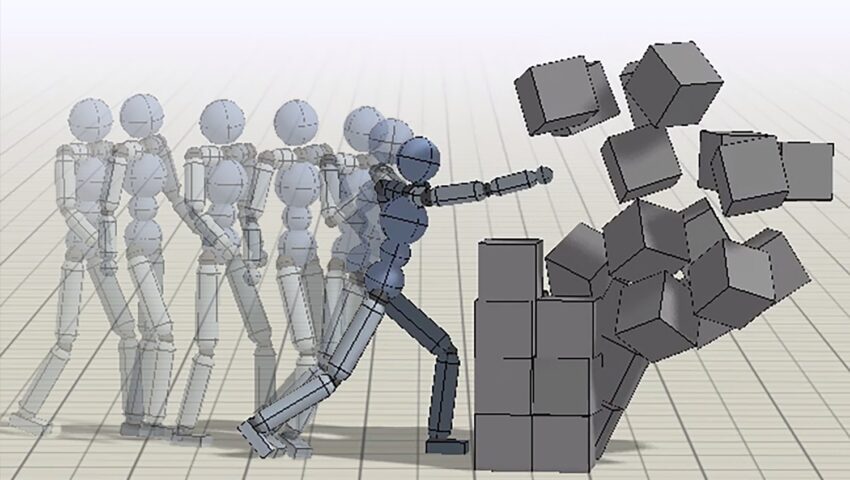

Adversarial motion priors allow simulated characters to perform challenging tasks by imitating diverse motion datasets.

At SIGGRAPH 2021, participants will explore the latest research in groundbreaking topics, such as generative adversarial networks, neural and real-time rendering, simulation, meshing, character control, and more. To discover more about what’s to come at the virtual conference, we sat down with Jason Peng and Edward Ma, who led research on “AMP: Adversarial Motion Priors for Stylized Physics-based Character Control,” to learn more about their project, what problems it solves, why they are looking forward to SIGGRAPH 2021, and what advice they have for those planning to submit to Technical Papers in the future.

SIGGRAPH: Share some background about “AMP: Adversarial Motion Priors for Stylized Physics-based Character Control.” What inspired this research?

Jason Peng (JP): Developing procedural methods that are able to automatically synthesize natural behaviors for simulated characters has been one of the fundamental challenges in computer animation. In recent years, the most popular approaches for producing realistic motions have used some form of motion tracking. But this approach can be fairly restrictive, since a character is limited to tracking one motion at a time, and it has to closely follow the pose sequence from a particular reference motion. Therefore, it can be difficult to apply motion tracking techniques to large and diverse motion datasets. Our work is inspired by the success of generative adversarial networks (GANs) for tasks like image generation. We also drew inspiration from some of Professor Kanazawa’s previous work, called HMR, which proposed using adversarial pose priors for 3D human mesh recovery. Our work adapts this idea of adversarial priors to motion-control problems. One of the advantages of these adversarial techniques is that they allow characters to imitate large unstructured motion datasets without having to explicitly track a particular reference motion. This provides the characters with much more flexibility to transition between and combine different behaviors in order to perform more complex tasks.

Edward Ma (EM): It is always difficult to develop complicated and natural behaviors for robots and virtual characters, and one promising solution is to imitate the natural behaviors of creatures in the world. Imitation learning work usually shows exciting results but also requires dedicated curricula and complex systems. We wanted to go in this direction, but we also wanted to see how simple such methods could be and how to apply them to more scenarios. To achieve this, we explored an adversarial way to leverage motion priors for computer animation and got interesting results.

SIGGRAPH: How did you develop your adversarial imitation learning approach? How many people were involved? How long did it take?

JP: The project took about a year, and Edward and I led it with support from our advisors Pieter Abbeel, Sergey Levine, and Angjoo Kanazawa. Our work started out by adapting a previous adversarial imitation learning algorithm, called Generative Adversarial Imitation Learning (GAIL), to our motion imitation tasks. Prior works have attempted to apply similar techniques to motion imitation, but the results have generally fallen short of what has been achieved with more standard motion-tracking methods. To address these shortcomings, we incorporated a number of modifications to the algorithm that ended up leading to substantially higher quality results. We were pleasantly surprised that the relatively simple modifications led to pretty large improvements, to the point where our method is now competitive with state-of-the-art, tracking-based techniques in terms of motion quality.

EM: At first, we built a vanilla motion prior model in a particle environment. We tried to make the particle learn specific ways to approach the goal with priors we designed, and it worked. Then, we used a bipedal character to tune the algorithm. Finally, we applied the motion prior to the humanoid and other complex characters.
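
To make the idea of an adversarial imitation reward more concrete, here is a minimal sketch of how a GAIL-style discriminator score might be turned into a "style" reward and mixed with a task reward. It is not the authors' implementation: the function names, the `-log(1 - D)` reward form (one common GAIL convention), and the equal weights are all assumptions chosen for illustration.

```python
import math


def gail_style_reward(d_prob: float, eps: float = 1e-8) -> float:
    """Hypothetical style reward from a discriminator output.

    d_prob is assumed to be the discriminator's probability that a
    state transition came from the motion dataset rather than from
    the simulated character. The reward grows as the discriminator
    becomes more convinced the motion looks like the dataset.
    """
    # Clamp to avoid log(0) when the discriminator outputs exactly 1.
    return -math.log(max(1.0 - d_prob, eps))


def combined_reward(task_reward: float, style_reward: float,
                    w_task: float = 0.5, w_style: float = 0.5) -> float:
    """Weighted sum of a task objective and the style reward.

    The weights are illustrative assumptions, not values from the paper.
    """
    return w_task * task_reward + w_style * style_reward
```

In a sketch like this, the character never tracks a specific reference clip. It is rewarded only for producing transitions the discriminator cannot tell apart from the dataset, while the task term steers it toward the goal.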

SIGGRAPH: What challenges did you face while developing your research?

JP: Some of the main challenges that we faced were long training times and data collection. Training these models requires a fair bit of computing, and a typical AMP model took about a week to train. This significantly slowed our iteration cycles. We are currently exploring ways to speed up training these models.

Collecting motion datasets also was a challenge for us. Publicly available motion datasets are still fairly limited, particularly for more athletic behaviors like martial arts. Processing and cleaning the data also is labor intensive, so we ended up devoting a large chunk of our time just preparing the datasets. Once that was done, training the models was relatively easy. We could just feed the model whatever data we had, and it was able to learn some pretty life-like and intricate behaviors.

EM: [Agreed.] The most difficult challenge in this work was training a GAN. It is well known that GANs suffer from mode collapse, and it’s difficult to make them work the way you want. Thus, we had to try different ways to make the training stable.

SIGGRAPH: How do you envision this research being used in the future? What problems does it solve?

JP: Motion-tracking methods have been the dominant class of techniques for producing high-quality motions in physics-based character animation. We now have a viable alternative that can be much more flexible and is potentially more scalable to large motion datasets. This is a fairly large departure from how motion imitation has been done in the past. My hope is that these AI-driven animation systems will eventually lead to “virtual actors.” Like real-life actors, these agents can take high-level directions from users and then act out the appropriate behaviors. Rather than requiring animators to painstakingly craft animations for every behavior that we want a character to perform, we can instead use these AI systems to automatically synthesize the desired motions.

EM: Machine-learning-based methods are expected to help reduce the human labor required in computer animation in the future. Our method could provide an alternative way to easily generate more natural motions instead of capturing the behaviors of actors in the studio.

SIGGRAPH: What excites you most about your research?

JP: One of the things that I find most gratifying about this line of work is seeing all of the behaviors that these AI systems can learn. There have been many instances where our characters learn things that we didn’t expect and discover interesting ways of solving difficult tasks.

EM: I’m most excited by the utilization of machine learning in computer animation and the smooth, life-like motions it presents.

SIGGRAPH: What are you most looking forward to about SIGGRAPH 2021?

JP: Graphics is such a diverse and dynamic field that folks are always coming up with creative and unexpected problems to solve. SIGGRAPH is a great venue for getting a glimpse of all the interesting problems that people are tackling, and I find it to be a good place to draw inspiration from very diverse areas.

EM: I’m looking forward to hearing about other exciting works in computer animation and related areas.

SIGGRAPH: What advice do you have for someone who wants to submit to Technical Papers for a future SIGGRAPH conference?

JP: One lesson that I have learned throughout my work is that simple methods, when implemented properly, can work surprisingly well. AMP is one such example. A number of prior works have tried to apply similar adversarial techniques for motion control, but the quality of the results has generally not been very good. As a result, these past systems incorporate more and more complex modifications to the underlying algorithm in order to try and patch the shortcomings. In this work, we started from the most basic version of the algorithm and made simple and succinct modifications in order to adapt it to our domain. This then led to a simple and elegant method that can produce surprisingly high-quality results. Hopefully, this simplicity will make the work easier for others in the community to adopt and build on.

EM: Find a good topic and put all your efforts into it. Keep communicating, and get feedback from seniors.

The countdown to SIGGRAPH 2021 is on! Register now for your opportunity to explore Technical Papers research in our virtual venue, 9–13 August.

Jason Peng is a Ph.D. candidate at UC Berkeley, advised by Professor Pieter Abbeel and Professor Sergey Levine. His work focuses on reinforcement learning and motion control, with applications in computer animation and robotics. His work aims to develop systems that allow both simulated and real-world agents to reproduce the agile and athletic behaviors of humans and animals.

Edward (Ze) Ma is a senior student at Shanghai Jiao Tong University, advised by Professor Chao Ma. He will pursue a master’s degree in computer science at Columbia University. He was a research intern at UC Berkeley, advised by Professor Angjoo Kanazawa, Professor Pieter Abbeel, and Professor Sergey Levine.

Angjoo Kanazawa is an assistant professor in the Department of Electrical Engineering and Computer Science at the University of California at Berkeley and a part-time research scientist at Google Research. Previously, she was a BAIR postdoc at UC Berkeley advised by Jitendra Malik, Alyosha Efros, and Trevor Darrell. She completed her Ph.D. in computer science at the University of Maryland, College Park, with her advisor David Jacobs. She also spent time at the Max Planck Institute for Intelligent Systems with Michael Black. Her research is at the intersection of computer vision, computer graphics, and machine learning, focusing on visual perception of the dynamic 3D world behind everyday photographs and videos, such as 3D perception of people and animals along with their environment. She has been named a Rising Star in EECS and is a recipient of the Anita Borg Memorial Scholarship, the Best Paper Award at Eurographics 2016, and the Google Research Scholar Award 2021. She also serves on the advisory board of Wonder Dynamics, whose goal is to utilize AI technologies to make visual effects more accessible for indie filmmakers.
