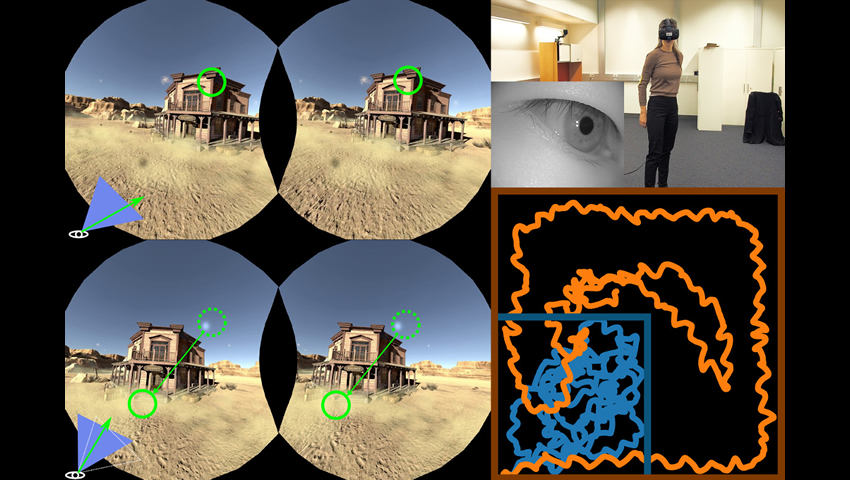

Image: “Infinite Walking in VR,” courtesy of Qi Sun

In the ever-evolving landscape of virtual reality (VR) technology, a number of key hurdles remain. But a team of computer scientists has tackled one of the major challenges in VR, one whose solution could greatly improve user experience: enabling an immersive virtual experience while the user is physically limited to their actual, real-world space. The team that authored the research, titled “Towards Virtual Reality Infinite Walking: Dynamic Saccadic Redirection,” will present its work at SIGGRAPH 2018, held 12-16 August in Vancouver, British Columbia.

Computer scientists from Stony Brook University, NVIDIA and Adobe have collaborated on a computational framework that gives VR users the perception of infinite walking in the virtual world while they are confined to a small physical space. The framework enables this free-walking experience without causing the dizziness, shakiness, or discomfort typically tied to physical movement in VR. Users also avoid bumping into objects in the physical space while in the VR world.

To do this, the researchers focused on manipulating a user’s walking direction by working with a basic natural phenomenon of the human eye called the saccade. Saccades are quick eye movements that occur when we look at a different point in our field of vision, like when scanning a room or viewing a painting. They occur without our conscious control, generally several times per second. During a saccade, our brains largely ignore visual input, a phenomenon known as “saccadic suppression,” leaving us oblivious to both our temporary blindness and the motion our eyes performed.

“In VR, we can display vast universes; however, the physical spaces in our homes and offices are much smaller,” says lead author of the work, Qi Sun, a PhD student at Stony Brook University and former research intern at Adobe Research and NVIDIA. “It’s the nature of the human eye to scan a scene by moving rapidly between points of fixation. We realized that if we rotate the virtual camera just slightly during saccades, we can redirect a user’s walking direction to simulate a larger walking space.”

Using a head- and eye-tracking VR headset, the researchers’ new method detects saccades and redirects users during the resulting temporary blindness. When more redirection is required, the method attempts to encourage saccades using a tailored version of subtle gaze direction, a technique that prompts eye movements by creating points of contrast in the visual periphery.
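The core idea, rotating the virtual camera only while a saccade is in progress, can be illustrated with a minimal sketch. This is not the researchers’ implementation: the function names, the 180 degrees-per-second velocity threshold, and the per-frame rotation cap are all assumptions chosen for illustration, and gaze directions are assumed to arrive as 3D vectors from an eye tracker.

```python
import math

# Assumed, illustrative values (not taken from the research).
SACCADE_VELOCITY_DEG_PER_S = 180.0  # gaze speed above which we treat motion as a saccade
MAX_YAW_PER_FRAME_DEG = 0.14        # largest camera rotation applied in a single frame


def gaze_angle_deg(a, b):
    """Angle in degrees between two 3D gaze direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def is_saccade(prev_gaze, curr_gaze, dt):
    """True if the gaze moved faster than the assumed saccade threshold."""
    return gaze_angle_deg(prev_gaze, curr_gaze) / dt > SACCADE_VELOCITY_DEG_PER_S


def redirect_yaw(camera_yaw_deg, desired_change_deg, prev_gaze, curr_gaze, dt):
    """Nudge the camera yaw toward the desired change, but only during a saccade."""
    if not is_saccade(prev_gaze, curr_gaze, dt):
        return camera_yaw_deg  # user can see clearly; leave the camera alone
    step = max(-MAX_YAW_PER_FRAME_DEG, min(MAX_YAW_PER_FRAME_DEG, desired_change_deg))
    return camera_yaw_deg + step
```

Called once per rendered frame with the two most recent gaze samples, a routine like this would accumulate many small rotations over successive saccades, which is how a slight per-frame change could add up to a meaningful change in walking direction.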

To date, existing methods addressing infinite walking in VR have limited redirection capabilities or cause undesirable scene distortions; they have also been unable to avoid obstacles in the physical world, like desks and chairs. The team’s new method dynamically redirects the user away from these objects. The method runs fast, so it is able to avoid moving objects as well, such as other people in the same room.
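To make the idea of steering away from obstacles concrete, here is a deliberately simple heuristic, swapped in for illustration only. It is not the team’s path planner, whose details this article does not describe. It assumes a 2D floor plan with counterclockwise-positive headings, and the function name and `safety_radius` parameter are hypothetical.

```python
import math


def steering_sign(position, heading_deg, obstacles, safety_radius=1.5):
    """Pick a turn direction that steers away from the nearest obstacle ahead.

    Returns +1 (turn left / counterclockwise), -1 (turn right / clockwise),
    or 0 when no obstacle lies ahead within safety_radius (same units as position).
    """
    px, py = position
    hx = math.cos(math.radians(heading_deg))
    hy = math.sin(math.radians(heading_deg))

    nearest = None
    nearest_dist = safety_radius
    for ox, oy in obstacles:
        dx, dy = ox - px, oy - py
        dist = math.hypot(dx, dy)
        is_ahead = dx * hx + dy * hy > 0  # positive dot product: in front of the user
        if is_ahead and dist < nearest_dist:
            nearest, nearest_dist = (dx, dy), dist

    if nearest is None:
        return 0
    # 2D cross product: positive means the obstacle is to the user's left.
    cross = hx * nearest[1] - hy * nearest[0]
    return -1 if cross > 0 else 1
```

Because obstacle positions are passed in on every call, a moving obstacle such as another person is handled the same way as a desk: the sign is simply recomputed from the latest positions each frame.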

The researchers ran user studies and simulations to validate their new computational system, including having participants perform game-like search and retrieval tasks. Overall, virtual camera rotation was unnoticeable to users during episodes of saccadic suppression; they could not tell that they were being automatically redirected via camera manipulation. Additionally, in tests of the team’s real-time dynamic path planning, users were able to walk without running into walls, furniture, or moving objects like fellow VR users.

“Currently in VR, it is still difficult to deliver a completely natural walking experience to VR users,” says Sun. “That is the primary motivation behind our work – to eliminate this constraint and enable fully immersive experiences in large virtual worlds.”

Though mostly applicable to VR gaming, the new system could potentially be applied to other industries, including architectural design, education, and film production.

The SIGGRAPH Technical Papers program is the premier international forum for disseminating new scholarly work in computer graphics and interactive techniques.